Automate recreation of Synapse dedicated SQL pool environment using Azure DevOps

Published Thursday, September 22, 2022

Author(s): Sarath Sasidharan is a Program Manager in Azure Synapse Customer Success Engineering (CSE) team.

Introduction

There are many scenarios where you want to create a new Synapse dedicated SQL pool environment based on an existing Synapse dedicated SQL pool environment. This may be required when you need to create a development or test environment based on your production environment by copying complete schemas and without copying data.

Performing this set of operations in an environment with tight security requires elevated permissions. You want them to be controlled and automated to make sure that:

- It's easy to use

- Control is in place

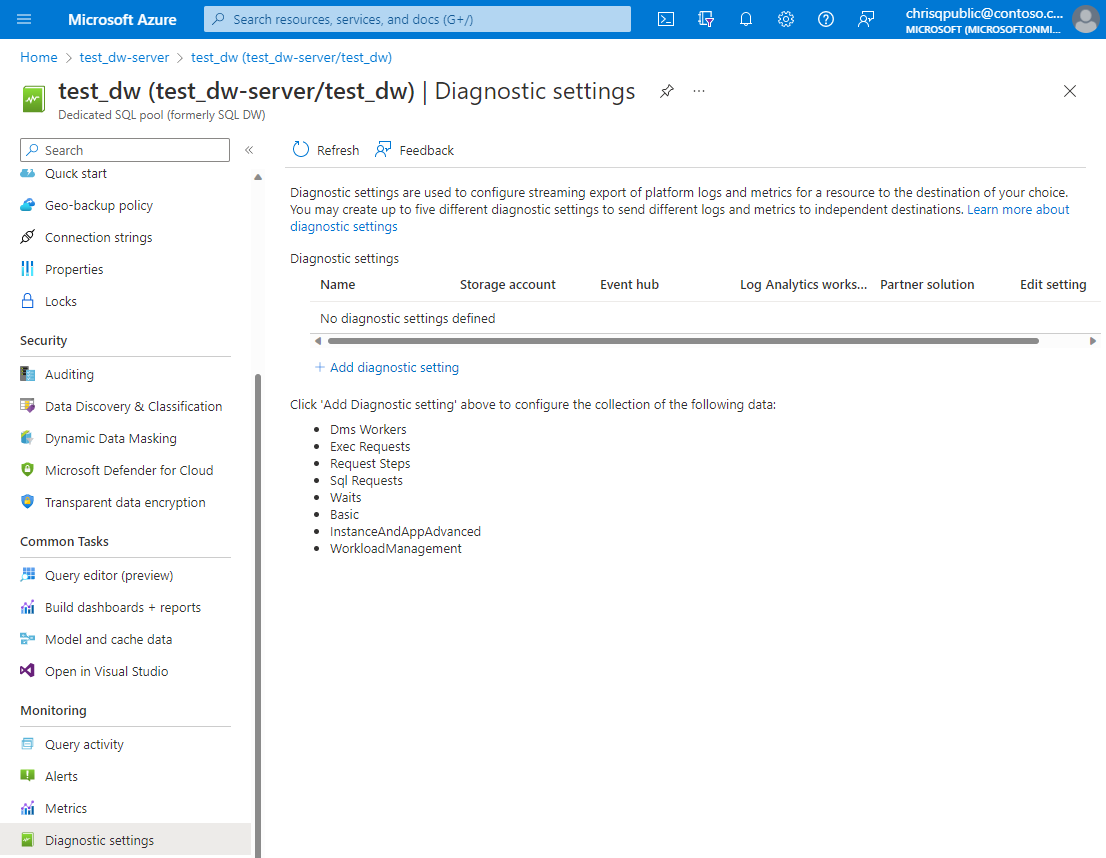

- Monitoring is in check

- Secure usage

In this blog, we are going to use the SQL Package activity as a task in Azure DevOps to help us achieve this goal.

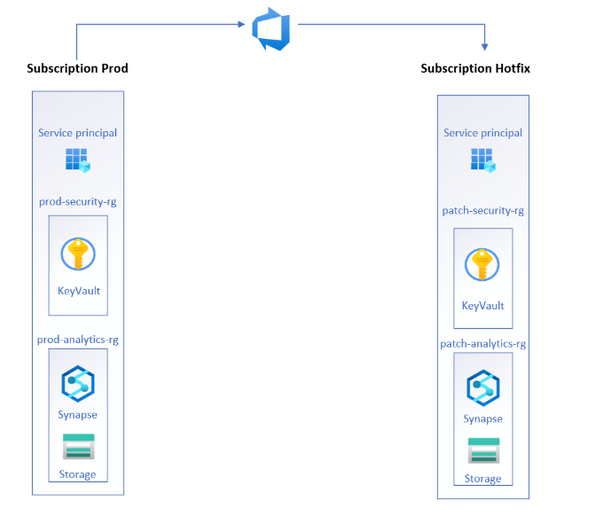

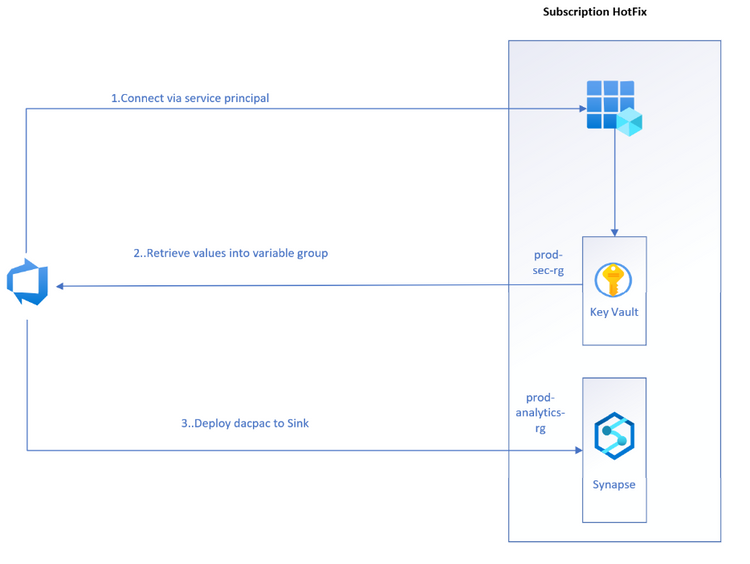

High-Level Architecture

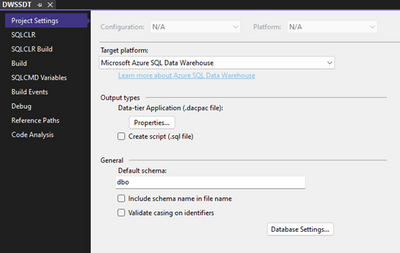

This scenario imitates a dedicated hotfix subscription, which is used to get the current environment to recreate / test issues happening in the live environment. Azure DevOps is used to automatically extract the deployment artifact dacpac.

In this setup, we use the link between azure devops and azure key vault to store secrets/connection strings that secure the pipeline.

Two separate resource groups have been defined, the security resource group which contains the key vault, and the analytics-rg which contains the synapse workspace and the storage account.

The pipeline to create the package and deploy it is built within Azure DevOps.

Workflow Explained

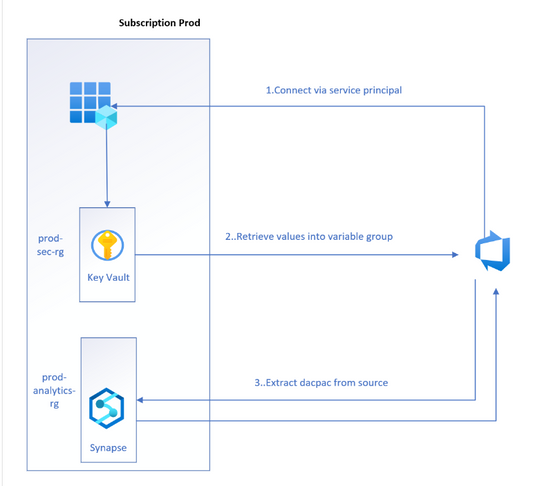

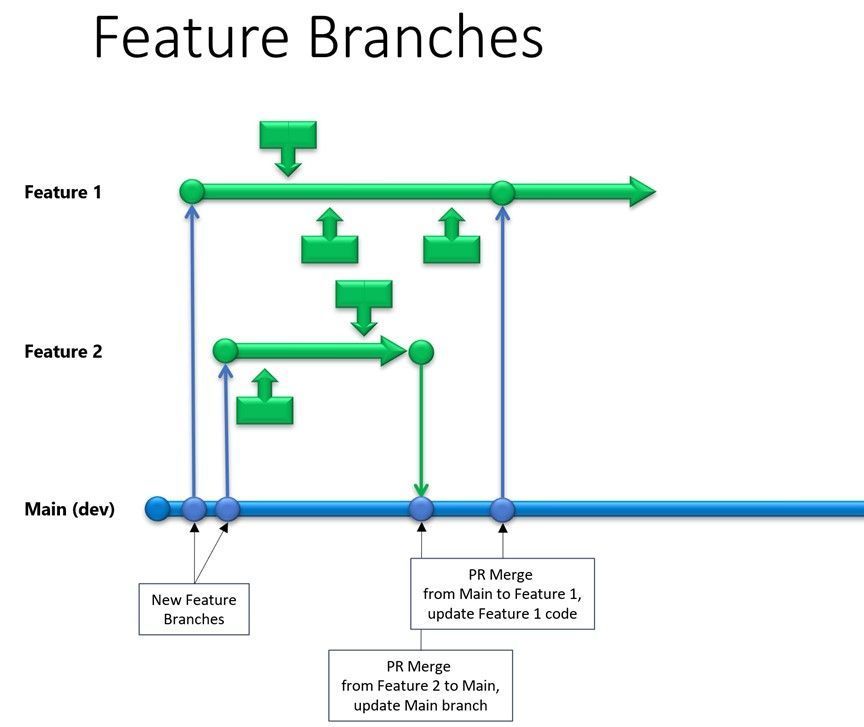

The trigger for this workflow starts with an Azure DevOps Pipeline. In this scenario, two service connections need to be created which have rights to extract the warehouse artifacts from Synapse Dedicated SQL pool in the source subscription and deploy the artifact in a sink subscription. A service connection is a connection object which is used by Azure DevOps to connect to azure. Under the hood, the service connections are referring to service principals which have access to the underlying subscription for reading and writing to the target dedicated SQL pool.

Extract Dacpac from the source Synapse dedicated SQL pool

This represents the flow of how the Azure DevOps pipeline extracts the dacpac from the source dedicated SQL pool.

This section contains the YAML definition for the SQL Package task to extract the dacpac. These variables are picked up from the Azure KeyVault which we will discuss below.

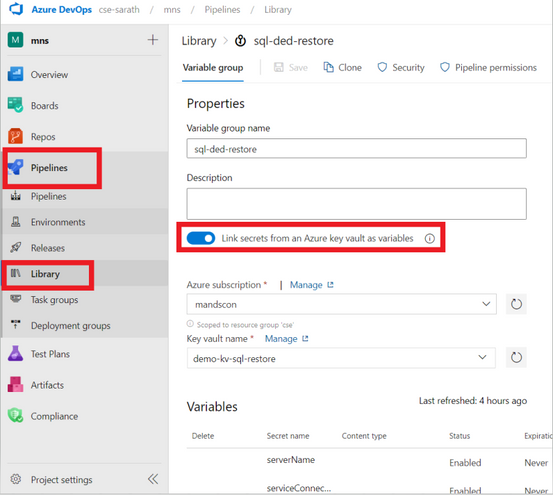

Once the pipeline within Azure DevOps is triggered. The secrets (keys/connection details etc.) of the source dedicated SQL pool are retrieved. This is stored within variable groups of Azure DevOps. Variable Groups are used to store values and secrets which need to be passed into the pipelines. Variable groups can be linked to the Azure KeyVault.

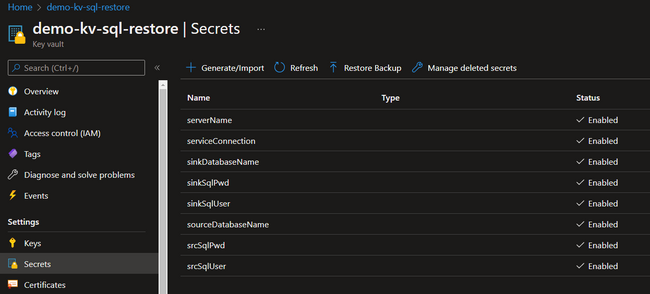

Sensitive identity and connection details are locked inside an Azure KeyVault which is an HSM Solution on azure. The secrets have to be stored inside an Azure KeyVault as shown below.

Variables defined inside the variable group inside the pipeline can be referred to using the $(<variable_name>) syntax as seen in the code snippet above. Below, you can see how you can add a new variable group linking it to an existing Azure Key Vault where your secrets are stored. This makes it available for use within Azure DevOps pipelines.

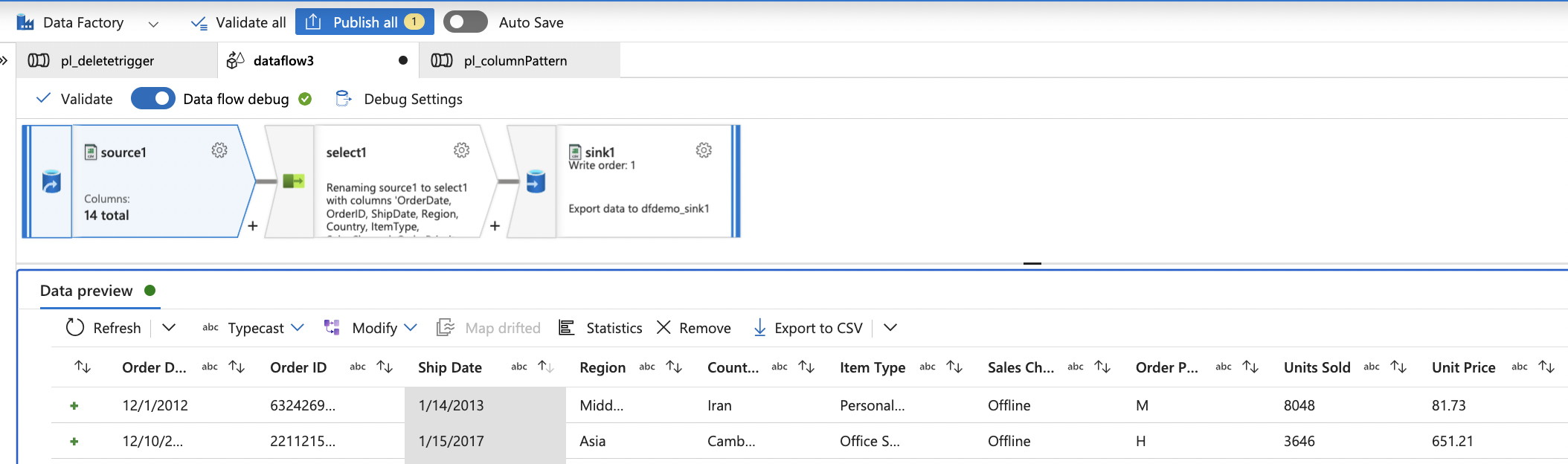

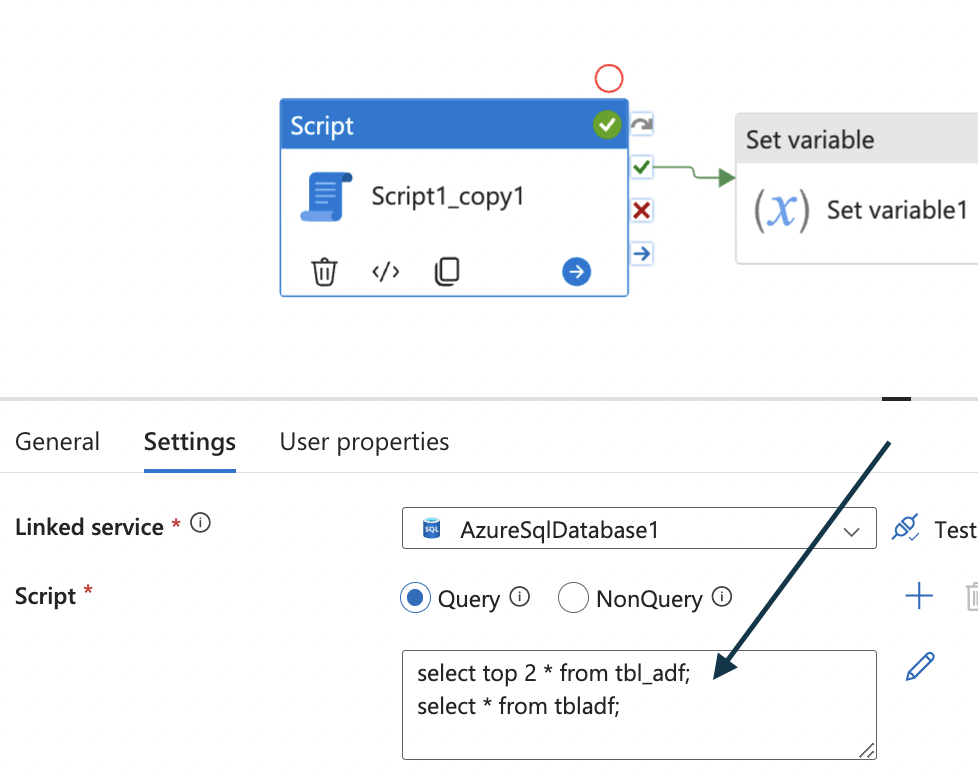

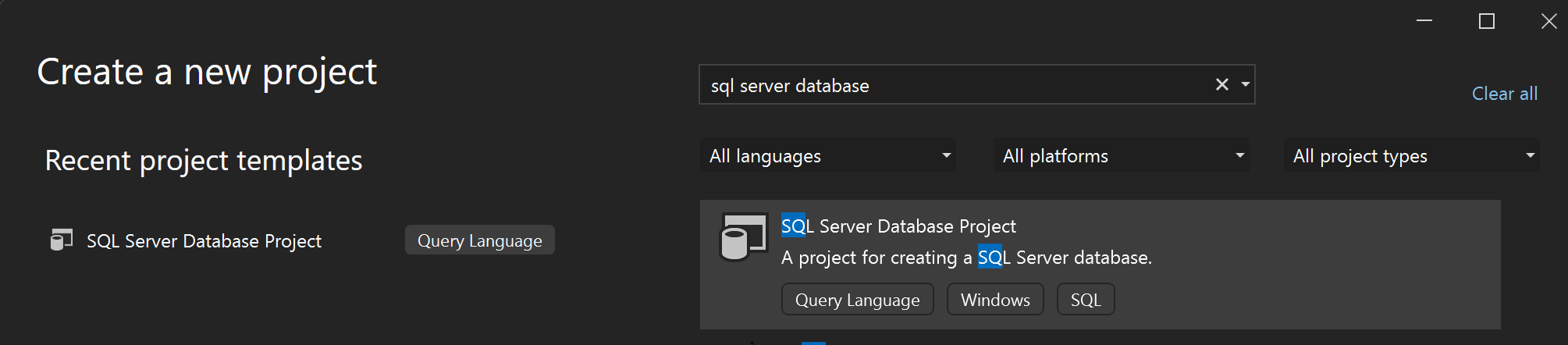

After the details have been extracted from the pipeline, the first activity in the pipeline is a task. A task is an atomic block in a pipeline which is a pre-packaged script that performs the activity which needs to be executed. In our scenario, this is to extract the environment as an artifact. We use the pre-built task named SQL Package activity to achieve this.

This task connects to the Synapse dedicated SQL pools and starts extracting the dacpac , which contains all the information needed to recreate this environment on a different pool. All these tasks are run on VMs which are called Azure Pipeline Agents /build agents. The resulting dacpac extracted from the source dedicated SQL pool is written into the local storage of the build agent. If you are using separate pipelines to extract and deploy the artifact, then you need to store this artifact in an azure artifact. This artifact can then later be retrieved in the second pipeline, to deploy the artifact. In this scenario we have just one single pipeline to extract and deploy, hence we will refer to the local drive of the build agent.

Deploy Dacpac to target Synapse dedicated SQL pool

This flow represents how the Azure DevOps pipeline deploys the extracted dacpac from the source to the target dedicated SQL pool.

The SQL Package action for deployment is quite similar to the previous task, except for the DeploymentAction. By default, it is deployed, so the dacpac provided is deployed to the target environment specified. Please make sure that the Dacpac file location points to the output location of the first task.

The extracted artifact from the source dedicated SQL pool (previous step) is picked and deployed in the target dedicated SQL pool. The second Service connection is used to connect to the second subscription aka the target subscription. The credentials for the sink dedicated SQL pool are picked up from the key vault, via the variable groups (as discussed in the previous step).

A connection is established to the sink dedicated SQL pool and the extracted dacpac is deployed. After a successful deployment, all objects in the source pool will be visible in the sink pool.

The entire code snippet discussed so far can be saved as one single YAML file, as below, that represents the Azure DevOps deployment pipeline to extract the dacpac from the source and deploy it to a target pool.

Summary

This blog post talks about creating a dedicated SQL pool environment based on an existing dedicated SQL pool environment using Azure DevOps automation. This may be required when you need to create lower level environments like development or test environment based on your production environment by copying complete schemas and without copying production data.

Our team publishes blog(s) each week and you can find all these blogs here: https://aka.ms/synapsecseblog

For deeper level understanding of Synapse implementation best practices, please refer our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design

Continue to website...