Metadata-Based Ingestion in Synapse with Delta Lake

Overview

The crucial first step in any ETL (extract, transform, load) process or data engineering program is ingestion, which involves dealing with multiple data sources and entities or datasets. Managing ingestion flow for multiple entities is therefore essential. This article outlines a Metadata-based approach for ingestion that allows for convenient modification of datasets whenever necessary. It also shows how a Delta Lake can be accessed by different forms of compute, such as Spark pools and SQL serverless, in Synapse and how these computes can be utilized in a single Synapse pipeline.

Scenarios:

Metadata-based ingestion is a frequent practice used by various teams. In the past, ASQL was used just for storing Metadata, which resulted in additional resource maintenance only for Metadata. However, with modern architecture and migration to Synapse for analytics, there is an opportunity to move away from other Metadata stores such as ASQL database.

To address this, we explored multiple options to find a new and more effective way to store and read Metadata. After careful consideration, we introduced a new method for Metadata storage that would eliminate the need for ASQL and allow for easier maintenance of Metadata resources.

This novel approach to Metadata storage is based on modern technology and is designed to meet the needs of a Data Engineering team. With this novel approach, the team can better manage Metadata resources as it is easily configurable with Delta tables.

Overall, the introduction of this new Metadata-based ingestion approach represents a significant step forward for every team.

Metadata Store Options

- ASQL

- Dedicated SQL Pool

- Delta Lake tables

- Excel/csv files

- Azure Table Storage

- Serverless SQL Pool

Among the above options, Delta Lake tables are the best fit for storing Metadata, as Delta Lake offers ACID like properties, schema enforcement and data versioning, which makes it more useful.

Pros and cons of Delta Lake tables are as below:

Pros:

- Delta Lake tables are used heavily in all reporting solutions.

- Delta Lake tables support bulk update SQL commands such as update, merge, etc.

- Delta Lake tables support audit and restore previous snapshot of data.

- Delta Lake provides several features such as ACID transactions, schema enforcement, upsert and delete operations, unified stream and batch data processing, and time travel (data versioning) that are incredibly useful for analytics on Big Data.

Cons:

- Synapse pipelines cannot directly read Delta tables. They can only be read through compute like Spark pool.

How to use Delta tables for Metadata-based ingestion

- Using Serverless SQL pool

- Using Synapse dataflows

- Using Spark notebook

From the above listed options, Serverless SQL pool seems to be the optimal way to read Metadata from Delta tables in Synapse.

SQL serverless pool benefits

- SQL serverless pool can now read Delta tables directly from Delta Lake, just like csv files. This can be leveraged in Synapse pipelines to read Metadata stored in Delta tables.

- SQL serverless pool is always available and part of every Synapse workspace.

- SQL serverless pool does not require any spin up time to become active unlike Spark pools.

- The cost of serverless pool is low, using $5 per TB of processed data. In this scenario, there are only a few Metadata rows that are read, which is cost efficient.

- Costing Model:

- Cost is $5 per TB data processed by the query.

- Cost depends on the amount of data processed:

- Data read/write into Delta Lake.

- Data shuffled in intermediate nodes.

- Creation of statistics.

- Minimum data processed will be 10 MB.

Metadata (Delta table) based ingestion using Serverless SQL pool.

Using Serverless SQL pool, we can query Delta tables to read our Metadata and then start our orchestration process using pipelines. For sample Metadata, please refer to the GitHub repository mentioned in appendix.

How to use Serverless SQL pool to read Metadata:

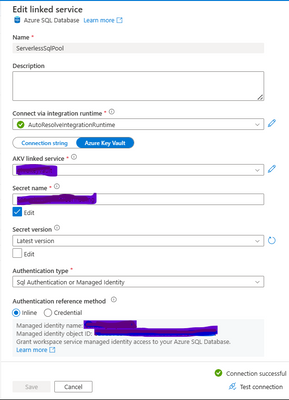

- Creation of linked service:

Prerequisite: Create at least one database under serverless pool for Metadata.

Connection String: Integrated Security=False; Encrypt=True; Connection Timeout=30; Data Source=synapseserverlesspoolname-ondemand.sql.azuresynapse.net ; Initial Catalog=DBName

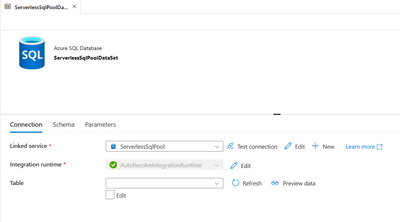

2. Create a new dataset using the linked service created in step 1 and keep the table name empty.

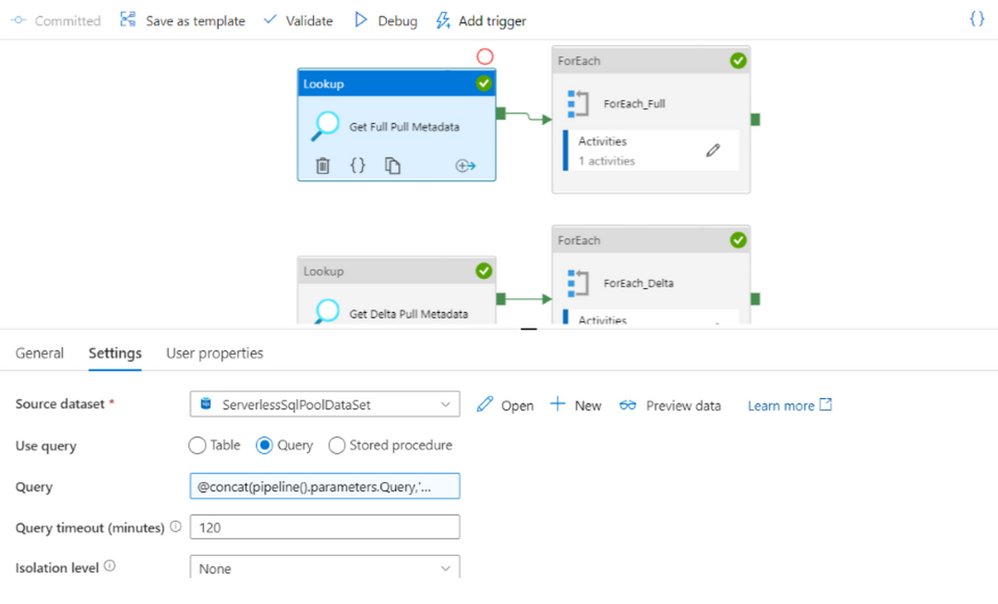

3. As shown in below snapshot, Create a pipeline that uses Look-up activity to read Metadata from Delta Lake. In this Look-up activity we are connecting to dataset (from point 2) to fire user customized query on Delta table. Once the Look-up activity retrieves all rows from the Metadata table, the For-loop activity is used to iterate through each Metadata row from Look-up activity output. And within For-loop activity, a copy activity is used to read data from source to sink using query/table option for given column SourceQuery/SourceEntity from Metadata row, respectively.

Overall, this pipeline efficiently leverages the Look-up and For-loop activities to retrieve Metadata and iterate through it, while the copy activity facilitates data movement from the source to the sink using the appropriate query or table options based on the Metadata information.

Look-up activity to fire user customized query on Delta table as shown below:

Query:

SELECT * FROM OPENROWSET (BULK 'filepath', FORMAT = 'delta') as rows

NOTE:

Use filepath above in format as given: https://datalakegen2.blob.core.windows.net/syanpseworkspace/data/common/metadata/entitymetadata/

With Metadata-based ingestion, Synapse pipeline is simplified and it contains only a few pipeline items like copy activity, for-loop, and look-up, reducing the need of adding 100 of copy activities if you are processing data for 100 entities each day, which makes pipeline huge and difficult to maintain. Metadata-based pipeline makes orchestration simple and clean.

Process to merge raw data into gold table

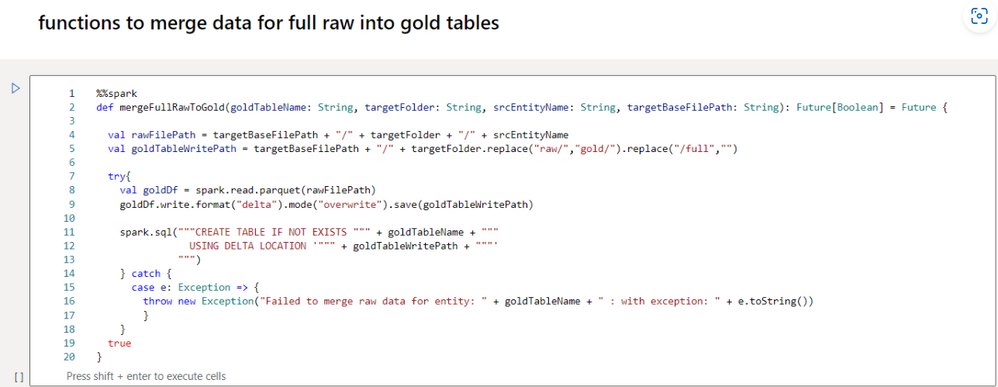

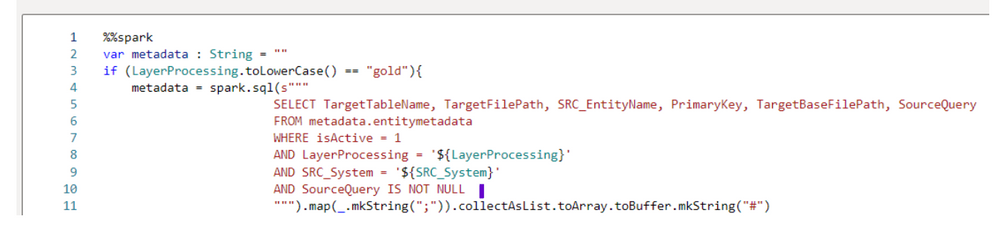

Once we have data copied into raw layer, we call a Synapse notebook which reads Metadata and merge raw parquet files data into gold tables in parallel threads as shown below:

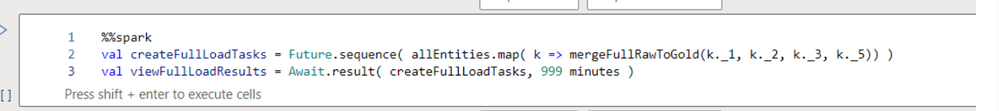

Steps to make notebook in parallel call fashion:

- Create methods which will have logic to merge data to existing tables:

2. Read Metadata in a data frame:

- Call method created above in parallel fashion (async calls):

Advantages:

- As the Synapse session takes 2-3 mins to start, it will save a lot of time by processing multiple entities in parallel executions.

- With Metadata driven approach if there is a change in any attribute, like entities addition/removal, Delta Lake path, then you just need to change Metadata and code will take care of the rest.

- Minimal cost: cost is $5 per TB data processed by the query.

Conclusion

Delta Lake is a valuable resource for persisting data in storage, offering ACID-like properties that are particularly useful for analytics and reporting. It provides several other features such as schema enforcement, upsert and delete operations, unified stream and batch data processing, and time travel (data versioning) that are incredibly useful for analytics on Big Data.

A streamlined ingestion process is critical for ETL. Metadata-based ingestion provides an effective solution for achieving a smooth ingestion flow, with only a one-time setup required. Pipeline activities can be easily extended, to add new entities or retire existing datasets.

In this article, we have explored how Delta Lake can be accessed by different forms of compute in Synapse such as Spark pools and SQL serverless. And how these compute steps can be orchestrated in a single Synapse pipeline. This, combined with the other benefits of Delta Lake mentioned above, make it an incredibly useful format for data ingestion and storage in an ETL process and in Synapse pipelines.

Appendix

- Code Repository: Metadata-IngestionUsing-ServerlessSQLPool

- Other Helpful articles:

Published on:

Learn more