Boost your CICD automation for Synapse SQL Serverless by taking advantage of SSDT and SqlPackage CLI

Introduction

Azure Synapse Analytics Serverless SQL is a query service mostly used over the data in your data lake, for data discovery, transformation, and exploration purposes. It is, therefore, normal to find in a Synapse Serverless SQL pool many objects referencing external locations, using disparate external data sources, authentication mechanisms, file formats, etc. In the context of CICD, where automated processes are responsible for propagating the database code across environments, one can take advantage of database oriented tools like SSDT and SqlPackage CLI , ensuring that this code is conformed with the targeted resources.

In this article I will demonstrate how you can take advantage of thee tools when implementing the CICD for the Azure Synapse Serverless SQL engine. We will leverage SQL projects in SSDT to define our objects and implement deploy-time variables (SQLCMD variables). Through CICD pipelines, we will build the SQL project to a dacpac artifact, which enables us to deploy the database objects one or many times with automation.

Pre-Requisites

Before you run this lab, make sure that you are using the latest version of Visual Studio, since the support for Synapse Serverless was recently introduced in the 17.x version. The one that I've used in this lab was Microsoft Visual Studio Community 2022 (64-bit) Version 17.7.3.

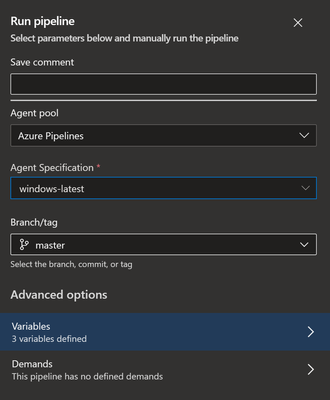

I've used Azure DevOps Git to setup the automated processes to build and deploy the Dacpac. In case you are using your own infrastructure to run these processes, ensure that you have installed the latest SqlPackage version in your agent runner machine. In this lab, I've used a Microsoft-Hosted Agent runner using the latest Windows image (running SqlPackage v162.0.52).

TOC

To facilitate navigating through this lab, I'm breaking down this article in several steps:

Step 1: Adding a new database project to Visual Studio and importing the serverless pool

Step 2: Taking advantage of SQL CMD Variables in your Visual Studio code

Step 3: Integrating your Visual Studio solution with a Git Repository

Step 4: Creating a DevOps Pipeline and building the Dacpac file

Step 5: Creating a Release Pipeline and deploying the Dacpac

Starting the Lab

Step 1: Adding a new database project to Visual Studio and importing the serverless pool

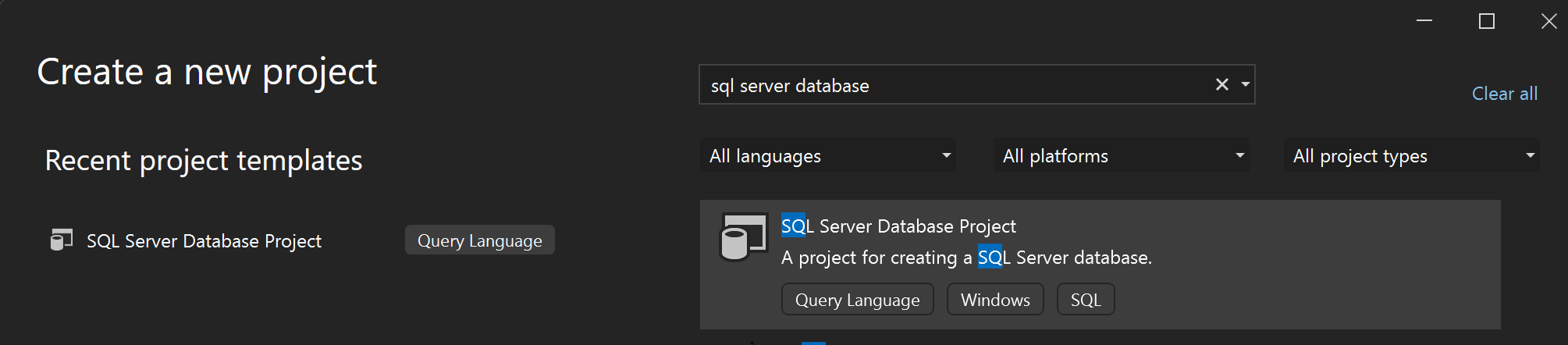

Create a new project and select the "SQL Server Database Project" template.

Select "Next" to start configuring your new project.

Select "Create" to finish the project configuration.

Navigate to the Solution Explorer blade and double-click "Properties". This will open a new window displaying the database project properties.

In "Project Settings", select the "Azure Synapse Analytics Serverless SQL Pool" target platform as shown in the figure below. If you don't see this option available in the dropdown list, most likely you don't have the latest SSDT/ Visual Studio version installed.

Note: You can refer to these links in case you need to update SSDT and Visual Studio:

Download SQL Server Data Tools (SSDT) - SQL Server Data Tools (SSDT) | Microsoft Learn

SQL Server Data Tools - SQL Server Data Tools (SSDT) | Microsoft Learn

After selecting the target platform, you can start importing the Synapse Serverless pool to your project.

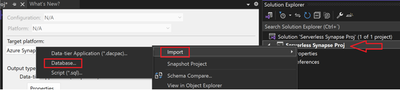

To do this, from the "Solution Explorer" blade, right-click the project name and then select the "Import" --> "Database…" option.

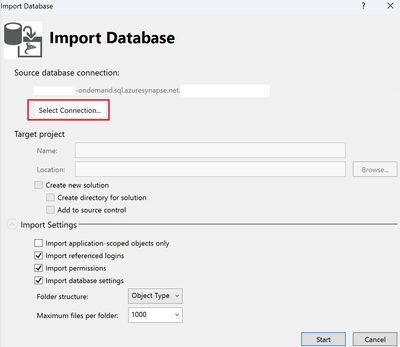

Hit the "Select Connection" button to specify your Synapse Serverless pool and from the "Import Settings" section, make sure to uncheck the "Import application-scoped objects only" in case you need to import any server-scoped objects as well.

Select "Start" to begin importing the Serverless SQL pool objects to your project.

When the import is finished, check the Solution Explorer as it will show the sql files containing your database objects:

Step 2: Taking advantage of SQL CMD Variables in your Visual Studio code

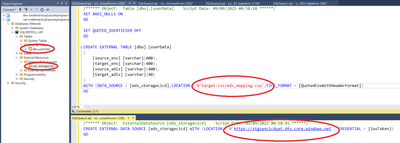

In this lab, I'm using an external table that is pointing to a specific external location in my development environment. This external table, named "userData", is targeting a delimited file, named "eds_mapping.csv", saved in a storage container named "csv".

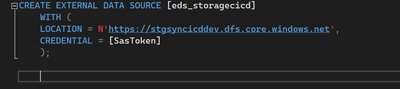

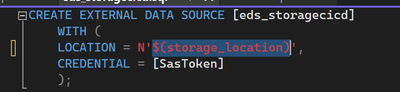

The data source being used by this external table is named "eds_storagecicd" and it is targeting a storage account named "stgsyncicddev".

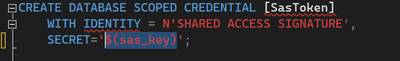

I've decided to use the Shared Access Signature authentication method, when accessing my storage account. That's why I'm defining a database scoped credential with this kind of authentication.

IMPORTANT NOTE:

In this screenshot above you are not seeing the full t-sql statement as the SECRET argument is missing from the CREATE DATABASE SCOPED CREDENTIAL statement. However, a SECRET value was provided at creation time. This is a by design behavior (for security reasons) in Visual Studio, when importing your database objects from your database to the database project, sensitive information is not being exposed in the code.

So, make sure you revise any code that is using sensitive information, like database scoped credentials, validating if the object definition is consistent with what you have in the database.

Here's an example of an error message that can result from deploying an external table to a target environment using a missing or invalid credential:

Msg 16562, Level 16, State 1, Line 27

External table 'dbo.userData' is not accessible because location does not exist or it is used by another process.

Usually, when deploying database objects like external tables from a source environment to a target environment, you require these objects to reference different resources. For example, the files that are referenced by external tables might be stored in a different storage location or storage path (and eventually stored with a different filename).

So, how can you ensure that your database objects are referencing the right resources when being deployed to a target environment?

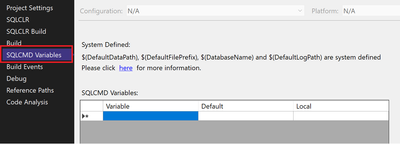

The answer is using SQLCMD Variables. These variables can be used in SQL Server Database Projects, providing dynamic substitution to be used for publishing of Dacpac files, for example. By entering these variables in project properties, they will automatically be offered in publishing and stored in publishing profiles.

In Visual Studio, you can add these variables to your project, from the "Project Properties" window, by selecting the "SQLCMD Variables" menu option:

Important note: by adding these variables to your code in Visual Studio, you are not changing anything at database level, your changes will be reflected in your project files only.

In this example, I'm creating three new variables , setting the values for the storage location, the file path, and the SAS key that will be used by the external table "userData" in my development environment:

In the figures below you can see how the hardcoded values have been replaced by these SQLCMD variables in my database project files:

From the "File" menu, you can save all the changes, and before moving on to the next step, I'd recommend building your solution, from the "Build" menu, ensuring that your code is error free.

And with this last action, we complete the lab's second step.

Step 3: Integrating your Visual Studio solution with a Git Repository

By having your Visual Studio solution integrated with a Git repository, you are leveraging source control in your project and improving your Continuous Integration process, as part of the CICD automation for your Synapse Serverless pool.

As part of this process, the goal is to push the changes from your Visual Studio project to your Git branch, as the Dacpac file will be built on top of these files. This Dacpac file will represent the outcome of this Continuous Integration process.

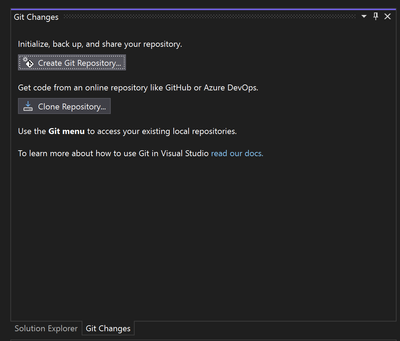

To integrate your Visual Studio solution with your Git provider, switch from the "Solution Explorer" tab to the "Git Changes" tab (you can access this tab from the "View" menu).

From "Git Changes", select the "Create Git Repository…" option to initialize a local Git repository.

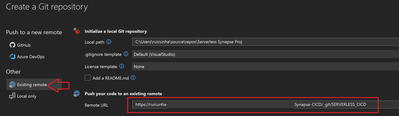

To create a new remote repository to push your changes, select the "Push to a new remote" option, otherwise, in case you prefer to use an existing remote repository, select "Existing remote" and provide your repository URL.

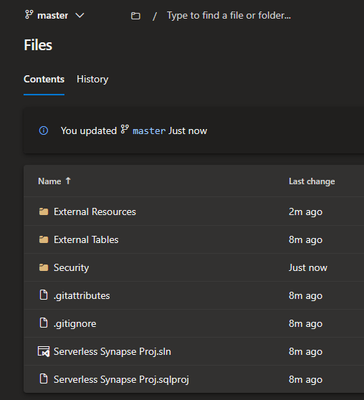

Select "Create and Push" to complete the Git integration. During this integration, your project files will be automatically pushed to your remote repository. You can check the master branch in your remote repository, as it should contain all your project files:

Step 4: Creating a DevOps Pipeline and building the Dacpac file

After integrating your Visual Studio database project with your Git repository, it's time to setup a DevOps Pipeline to build the Dacpac.

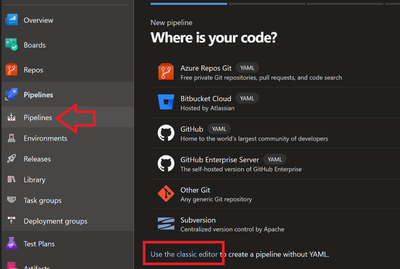

From the left navigation menu, select "Pipelines" and then "New pipeline" to create a new pipeline.

Select "Use the classic editor" to create a new pipeline without YAML.

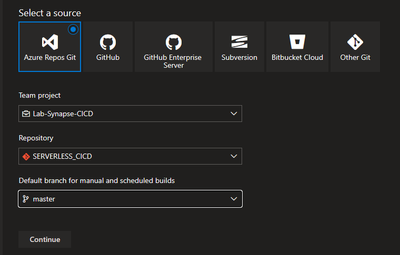

Select "Azure Repos Git" as the source type, and then specify your project name, repository and branch.

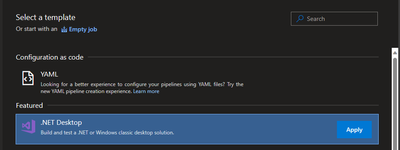

Select the .NET desktop template.

To simply your pipeline, you can just keep these tasks below:

Select "Save & Queue" to save your changes and then select "Save and Run" to run your pipeline.

Once the job run is finished, you can validate the list of published artifacts by selecting the link below:

The link will take you to the Dacpac file:

Now that the Dacpac file has been published, it's now time to configure the Continuous Deliver process.

Step 5: Creating a Release Pipeline and deploying the Dacpac

In this step I'm creating a new release pipeline to deploy the Dacpac file to a target Synapse Serverless SQL pool.

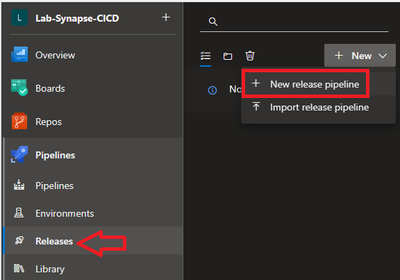

From the left navigation menu, select "Pipelines" and then select "Releases".

To start configuring your new release pipeline, select "+New" and then "New release pipeline".

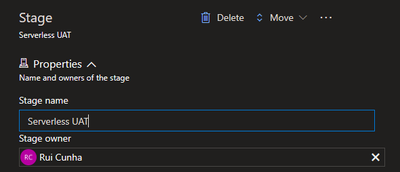

When prompted to select a template, select "Empty Job".

you can name your stage and then close this blade.

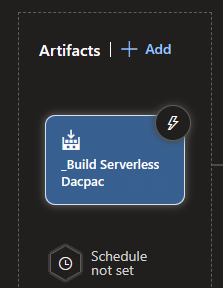

Let's start by adding our Dacpac file as a pipeline artifact. Select "+Add" to add a new artifact.

Under "Source Type" select "Build". you must specify your project name and the build pipeline name.

Select "Add" to add this artifact to your pipeline.

Select the "Tasks" tab to start configuring your pipeline. Click the "+" button in the Agent Job bar to add a new task.

You can type "data warehouse" in the search bar , as you're looking to add the "Azure SQL Datawarehouse deployment" task to your release pipeline. This task will allow deploying a Dacpac file to the target environment.

Select "Add" to add this task to your pipeline.

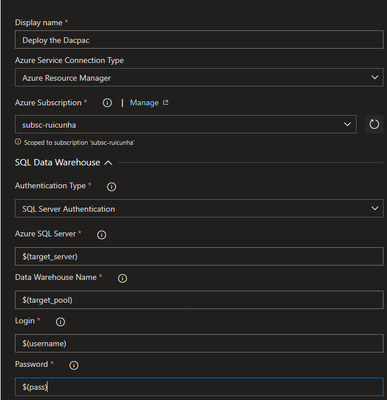

Let's start by configuring the authentication related inputs in this task. Instead of using hardcoded values, I'll take advantage of the user defined Variables in my DevOps pipeline.

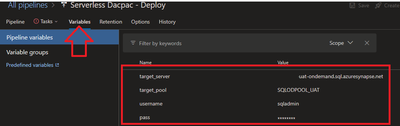

In order to define and set the values for your variables, you must select the "Variables" tab. I'm using these variables below, defining values for my target Synapse Serverless server, database and user credentials.

Back to the "Tasks" tab, let's continue configuring our task , in particular the "Deployment Package" section.

When you select the "SQL DACPAC file" deploy type, the deployment task will execute the SqlPackage CLI to deploy (publish) the Dacpac file. The SqlPackage is a command line utility built on top of the Data-Tier Application Framework (DacFx) framework , and it exposes some of the public DacFx APIs like the Extract, Publish and Script. Since we want to deploy a dacpac file, the action that we are interested in is the PUBLISH action.

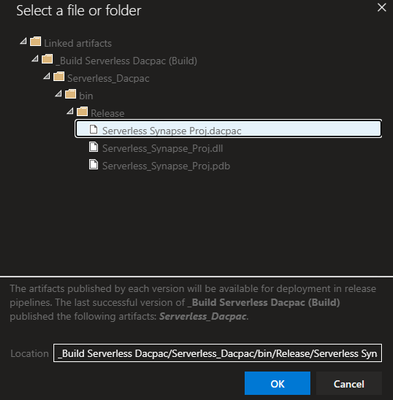

To specify the "DACPAC file" location, hit the "Browse" button

Specify the Dacpac file location from the linked artifact:

There's a final step that you need to take before saving and running your release pipeline: replacing the SQLCMD variables values with new values pointing to your target environment, as these variables are still referencing the resources in your source environment.

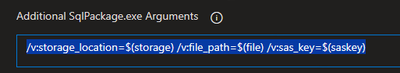

Any valid SQLCMD variable existing in the Dacpac can be overridden by adding the /v: (short form for /Variables:) property to the arguments list.

You can refer to this link to get more details on how to use SQLCMD variables in SqlPackage:

SqlPackage Publish - SQL Server | Microsoft Learn

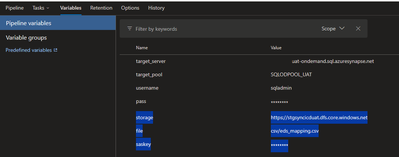

In this example, because I'm using those three SQLCMD variables in Visual Studio (storage_location , file_path and sas_key) , I'm adding three user defined variables to my pipeline, to override the SQLCMD variables.

My external table "userData" will be pointing to a different storage account (stgsyncicduat instead of stgsyncicddev) and to a different file path (target-csv/eds_mapping.csv instead of csv/eds_mapping.csv). I'll be obviously replacing the storage account SAS key as well.

After defining the pipeline variables, return to the task configuration, as you need to configure the SQLCMD variable replacement. This is done via SqlPackage arguments, when using the variables property. Using variables will instruct SqlPackage to override the SQLCMD variables being used in the Dacpac file with the new values defined in your DevOps variables.

This is how I'm overriding my SQLCMD variables (storage_location, file_path, and sas_key).

After the configuration is complete, hit the "Save" button and then select "Create Release" to run your release pipeline.

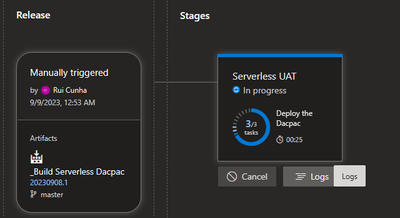

You can track the release progress by selecting the release number link or by selecting the "View Releases" button.

Once in the release, you can mouse over the stage name, and select the "Logs" button to get more details about the actions being performed during the job run.

After the execution is completed, the task output should look similar to this:

To validate that the deployment went well, and all the objects are now pointing to the target environment resources, you can use a client tool such SSMS.

Et voila! My Synapse serverless objects were successfully deployed to the target environment and they are now pointing to a different external location :)

Conclusion

By completing this lab, you should have learned how to take advantage of database oriented tools (like SSDT or SqlPackage) to boost your CICD automation for Azure Synapse Serverless SQL pools. These tools will facilitate the deployment of database changes across the environments, by providing deploy-time variables (SQLCMD variables) that are particularly helpful in the context of CICD for an Azure Synapse Serverless SQL pool, where you must adapt your database objects to the target environment resources.

Published on:

Learn moreRelated posts

Power Automate: Get support for normalized schema import for data ingestion

Customers with existing data pipelines or data products in data mesh mostly want to stick to normalized, efficient forms such as star schema. ...

Power Automate: Export object-centric process mining data to Microsoft Fabric semantic model

Publishing to Microsoft Fabric breaks down data silos and amplifies the impact of your process insights across your organization. Instead of k...

Power Automate: Create and visualize custom KPIs in Process Intelligence Studio

Custom KPIs put your unique business priorities at the center of process analysis. While standard metrics provide valuable baseline insights, ...

Power Automate: Analyze your processes in Process Intelligence Studio

Process Intelligence Studio eliminates the friction between your questions and your answers. Instead of navigating rigid dashboards or using a...

Power Automate: Configure Entra hybrid join for hosted machine groups

Microsoft Entra hybrid join with custom virtual networks (VNETs) and hosted machine groups lets your hosted machine group bots enroll in both ...

Power Automate: Enable version control for desktop flows

With version control in Power Automate for desktop, you can see what changes were made and who made them. This feature makes it easier to debu...

Power Automate: Use Power Platform environment variables in desktop flows

Retrieve Power Platform environment variables directly through their desktop flows without the need to pass them as inputs to the flow. A new ...

Power Automate: Create and edit expressions with Copilot

You can create, edit, and fix your Power Automate expressions by indicating your requirements in natural language. By using this feature to in...

Condition vs. Switch in Power Automate: When to Use Each

A common question I hear from newer Power Automate users is when to use Condition vs Switch in the Control connector. Control is available in ...

Connection references and permissions in Power Automate

Have you ever battled with connection references in Power Automate? If not, you probably haven't worked on projects with multiple developers o...