Synapse Spark - Encryption, Decryption and Data Masking

Author(s): Arshad Ali is a Program Manager in the Azure Synapse Customer Success Engineering (CSE) team.

Introduction

As data engineers, we often get requirements to encrypt, decrypt, mask, or anonymize certain columns of data in files stored in the data lake when preparing and transforming data with Apache Spark. Spark's extensibility lets us use libraries that are not native to Spark. One such library is Microsoft Presidio, which provides fast identification and anonymization modules for private entities in text, such as credit card numbers, names, locations, social security numbers, bitcoin wallets, US phone numbers, financial data, and more. It facilitates both fully automated and semi-automated PII (Personally Identifiable Information) de-identification and anonymization flows on multiple platforms.

In this blog post, I demonstrate step by step how to download this library and use it with a Spark pool in Azure Synapse Analytics to meet the above requirements.

Getting it ready

Microsoft Presidio is an open-source library from Microsoft that can be used with Spark to ensure private and sensitive data is properly managed and governed. It provides two main modules: the analyzer module, for fast identification of private entities in text, and the anonymizer module, for anonymizing those entities.

Presidio analyzer

The Presidio analyzer is a Python-based service for detecting PII entities in text. During analysis, it runs a set of PII recognizers, each responsible for detecting one or more PII entities using different mechanisms. It comes with a set of predefined recognizers and can easily be extended with custom recognizers. Both predefined and custom recognizers use regular expressions, Named Entity Recognition (NER), and other logic to detect PII in unstructured text.

You can download this library from here by clicking on “Download files” under Navigation on the left of the page: https://pypi.org/project/presidio-analyzer/

Presidio anonymizer

The Presidio anonymizer is a Python-based module for anonymizing detected PII text entities with desired values. It supports both anonymization and deanonymization by applying different operators. Operators are built-in text manipulation classes that, like the analyzer's recognizers, can easily be extended with custom ones. The module contains both anonymizers and deanonymizers:

- Anonymizers are used to replace a PII entity text with some other value by applying a certain operator (e.g., replace, mask, redact, encrypt)

- Deanonymizers are used to revert the anonymization operation. (e.g., to decrypt an encrypted text).

This library includes several built-in operators.

Step 1 - You can download this library from here by clicking on “Download files” under Navigation on the left of the page: https://pypi.org/project/presidio-anonymizer/

Presidio also includes the Presidio Image Redactor, another Python-based module, which detects and redacts PII text entities in images. You can learn more about it here: https://microsoft.github.io/presidio/image-redactor/

By default, Presidio's NLP engine uses spaCy's open-source en_core_web_lg model, but it can be customized to use other NLP engines, either public or proprietary. You can download this default model from here:

https://spacy.io/models/en#en_core_web_lg

https://github.com/explosion/spacy-models/releases/tag/en_core_web_lg-3.4.0

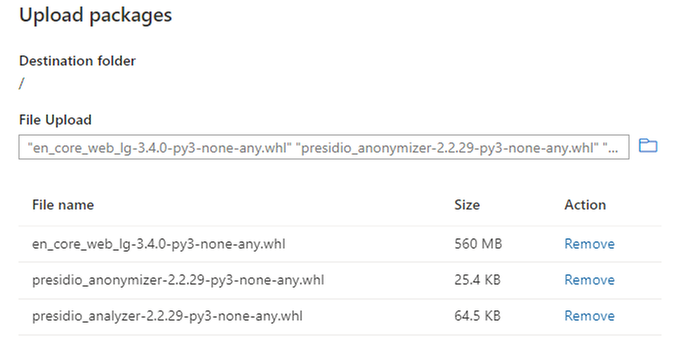

Step 2 - Once you have downloaded all three libraries, upload them to the Synapse workspace as documented here (https://docs.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-manage-workspace-packages) and shown in the image below:

Figure 1 - Upload Libraries to Synapse Workspace

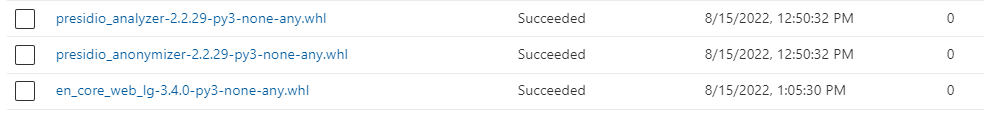

Because the NLP engine library is larger than the other two, you might have to wait a few minutes for the upload to complete. Once the uploads succeed, you will see a “Succeeded” status for each library, as shown below:

Figure 2 - Required libraries uploaded to Synapse Workspace

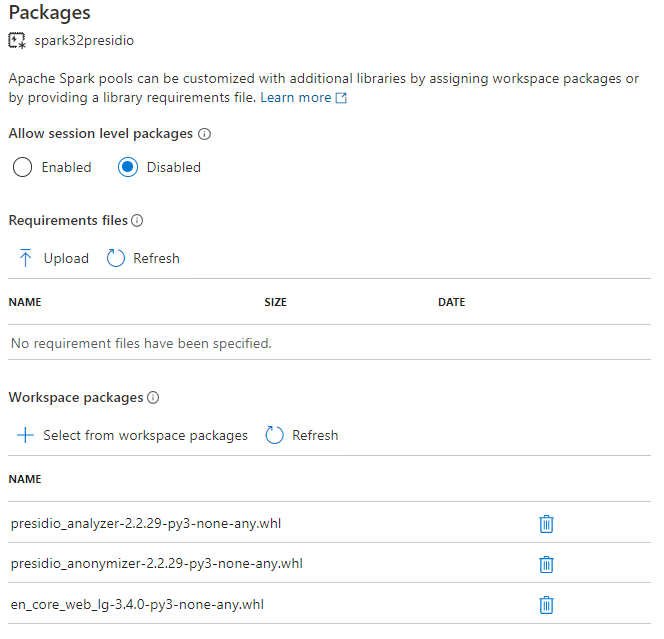

Step 3 - Next, apply these libraries from the Synapse workspace to the Spark pool where you are going to use them. The instructions are here, and the screenshot below shows how it looks:

https://docs.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-manage-pool-packages

Figure 3 - Applying libraries to Synapse Spark pool

When you click “Apply”, Synapse triggers a system job that installs and caches the specified libraries on the selected Spark pool. This process helps reduce overall session startup time. Once the system job completes successfully, all new sessions pick up the updated pool libraries.
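Once a new session has started, a quick way to confirm that it picked up the pool libraries is to query their installed versions. This is a hedged sketch using only the Python standard library; the distribution names in `REQUIRED_PACKAGES` are assumptions based on the PyPI package names and the spaCy model name.

```python
from importlib.metadata import PackageNotFoundError, version

# Assumed distribution names for the three uploaded libraries.
REQUIRED_PACKAGES = ["presidio-analyzer", "presidio-anonymizer", "en_core_web_lg"]


def installed_versions(packages):
    """Return {package: version} with None for packages that are not installed."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(REQUIRED_PACKAGES).items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```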

Putting it all together in action

Step 1 - First things first, we need to import the relevant classes and modules from the libraries we just applied to the Spark pool (along with any others we need from existing libraries).

Presidio Analyzer

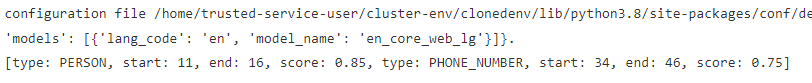

Step 2 - Next, you can use the analyzer module to detect PII entities in text. Here is an example that detects a phone number in the given text.

As you can see, it detected the phone number starting at position 19 and ending at position 31 (the end offset is exclusive), with a confidence score of 0.75.

In addition to the phone number entity used above, you can use any of the other built-in entities, or your own custom-developed entities.

For example, you can pass both the PERSON and PHONE_NUMBER entities to detect a person's name and a phone number in the same text.

Presidio anonymizer

Step 3 - Once you have used the analyzer to identify text containing private or sensitive data, you can use the anonymizer engine to anonymize it with different operators.

Anonymization Example

Here is an example of anonymizing the identified sensitive data with the replace operator. For simplicity, this example hard-codes the recognizer results; at runtime, they can come directly from the analyzer.

Figure 4 - Person name anonymized by using replace operator

Encryption Example

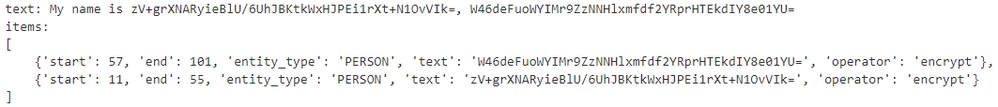

Step 4 - The next example demonstrates how to use the encrypt operator to encrypt identified sensitive data in the text. Again, for simplicity, the recognizer results are hard-coded; at runtime, they can come directly from the analyzer.

The encryption key is also hard-coded here; in practice, you should retrieve it from Azure Key Vault: https://docs.microsoft.com/en-us/azure/synapse-analytics/spark/microsoft-spark-utilities?pivots=programming-language-python#credentials-utilities

Figure 5 - Person name anonymized by using encrypt operator

Decryption Example

Step 5 - Just as the encrypt operator encrypts identified private and sensitive data, the decrypt operator decrypts previously encrypted data, using the same key that was used for encryption.

Figure 6 - Person name decrypted by using decrypt operator

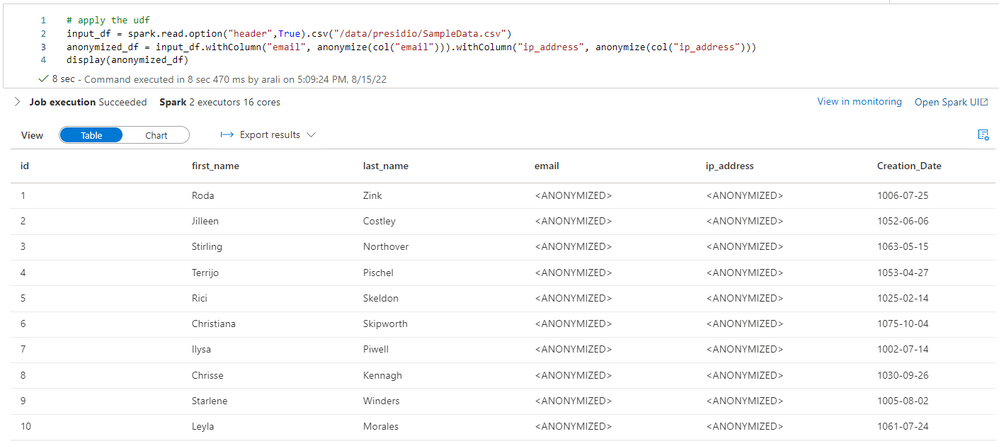

Spark Example – Using it with Dataframe and UDF

Apache Spark is a distributed data processing platform. To use these libraries in Spark, you can encapsulate the Presidio logic in a user-defined function (UDF) and then apply that function to columns of a Spark DataFrame to anonymize, encrypt, or decrypt their values. The figures below demonstrate this with the replace operator.

Figure 7 - Sample data before anonymization (Email and IP Address)

Figure 8 - Sample data anonymized for Email and IP Address

What we discussed so far barely scratches the surface. Presidio includes samples for many other scenarios; you can find them here: https://microsoft.github.io/presidio/samples/

The Presidio FAQ is available here: https://microsoft.github.io/presidio/faq/

Summary

As we strive for better control and governance for compliance, we are often tasked with encrypting, decrypting, masking, or anonymizing columns that contain private or sensitive information. In this blog post, I demonstrated how you can use the Microsoft Presidio library with a Spark pool in Azure Synapse Analytics to perform these operations on data at scale.

Our team publishes blog posts regularly, and you can find all of them here: https://aka.ms/synapsecseblog

For a deeper understanding of Synapse implementation best practices, please refer to our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design
