CI & CD With Azure Synapse Dedicated SQL Pool

Author(s): Pradeep Srikakolapu is a Program Manager in the Azure Synapse Customer Success Engineering (CSE) team.

Automating development practices is hard, but version control, continuous integration and deployment (CI/CD), and a few best practices make it simpler to manage the application lifecycle (ALM) of an Azure Synapse data warehouse. This blog article shows how.

Introduction

This article helps developers understand the following:

- How to version control Synapse Dedicated SQL Pool (Azure data warehouse) objects

- How to continuously develop and deploy data warehouse objects using SSDT and SQL Package

- How to selectively deploy objects from a DACPAC file

To follow this article, you should have:

- A basic understanding of Git, and a repository in an Azure DevOps or GitHub organization

- An understanding of how the SSDT and SQL Package tooling works

- Familiarity with Azure DevOps Pipelines

Note: Synapse Serverless SQL Pool is not discussed in this blog. I will blog about version control and CI/CD for Synapse Serverless soon.

Version control for a data warehouse is achieved with SQL Server Data Tools (SSDT) and a database project. You can build a database project using Visual Studio, VS Code, or Azure Data Studio. I use Visual Studio 2022 in this blog.

Build & publish database code locally from a developer machine

Create a database project: go to File > New > Project, then choose the SQL Server Database Project template.

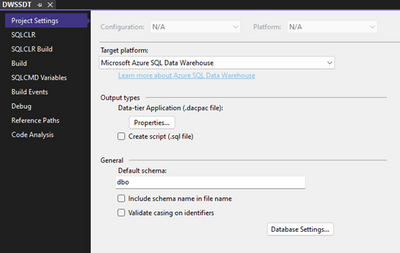

Once the database project is created, right-click the project in Solution Explorer and open its properties. Set the target platform to “Microsoft Azure SQL Data Warehouse”.

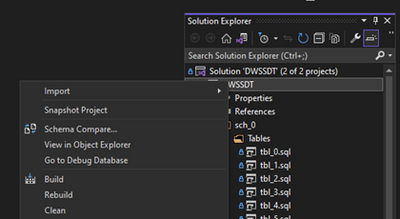

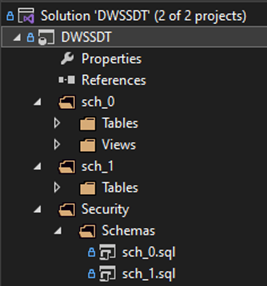

Organize the database project in the same way we organize the objects in SSMS.

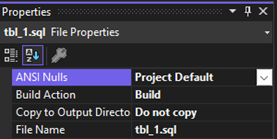

In the screenshot above, I organized the schema files in the Security -> Schemas folder. I also organized tables and views by schema for better abstraction, much as SSMS does, with minor differences. Make sure the .sql files are part of your build by setting each file's Build Action property to “Build” and its Copy to Output Directory property to “Do not copy”.

You can build the DACPAC file by building the database project from its context menu, or by building the entire solution (Ctrl+Shift+B by default in Visual Studio). The project's context menu has a Build option that compiles the database project into a DACPAC file.

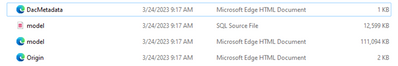

SSDT and SQL database projects use MSBuild to compile the source code and produce the DAC package (DACPAC) file. A DACPAC is a zip archive: if you unzip it, you will see the DacMetadata.xml, Origin.xml, model.xml, and model.sql files. The model.sql and model.xml files are the representation of your database model.
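Because a DACPAC is a zip archive, you can inspect it without any SQL tooling. The following is a minimal Python sketch using only the standard library; the function name and the example path are my own placeholders, not part of SSDT.

```python
import zipfile


def list_dacpac_parts(dacpac_path):
    """Return the names of the files stored inside a DACPAC (a zip archive)."""
    with zipfile.ZipFile(dacpac_path) as package:
        return sorted(package.namelist())


# Hypothetical usage, assuming the project was built in the Debug configuration:
# for name in list_dacpac_parts("bin/Debug/MyWarehouse.dacpac"):
#     print(name)
```

This is handy in a pipeline as a quick sanity check that the build really produced a package with a model in it.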

Once you have built a DACPAC file from the database project, you can deploy it to the target data warehouse with the SQL Package Publish action, either from the database project in Visual Studio or by running SqlPackage.exe from the command line.

To publish from the database project, you need to configure the target database connection. The other way is to run SqlPackage.exe directly and pass the target connection as arguments.
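As a rough sketch of the command-line route, the Python snippet below assembles a SqlPackage Publish command. The `/Action`, `/SourceFile`, `/TargetServerName`, `/TargetDatabaseName`, `/TargetUser`, and `/TargetPassword` parameters are standard SqlPackage parameters; the server, database, user, and file names are placeholders I made up, and the snippet assumes SqlPackage is on your PATH.

```python
import subprocess


def build_publish_command(dacpac, server, database, user, password,
                          sqlpackage="SqlPackage"):
    """Build the argument list for a SqlPackage Publish run."""
    return [
        sqlpackage,
        "/Action:Publish",
        f"/SourceFile:{dacpac}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
        f"/TargetUser:{user}",
        f"/TargetPassword:{password}",
    ]


def publish(command):
    """Run SqlPackage; raises CalledProcessError if the publish fails."""
    subprocess.run(command, check=True)


# Placeholder values for illustration only.
command = build_publish_command(
    dacpac="bin/Debug/MyWarehouse.dacpac",
    server="myworkspace.sql.azuresynapse.net",
    database="mydedicatedpool",
    user="sqladminuser",
    password="<read this from a secret store>",
)
# publish(command)  # uncomment on a machine where SqlPackage is installed
```

Passing the arguments as a list avoids shell quoting problems with paths and passwords.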

Continuous integration - Build & deploy database project with Azure pipelines

As a developer, you can build data warehouse code and publish it locally. But what if you need to deploy the database code to a development, test, or production environment? We don't want to deploy source code to any environment manually. The answer is to enable continuous integration and deployment on the source, using Azure Pipelines in Azure DevOps or Actions in GitHub. In this example, I share the source code repository and the Azure pipelines that enable CI and CD for database code development and deployment.

Add a YAML file to your source code to perform three tasks. I am using a multistage Azure pipeline to show these three tasks.

- Build the database project and publish artifacts from this stage. Note that publishing pipeline artifacts is not the same as the Publish action in SQL Package.

- Compare/verify the DACPAC file from the build stage against the target data warehouse. The target data warehouse can be a dev, test, or production environment.

- Deploy the DACPAC file to the target environment's data warehouse.

Build Database Project & Publish Artifacts

- task: VSBuild@1 uses MSBuild to generate the DACPAC file in /{project folder}/bin/{release/debug}/{project name}.dacpac

- task: CopyFiles@2 copies the DACPAC file and the AgileSqlClub.DeploymentFilterContributor.dll file to the artifact staging directory ('$(build.artifactstagingdirectory)').

- task: PublishPipelineArtifact@1 copies all files and folders from '$(build.artifactstagingdirectory)' to a folder named ‘drop’ and publishes that folder for the next stages.

Verify DACPAC & compare with target data warehouse

The Verify stage checks what the DACPAC is about to change in the target before anything is deployed.

- download: current task downloads the ‘drop’ folder and its content, generated by the previous stage (Build), for the DACPAC verification process.

- task: SqlAzureDacpacDeployment@1 with DeploymentAction: Script uses SqlPackage.exe to run a schema compare between the objects in the DACPAC file and the target data warehouse. It generates a differential script based on the arguments provided, so that the content of the script can be reviewed.
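To make the Script action concrete, here is a hedged Python sketch of the equivalent SqlPackage call, plus one possible review aid. `/Action:Script` and `/OutputPath` are standard SqlPackage parameters; the `find_drop_statements` helper is purely my own illustration of a check a reviewer might automate, not something the pipeline task does for you.

```python
import re


def build_script_command(dacpac, server, database, user, password,
                         output_path, sqlpackage="SqlPackage"):
    """Build the argument list that writes the differential script to a file."""
    return [
        sqlpackage,
        "/Action:Script",
        f"/SourceFile:{dacpac}",
        f"/OutputPath:{output_path}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
        f"/TargetUser:{user}",
        f"/TargetPassword:{password}",
    ]


def find_drop_statements(script_text):
    """Return the lines of a generated script that begin with DROP.

    A simple line-based scan (an assumption about what is worth flagging);
    it does not parse T-SQL and ignores commented-out lines.
    """
    pattern = re.compile(r"^\s*DROP\s+\w+", re.IGNORECASE)
    return [line.strip() for line in script_text.splitlines()
            if pattern.match(line)]
```

A verify stage could fail, or ask for manual approval, whenever `find_drop_statements` returns anything for the generated script.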

Deploy DACPAC using SQL Package

The Deploy stage publishes the DACPAC file to the target data warehouse. The Publish action generates the differential script between the DACPAC and the target data warehouse, and then runs that script against the target data warehouse.

- download: current task downloads the ‘drop’ folder and its content, generated by the Build stage, for the DACPAC deployment/publish process.

- task: SqlAzureDacpacDeployment@1 with DeploymentAction: Publish deploys the differential script to the target data warehouse.

Selective Deployment – Publish Action

The SQL Package Publish action supports several additional parameters to ignore objects by type. Properties such as DoNotDropObjectTypes, DropObjectsNotInSource, ExcludeObjectTypes, and others listed in the SqlPackage Publish documentation on Microsoft Learn let you selectively deploy the object type(s) of your choice. However, SQL Package does not support selective deployment of specific objects by name or regular expression. Many customers with a large data warehouse (>2000 objects) have trouble deploying DACPAC solutions because they cannot target objects by name.

The GoEddie/DeploymentContributorFilterer project on GitHub provides a way to filter objects by name or regular expression as part of DACPAC deployment. Pass the additional arguments AdditionalDeploymentContributors, AdditionalDeploymentContributorPaths, and AdditionalDeploymentContributorArguments to the Publish action to apply filters before the DACPAC is deployed.
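For the type-level properties, a small Python sketch like the one below can assemble the `/p:` arguments. The three property names come from the SqlPackage documentation, and the list-valued ones take semicolon-delimited object types; the helper name and the example types are assumptions for illustration.

```python
def object_type_properties(exclude_types=(), drop_objects_not_in_source=False,
                           do_not_drop_types=()):
    """Build SqlPackage /p: properties that filter a publish by object type."""
    properties = []
    if exclude_types:
        # Object types listed here are ignored entirely during deployment.
        properties.append("/p:ExcludeObjectTypes=" + ";".join(exclude_types))
    properties.append(
        f"/p:DropObjectsNotInSource={drop_objects_not_in_source}")
    if do_not_drop_types:
        # Only meaningful when DropObjectsNotInSource is True.
        properties.append(
            "/p:DoNotDropObjectTypes=" + ";".join(do_not_drop_types))
    return properties


# Example: leave security principals alone and never drop target-only objects.
example = object_type_properties(exclude_types=["Users", "Logins"])
```

The resulting strings can be appended to a SqlPackage argument list, or joined with spaces for the AdditionalArguments input of the pipeline task.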

- AdditionalDeploymentContributors takes the namespace of the library. This library is applied as an additional deployment contributor as part of the Publish action.

- AdditionalDeploymentContributorPaths takes the path of the library .dll as input.

- AdditionalDeploymentContributorArguments takes filters as input to ignore, filter, or keep objects in the DACPAC for the Publish action.
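The three arguments can be assembled as in the Python sketch below. The contributor name matches the AgileSqlClub.DeploymentFilterContributor.dll copied in the Build stage. The `SqlPackageFilterN=...` numbering and the `IgnoreSchema(...)` / `IgnoreName(...)` filter strings follow the syntax as I recall it from the project's README, so treat them as assumptions and check the README before relying on them; the path and filter values are placeholders.

```python
def contributor_properties(contributor_path, filters):
    """Build the /p: properties that load the filter contributor.

    `filters` is a list of filter expressions such as "IgnoreSchema(staging)".
    """
    properties = [
        "/p:AdditionalDeploymentContributors="
        "AgileSqlClub.DeploymentFilterContributor",
        f"/p:AdditionalDeploymentContributorPaths={contributor_path}",
    ]
    # Assumed README convention: number each filter, separate with semicolons.
    numbered = [f"SqlPackageFilter{index}={expression}"
                for index, expression in enumerate(filters)]
    properties.append(
        "/p:AdditionalDeploymentContributorArguments=" + ";".join(numbered))
    return properties


# Placeholder path and filters for illustration only.
example = contributor_properties(
    "C:/agent/drop",
    ["IgnoreSchema(staging)", "IgnoreName(tmp_.*)"],
)
```

If you paste these values into the AdditionalArguments input of SqlAzureDacpacDeployment@1 instead of passing them as a list, quote the values that contain semicolons or parentheses.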

Our team publishes blog(s) regularly and you can find all these blogs at https://aka.ms/synapsecseblog.

For a deeper level of understanding of Synapse implementation best practices, please refer to our Success by Design (SBD) site: https://aka.ms/Synapse-Success-By-Design
