Azure Stack HCI - Network configuration design with SDN

Software-defined networking (SDN) provides a way to centrally configure and manage networks and network services such as switching, routing, and load balancing in your datacenter. You can use SDN to create, secure, and connect your network in a variety of ways so that it adapts to the changing demands of your apps. Managing massive datacenter networks for services like Microsoft Azure, which performs tens of thousands of network changes per day, is viable only because of SDN.

This article describes how SDN should be configured in Azure Stack HCI and highlights some important considerations. The following Azure Stack HCI features must be deployed on each node before SDN is enabled:

- Network ATC

- Data Center Bridging (DCB)

- Failover Clustering

- Hyper-V

Network ATC helps direct traffic to the appropriate NIC. You must set up the appropriate intents and identify the adapters and VLANs associated with them. Adapters must have the same name on each node; this is required for any new adapters to join the network immediately and route traffic to the appropriate node. For details, see https://learn.microsoft.com/en-us/azure-stack/hci/deploy/network-atc

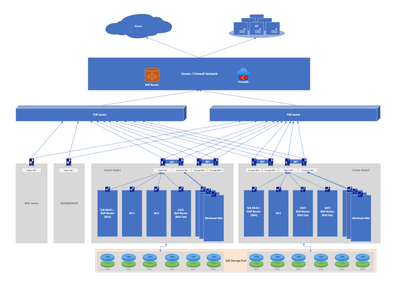

High Level Architecture (depiction of 16 node cluster)

Low Level Architecture (depiction of 2 node cluster)

Let's look more closely at each network in the SDN architecture for Azure Stack HCI.

Management Network

The management servers (WAC, AD/DNS/DHCP), the SDN control plane VMs (Network Controllers, SLB Muxes, Gateways), and all physical nodes in the HCI cluster should be connected to this network. One network adapter should be set aside on each of these systems specifically for this network. The estimated number of IP addresses for this network is therefore:

| Servers | Instances | IPs |
| --- | --- | --- |
| Cluster nodes | 16 | 16 |
| SDN SLB | 2 - 3 | 3 |
| SDN NC | 3 | 3 |
| SDN GW | 3 - 10 | 10 |
| Mgmt | 4 | 4 |
| | Total | 36 |

A /26 CIDR block, which provides 62 usable addresses, is therefore a suitable size.
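The sizing above can be reproduced with a short calculation. This is a minimal sketch, assuming the worst-case instance counts from the table and the usual IPv4 convention that the network and broadcast addresses are not assignable; the names `management_ips` and `smallest_prefix` are illustrative only.

```python
# Worst-case IP counts per role, taken from the table above.
management_ips = {
    "Cluster nodes": 16,
    "SDN SLB": 3,
    "SDN NC": 3,
    "SDN GW": 10,
    "Mgmt": 4,
}


def smallest_prefix(host_count: int) -> int:
    """Return the longest IPv4 prefix length that still fits host_count hosts.

    Two addresses per subnet (network and broadcast) are treated as reserved.
    """
    needed = host_count + 2
    host_bits = (needed - 1).bit_length()  # smallest n with 2**n >= needed
    return 32 - host_bits


total = sum(management_ips.values())
prefix = smallest_prefix(total)
usable = 2 ** (32 - prefix) - 2
print(f"{total} IPs needed -> /{prefix} ({usable} usable addresses)")
# prints: 36 IPs needed -> /26 (62 usable addresses)
```

A /27 would offer only 30 usable addresses, which is why the estimate lands on /26 and leaves headroom for additional management systems.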

Compute Network

All workload VMs running in the HCI cluster have IPs from the compute network. The number of IPs is determined by the applications and workloads in the cluster, and the addresses should be provided through VNets.

We can add both the compute and management networks to the same virtual switch (SET vSwitch). Alternatively, we can keep them in separate intents. A virtual switch must have the Virtual Filtering Platform (VFP) extension enabled to be compatible with SLB. The Windows Admin Center deployment wizard, the System Center Virtual Machine Manager (SCVMM) deployment, and the SDN deployment PowerShell scripts all do this automatically.

HNV PA Network

The Hyper-V Network Virtualization (HNV) Provider Address (PA) network serves as the underlying physical network for East/West (internal-internal) tenant traffic and North/South (external-internal) tenant traffic, and is used to exchange BGP peering information with the physical network. This network is required only when virtual networks are deployed with VXLAN encapsulation for an additional layer of isolation and for network multitenancy.

NC assigns the PA IPs to the SDN VMs. Every cluster node receives two IP addresses from the PA network, while each GW/Mux instance receives one. With 16 nodes, 5 GW instances, and 2 Muxes, we therefore need at least 39 IP addresses (16 × 2 + 5 + 2) and can configure a /26 CIDR.
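The PA address count can be checked against a candidate subnet with Python's standard `ipaddress` module. This is a sketch only: the subnet `10.10.56.0/26` and the helper `pa_ips_required` are hypothetical and stand in for whatever range your environment uses.

```python
import ipaddress


def pa_ips_required(nodes: int, gateways: int, muxes: int) -> int:
    """Two PA addresses per cluster node, one per gateway or Mux instance."""
    return nodes * 2 + gateways + muxes


# Hypothetical PA subnet, used for illustration only.
pa_subnet = ipaddress.ip_network("10.10.56.0/26")

required = pa_ips_required(nodes=16, gateways=5, muxes=2)
usable = pa_subnet.num_addresses - 2  # exclude network and broadcast addresses
print(f"required={required}, usable={usable}, fits={required <= usable}")
# prints: required=39, usable=62, fits=True
```

Re-running the check with the maximum number of gateway instances you expect is a quick way to confirm the subnet will not need to be resized later.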

PA IPs are assigned to the nodes, GWs, and Muxes in separate compartments of the network adapter. Run ipconfig /allcompartments to check the IP addresses on these systems.

When an ILB is created from the PrivateVIP pool, one IP is assigned to it; this IP is not visible to users or on the LB. It is used for communicating with SLB and is assigned to a compartment on the Mux.

The BGP routers managed by SLB and GW should connect to the external routers (BGP peers) on a different VLAN. Both VLANs require a publicly routable /29 network.

SLB also needs a public VIP range and a private VIP range to provide IPs for LB front ends. SLB registers these IP ranges with NC, and NC allocates from them when creating public IPs for NICs and front ends for LBs. The SLB installation process creates three logical networks (PublicVIP, PrivateVIP, and HNVPA). A logical network is a VLAN-based network; it differs from a VNet, which is used for compute resources.

Three types of GW connections are available (GRE, IPsec, and L3). They must be created in a gateway subnet of your VNet. That subnet can use a /29 prefix, since it covers the maximum number of GW instances with some additional buffer.

Storage Network

The storage networks operate in different IP subnets. By default, each storage network uses one of the VLANs predefined by ATC (711 and 712); these VLANs can be customized if necessary. If the default subnet defined by ATC isn't usable, you're responsible for assigning all storage IP addresses in the cluster. Since each node has two storage NICs and each NIC is in a different network (with a different VLAN ID), we can use a /27 CIDR for each storage network and configure both under the same ATC intent.
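If you do have to assign the storage addresses yourself, a small script can generate a consistent per-node plan. The following is a sketch under stated assumptions: the two /27 subnets and the `nodeNN` naming are hypothetical, and only the VLAN IDs (711 and 712) come from the text above.

```python
import ipaddress
from itertools import islice

# Hypothetical /27 subnets, keyed by the default storage VLAN IDs.
storage_networks = {
    711: ipaddress.ip_network("10.71.1.0/27"),
    712: ipaddress.ip_network("10.71.2.0/27"),
}


def storage_plan(node_count: int) -> dict:
    """Map each node name to one address per storage VLAN."""
    plan = {}
    for vlan, subnet in storage_networks.items():
        hosts = list(islice(subnet.hosts(), node_count))
        if len(hosts) < node_count:
            raise ValueError(f"VLAN {vlan}: {subnet} is too small for {node_count} nodes")
        for index, address in enumerate(hosts, start=1):
            plan.setdefault(f"node{index:02d}", {})[vlan] = str(address)
    return plan


plan = storage_plan(16)
print(plan["node01"])  # {711: '10.71.1.1', 712: '10.71.2.1'}
print(plan["node16"])  # {711: '10.71.1.16', 712: '10.71.2.16'}
```

A /27 provides 30 usable addresses, so a 16-node cluster fits in each storage network with room to spare; the function raises an error if the node count exceeds what the subnet can hold.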

Architecture elements for the gateway

SDN provides three types of gateway connections: Site-to-Site IPsec VPN, Site-to-Site GRE tunnel, and Layer 3 routing. Below are a few important facts about L3 connections.

Because L3 connections serve several virtual networks, the SDN gateway uses a distinct compartment for each connection to ensure isolation.

Each SDN gateway compartment has one interface in the virtual network space and one interface in the physical network space.

On the physical network, each L3 connection must map to a different VLAN. The HNV provider VLAN, which serves as the underlying physical network for data forwarding for virtualized network traffic, must be distinct from this VLAN.

To reach the L3 GW private IP, the external BGP router must be configured with a static /32 route that uses the L3 GW BGP router IP as the next hop. Instead of a /32 route, you can also use the gateway subnet prefix. Additionally, the external BGP router must be configured with the L3 GW private IP as its BGP peer.
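Before configuring the external router, it can help to confirm that the planned static route really covers the L3 GW private IP. The sketch below uses Python's `ipaddress` module; all addresses and the helper name `route_covers` are hypothetical.

```python
import ipaddress


def route_covers(route_prefix: str, gateway_ip: str) -> bool:
    """True when gateway_ip falls inside the static route's destination prefix."""
    return ipaddress.ip_address(gateway_ip) in ipaddress.ip_network(route_prefix)


# Hypothetical L3 GW private IP, for illustration only.
l3_gw_private_ip = "10.20.0.4"

print(route_covers("10.20.0.4/32", l3_gw_private_ip))  # host route: True
print(route_covers("10.20.0.0/29", l3_gw_private_ip))  # gateway subnet prefix: True
print(route_covers("10.20.0.8/29", l3_gw_private_ip))  # neighbouring subnet: False
```

Either a /32 host route or the gateway subnet prefix passes the check, which matches the two options described above.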

Once the L3 connection is created and in the connected state, each VNet that needs to use this gateway must have the BGP router (L3 BGP router IP), the BGP peer (external BGP router IP), and the ASNs set correctly.

Important aspects of SDN for deployment and operations

- Currently, only static IPs can be allocated to VMs from a VNet using PowerShell/WAC. Dynamic IP allocation is on the roadmap and should be available by the end of the current year.

- A VM's NIC can be associated with a public IP address, but at present only PowerShell supports this; WAC does not offer the option. The feature is expected to be available in WAC in the future.

- To connect from an external BGP router to the SLB/GW BGP peer, BGP multihop must be enabled. Otherwise, the connection will not be established or routes will not be advertised to the BGP peer.

- SLB outbound rules are currently the only means of enabling outgoing NAT. There is no native NAT Gateway service yet.

- We must set up at least two GW instances. If you configure just one GW instance, it will be in an uninitialized state and not be active.

- Even if we specify a static IP, the BGP router IP for the GW (local IP) is always chosen dynamically. If the external router peers with the static IP we supplied but the GW actually obtained a different dynamic IP, the BGP connection is not established. We must therefore confirm the IP actually assigned to the L3 connection and configure the peer on the external router accordingly. Also make sure the external router has a static route to the gateway subnet.

- We must manually set the Dhcpoptions attribute on the VNet for the DNS server because WAC cannot configure DNS for the VNet. Otherwise, applications like AKS HCI will encounter a DNS error and fail. A fix is on the roadmap. In the meantime, the attribute can be set with PowerShell at any time after the VNet has been created.

- Multiple GWs can currently be created in the same VNet. The GW instance's compartment receives the IP address designated for that GW from the gateway subnet. If all GWs belong to the same VNet, they are assigned to the same compartment of the GW VM; otherwise, they are assigned to different compartments.

- The sdnexpress script can be used to scale up the GW/Mux VMs only. Before scaling back, current traffic and bandwidth utilization must be verified, either automatically or manually; otherwise, existing connections would fail.

- By default, all clusters use the same MAC address range. We can set a different pool if two clusters communicate with each other. If communication is with an external party, the customer must find a way to identify a unique pool.
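For the MAC address pool point above, a quick overlap check between the pools of two clusters can catch conflicts before they cause trouble. This is a minimal sketch; the pool values and function names are hypothetical and do not reflect the actual default range.

```python
def mac_to_int(mac: str) -> int:
    """Convert a MAC address such as 00-11-22-00-00-00 to an integer."""
    return int(mac.replace("-", "").replace(":", ""), 16)


def pools_overlap(pool_a: tuple, pool_b: tuple) -> bool:
    """Each pool is a (start, end) pair of MAC addresses, inclusive."""
    a_start, a_end = (mac_to_int(mac) for mac in pool_a)
    b_start, b_end = (mac_to_int(mac) for mac in pool_b)
    return a_start <= b_end and b_start <= a_end


# Hypothetical pools for two clusters that need to communicate.
cluster1 = ("00-11-22-00-00-00", "00-11-22-00-0F-FF")
cluster2 = ("00-11-22-00-08-00", "00-11-22-00-17-FF")
cluster3 = ("00-11-22-00-10-00", "00-11-22-00-1F-FF")

print(pools_overlap(cluster1, cluster2))  # True: the ranges share addresses
print(pools_overlap(cluster1, cluster3))  # False: the ranges are disjoint
```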

Conclusion:

SDN configuration is certainly somewhat complex, but when configured correctly it is stable and very capable. See the following link for more details on SDN for Azure Stack HCI.

Ref: https://learn.microsoft.com/en-us/azure-stack/hci/concepts/software-defined-networking
