Automated HPC/AI compute node health checks integrated with the SLURM scheduler

Overview

It is best practice to run health checks on compute nodes before running jobs; this is especially important for tightly coupled HPC/AI applications. Virtual machines that fail the health checks should not be used to run the job; they can either be deallocated or off-lined by the scheduler. Ideally, running the health checks and deciding whether to off-line or on-line the virtual machines should be automated. In this post we give an example of how to automate health checks on ND96asr_v4 (A100) virtual machines by integrating the Lawrence Berkeley National Laboratory (LBNL) Node Health Check (NHC) framework with a SLURM scheduler (deployed via CycleCloud). Some additional GPU-specific health checks have been added to the NHC framework. This example could easily be extended to support other schedulers, such as PBS and LSF, and other Azure specialty SKUs, such as the NDv2, NCv3, HBv3, HBv2 and HC series. NHC performs health checks on individual nodes; multi-node health checks can also be useful, and some examples can be found here.

Installation and configuration of NHC with CycleCloud and SLURM

Scripts and a CycleCloud cluster-init project to install and configure NHC with CycleCloud and SLURM are located here.

Prerequisites

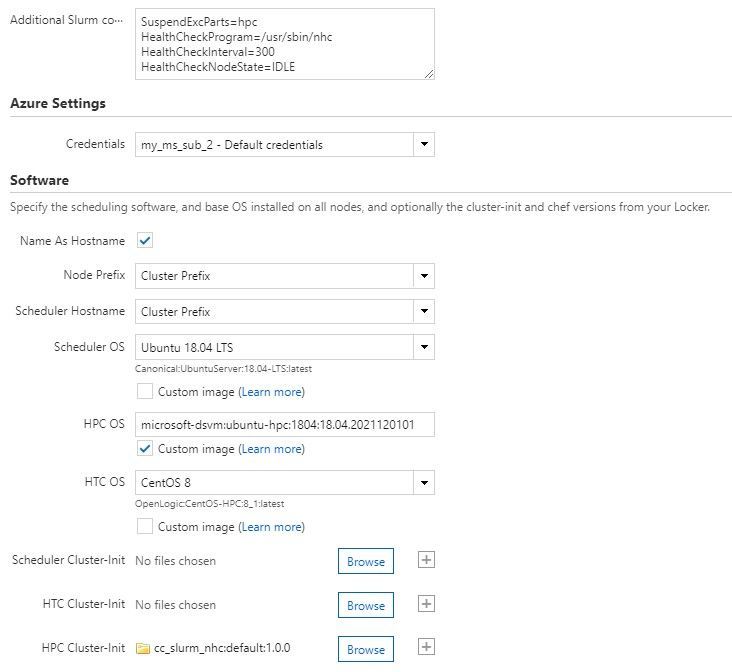

In the CycleCloud portal, edit your cluster and go to Advanced Settings. Select the cc_slurm_nhc cluster-init project for the compute nodes, and add the following SLURM options to your slurm.conf using the Additional slurm conf text box.

SLURM options

SuspendExcParts=hpc : Disables SLURM autoscaling for the hpc partition (its nodes are excluded from SLURM power saving). When autoscaling is disabled, unhealthy virtual machines are put into a DRAIN state (i.e., any job currently running on the node can complete, but no new jobs will be scheduled on the node) and remain allocated until the health-check error is resolved or the virtual machine is manually deleted. If autoscaling is enabled, an unhealthy node is put into a DRAIN state and automatically deallocated.

HealthCheckProgram=/usr/sbin/nhc : Path to the health-check program. In our case, it is NHC.

HealthCheckInterval=300 : The interval, in seconds, at which the SLURM slurmd daemon runs the health-check program.

HealthCheckNodeState=IDLE : Defines the node states on which the health checks will run. IDLE runs the health checks only on IDLE nodes (i.e., not on nodes with running jobs); ALLOC runs them on nodes in the ALLOC state (i.e., nodes with running jobs); MIXED runs them on nodes in the MIXED state (i.e., nodes that have jobs which are not using all cores); ANY runs them on nodes in any state; and CYCLE, which can be combined with the other options, runs the health checks in batches across the nodes over the course of the HealthCheckInterval (rather than on all the nodes at the same time).
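The state filter that HealthCheckNodeState applies can be modeled in a few lines of Python. This is a hypothetical sketch, not SLURM code: the helper names are made up, it only covers the values described above (SLURM accepts others), and it assumes that CYCLE on its own behaves like ANY.

```python
from __future__ import annotations

# HealthCheckNodeState values covered in this post (SLURM itself accepts more).
KNOWN_FLAGS = {"IDLE", "ALLOC", "MIXED", "ANY", "CYCLE"}


def parse_health_check_node_state(value: str) -> set[str]:
    """Split a comma-separated HealthCheckNodeState value into upper-case flags."""
    flags = {part.strip().upper() for part in value.split(",") if part.strip()}
    unknown = flags - KNOWN_FLAGS
    if unknown:
        raise ValueError(f"unsupported HealthCheckNodeState flag(s): {sorted(unknown)}")
    return flags


def should_run_health_check(node_state: str, option_value: str) -> bool:
    """Return True if a node in node_state would be health-checked.

    CYCLE only changes *when* nodes are checked (in batches over the
    HealthCheckInterval), so it is ignored for the state filter. If no
    state flag remains, this sketch assumes ANY.
    """
    state_flags = parse_health_check_node_state(option_value) - {"CYCLE"}
    if not state_flags or "ANY" in state_flags:
        return True
    return node_state.upper() in state_flags


# With HealthCheckNodeState=IDLE, only idle nodes are checked.
print(should_run_health_check("IDLE", "IDLE"))   # True
print(should_run_health_check("ALLOC", "IDLE"))  # False
```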

Note that SLURM imposes a 60-second time limit on the health-check program; if the program exceeds this limit, it is killed. This prevents the slurmd daemon from being blocked. NHC can work around this limit by forking the health checks (see the DETACHED_MODE option below).
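The idea behind detached mode can be sketched in Python. This is a hypothetical illustration, not NHC's implementation (NHC is written in bash): each call reports the exit code saved by the previous run, then starts the checks in the background and returns immediately, so the caller is never held up by a slow check. The single status file and the helper names are assumptions made for the sketch.

```python
from __future__ import annotations

import shlex
import subprocess
from pathlib import Path


def read_previous_status(status_file: Path) -> int | None:
    """Return the exit code saved by the last background run, or None."""
    try:
        return int(status_file.read_text().strip())
    except (FileNotFoundError, ValueError):
        # No previous run yet, or a run is still writing its result.
        return None


def run_detached(check_cmd: list[str], status_file: Path) -> int:
    """Report the previous result, then start a new run without waiting for it."""
    previous = read_previous_status(status_file)
    # Run the checks in a new session; the shell records their exit code when done.
    script = f"{shlex.join(check_cmd)}; echo $? > {shlex.quote(str(status_file))}"
    subprocess.Popen(
        ["sh", "-c", script],
        start_new_session=True,
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    # Treat "no result yet" as healthy; a failure is reported on the next call.
    return 0 if previous is None else previous
```

In this sketch a failure is only reported at the next interval; that delay is the trade-off for never blocking the caller.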

If you change any of these SLURM options after deployment, you will need to restart slurmctld (on the scheduler) and slurmd (on the compute nodes).

NHC options

Global configuration options are set in the file /etc/default/nhc.

TIMEOUT=300 : Time, in seconds, within which the NHC health checks must complete. If the checks exceed this time, NHC is killed.

VERBOSE=1 : If set to 1, /var/log/nhc.log will contain more verbose output; if set to 0, the output will be minimized.

DEBUG=0 : If set to 1, additional debug output is written to /var/log/nhc.log. If set to 0, no debug output is written.

DETACHED_MODE=1 : If set to 1, the NHC program forks immediately and control returns to slurmd at once, so slurmd is not blocked and can continue with other tasks. Check /var/run/nhc/* to see whether any errors occurred; in detached mode, NHC reads this results file first, before proceeding with the checks.

NVIDIA_HEALTHMON=dcgmi : Defines which GPU health-check program is run when check_nv_healthmon is specified in the nhc.conf file (the file that defines which health checks to run).

NVIDIA_HEALTHMON_ARGS="diag -r 2" : Defines the arguments passed to the NVIDIA_HEALTHMON program.
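Since /etc/default/nhc is a shell-style KEY=VALUE file, the settings above can be read with Python's shlex module, which approximates bash quoting (it performs no variable expansion). The sketch below, with hypothetical helper names, also shows the command line that NVIDIA_HEALTHMON and NVIDIA_HEALTHMON_ARGS combine into.

```python
import shlex


def parse_nhc_defaults(text: str) -> dict:
    """Parse KEY=VALUE lines, honoring shell-style quotes and # comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment
        # shlex removes the quotes around values such as "diag -r 2".
        settings[key.strip()] = " ".join(shlex.split(value, comments=True))
    return settings


def healthmon_command(settings: dict) -> list:
    """Build the GPU health-check command line (raises KeyError if unset)."""
    program = settings["NVIDIA_HEALTHMON"]
    return [program] + shlex.split(settings.get("NVIDIA_HEALTHMON_ARGS", ""))


defaults = parse_nhc_defaults(
    'TIMEOUT=300\nDETACHED_MODE=1\nNVIDIA_HEALTHMON=dcgmi\nNVIDIA_HEALTHMON_ARGS="diag -r 2"\n'
)
print(healthmon_command(defaults))  # ['dcgmi', 'diag', '-r', '2']
```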

The nhc.conf file (/etc/nhc/nhc.conf) defines what health checks to run on each node. Some of the above global NHC options can be defined directly in this file.

The format of each line in the nhc.conf file is

<pattern of hostname to run test on> || <check_name_of_test> <test_args>

For example, to check the InfiniBand interface ib0 on all hosts (the * pattern):

* || check_hw_eth ib0
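To illustrate how the host pattern selects checks, here is a minimal Python sketch that reads nhc.conf-style text and returns the checks that apply to a given hostname. It is an approximation under stated assumptions: it handles only shell-style glob patterns (NHC's own matcher supports more pattern forms), and the host names in the sample configuration are hypothetical.

```python
from fnmatch import fnmatchcase


def checks_for_host(conf_text: str, hostname: str) -> list:
    """Return the checks from nhc.conf text whose host pattern matches hostname."""
    checks = []
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        pattern, sep, check = line.partition("||")
        if not sep:
            continue  # not a "<pattern> || <check>" line
        if fnmatchcase(hostname, pattern.strip()):
            checks.append(check.strip())
    return checks


conf = """
# pattern  || check
*          || check_hw_eth ib0
hpc-*      || check_ps_loadavg 192
login-*    || check_ps_service -S -u root sshd
"""
print(checks_for_host(conf, "hpc-pg0-1"))
# ['check_hw_eth ib0', 'check_ps_loadavg 192']
```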

What health check tests does NHC perform?

NHC supports many types of health checks; consult the NHC documentation for a complete list. NHC is written in bash, so additional tests can easily be added to the framework. For the ND96asr_v4 (A100) virtual machine, the following additional tests were added.

- Check that the GPU application clocks are set to their maximum frequencies; if not, attempt to set them. (check_app_gpu_clocks)

- Measure the CUDA host-to-device and device-to-host bandwidth and compare it with the expected bandwidth. (check_cuda_bw)

- Check that GPU persistence mode is enabled; if not, attempt to enable it. (check_gpu_persistence)

- Check for ECC errors, including pending row remaps, row remap errors and very high uncorrectable error counts. (check_gpu_ecc)
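The comparison step of a check like check_cuda_bw can be sketched as follows. This is not the bash check added to NHC; it assumes the bandwidths have already been measured and that the check's single argument (22.5 in the example log later in this post) is a minimum bandwidth in GB/s applied to both directions. The measurements below are made up.

```python
from typing import Iterable, List, Tuple

# (gpu_index, direction, measured bandwidth in GB/s); direction is "htod" or "dtoh".
Measurement = Tuple[int, str, float]


def cuda_bw_failures(measurements: Iterable[Measurement], expected_gbps: float) -> List[str]:
    """Return one message per transfer that is slower than the expected bandwidth."""
    failures = []
    for gpu, direction, gbps in measurements:
        if gbps < expected_gbps:
            failures.append(
                f"GPU {gpu} {direction}: {gbps:.1f} GB/s < expected {expected_gbps:.1f} GB/s"
            )
    return failures


# Hypothetical measurements; only GPU 1 host-to-device is too slow.
print(cuda_bw_failures([(0, "htod", 24.1), (0, "dtoh", 24.6), (1, "htod", 12.3)], 22.5))
# ['GPU 1 htod: 12.3 GB/s < expected 22.5 GB/s']
```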

By default, NHC looks for a configuration file named nhc.conf in /etc/nhc to define which tests to run.

The included nd96asr_v4.conf file runs the following health checks.

- Check all mounted filesystems (including shared filesystems and local NVMe SSD)

- Check if filesystems are nearly full (OS disk, shared filesystems and local NVMe SSD)

- Check all IB interfaces

- Check ethernet interfaces

- Check for high load averages

- Check that key process daemons are running

- Check GPU persistence mode; if it is disabled, attempt to enable it

- NVIDIA Data Center GPU Manager (DCGM) diagnostics, dcgmi diag -r 2 (medium test)

- CUDA bandwidth tests (dtoh and htod)

- Basic GPU checks, such as a lost GPU

- Check application GPU clock frequencies

- Check GPU ECC errors

- IB bandwidth test using ib_write_bw, on NDv4 (4 pairs of tests)

- NCCL all-reduce IB loopback test
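Two of the simpler checks in this list, filesystems that are nearly full and high load, reduce to threshold comparisons. The Python sketch below illustrates the idea with the standard library; it is not NHC's bash implementation, and the function names are hypothetical. The 90% limit is an illustrative assumption, and 192 mirrors the check_ps_loadavg 192 entry in the example log later in this post. Note that used/total can read slightly lower than df's Use% on filesystems with reserved blocks.

```python
import os
import shutil
from typing import Optional


def check_fs_percent_used(path: str, max_percent: float) -> Optional[str]:
    """Return an error message if the filesystem holding path is too full."""
    usage = shutil.disk_usage(path)
    percent = 100.0 * usage.used / usage.total
    if percent > max_percent:
        return f"{path} is {percent:.0f}% full (limit {max_percent:.0f}%)"
    return None


def check_load_average(max_load: float, load: Optional[float] = None) -> Optional[str]:
    """Return an error message if the 1-minute load average exceeds max_load."""
    one_minute = os.getloadavg()[0] if load is None else load
    if one_minute > max_load:
        return f"1-minute load average {one_minute:.1f} exceeds {max_load:.1f}"
    return None


# Check the OS disk and the current load on this machine.
for problem in (check_fs_percent_used("/", 90), check_load_average(192)):
    print(problem or "ok")
```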

The NHC environment also provides a script called nhc-genconf that, by default, generates an NHC configuration file, /etc/nhc/nhc.conf.auto, by probing the node it is running on.

Run ./nhc-genconf -h to see all options.

This is very useful when you want to create an nhc.conf for a new node: nhc-genconf creates a starting configuration file that you can edit and use.
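As a toy illustration of what probing the node means, the hypothetical Python helper below lists the local network interfaces and emits one check_hw_eth line per interface in the nhc.conf format described earlier. The real nhc-genconf probes far more than network interfaces, and its output layout may differ from this sketch.

```python
import socket
from typing import Iterable, List, Optional


def genconf_eth_lines(interfaces: Optional[Iterable[str]] = None, pattern: str = "*") -> List[str]:
    """Emit one nhc.conf check_hw_eth line per network interface, sorted by name."""
    if interfaces is None:
        # Probe the local node's network interfaces (Unix only).
        interfaces = [name for _index, name in socket.if_nameindex()]
    return [f"{pattern} || check_hw_eth {name}" for name in sorted(interfaces)]


for line in genconf_eth_lines():
    print(line)
```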

Example usage

NHC was installed and tested on ND96asr_v4 virtual machines running Ubuntu-HPC 18.04, managed by a CycleCloud SLURM scheduler.

In this example, autoscaling is disabled, so unhealthy nodes will be put into a DRAIN state and remain allocated until some action is taken. With the SLURM options shown above, the NHC health checks run every 300 seconds on IDLE nodes only (i.e., not on any nodes with running SLURM jobs).

If all the NHC node health checks pass, /var/log/nhc.log will look like the following.

...
Running check: "check_hw_ib 200 mlx5_ib5:1"
Running check: "check_hw_ib 200 mlx5_ib6:1"
Running check: "check_hw_ib 200 mlx5_ib7:1"
Running check: "check_hw_eth ib7"
Running check: "check_hw_eth ib3"
Running check: "check_hw_eth docker0"
Running check: "check_hw_eth lo"
Running check: "check_hw_eth ib6"
Running check: "check_hw_eth ib5"
Running check: "check_hw_eth ib4"
Running check: "check_hw_eth ib0"
Running check: "check_hw_eth ib2"
Running check: "check_hw_eth eth0"
Running check: "check_hw_eth ib1"
Running check: "check_ps_loadavg 192"
Running check: "check_ps_service -S -u root sshd"
Running check: "check_ps_service -r -d rpc.statd nfslock"
Running check: "check_nvsmi_healthmon"
Running check: "check_gpu_persistence"
Running check: "check_nv_healthmon"
Running check: "check_cuda_bw 22.5"
Running check: "check_app_gpu_clocks"
Running check: "check_gpu_ecc 5000"
20220201 22:04:22 [slurm] /usr/lib/nhc/node-mark-online hpc-pg0-1
/usr/lib/nhc/node-mark-online: Node hpc-pg0-1 is already online.
/usr/lib/nhc/node-mark-online: Skipping idle node hpc-pg0-1 (none )
Node Health Check completed successfully (185s).

Now let's see what happens when one of the health checks fails (e.g., the local NVMe SSD is not mounted):

Running check: "check_fs_mount_rw -t "tracefs" -s "tracefs" -f "/sys/kernel/debug/tracing""
Running check: "check_fs_mount_rw -t "nfs4" -s "10.2.4.4:/sched" -f "/sched""
Running check: "check_fs_mount_rw -t "nfs" -s "10.2.8.4:/anfvol1" -f "/shared""
Running check: "check_fs_mount_rw -t "xfs" -s "/dev/md128" -f "/mnt/resource_nvme""
ERROR: nhc: Health check failed: check_fs_mount: /mnt/resource_nvme not mounted
20220201 22:11:17 /usr/lib/nhc/node-mark-offline hpc-pg0-1 check_fs_mount: /mnt/resource_nvme not mounted
/usr/lib/nhc/node-mark-offline: Marking idle hpc-pg0-1 offline: NHC: check_fs_mount: /mnt/resource_nvme not mounted
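Because each NHC log message follows a regular pattern, a run can be summarized programmatically, for example to feed a dashboard. The Python sketch below is hypothetical: it assumes one message per line (as written to /var/log/nhc.log) and that the text covers a single run, and it only recognizes the three message types shown in the examples above.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

CHECK_PREFIX = 'Running check: "'
FAILED_RE = re.compile(r"ERROR: nhc: Health check failed: (.+)$")
PASSED_RE = re.compile(r"Node Health Check completed successfully \((\d+)s\)")


@dataclass
class NhcRun:
    checks: List[str] = field(default_factory=list)  # checks in the order they ran
    failure: Optional[str] = None                     # reason, if a check failed
    duration_s: Optional[int] = None                  # set only on success


def parse_nhc_log(text: str) -> NhcRun:
    """Summarize one NHC run from log text with one message per line."""
    run = NhcRun()
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith(CHECK_PREFIX) and line.endswith('"'):
            # Strip only the outer quotes; check arguments may contain quotes too.
            run.checks.append(line[len(CHECK_PREFIX):-1])
            continue
        failed = FAILED_RE.search(line)
        if failed:
            run.failure = failed.group(1)
            continue
        passed = PASSED_RE.search(line)
        if passed:
            run.duration_s = int(passed.group(1))
    return run


log = '''Running check: "check_hw_eth ib0"
Running check: "check_fs_mount_rw -t "xfs" -s "/dev/md128" -f "/mnt/resource_nvme""
ERROR: nhc: Health check failed: check_fs_mount: /mnt/resource_nvme not mounted'''
print(parse_nhc_log(log).failure)  # check_fs_mount: /mnt/resource_nvme not mounted
```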

We see that node hpc-pg0-1 is put into a DRAIN state.

NHC also gave a clear reason why the node was put into a DRAIN state.

Once the local NVMe SSD is mounted again, NHC automatically returns node hpc-pg0-1 to the IDLE state, ready to accept SLURM jobs again.

Note: If a node was put into a DRAIN state by something other than NHC, NHC will not change the state of the node (even if all the health checks pass). This default behavior can be changed.
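The on-lining and off-lining behavior described above can be summarized as a small decision function. This is a simplified Python sketch, not the logic of NHC's node-mark-online and node-mark-offline scripts, and the function name is hypothetical: it assumes that drains made by NHC carry a reason starting with "NHC:" (as in the off-lining message in the log above), and it ignores details such as updating the reason on an already-drained node.

```python
def nhc_node_action(checks_passed: bool, drained: bool, drain_reason: str = "") -> str:
    """Return "offline", "online" or "none" for one node after an NHC run.

    Assumption: drains made by NHC carry a reason starting with "NHC:".
    """
    drained_by_nhc = drained and drain_reason.startswith("NHC:")
    if not checks_passed:
        # A failing node that is already drained is left drained.
        return "none" if drained else "offline"
    if drained_by_nhc:
        return "online"  # the problem NHC reported has gone away
    return "none"  # healthy and not drained, or drained by someone else


print(nhc_node_action(True, True, "maintenance"))  # none
```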

You can also run all the NHC checks manually, for example with off-lining of nodes disabled and the report printed to STDOUT.

Conclusion

It is best practice to run health checks on HPC specialty virtual machines, especially before running large, tightly coupled HPC/AI applications. Health checks are most effective when they are automated and integrated with a job scheduler that takes action on any unhealthy nodes they identify. This post demonstrated an example of integrating the LBNL NHC framework with a CycleCloud SLURM scheduler for NDv4 (A100) virtual machines running Ubuntu-HPC 18.04.
