Best Practice for Running Cadence Spectre X on Microsoft Azure

Co-authors: Richard Paw and Andy Chan

Electronic Design Automation (EDA) consists of a set of software tools and workflows for designing semiconductor products, most notably advanced computer chips. Given today's rapid pace of innovation, there is growing demand for higher performance, smaller chip sizes, and lower power consumption. To meet this demand, EDA tools require multiple nodes and many CPUs (cores) in a cluster. A high-performance network and a centralized file system support this multi-node cluster, ensuring that all of its components act as a single unified whole to provide both consistency and scale-out performance.

The cloud's ability to give EDA engineers access to massive computing capacity has been widely publicized. What is less widely publicized is the high-performance networking and centralized file system infrastructure required for that compute capacity to work together as one.

In this example we use the Cadence Spectre X simulator, a leading EDA tool for solving large-scale verification simulation challenges for complex analog, RF, and mixed-signal blocks and subsystems. The Spectre X simulator lets users distribute simulation workloads across the Azure cloud and use up to thousands of CPU cores to improve performance and capacity.

Objective

In this article, we first provide performance best practices for compute nodes when running EDA simulations on Azure. We then apply those best practices to run Spectre X jobs on different Azure virtual machines (VMs) in different scenarios, including single-threaded, multi-threaded, and distributed (XDP) mode. Finally, we conduct a cost-effectiveness analysis to provide guidance on which VMs are the most suitable.

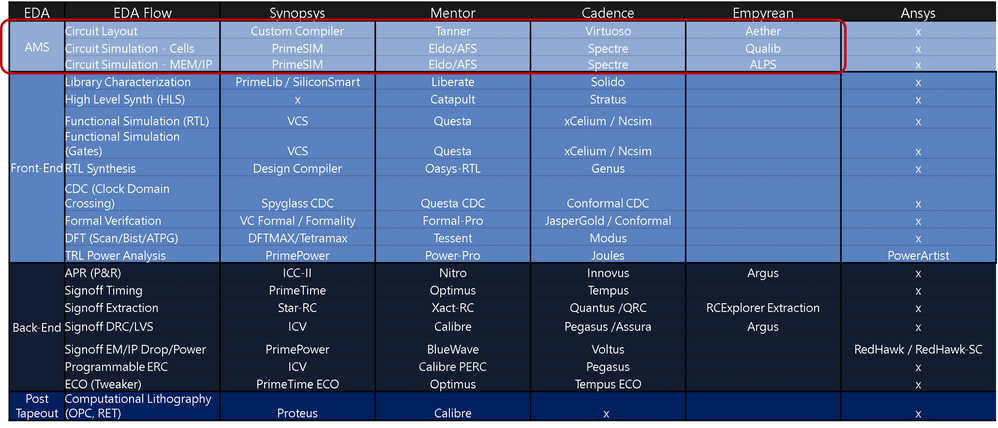

Table 1: EDA Tools landscape, with Circuit Simulation tools in red rectangle.

Best Practices for Running EDA on Azure

Below are the key configurations for best performance for Compute nodes when running EDA simulations on Azure:

- Place resources in the same Proximity Placement Group to improve networking performance between VMs.

- Disable Hyper Threading (HT) for all VMs, which boosts performance, especially for CPU-bound EDA tools like Spectre X.

- Enable Accelerated Networking by default to ensure low network latency between compute VMs and front-end storage solutions.

For a full list of best practices, please refer to the Azure for the Semiconductor industry whitepaper, which also covers using CycleCloud to help orchestrate Azure resources for HPC-type workloads.

Spectre X benchmark environment

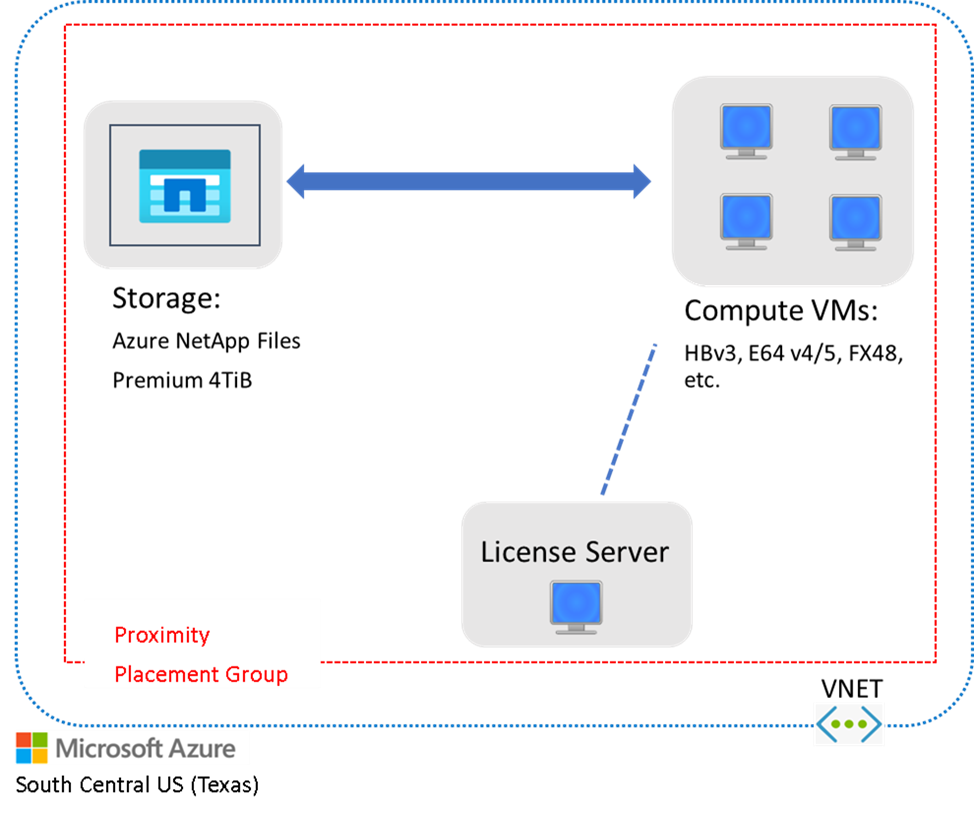

Figure 1: Spectre X benchmark environment architecture

The compute VMs, license server VM, and storage solution all reside in the same Proximity Placement Group. Network latency from the compute VMs to the license server and to storage is 0.1~0.2 milliseconds. We used Azure NetApp Files (ANF) as our Network File System (NFS) storage solution, with a Premium 4TiB volume that provides up to 256 MB/s throughput. Cadence Spectre X (version 20.10.348) is installed on that ANF volume. The test design is a representative post-layout DSPF design with 100+K circuit inventories. The design and the output files are stored on the same ANF volume as well.

Azure VMs benchmarked

Table 2 shows all the Azure VMs benchmarked for this test, along with their CPU type, memory size, and local disks. Each VM series targets a different kind of workload. For example, FX48mds is designed for a low core count and a high memory-per-core ratio (48GB per physical core), which is best for EDA back-end workloads. The M-series, with total memory of over 11TB, is suitable for large layouts or other memory-intensive jobs. HBv3 contains the AMD Milan-X with a larger L3 cache, which helps with simulation workloads. HBv3 also has a lower memory-to-core ratio and a large number of cores, making it a very good option for front-end workloads, which tend to scale out smaller jobs horizontally. Hyper Threading (HT) is a technique for splitting a single physical core (pCPU) into 2 virtual cores (vCPUs) in Azure. Because disabling HT is one of our performance best practices, we calculated the price per physical core per hour in the rightmost column for the cost-effectiveness analysis later. All prices are list prices in the Azure East US region as of March 2022.
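The per-core price described above is simple arithmetic. The following is a minimal sketch in Python, assuming 2 vCPUs per physical core on HT-capable sizes; the function names and the prices in the example are hypothetical placeholders, not figures from Table 2.

```python
def physical_cores(vcpus: int, ht_capable: bool = True) -> int:
    """Physical cores usable once HT is disabled.

    Assumes 2 vCPUs per physical core on HT-capable sizes; sizes that
    already expose one vCPU per physical core pass ht_capable=False.
    """
    if not ht_capable:
        return vcpus
    if vcpus % 2 != 0:
        raise ValueError("an HT-capable VM is expected to have an even vCPU count")
    return vcpus // 2


def price_per_physical_core_hour(vm_price_per_hour: float, vcpus: int,
                                 ht_capable: bool = True) -> float:
    """List price of the whole VM divided by its physical core count."""
    return vm_price_per_hour / physical_cores(vcpus, ht_capable)


if __name__ == "__main__":
    # Hypothetical 48-vCPU VM at a made-up price of 6.00 per hour.
    print(price_per_physical_core_hour(6.00, 48))  # 0.25
```

Dividing by physical cores rather than vCPUs keeps the comparison fair between sizes that run with HT disabled and sizes that never had it enabled.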

Resources utilization when running Spectre X

The simulations were run by varying the number of threads per job (+mt option), and the total elapsed time was retrieved from the output log files of each run. Below is an example command to run a single-threaded (+mt=1) Spectre X job.

spectre -64 +preset=cx +mt=1 input.scs -o SPECTREX_cx_1t +lqt 0 -f sst2
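To repeat the run at several thread counts, the command line can be generated rather than typed by hand. This Python sketch only assembles the command strings and does not launch the simulator. It reuses exactly the flags from the command above; the helper name and the output naming pattern (extending SPECTREX_cx_1t to other thread counts) are assumptions.

```python
import shlex
from typing import List


def spectre_command(threads: int, netlist: str = "input.scs") -> List[str]:
    """Build the argument list for one Spectre X run with the given +mt value."""
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return [
        "spectre", "-64", "+preset=cx", f"+mt={threads}", netlist,
        "-o", f"SPECTREX_cx_{threads}t",  # assumed naming, modeled on the 1-thread example
        "+lqt", "0", "-f", "sst2",
    ]


if __name__ == "__main__":
    # Thread counts used in the multithreading comparison.
    for n in (1, 2, 4, 8):
        print(shlex.join(spectre_command(n)))
```

Returning a list instead of a single string means the same arguments can later be passed to a job scheduler wrapper or to `subprocess.run` without re-parsing.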

As expected, we found that during a run the number of utilized CPUs equals the number of threads per job. Figure 2 is a screenshot showing CPU utilization staying above 95% on 16 CPUs when running a 16-threaded Spectre X job on a 44-core VM (HC44rs). We also observed low storage read/write activity (<2k IOPS), low network bandwidth utilization, and low memory utilization during the run, which indicates that Spectre X is a very compute-intensive, CPU-bound workload.

Figure 2: CPU utilization stayed above 95% on 16 CPUs when running a 16-threaded Spectre X job on a 44-core VM (HC44rs)

Benchmark results

Performance among VMs

Figure 3 shows the elapsed runtime (sec) when running a single-threaded Spectre X job on different VMs. Lower is better. HBv3 performs the best, whether it runs on a standard 120-core VM or on a constrained 16-core VM. D64 v5 and E64 v5 ranked second in the test. HBv3, D64 v5, and E64 v5 can perform 10~30% better than the same test on other clouds.

Figure 3: Elapsed runtime in seconds when running a single-threaded Spectre X job on different VMs with HT disabled. The lower the better.

Performance improves linearly for multithreaded jobs

Figure 4 shows how the elapsed time decreases when running the job with multiple threads. We observed that the elapsed time decreased linearly and consistently when running the same job with 1, 2, 4, and 8 threads across all VMs. The same job run with 8 threads can be 4~6x faster than a single-threaded run.

Figure 4: Elapsed time decreases linearly when increasing # of threads per Spectre X job.
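Speedup figures like these can be derived directly from the elapsed times in the log files. The sketch below, in Python, computes speedup and parallel efficiency from two timings; the timing values are hypothetical and chosen only to fall inside the 4~6x range reported above.

```python
def speedup(t_single: float, t_multi: float) -> float:
    """How many times faster the multithreaded run is than the single-threaded run."""
    return t_single / t_multi


def parallel_efficiency(t_single: float, t_multi: float, threads: int) -> float:
    """Speedup divided by thread count; 1.0 would be perfectly linear scaling."""
    return speedup(t_single, t_multi) / threads


if __name__ == "__main__":
    # Hypothetical elapsed times in seconds, not measured values.
    t1, t8 = 1200.0, 240.0
    print(speedup(t1, t8))                 # 5.0
    print(parallel_efficiency(t1, t8, 8))  # 0.625
```

Tracking efficiency alongside speedup is useful when license cost is tied to elapsed time, because it shows how much each additional core actually shortens the run.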

Performance improves when disabling Hyper Threading

Figure 5 shows that disabling Hyper Threading (HT) improves performance by 5~10% compared with HT enabled, especially when running a small number of threads per job.

Figure 5: Disabling Hyper Threading (HT) improves performance by 5~10% compared with HT enabled

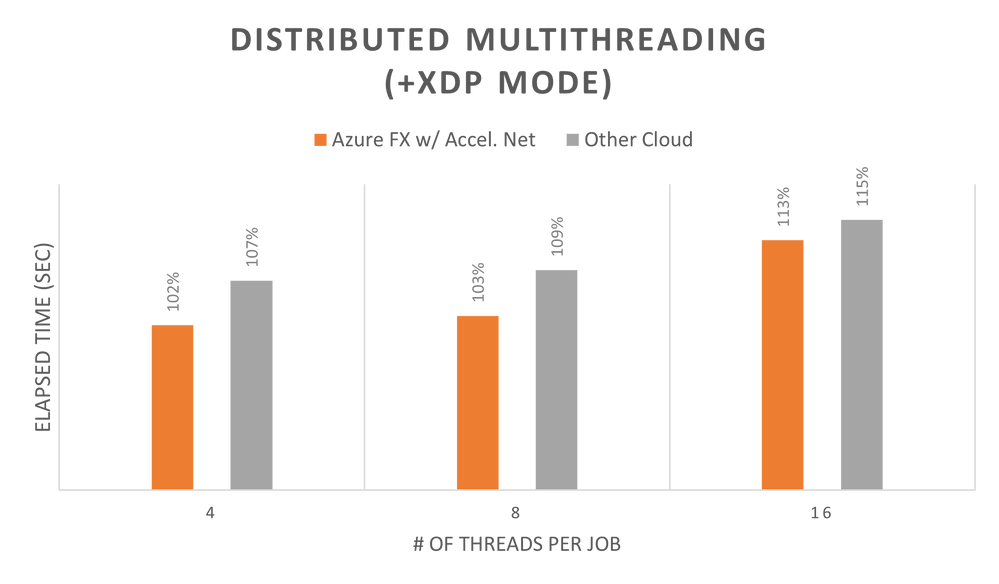

Distributed Multithreading performance relies on inter-node communication bandwidth

Cadence recommends running each Spectre X job within a single VM to avoid any inter-node communication overhead. However, Spectre X does support running a multithreaded job on cores spread across multiple VMs, which is called “distributed multithreading” (XDP mode). In this scenario, inter-node communication bandwidth is critical to performance. Cadence recommends a minimum of a 10GbE connection between VMs to reduce latency and improve overall performance.

The Azure Accelerated Networking feature, which is enabled by default for Azure VMs, provides up to 50Gbps of throughput and a 10x reduction in network latency among VMs and to front-end storage. Figure 6 shows that Spectre X can achieve up to a 5% performance boost in Azure over other clouds from the networking component alone.

Figure 6: Spectre X enjoyed up to 5% performance boost in Azure over other clouds in Distributed Multithreading scenarios

Cost-effectiveness Analysis

Figure 7 shows a preliminary cost estimate that considers only the cost of the compute VMs. The estimate assumes that 500 single-threaded jobs are submitted at the same time, so the number of VMs needed for the 500-job run follows from the number of physical cores per VM. The total elapsed time was then calculated from the results in Figure 3, multiplied by 500. The total cost of the compute VMs was calculated from that together with the cost information in Table 2.

Figure 7: A preliminary cost estimate that considers only the cost of the compute VMs, assuming 500 single-threaded jobs submitted at the same time. The total elapsed time is calculated from Figure 3 and rounded up to an integer, ignoring any interaction between jobs. The total VM cost is calculated from Table 2 and also rounded up to an integer.
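The sizing step of this estimate can be sketched in a few lines of Python. This is one possible reading of the calculation, not necessarily the exact method behind Figure 7: it assumes each single-threaded job occupies one physical core, all jobs start together, and every VM is billed for the duration of one job. The runtime and price in the example are hypothetical, not values from Figure 3 or Table 2.

```python
import math


def vms_needed(jobs: int, physical_cores_per_vm: int) -> int:
    """Whole VMs required to run every job at once, one job per physical core."""
    return math.ceil(jobs / physical_cores_per_vm)


def compute_cost(jobs: int, physical_cores_per_vm: int,
                 job_seconds: float, vm_price_per_hour: float) -> float:
    """Compute-only cost when all VMs run for the length of a single job."""
    hours = job_seconds / 3600.0
    return vms_needed(jobs, physical_cores_per_vm) * hours * vm_price_per_hour


if __name__ == "__main__":
    # 500 jobs on 120-core VMs, as in the scenario described above.
    print(vms_needed(500, 120))  # 5
    # Hypothetical 1800-second job and a made-up price of 4.00 per VM-hour.
    print(compute_cost(500, 120, 1800.0, 4.00))  # 10.0
```

Because VMs are billed whole, rounding the VM count up matters: a size with few physical cores needs many more VMs for the same batch of jobs.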

Please note that the overall cost of running EDA simulations has 2 main parts: EDA license cost and infrastructure cost (including computing, networking, storage, operations, management, etc.). In fact, EDA license cost makes up a substantial proportion of the total cost and is proportional to the elapsed time. With that in mind, HBv3 is considered the preferred Azure VM for running Spectre X simulations, not only because it has the lowest total VM cost, but also because its smallest total elapsed time would save significantly on license costs. In addition, based on our experience, HBv3 is also the most cost-effective VM for all other circuit simulation EDA tools, including Synopsys HSpice and SiliconSmart, Cadence Liberate, Mentor Eldo/AFS, and Empyrean Qualib and ALPS.

Pricing Options

We compared the pay-as-you-go prices for the VMs tested. However, other pricing models exist. Check with your company’s cloud administrator to see whether special pricing is available for specific VMs suitable for your workload. That could change which VM is the most cost-effective.

For short workloads, another pricing option which can be appropriate is Spot pricing. Spot pricing is a mechanism to provision unused Azure compute capacity at deep discounts compared to pay-as-you-go prices. Spot VM instances are ideal for workloads that can be interrupted, providing scalability while reducing costs.

Short Spectre X simulation workloads can make use of Spot pricing because these jobs can be interrupted and restarted with little overall effect on runtime, and the savings more than compensate for rerunning the jobs.

Orchestration

On-premises workloads run on systems with fixed costs. These systems cost the same regardless of whether jobs are running on them. On the cloud, however, customers are charged for systems only while they are provisioned.

When running EDA workloads on the cloud, it’s important to make sure that you can provision the VMs required and also shut them down when done. To facilitate this on the Azure cloud, Microsoft provides CycleCloud as a fully supported service. CycleCloud enables customers to assign VMs to queues managed by job schedulers such as LSF and GridEngine, which are popular in EDA, as well as PBS and SLURM. CycleCloud will provision VMs up to the limits specified by the customer as long as the job scheduler has jobs. In addition, CycleCloud will shut down and deprovision VMs after a specified amount of time with no more jobs assigned. This allows customers to automate scaling based on the jobs in the job scheduler queue.
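The scaling behavior described above boils down to two decisions: how many VMs the queue justifies, and when an idle VM should be released. The Python sketch below illustrates that logic only. It is not CycleCloud's API or configuration format; the class, function names, and parameter values are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalePolicy:
    """Hypothetical customer-specified limits for one scheduler queue."""
    cores_per_vm: int     # physical cores offered by each VM
    max_vms: int          # upper bound on provisioned VMs
    idle_timeout_s: int   # idle time after which a VM is released


def target_vm_count(queued_single_core_jobs: int, policy: ScalePolicy) -> int:
    """VMs to keep provisioned for the pending jobs, capped at the limit."""
    wanted = math.ceil(queued_single_core_jobs / policy.cores_per_vm)
    return min(policy.max_vms, wanted)


def should_deprovision(idle_seconds: int, policy: ScalePolicy) -> bool:
    """True once a VM has had no jobs assigned for the configured idle time."""
    return idle_seconds >= policy.idle_timeout_s


if __name__ == "__main__":
    policy = ScalePolicy(cores_per_vm=120, max_vms=5, idle_timeout_s=300)
    print(target_vm_count(500, policy))     # 5
    print(target_vm_count(0, policy))       # 0
    print(should_deprovision(600, policy))  # True
```

Capping the target at a customer-set limit keeps spending bounded even when the queue is very deep, while the idle timeout avoids paying for VMs once the queue drains.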

Summary

In this article, we benchmarked Spectre X jobs on different Azure VMs and exercised the best practices below:

- Place resources in the same Proximity Placement Group.

- Disable Hyper Threading (HT) for all VMs.

- Enable Accelerated Networking by default.

- Match memory per core to your job.

- Check with your cloud administrator on specific pricing.

- Consider Spot pricing.

- Use CycleCloud to manage VM orchestration.

We found that the Azure HBv3 series VM performs the best, with the D64 v5 and E64 v5 series VMs ranked second; all of them can perform 10~30% better than other clouds. We found that Spectre X performance improves linearly and consistently on Azure when running multithreaded jobs. We verified that disabling HT boosts performance by 5~10%. Spectre X also enjoyed up to a 5% performance boost in Azure over other clouds in distributed multithreading scenarios. Finally, we did a preliminary analysis and found that HB120v3 is the most cost-effective VM for Cadence Spectre X.

These results highlight how important it is to match the proper VM to your workload. Because the design we used was relatively small, we needed only a small amount of memory per core. The large number of cores that the HBv3 contains allows us to pack a large number of jobs onto a single VM and only 5 VMs are required to complete the run. The FX48, which typically outperforms most VMs on back-end workloads, does poorly in this case. Having a large memory per core ratio hurts the FX48 in this case since much of the cost of the system is from the memory. The low core count requires 24 FX48 VMs to accomplish the same job as 5 HBv3 which makes it even more costly to accomplish the same job.

Learn More

- Learn more about Azure High-Performance Compute

- Learn more about Azure HBv3 virtual machines; now upgraded
