Azure Synapse Analytics January Update 2023

Welcome to the January 2023 update for Azure Synapse Analytics! Read on for sections on Apache Spark Advisor in Azure Synapse and the new Apache Spark 3.3 runtime, as well as additional updates in Apache Spark for Synapse, Synapse Data Explorer, and Data Integration.

Table of contents

- Apache Spark for Synapse

- Synapse Data Explorer

- Cosmos DB Synapse Link to Azure Data Explorer [Public Preview]

- Apache log4j2 sink connector for Azure Data Explorer

- Ingest preexisting Event Hub events to ADX

- Multivariate Anomaly Detection in Azure Data Explorer

- ADX One Click file ingestion just became much more scalable

- ADX Dashboards enhancements: Conditional Formatting

- ADX Dashboards enhancements: Pie Chart displays

- ADX Kusto Web Explorer (KWE) JPath viewer

- Data Integration

Apache Spark for Synapse

Job and API Concurrency Limits for Apache Spark for Synapse

Azure Synapse Analytics lets you create and manage Spark pools in your workspaces, enabling key scenarios such as data engineering and data preparation, data exploration, machine learning, and streaming data processing workflows. You can create any number of Spark pools in a workspace based on your data processing requirements. These data processing workflows are orchestrated as Spark jobs, which are submitted to the selected Spark pools in the workspace.

When you submit jobs through the Jobs APIs in high-traffic scenarios, retrying at a random, constant, or exponential time interval still results in HTTP 429 failures and a high number of retries, which increases the overall time it takes for the service to accept the requests. Using the service-provided Retry-After value instead gives you a higher success rate in job submissions.
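The retry behavior described above can be sketched roughly as follows. This is a minimal illustration, not the Synapse SDK: `submit_with_retry_after` and the `send` callable are hypothetical names, `send` is assumed to return a `(status_code, headers)` pair, and the header is assumed to carry a number of seconds (the HTTP-date form of Retry-After is not handled).

```python
import time
from typing import Callable, Mapping, Tuple

# Hypothetical response shape: (HTTP status code, response headers).
Response = Tuple[int, Mapping[str, str]]


def submit_with_retry_after(
    send: Callable[[], Response],
    max_attempts: int = 5,
    default_wait_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, int]:
    """Call send() until it stops returning HTTP 429.

    Waits for the number of seconds the service asks for in the
    Retry-After header instead of a random or exponential interval.
    Returns (final status code, number of attempts made).
    """
    for attempt in range(1, max_attempts + 1):
        status, headers = send()
        if status != 429:
            return status, attempt
        if attempt == max_attempts:
            break
        # Assumption: headers is a plain mapping with this exact key casing.
        try:
            wait = float(headers.get("Retry-After"))
        except (TypeError, ValueError):
            # Header missing or not a number of seconds: use the fallback.
            wait = default_wait_seconds
        sleep(max(wait, 0.0))
    raise RuntimeError(f"still throttled after {max_attempts} attempts")
```

In real code, `send` would wrap whatever HTTP client call submits the Spark job; passing `sleep` as a parameter only makes the wait easy to replace in tests.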

You can have up to 1000 active jobs in a single workspace at any given time. Active jobs include jobs that are currently running and jobs that are queued.

To learn more about job and API concurrency limits enforced across regions, read Concurrency and API rate limits for Apache Spark pools in Azure Synapse Analytics.

Reservation of Executors as part of Dynamic Allocation

It’s often hard to size the number of executors for a Spark application because the number of executors required varies across the different stages of a Spark job's execution. With Dynamic Allocation, which can be enabled as part of the pool configuration by providing the minimum and maximum number of executors, the platform automatically allocates executors for the Spark application. As part of dynamic allocation, the platform reserves a set of executors for every Spark application submitted, to support successful autoscale scenarios and provide users with a reliable job execution experience.
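As a rough sketch of what "providing the min and max number of executors" amounts to, the helper below builds the corresponding settings. The property names are the standard open-source Apache Spark dynamic allocation keys; the helper name `dynamic_allocation_conf` is hypothetical, and how a Synapse pool applies these values internally is not described in this post.

```python
from typing import Dict


def dynamic_allocation_conf(min_executors: int, max_executors: int) -> Dict[str, str]:
    """Build Spark settings that bound dynamic allocation.

    Hypothetical helper: it only validates the bounds and returns the
    standard Apache Spark property names as a plain dictionary.
    """
    if min_executors < 0:
        raise ValueError("min_executors must not be negative")
    if max_executors < min_executors:
        raise ValueError("max_executors must be >= min_executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }


conf = dynamic_allocation_conf(min_executors=2, max_executors=8)
```

Spark then scales the application between the two bounds as stages need more or fewer executors.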

To learn more about how reservation of executors works, read Reservation of Executors as part of Dynamic Allocation in Synapse Spark Pools.

Azure Synapse Runtime for Apache Spark 3.3 [Public Preview]

You can now create Spark pools with Azure Synapse Runtime for Apache Spark 3.3. The essential changes include features that come from upgrading Apache Spark to version 3.3.1 and upgrading Delta Lake. Please review the official release notes for Apache Spark 3.3.0 and Apache Spark 3.3.1 for the complete list of fixes and features. In addition, review the migration guidelines between Spark 3.2 and 3.3 to assess potential changes to your applications, jobs, and notebooks.

To learn more about Azure Synapse Runtime, read Azure Synapse Runtime for Apache Spark 3.3 components versions and Azure Synapse Runtime for Apache Spark 3.3 is now in Public Preview.

Spark Advisor for Azure Synapse Notebook [Public Preview]

Synapse Spark Advisor analyzes code run by Spark and displays real-time advice for notebooks. Spark Advisor offers recommendations for code optimization based on built-in common patterns, performs error analysis, and locates the root cause of failures.

To learn more about Spark Advisor, read Apache Spark Advisor in Azure Synapse Analytics (Preview).

Synapse Data Explorer

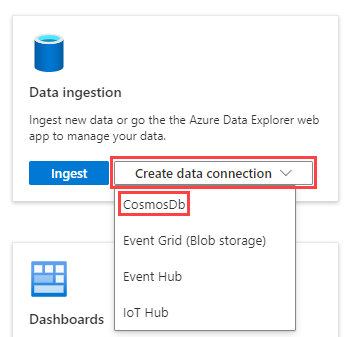

Cosmos DB Synapse Link to Azure Data Explorer [Public Preview]

Azure Cosmos DB is a fully managed NoSQL distributed database for web, mobile, gaming, and IoT applications that need to handle massive amounts of data, reads, and writes at a global scale with near-real-time response times. Azure Data Explorer (ADX) is a big data analytics platform. It can scale to petabytes of data, is optimized for time series, and supports structured, semi-structured (JSON), and unstructured (text) data. In addition, it has advanced geospatial capabilities. The two technologies complement each other well, and they can now do so in a fully managed way with the new Cosmos DB to Azure Data Explorer Synapse Link. ADX native ingestion of Cosmos DB brings the high-throughput, low-latency transactional Cosmos DB data to the analytical world of Kusto, delivering the best of both worlds. Data can be ingested in near real time (streaming ingestion) to run analytics on the most current data or to audit changes.

To learn more about Cosmos DB Synapse Link for ADX, read Ingest data from Azure Cosmos DB into Azure Data Explorer (Preview).

Apache log4j2 sink connector for Azure Data Explorer

New year, new Kusto connector! The Log4j2 sink connector lets you easily stream your log data to Azure Data Explorer, where you can analyze, visualize, and alert on your logs in real time.

Log4j is a popular logging framework for Java applications maintained by the Apache Software Foundation. Log4j allows developers to control which log statements are output with arbitrary granularity based on the logger's name, logger level, and message pattern. One of the options for storing log data is to send it to a managed data analytics service, such as Azure Data Explorer (ADX).

The Log4j2-ADX connector is an open-source connector that was developed to easily send Log4j2 data to ADX. In the Log4j2-ADX connector, we created KustoStrategy (a custom strategy) to be used in the RollingFileAppender, which can be configured to connect to the ADX cluster. Logs are first written to the rolling file to prevent data loss caused by network failures while connecting to the ADX cluster. The data is safely stored in the rolling file and then flushed to the ADX cluster.

The Log4j2-ADX connector also provides a demo/sample application that can be used to quickly get started with producing logs that can be ingested into the ADX cluster.

To learn more about the log4j2 connector, read Getting started with Apache Log4j and Azure Data Explorer.

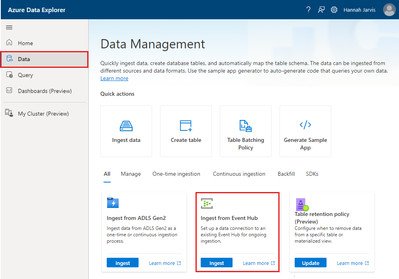

Ingest preexisting Event Hub events to ADX

ADX can now ingest Event Hub data that existed before the creation of an Event Hub data connection in your ADX cluster. You can use this feature by entering the Event retrieval start date (under "Advanced settings") on the ADX Event Hub data connection page of the Azure portal, in the Kusto Web UI ingestion wizard (One-Click), or by setting the properties.retrievalStartDate property of the data connection REST API.
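For the REST API route, the request body might be assembled along these lines. This is a sketch under assumptions: only `properties.retrievalStartDate` is named in this post, while the other field names (`kind`, `location`, `eventHubResourceId`, `consumerGroup`), the helper name, and the placeholder resource ID are illustrative and should be checked against the Data Connections - Create Or Update reference.

```python
import json
from datetime import datetime, timezone


def event_hub_connection_body(
    event_hub_resource_id: str,
    consumer_group: str,
    location: str,
    retrieval_start: datetime,
) -> dict:
    """Build a data connection body that asks ADX to ingest older events.

    Hypothetical helper; all field names except retrievalStartDate are
    assumptions to verify against the REST API reference.
    """
    if retrieval_start.tzinfo is None:
        raise ValueError("retrieval_start must be timezone-aware")
    return {
        "location": location,
        "kind": "EventHub",
        "properties": {
            "eventHubResourceId": event_hub_resource_id,
            "consumerGroup": consumer_group,
            # Events enqueued from this UTC instant onward are retrieved.
            "retrievalStartDate": retrieval_start.astimezone(timezone.utc).isoformat(),
        },
    }


body = event_hub_connection_body(
    event_hub_resource_id="<event-hub-resource-id>",  # placeholder, not a real ID
    consumer_group="$Default",
    location="westus",
    retrieval_start=datetime(2022, 12, 1, tzinfo=timezone.utc),
)
payload = json.dumps(body, indent=2)
```

The resulting `payload` string is what would be sent as the body of the create-or-update call.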

To learn more about ingesting preexisting Event Hub events to ADX, read Connect to the event hub, Use the ingestion wizard to create an Azure Event Hubs data connection for Azure Data Explorer, and Data Connections - Create Or Update.

Multivariate Anomaly Detection in Azure Data Explorer

ADX contains native support for detecting anomalies over multiple time series by using the function series_decompose_anomalies(). This function can analyze thousands of time series in seconds, enabling near-real-time monitoring solutions and workflows based on ADX. This kind of univariate analysis, which looks at each metric on its own, is simpler, faster, and easily scalable, and it is applicable to most real-life scenarios. However, there are some cases where it might miss anomalies that can only be detected by analyzing multiple metrics at the same time.

For some scenarios, there might be a need for a true multivariate model, jointly analyzing multiple metrics. This can be achieved now in ADX using the new Python-based multivariate anomaly detection functions.

To support multivariate anomaly detection, we have introduced three new UDFs (User Defined Functions) that apply different multivariate models on ADX time series. These functions are based on models from scikit-learn (the most common Python ML library), taking advantage of ADX capability to run inline Python as part of the KQL query.
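To illustrate why a joint model can catch what per-metric analysis misses, here is a small pure-Python sketch. It is not one of the new ADX UDFs and does not use scikit-learn: it swaps in a plain Mahalanobis distance over two made-up metrics (`cpu` and `mem` are hypothetical names and values) purely to show the idea.

```python
from math import sqrt
from statistics import mean, stdev


def z_scores(values):
    """Univariate view: distance of each value from its own mean."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def mahalanobis_distances(xs, ys):
    """Multivariate view: distance that accounts for how x and y co-vary."""
    n = len(xs)
    mx, my = mean(xs), mean(ys)
    dxs = [x - mx for x in xs]
    dys = [y - my for y in ys]
    sxx = sum(dx * dx for dx in dxs) / (n - 1)
    syy = sum(dy * dy for dy in dys) / (n - 1)
    sxy = sum(dx * dy for dx, dy in zip(dxs, dys)) / (n - 1)
    det = sxx * syy - sxy * sxy  # determinant of the 2x2 covariance matrix
    return [
        sqrt((dx * dx * syy - 2 * dx * dy * sxy + dy * dy * sxx) / det)
        for dx, dy in zip(dxs, dys)
    ]


# Two metrics that normally move together; the last point breaks the pattern
# even though each of its values is ordinary when looked at on its own.
cpu = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 3]
mem = [1.1, 1.9, 3.1, 3.9, 5.1, 5.9, 7.1, 7.9, 9.1, 9.9, 8.0]

cpu_z = z_scores(cpu)
mem_z = z_scores(mem)
joint = mahalanobis_distances(cpu, mem)
most_unusual = max(range(len(joint)), key=joint.__getitem__)
```

With this data, no point exceeds a per-metric z-score of 2, yet the last point has the largest joint distance, because a low `cpu` value paired with a high `mem` value contradicts the relationship seen in every other point.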

To learn more about how Multivariate Anomaly Detection is performed in ADX, read Multivariate Anomaly Detection in Azure Data Explorer.

To learn more about anomaly detection and forecasting, read Anomaly detection & forecasting in Azure Data Explorer.

ADX One Click file ingestion just became much more scalable

The ADX One Click file upload now supports up to 1000 files (previously 10) in a single operation. This is handy for users who have many small files and want to complete the ingestion process in one go.

To learn more about ADX one click file ingestion, read Ingest data wizard.

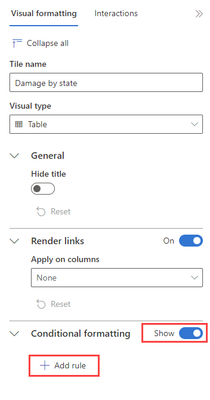

ADX Dashboards enhancements: Conditional Formatting

Conditional formatting helps surface anomalies or just interesting data points in a result set. We revamped our conditional formatting component in table, stat, and multi stat visuals to include much more. You can now format a visual either by using conditions or by applying themes to numeric columns or discrete values to non-numeric columns. The formatting can be applied to a specific column or to the entire row.

To learn more about conditional formatting, read Conditional formatting.

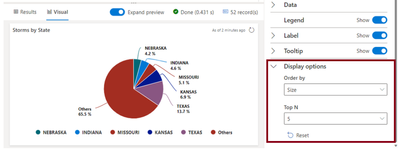

ADX Dashboards enhancements: Pie Chart displays

New display options for the Pie chart visualization in Dashboards allow you to focus on the data you care about. By selecting the column to order by and the top N values to show, you can get a clearer picture of the data distribution.

To learn more about Pie Chart displays, read Pie chart.

ADX Kusto Web Explorer (KWE) JPath viewer

JPath notation describes the path to one or more elements in a JSON document. Using the JPath viewer in a result’s expanded view allows you to quickly get a specific element of a JSON text and easily copy its path expression. This is extremely useful when investigating data (for example, query results) that contains dynamic fields.
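To make the notation concrete, the sketch below walks a JSON document and lists a path expression for every leaf element. It is an illustration only, not how the JPath viewer is implemented: `iter_paths` is a hypothetical helper, the sample document is made up, and keys containing a single quote are not escaped.

```python
import json
import re

# Keys that can be written with dot notation; others use ['key'] notation.
_SIMPLE_KEY = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def iter_paths(value, path="$"):
    """Yield (path expression, leaf value) pairs for a parsed JSON value."""
    if isinstance(value, dict) and value:
        for key, child in value.items():
            if _SIMPLE_KEY.match(key):
                child_path = f"{path}.{key}"
            else:
                child_path = f"{path}['{key}']"
            yield from iter_paths(child, child_path)
    elif isinstance(value, list) and value:
        for index, child in enumerate(value):
            yield from iter_paths(child, f"{path}[{index}]")
    else:
        # Scalars and empty containers are treated as leaves.
        yield path, value


doc = json.loads('{"device": {"tags": ["a", "b"], "fw-version": "1.2"}}')
for jpath, leaf in iter_paths(doc):
    print(jpath, "=", leaf)
```

Each printed expression starts at the root `$` and identifies exactly one element, which is the kind of path the viewer lets you copy from a dynamic field.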

To learn more about JPath viewer, read JPath viewer.

Data Integration

Express virtual network injection for SSIS [Generally Available]

Express virtual network injection for SSIS is now Generally Available. This method of injecting your SSIS integration runtime into a virtual network allows the integration runtime to provision and start faster. Inbound traffic is also no longer needed, which helps meet enterprise security compliance requirements.

To learn more about express virtual network injection for SSIS, read General Availability of Express Virtual Network injection for SSIS in Azure Data Factory.

Flowlets now support schema drift

Allow schema drift is now supported for flowlets. Flowlets are reusable activities that can be created from scratch or from an existing mapping data flow. Now, with schema drift support, you can use your flowlets with datasets that have changing source columns, allowing for more flexible flowlet use across many mapping data flows. This is easily enabled in the source and sink by selecting Allow schema drift under options.

To learn more about schema drift, read Schema drift in mapping data flow.

SQL CDC incremental extract now supports numeric columns

Enabling incremental extract in SQL sources in mapping data flows allows you to process only the rows that have changed since the last time the pipeline was executed. Previously, when enabling incremental extract, users could only select a date/time column as a watermark. Now, supported incremental column types include date/time and numeric columns.
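The watermark idea can be sketched as follows with a numeric column. This is a simplified in-memory illustration, not how mapping data flows implement the feature: `incremental_extract`, the `id` column, and the sample rows are all hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def incremental_extract(
    rows: List[Row], column: str, last_watermark: Optional[float]
) -> Tuple[List[Row], Optional[float]]:
    """Return rows whose watermark column is above the stored watermark.

    Also returns the new watermark to store for the next run. On the
    first run (no watermark yet) every row is returned.
    """
    if last_watermark is None:
        changed = list(rows)
    else:
        changed = [row for row in rows if row[column] > last_watermark]
    new_watermark = max((row[column] for row in changed), default=last_watermark)
    return changed, new_watermark


source = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]

# First run: no watermark yet, so all rows are processed.
first_batch, watermark = incremental_extract(source, "id", None)

# A new row arrives; the next run picks up only that row.
source.append({"id": 4, "name": "d"})
second_batch, watermark = incremental_extract(source, "id", watermark)
```

The same comparison works for a date/time column; the only requirement in this sketch is that values in the watermark column increase as rows are added or changed.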

To learn more about change data capture, read Auto incremental extraction in mapping data flow.
