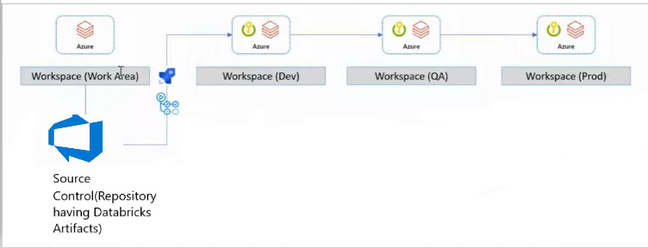

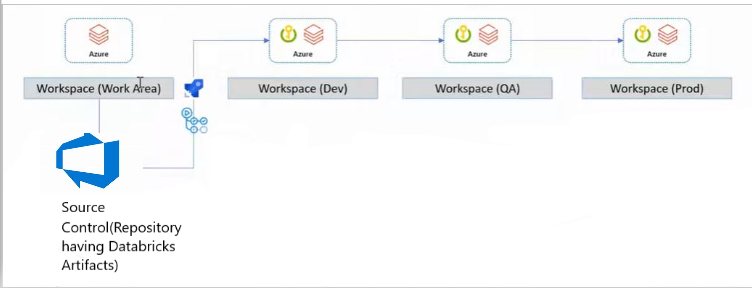

Azure Databricks Artifacts Deployment

This article describes how to deploy JAR files, XML files, JSON files, wheel files, and global init scripts to a Databricks workspace.

Overview:

- A Databricks workspace contains notebooks, clusters, and data stores. The notebooks run on Databricks clusters and read from the data stores when they need to refer to custom configuration in the cluster.

- To run notebooks in a Databricks workspace, developers need environment-specific configurations, mapping files, and custom functions packaged as libraries.

- Developers also need global init scripts, which run on every cluster created in the workspace. Global init scripts are useful for enforcing organization-wide library configurations or security screens.

- The pipeline described in this article automates the deployment of these artifacts to the Databricks workspace.

Purpose of this Pipeline:

- The purpose of this pipeline is to pick up the Databricks artifacts from the repository, upload them to a DBFS location in the Databricks workspace, and upload the global init scripts using the REST APIs.

- The CI pipeline builds the wheel (.whl) file using setup.py and publishes the required files (.whl file, global init scripts, JAR files, etc.) as a build artifact.

- The CD pipeline uploads all the artifacts to the DBFS location and uploads the global init scripts using the REST APIs.

Pre-Requisites:

- Developers must make sure that all the artifacts to be uploaded to the Databricks workspace are present in the repository (main branch). The location of the artifacts in the repository should be fixed (this article uses '/artifacts'). The CI process creates the build artifact from this folder.

- The Databricks personal access token (PAT) and the target Databricks workspace URL must be stored in Azure Key Vault.
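Both CD scripts receive the PAT and the workspace URL fetched from Key Vault. As a rough illustration of how those two values are used (the article's scripts are PowerShell), here is a minimal Python sketch that sends the PAT as a bearer token to a Databricks REST endpoint. The function names `build_request` and `send` and the example workspace URL are hypothetical; only the standard library is used.

```python
import json
import urllib.request
from typing import Any, Optional


def build_request(workspace_url: str, token: str, method: str, path: str,
                  body: Optional[dict] = None) -> urllib.request.Request:
    """Build an authenticated Databricks REST API request.

    workspace_url: placeholder such as "https://adb-1234567890123456.7.azuredatabricks.net"
    path: API path such as "/api/2.0/global-init-scripts"
    """
    url = workspace_url.rstrip("/") + path
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {
        # The PAT is sent as a bearer token on every call.
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=data, headers=headers, method=method)


def send(workspace_url: str, token: str, method: str, path: str,
         body: Optional[dict] = None) -> Any:
    """Perform the request and decode the JSON response (an empty body becomes {})."""
    request = build_request(workspace_url, token, method, path, body)
    with urllib.request.urlopen(request, timeout=60) as response:
        raw = response.read()
    return json.loads(raw) if raw else {}
```

Keeping request construction separate from the network call makes it possible to check the URL and headers without contacting a workspace.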

Continuous Integration (CI) pipeline:

- The CI pipeline builds a wheel (.whl) file using a setup.py file and creates a build artifact from all the files in the artifacts/ folder, such as configuration files (.json), packages (.jar and .whl), and shell scripts (.sh).

- It has the following tasks:

  1. Build the wheel file using the setup.py file:

     - Use the latest Python version.

     - Update pip.

     - Install the wheel package.

     - Build the wheel file with the command "python setup.py sdist bdist_wheel" (this also produces a source distribution).

     - The setup.py file can be replaced with any Python file that is used to create .whl files.

  2. Copy all the artifacts (JAR, JSON config, .whl file, shell scripts) to the artifact staging directory.

  3. Publish the artifacts from the staging directory.

- After a successful run, the CD pipeline is triggered.

- The YAML code for this pipeline, with all the steps, is included below.
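The copy step of the CI pipeline can be approximated in Python as follows. This is a sketch under stated assumptions, not the pipeline's actual task: it assumes the wheel was built into setuptools' default dist/ folder, that the repository artifacts live under artifacts/, and that the extension list below matches what should be published. In Azure Pipelines the staging directory would typically be $(Build.ArtifactStagingDirectory); `stage_artifacts` is a hypothetical helper name.

```python
import shutil
from pathlib import Path
from typing import Iterable, List

# Assumed extension list, taken from the artifact types named in this article.
ARTIFACT_EXTENSIONS = {".jar", ".json", ".xml", ".whl", ".sh"}


def stage_artifacts(source_dirs: Iterable[Path], staging_dir: Path,
                    extensions: Iterable[str] = ARTIFACT_EXTENSIONS) -> List[Path]:
    """Copy matching files from each source dir into staging_dir.

    Paths relative to each source dir are preserved, so artifacts/config/dev.json
    is staged as <staging_dir>/config/dev.json.
    """
    wanted = {ext.lower() for ext in extensions}
    staging_dir = Path(staging_dir)
    copied: List[Path] = []
    for source in source_dirs:
        source = Path(source)
        for file in sorted(source.rglob("*")):
            if file.is_file() and file.suffix.lower() in wanted:
                target = staging_dir / file.relative_to(source)
                target.parent.mkdir(parents=True, exist_ok=True)
                shutil.copy2(file, target)  # copy2 also keeps timestamps
                copied.append(target)
    return copied
```

For example, `stage_artifacts([Path("artifacts"), Path("dist")], Path(staging))` would stage the repository artifacts together with the freshly built wheel, skipping any file whose extension is not in the list.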

CI Pipeline YAML Code:

Continuous Deployment (CD) pipeline:

The CD pipeline uploads all the artifacts (JAR, JSON config, .whl file) built by the CI pipeline to the Databricks File System (DBFS). It also creates or updates global init scripts in the Databricks workspace from any .sh files in the build artifact.
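One way to picture how the CD pipeline divides the build artifact is the Python sketch below. It assumes that every .sh file becomes a global init script, that every other file goes to DBFS, and that files land under a hypothetical DBFS root /FileStore/artifacts; the article does not state the target path, and `plan_uploads` is an illustrative name.

```python
from pathlib import Path, PurePosixPath
from typing import Dict, List, Tuple


def plan_uploads(artifact_dir: Path, dbfs_root: str = "/FileStore/artifacts"
                 ) -> Tuple[Dict[Path, str], List[Path]]:
    """Split build-artifact files into DBFS uploads and global init scripts.

    Returns (dbfs_targets, init_scripts): dbfs_targets maps each local file to
    its DBFS destination path; init_scripts lists the .sh files.
    """
    artifact_dir = Path(artifact_dir)
    dbfs_targets: Dict[Path, str] = {}
    init_scripts: List[Path] = []
    for file in sorted(artifact_dir.rglob("*")):
        if not file.is_file():
            continue
        if file.suffix.lower() == ".sh":
            init_scripts.append(file)
        else:
            # DBFS paths always use forward slashes, even on a Windows build agent.
            relative = PurePosixPath(file.relative_to(artifact_dir).as_posix())
            dbfs_targets[file] = str(PurePosixPath(dbfs_root) / relative)
    return dbfs_targets, init_scripts
```

Converting to PurePosixPath matters on Windows-hosted agents, where local paths use backslashes but DBFS paths do not.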

It has the following tasks:

1. Azure Key Vault task to fetch the Databricks secrets (PAT and workspace URL).

2. Upload Databricks artifacts

   - This runs a PowerShell script that uses the Databricks DBFS API: https://docs.databricks.com/dev-tools/api/latest/dbfs.html#create

   - Script name: DBFSUpload.ps1

   - Arguments:

     - Databricks PAT to access the Databricks workspace

     - Databricks workspace URL

     - Pipeline working directory path where the files (JAR, JSON config, .whl file) are present

3. Upload global init scripts

   - This runs a PowerShell script that uses the Databricks Global Init Scripts API: https://docs.databricks.com/dev-tools/api/latest/global-init-scripts.html#operation/create-script

   - Script name: DatabricksGlobalInitScriptUpload.ps1

   - Arguments:

     - Databricks PAT to access the Databricks workspace

     - Databricks workspace URL

     - Pipeline working directory path where the global init scripts are present

- The YAML code for this CD pipeline, with all the steps, and the scripts for uploading the artifacts are included below.

CD Pipeline YAML Code:

DBFSUpload.ps1
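DBFSUpload.ps1 relies on the DBFS streaming calls (create, add-block, close). A minimal Python sketch of that sequence follows, assuming a hypothetical `send(method, api_path, body)` callable that performs the request with the PAT as a bearer token and returns the decoded JSON. The DBFS API documents a 1 MB limit per add-block call; this sketch assumes 1 MiB of raw data per block is accepted, and `chunk_size` can be lowered if the workspace rejects it. The function name and chunk size are assumptions, not taken from the original script.

```python
import base64
from typing import Any, BinaryIO, Callable, Optional

# Hypothetical transport: send(method, api_path, json_body) -> decoded JSON.
Send = Callable[[str, str, Optional[dict]], Any]

# Raw bytes per add-block call (assumed to fit the documented 1 MB limit).
CHUNK_SIZE = 1024 * 1024


def dbfs_upload(send: Send, stream: BinaryIO, dbfs_path: str,
                chunk_size: int = CHUNK_SIZE) -> int:
    """Stream a binary file to dbfs_path using create / add-block / close.

    Returns the number of bytes sent.
    """
    # 1. Open a write handle; overwrite=True replaces a file from an earlier run.
    handle = send("POST", "/api/2.0/dbfs/create",
                  {"path": dbfs_path, "overwrite": True})["handle"]
    total = 0
    # 2. Append the file block by block; each block is sent base64-encoded.
    while True:
        block = stream.read(chunk_size)
        if not block:
            break
        send("POST", "/api/2.0/dbfs/add-block",
             {"handle": handle, "data": base64.b64encode(block).decode("ascii")})
        total += len(block)
    # 3. Close the handle to finish the upload.
    send("POST", "/api/2.0/dbfs/close", {"handle": handle})
    return total
```

A call such as `dbfs_upload(send, open("artifacts/mapping.json", "rb"), "/FileStore/artifacts/mapping.json")` (ideally inside a `with` block) uploads one file; reading block by block keeps memory use flat even for large JAR files.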

DatabricksGlobalInitScriptUpload.ps1
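DatabricksGlobalInitScriptUpload.ps1 creates or updates scripts through the Global Init Scripts API. The Python sketch below shows one possible update-or-create flow: list the existing scripts, PATCH the one whose name matches, and otherwise POST a new one. Matching on the script name is an assumption about how "update/upload" is decided, and `send` is a hypothetical callable (method, API path, JSON body) that performs the authenticated request and returns the decoded JSON.

```python
import base64
from typing import Any, Callable, Optional

# Hypothetical transport: send(method, api_path, json_body) -> decoded JSON.
Send = Callable[[str, str, Optional[dict]], Any]

API = "/api/2.0/global-init-scripts"


def upsert_global_init_script(send: Send, name: str, script_text: str,
                              enabled: bool = True) -> str:
    """Create the global init script `name`, or update it if it already exists.

    Returns the script_id of the created or updated script.
    """
    payload = {
        "name": name,
        # The API expects the script body base64-encoded.
        "script": base64.b64encode(script_text.encode("utf-8")).decode("ascii"),
        "enabled": enabled,
    }
    # The list response may omit "scripts" when the workspace has none.
    existing = send("GET", API, None).get("scripts", [])
    for script in existing:
        if script.get("name") == name:
            # Update in place so the script keeps its script_id.
            send("PATCH", f"{API}/{script['script_id']}", payload)
            return script["script_id"]
    return send("POST", API, payload)["script_id"]
```

Updating rather than deleting and recreating keeps the script_id stable across pipeline runs.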

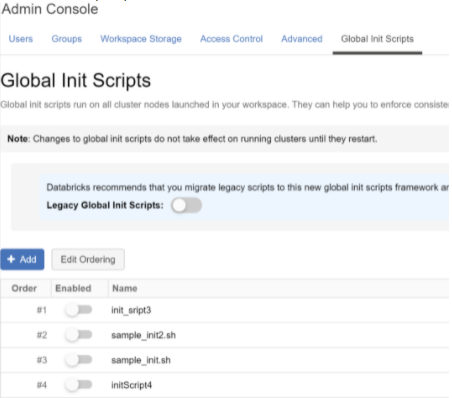

End Result of Successful Pipeline Runs:
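A successful run can also be checked from code instead of the workspace UI. The sketch below assumes a hypothetical `send(method, api_path, body)` callable that performs the authenticated request; it calls the DBFS get-status endpoint for each uploaded path and reports entries whose file_size differs from the expected local size. The expected sizes would come from the files in the pipeline working directory, and `find_size_mismatches` is an illustrative name.

```python
from typing import Any, Callable, Dict, List, Optional
from urllib.parse import urlencode

# Hypothetical transport: send(method, api_path, json_body) -> decoded JSON.
Send = Callable[[str, str, Optional[dict]], Any]


def find_size_mismatches(send: Send, expected_sizes: Dict[str, int]) -> List[str]:
    """Return the DBFS paths whose reported file_size differs from the expected size.

    A path that does not exist makes get-status return an error, which is
    expected to surface as an exception raised by send.
    """
    mismatches: List[str] = []
    for dbfs_path, size in sorted(expected_sizes.items()):
        # get-status takes the DBFS path as a query parameter on a GET request.
        query = urlencode({"path": dbfs_path})
        status = send("GET", f"/api/2.0/dbfs/get-status?{query}", None)
        if status.get("is_dir") or status.get("file_size") != size:
            mismatches.append(dbfs_path)
    return mismatches
```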

Global Init Script Upload:

Conclusion:

Using this CI/CD approach, we successfully uploaded the artifacts to the Databricks File System (DBFS).

References:

- https://docs.databricks.com/dev-tools/api/latest/dbfs.html#create

- https://docs.databricks.com/dev-tools/api/latest/global-init-scripts.html#operation/create-script
