Databricks Notebook Deployment Using YAML Code

Purpose of this Blog:

- We have defined an end-to-end workflow for code check-in using two methods:

- Notebook Revision History (the standard process defined for checking in notebook code)

- Azure Databricks Repos (no repository size limitation as of May 13th)

- Changes made to a notebook outside Databricks will not automatically sync with the Databricks workspace. Because of this limitation, we recommend that developers sync the entire Git repository, as detailed in the process below.

- We have written YAML templates for CI and CD with PowerShell code that can deploy notebooks from multiple folders. The PowerShell code in the pipeline also creates the destination folder if it doesn't exist, unlike the Data Thirst extension, which can only deploy notebooks from a single folder.

- A one-stop guide to the whole Databricks deployment workflow, from code check-in to pipelines, with detailed explanations (used by stakeholders).

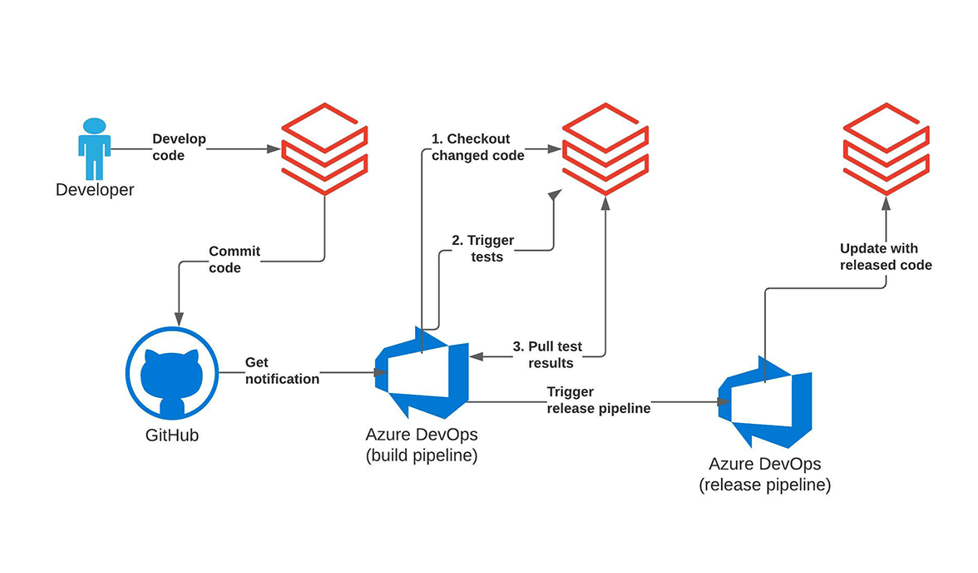

Developer Workflow (CI/CD)

Git Integration:

-

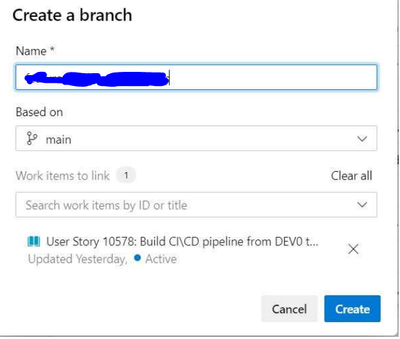

Create a feature branch based on the main branch and link a work item to it.

-

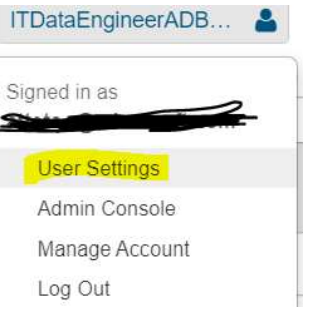

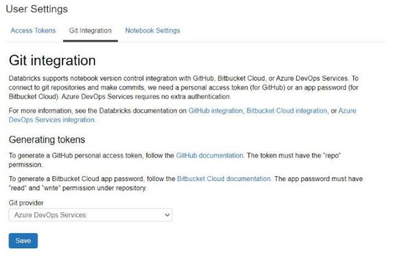

Login into your Azure Databricks Dev/Sandbox and click on user icon (top right) and open user settings.

- Click the Git Integration tab and make sure Azure DevOps Services is selected.

- There are two ways to check in code from the Databricks UI (described below):

1. Using Revision History after opening a notebook

2. Working with notebooks and folders in an Azure Databricks repo (Repos, a recent development as of May 13th)

Code Check-in into the Git repository from Databricks UI

I. Notebook Revision History:

- Go to the notebook you want to change and deploy to another environment.

Note: Developers need to maintain a shared/common folder for all notebooks. You can make all required changes in your personal folder and then move them to the shared/common folder. The CI process creates the artifact from this shared/common folder.

-

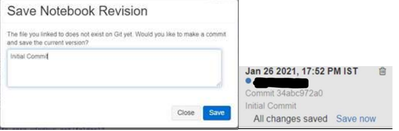

Click on the Revision history on top right.

- If it is a new notebook, you will notice that Git is not linked to it; for an existing notebook, Git might be linked to an older branch that no longer exists.

- Click 'Git: Not Linked' (new notebook) or 'Git Synced' (existing notebook).

-

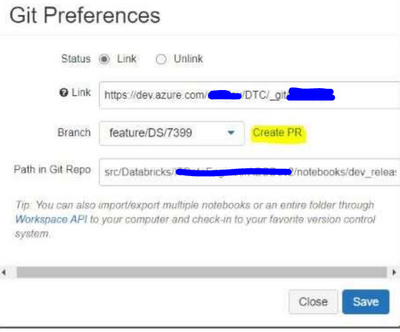

We need to configure the Git Repository. (screenshot below)

- Select the feature branch from the branch drop-down list.

- Enter the repository URL in the format:

https://dev.azure.com/<organisationname>/<ProjectName>/_git/<Repo>

- Enter the path of the notebook in the repository. In our case it is:

src/Databricks/ITDataEngineerADBDev2/notebooks/<folder name>/<notebook.py>

- Click Save, adding a comment, to commit the notebook code to the repository.

- Create a PR once the changes are reflected in the feature branch.

- After the PR is approved, the code is merged into the main branch and the CI/CD process starts from there.
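The repository URL and notebook path entered in the Git configuration step follow fixed patterns. As a small illustration that is not part of the original process, the Python sketch below composes both; `build_repo_url` and `build_notebook_path` are hypothetical helpers, and the organisation, project, folder, and notebook names in the usage lines are made up.

```python
from urllib.parse import quote

# Assumed repository layout, taken from this guide; adjust for your own repository.
NOTEBOOK_ROOT = "src/Databricks/ITDataEngineerADBDev2/notebooks"


def build_repo_url(organisation: str, project: str, repo: str) -> str:
    """Compose https://dev.azure.com/<organisationname>/<ProjectName>/_git/<Repo>."""
    # Percent-encode each segment so names containing spaces stay valid in a URL.
    org, proj, rep = (quote(part, safe="") for part in (organisation, project, repo))
    return f"https://dev.azure.com/{org}/{proj}/_git/{rep}"


def build_notebook_path(folder: str, notebook_file: str) -> str:
    """Return the repository path <root>/<folder name>/<notebook.py>."""
    return f"{NOTEBOOK_ROOT}/{folder.strip('/')}/{notebook_file}"


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(build_repo_url("contoso", "Data Platform", "Data"))
    print(build_notebook_path("ingestion", "load_sales.py"))
```

Encoding each segment separately keeps a project name such as "Data Platform" from producing an invalid URL.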

Note: Linking individual notebooks has the following limitation:

- Changes made to a notebook outside the Databricks workspace will not automatically sync with the Databricks workspace. This issue is documented here: https://forums.databricks.com/questions/6752/external-changes-to-git-in-synced-notebook.html

- Because of this limitation, we recommend that developers sync the entire Git repository, as detailed below.

II. Azure Databricks Repos:

Introduction

- Repos is a newly introduced Azure Databricks feature, currently in Public Preview.

- This feature used to have a 100 MB limit on the size of the linked repository, but as of May 13th it works with larger repositories.

- We can link a repository directly to the Databricks workspace and work on notebooks based on Git branches.

Repos Check-in Process:

- Click the Repos tab, right-click the folder you want to work in, and select "Add Repos".

- Enter the repo URL from Azure DevOps, select "Azure DevOps Services" as the Git provider, and click Create.

-

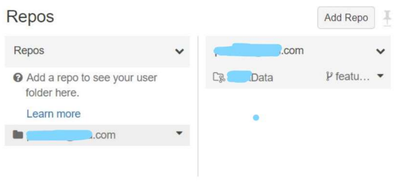

The repo gets added with folder name as repo name(Data in the screenshot below) and a selection with all the branch names(branch symbol with feature/ and down arrow in screenshot below). Click on the down arrow beside the branch name.

-

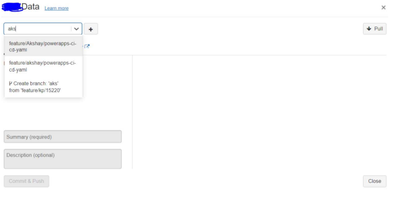

After clicking on down arrow(previous screenshot), search and select your existing feature branch OR create a new feature branch (as shown in screenshot below).

-

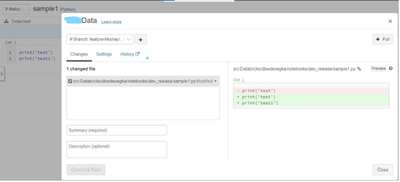

All the folders in the branch are visible (refer the screenshot below)

- Open the folder that contains the notebooks. Either create a new notebook and write code (right-click the folder and select "Create" > "Notebook"), or edit an existing notebook in the folder.

- After creating or editing a notebook, click the feature branch name at the top left of the notebook. A new window appears showing the changes. Add a Summary (mandatory) and a Description (optional), then click "Commit and Push".

- A pop-up confirms that the changes were committed and pushed. The user should then raise a PR to merge the feature branch into the "main" branch.

- After a successful PR merge, the CI/CD pipeline runs to deploy the notebooks to the higher environments (QA/Prod).

CI-CD Process

Continuous Integration (CI) pipeline:

The CI pipeline builds the artifact by copying the notebooks from the main branch to the staging directory.

It has two tasks:

- Copy Task: copies the notebooks from the main branch to the staging directory.

- Publish Artifacts: publishes the artifacts from $(build.stagingdirectory).

- YAML Template
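What the Copy Task does can be sketched in plain Python as a rough stand-in for the YAML copy task; this is not the author's template. It assumes the notebooks are stored as `.py` source files under one root folder; `stage_notebooks` is a hypothetical helper, and `staging_dir` plays the role of `$(build.stagingdirectory)`.

```python
import shutil
from pathlib import Path
from typing import List


def stage_notebooks(source_root: str, staging_dir: str, pattern: str = "*.py") -> List[str]:
    """Copy every file matching `pattern` under source_root into staging_dir,
    keeping relative subfolders. Returns the staged paths relative to staging_dir."""
    src, dest = Path(source_root), Path(staging_dir)
    staged = []
    for file in sorted(src.rglob(pattern)):
        if not file.is_file():
            continue
        relative = file.relative_to(src)
        target = dest / relative
        target.parent.mkdir(parents=True, exist_ok=True)  # recreate the folder layout
        shutil.copy2(file, target)  # copy file contents and metadata
        staged.append(relative.as_posix())
    return staged
```

Keeping the relative folder structure in the artifact is what allows the CD step to recreate the same folders in each target workspace.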

Continuous Deployment (CD) pipeline:

Deployment with a secure hosted agent

a. Run the release pipeline for the specified target environment.

This downloads the previously generated build artifacts and the secure connection strings from Azure Key Vault, and then deploys the notebooks to your target Azure Databricks workspace. Make sure your self-hosted agent is configured properly as per Self-Hosted Agent.

The code below shows how to run your release on a specific self-hosted agent. Take note of the pool and demands configuration.

- In the continuous deployment pipeline, we deploy the build artifacts to the Dev, QA, UAT, and Prod environments.

- Approval gates are set up so that deployment to each environment (stage) starts only after approval.

We have two steps for the deployment:

- Get the Key Vault secrets: the PAT (Personal Access Token) and the target Databricks workspace URL.

- Import the notebooks into the target Databricks workspace using the Workspace Import REST API (PowerShell task with an inline script).

- This script can deploy notebooks from multiple folders.

- If a folder (matching the repository folder, as in the Sandbox/Dev environment) does not exist in the target (Dev/QA/UAT/Prod) Databricks workspace, the script creates it and then imports the notebooks into it.

- YAML template
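To show the two deployment steps outside PowerShell, the Python sketch below makes the same kind of Databricks Workspace API calls: `POST /api/2.0/workspace/mkdirs`, which creates a folder plus any missing parents and succeeds if the folder already exists, and `POST /api/2.0/workspace/import` with a base64-encoded source file. It is not the author's inline script. It assumes the Key Vault secrets have been mapped into the script's environment as the hypothetical variables `DATABRICKS_HOST` (workspace URL) and `DATABRICKS_TOKEN` (PAT), that the target root folder is `/Shared`, and that notebooks are staged as source files whose extensions map to a language.

```python
import base64
import json
import os
import urllib.request
from pathlib import Path, PurePosixPath

# Assumed mapping from file extension to the Workspace API "language" value.
LANGUAGES = {".py": "PYTHON", ".sql": "SQL", ".scala": "SCALA", ".r": "R"}


def workspace_path(relative_file: str, target_root: str = "/Shared") -> str:
    """Map 'ingestion/load.py' to '/Shared/ingestion/load' (notebooks drop the extension)."""
    return str(PurePosixPath(target_root) / PurePosixPath(relative_file).with_suffix(""))


def import_payload(relative_file: str, source: bytes, target_root: str = "/Shared") -> dict:
    """Build the JSON body for POST /api/2.0/workspace/import."""
    suffix = PurePosixPath(relative_file).suffix.lower()
    return {
        "path": workspace_path(relative_file, target_root),
        "format": "SOURCE",
        "language": LANGUAGES[suffix],
        "content": base64.b64encode(source).decode("ascii"),
        "overwrite": True,
    }


def call_api(host: str, token: str, endpoint: str, body: dict) -> dict:
    """POST a JSON body to the Databricks REST API, authenticating with the PAT."""
    request = urllib.request.Request(
        url=host.rstrip("/") + endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:  # raises HTTPError on 4xx/5xx
        text = response.read().decode("utf-8")
    return json.loads(text) if text else {}


def deploy(staging_dir: str, target_root: str = "/Shared") -> None:
    """Create each target folder if it is missing, then import every notebook into it."""
    host = os.environ["DATABRICKS_HOST"]    # hypothetical name: workspace URL secret
    token = os.environ["DATABRICKS_TOKEN"]  # hypothetical name: PAT secret
    root = Path(staging_dir)
    created = set()
    for file in sorted(root.rglob("*")):
        if not file.is_file() or file.suffix.lower() not in LANGUAGES:
            continue
        relative = file.relative_to(root).as_posix()
        folder = str(PurePosixPath(workspace_path(relative, target_root)).parent)
        if folder not in created:
            # mkdirs also creates missing parents and is a no-op for existing folders
            call_api(host, token, "/api/2.0/workspace/mkdirs", {"path": folder})
            created.add(folder)
        call_api(host, token, "/api/2.0/workspace/import",
                 import_payload(relative, file.read_bytes(), target_root))
```

In a release stage, `deploy` would be called with the folder where the build artifact was downloaded. Calling `mkdirs` once per folder before importing is what makes multi-folder deployment work without pre-creating anything in the target workspace.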
