EDA workloads on Azure NetApp Files: Performance Best Practices

Azure NetApp Files (ANF) has been widely adopted across industries, including by many silicon companies running their Electronic Design Automation (EDA) workloads on Azure. Azure NetApp Files provides three service levels to ensure throughput, supports the NFS 3.0, NFS 4.1 and SMB mount protocols from Windows or Linux VMs, and takes only minutes to set up. Enterprises can seamlessly migrate their applications to Azure with an on-premises-like experience and performance.

We focused on using the SPEC SFS® benchmark suite to spawn multiple threads simulating production-like EDA workloads. The standard FIO tool was also used for stress testing.

The goal of this article is to share lessons learned from running those tests on Azure NetApp Files, and to:

- Provide practical performance best practices for real-world use.

- Examine ANF's scale-out capabilities with multiple volumes.

- Provide a cost-effectiveness analysis to help users choose the most suitable ANF service level.

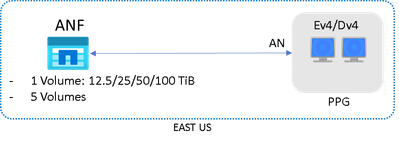

Architecture

The test was performed in the Azure East US region. A mix of Premium and Ultra service levels with different volume sizes was tested. E64dsv4 or D64dsv4 VMs acted as client VMs; the E-series and D-series machines are the same except for the amount of memory provisioned. The client VMs reside in the same Proximity Placement Group with Accelerated Networking enabled.

The average ping latency from the client VMs to ANF was around 0.6 to 0.8 milliseconds.

The overall performance is determined by two key metrics: Operations/sec and Response Time (milliseconds). The test incrementally generates EDA workload operations and records the response time at each step. Operations/sec also indicates the combined read/write throughput (MB/s).

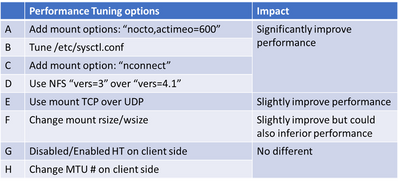

Performance Tuning

For all the result charts in this article, unless otherwise stated, NFS vers=3, mountproto=tcp, and the default MTU of 1500 were applied.

Please note:

1. The purpose of "nconnect" is to provide multiple TCP transport connections per mount point on a client. This helps increase parallelism and performance for NFS mounts. The fewer the clients, the more "nconnect" can help boost performance, as it can potentially use all available network bandwidth. Its value gradually diminishes as the number of clients increases, because there is only a certain amount of total bandwidth to go around.

2. "nocto" and "actimeo=600" are recommended only in scenarios where datasets are static. For example, they are especially advisable for EDA /tools volumes, where files aren't changing so there is no cache coherency to maintain. These settings eliminate much of the metadata traffic and improve throughput.
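The mount options discussed above can be assembled programmatically, as in the minimal sketch below. The function names, the server address `10.0.0.4`, the export `/tools`, and the mount point `/mnt/tools` are hypothetical placeholders, not values from the tests. The upper bound of 16 for `nconnect` reflects the Linux NFS client limit, and the sketch only prints the command rather than running it.

```python
def build_nfs_mount_options(nconnect=1, static_dataset=False,
                            mountproto="tcp", vers="3"):
    """Return a comma-separated NFS mount option string.

    static_dataset=True adds nocto and actimeo=600, which the article
    recommends only for volumes whose files do not change.
    """
    if not 1 <= nconnect <= 16:
        raise ValueError("nconnect must be between 1 and 16")
    options = [f"vers={vers}", f"mountproto={mountproto}"]
    if nconnect > 1:
        options.append(f"nconnect={nconnect}")
    if static_dataset:
        options += ["nocto", "actimeo=600"]
    return ",".join(options)


def build_mount_command(server, export, mount_point, options):
    """Return the mount command as an argument list (not executed here)."""
    return ["mount", "-t", "nfs", "-o", options,
            f"{server}:{export}", mount_point]


# Hypothetical example: a static /tools volume mounted with 8 connections.
opts = build_nfs_mount_options(nconnect=8, static_dataset=True)
print(" ".join(build_mount_command("10.0.0.4", "/tools", "/mnt/tools", opts)))
```

Keeping the static-dataset options behind an explicit flag makes it harder to apply "nocto" and "actimeo=600" by accident to volumes whose contents change.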

The results below show that the first three options (A, B and C) can each significantly improve response time and maximize throughput, and that their effects are cumulative.

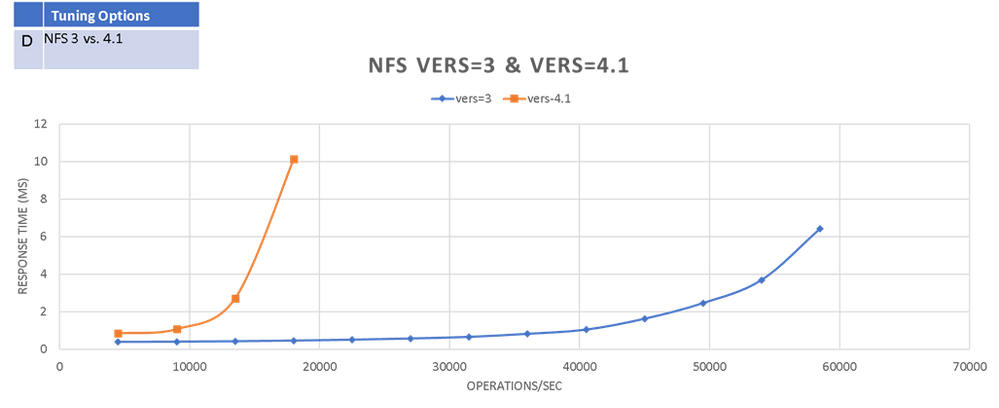

NFS 4.1 shows poor performance in this test compared to NFS 3, so be cautious about using it unless there are specific security requirements.

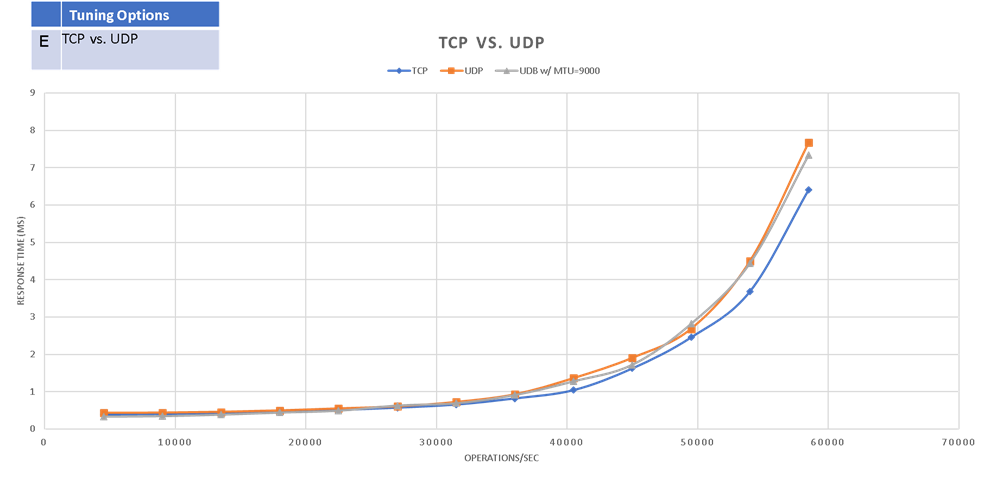

TCP shows slightly better performance than UDP ('mountproto=udp').

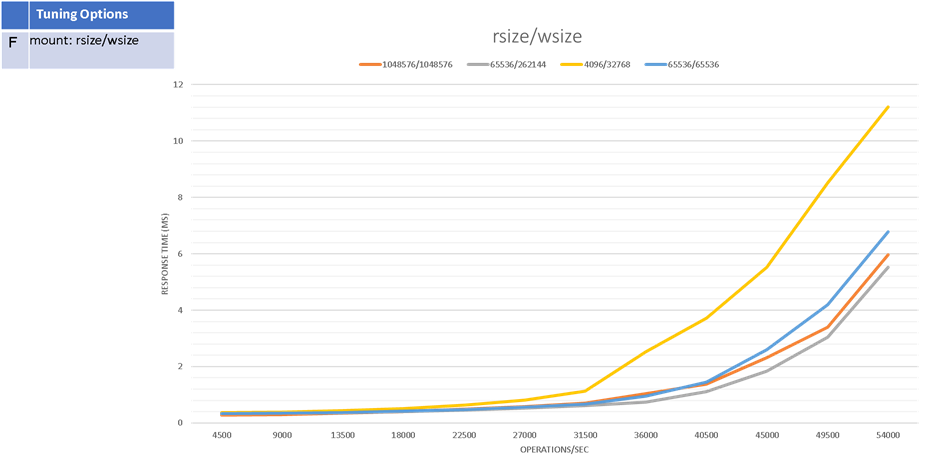

An appropriate rsize/wsize can improve performance. Using a 1:1 ratio of rsize to wsize is always recommended, and 256K/256K is recommended in most cases for ANF.

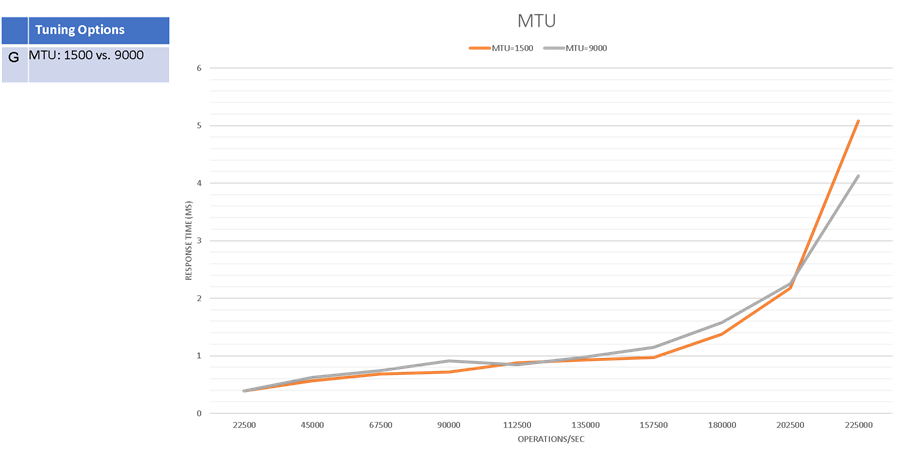
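As a small illustration of the 1:1 rsize/wsize recommendation, the sketch below renders matching options from a single size in KiB, so the two values cannot drift apart. The function name is hypothetical, and the 256 KiB default simply mirrors the value suggested above.

```python
def io_size_options(size_kib=256):
    """Return matching rsize/wsize mount options for a size given in KiB."""
    if size_kib <= 0:
        raise ValueError("size_kib must be positive")
    size_bytes = size_kib * 1024  # mount options take the size in bytes
    return f"rsize={size_bytes},wsize={size_bytes}"


print(io_size_options())  # rsize=262144,wsize=262144
```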

Because Azure Compute does not support jumbo frames, changing the VM's MTU from the default 1500 bytes to 9000 bytes had no significant impact on performance.

Cost-Effectiveness Analysis

One advantage of ANF is that it provides three service levels with different pricing structures. Users can therefore change the service level and volume size to reach the most cost-effective sweet spot when running their applications on Azure.

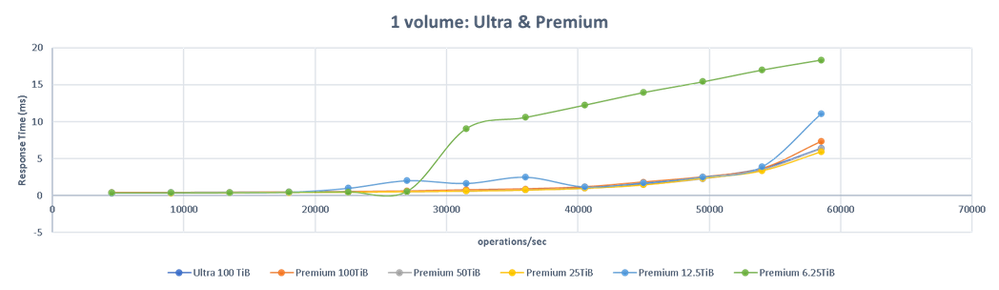

The chart shows:

- Premium 25 TiB, 50 TiB and 100 TiB volumes performed the same, as the application didn't drive sufficient throughput to warrant that much bandwidth (max. throughput = 58,500 ops/sec while maintaining the same response time).

- Premium 12.5 TiB can achieve a max. throughput of 54,000 ops/sec.

- Premium 6.25 TiB can achieve a max. throughput of 27,000 ops/sec.

This implies that high-IOPS EDA workloads (such as the LSF events share) can generally run on the Premium service level without excessively large volumes.
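The relationship between volume size and available throughput behind these results can be sketched as follows. This is a minimal sketch assuming automatic QoS, where a volume's throughput limit is its quota multiplied by a per-TiB rate of 16, 64 and 128 MiB/s for Standard, Premium and Ultra. These rates come from the Azure NetApp Files service-level documentation rather than from this article, so verify them against current documentation; the function names are illustrative.

```python
# Assumed throughput per TiB of volume quota (MiB/s) under automatic QoS.
MIB_PER_SEC_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def throughput_limit_mib_s(service_level, quota_tib):
    """Throughput limit (MiB/s) for a volume of the given quota."""
    return MIB_PER_SEC_PER_TIB[service_level] * quota_tib


def quota_needed_tib(service_level, target_mib_s):
    """Smallest quota (TiB) whose limit reaches the target throughput."""
    return target_mib_s / MIB_PER_SEC_PER_TIB[service_level]


for quota in (6.25, 12.5, 25):
    limit = throughput_limit_mib_s("Premium", quota)
    print(f"Premium {quota} TiB -> {limit:.0f} MiB/s")

# The same 800 MiB/s target on different service levels.
for level in ("Premium", "Ultra"):
    print(f"{level}: {quota_needed_tib(level, 800)} TiB for 800 MiB/s")
```

Under these assumptions, Premium 6.25 TiB and 12.5 TiB volumes are capped at 400 and 800 MiB/s, which is consistent with the measured maximum doubling from 27,000 to 54,000 ops/sec. Beyond 12.5 TiB the workload, not the volume limit, appears to be the constraint.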

Scalability

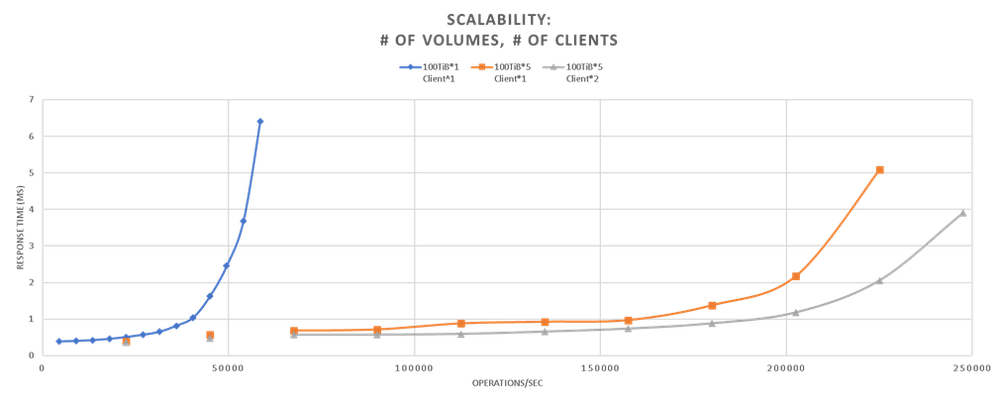

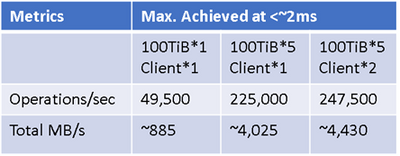

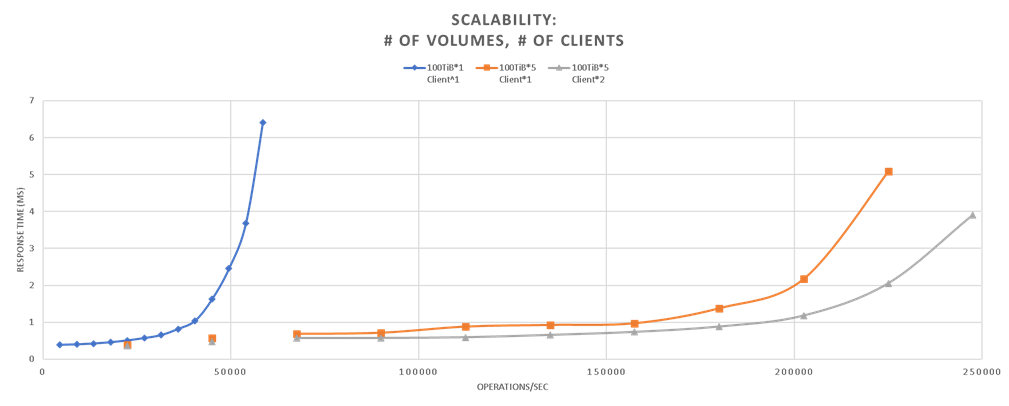

- By simply adding volumes to ANF, max. throughput can scale linearly while still maintaining a low response time (~500% from 1 to 5 volumes). In practice, more volumes are always better, even when no additional throughput is gained, because this keeps pressure on the storage subsystems low.

- In the real world, EDA workloads run on hundreds or thousands of nodes (VMs) connecting to the same ANF. The results show that more clients can further improve both response time and overall throughput, as this overcomes the network bandwidth and maximum connection limits of a single VM.
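One way to quantify the "linear scaling" claim for your own runs is to compute speedup and scaling efficiency from measured maximum throughput. The sketch below uses hypothetical ops/sec figures purely for illustration; they are not results from these tests, and the function names are illustrative.

```python
def speedup(single_volume_ops, multi_volume_ops):
    """Throughput with N volumes relative to throughput with one volume."""
    return multi_volume_ops / single_volume_ops


def scaling_efficiency(single_volume_ops, multi_volume_ops, volume_count):
    """1.0 means perfectly linear scaling; lower values mean diminishing returns."""
    return speedup(single_volume_ops, multi_volume_ops) / volume_count


# Hypothetical measurements: 50,000 ops/sec on 1 volume, 250,000 on 5 volumes.
one_volume, five_volumes = 50_000, 250_000
print(f"speedup: {speedup(one_volume, five_volumes):.0%}")
print(f"efficiency: {scaling_efficiency(one_volume, five_volumes, 5):.2f}")
```

A speedup of about 500% across 5 volumes corresponds to an efficiency close to 1.0, which is what linear scaling means here.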

Summary

It's important to keep in mind that in the real world, storage performance is affected by a wide range of factors. This article is by no means intended as definitive guidance; rather, it shares lessons learned from running standard EDA benchmarking tools and examines some generic performance best practices that could be applied to your applications running on Azure.

Generally, the first four options (A, B, C and D) are suggested whenever applicable. Their effects are cumulative, and they also improve max. IOPS and bandwidth on a regular FIO test.

As stated, be cautious when changing rsize/wsize (option F), as it can also affect performance in different ways.

Appendix:

1. Tuning /etc/sysctl.conf (option B) example on Ev4/Dv4:

To make the change effective:

2. Upgrade the Linux kernel to 5.3+ to be able to use "nconnect" (option C). Note that you will need to reboot the VM at the end of the upgrade, so this might not be applicable in some cases.
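Before planning an upgrade, a client can check whether its running kernel already meets the 5.3 minimum. The sketch below is a simple version comparison with illustrative function names; it assumes the release string starts with `major.minor`, and it would miss distribution kernels that backport the feature to an older version number.

```python
import platform
import re


def parse_kernel_version(release):
    """Extract (major, minor) from a release string such as '5.4.0-1047-azure'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def meets_nconnect_minimum(release, minimum=(5, 3)):
    """True if the kernel version is at least the 5.3 minimum named above."""
    return parse_kernel_version(release) >= minimum


if __name__ == "__main__":
    running = platform.release()  # kernel release of the current machine
    print(running, "meets minimum:", meets_nconnect_minimum(running))
```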

3. actimeo and nocto

The actimeo and nocto mount options are used primarily to increase raw performance. Please review NetApp ONTAP's Best Practice Guide to determine whether they are applicable to your applications.

4. Mounting examples:

TCP:

UDP:

Learn more

- Choose the best service level of Azure NetApp Files for your HPC applications

- Improve Azure NetApp Files performance for your EDA and HPC applications by using best practices
