Performance impact of enabling Accelerated Networking on HBv3, HBv2 and HC virtual machines

Azure Accelerated Networking is now available on HBv3, HBv2, HC and HB virtual machines (VMs). Enabling this feature improves networking performance between VMs that communicate over the Ethernet-based vNICs. This benefits scenarios such as high-performance filesystems built on Azure VMs and mounted on client compute VMs. In this article we measure network latency and bandwidth between HPC VMs, and I/O performance from HPC VMs to an NFS server, with Accelerated Networking enabled and disabled. This article also covers the network tuning needed to get the best performance from Accelerated Networking on HBv3, HBv2 and HB VMs.

Ethernet network latency and bandwidth benchmarks

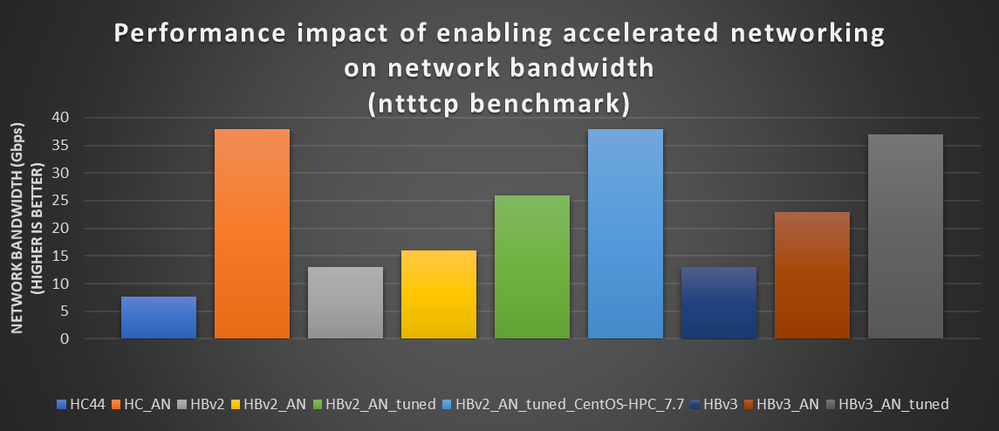

The ntttcp tool was used for the bandwidth tests.

The following command-line parameters were used:

ntttcp -r -m 64,* --show-tcp-retrans --show-nic-packets eth0 (on receiver)

ntttcp -s -m 64,*,$server_ip --show-tcp-retrans --show-nic-packets eth0 (on sender)
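The two ntttcp invocations differ only in the role flag (`-r` or `-s`) and whether the server address is part of the `-m` mapping. A minimal sketch of a wrapper that builds either command from the parameters above is shown here; the function name `run_ntttcp` and the `NTTTCP_THREADS` and `DRY_RUN` variables are hypothetical conveniences, not part of ntttcp itself.

```shell
# Hypothetical wrapper around the two ntttcp command lines above.
# Usage: run_ntttcp receiver NIC
#        run_ntttcp sender NIC SERVER_IP
# NTTTCP_THREADS overrides the thread count (default 64, as in the tests);
# DRY_RUN=1 prints the command instead of running it.
run_ntttcp() {
  local role="$1" nic="$2" server_ip="$3"
  local threads="${NTTTCP_THREADS:-64}"
  case "$role" in
    receiver)
      set -- ntttcp -r -m "$threads,*" ;;
    sender)
      if [ -z "$server_ip" ]; then
        echo "run_ntttcp: sender needs SERVER_IP" >&2
        return 2
      fi
      set -- ntttcp -s -m "$threads,*,$server_ip" ;;
    *)
      echo "usage: run_ntttcp receiver|sender NIC [SERVER_IP]" >&2
      return 2 ;;
  esac
  # Quoting "$threads,*" keeps the shell from glob-expanding the '*'.
  set -- "$@" --show-tcp-retrans --show-nic-packets "$nic"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

For example, `DRY_RUN=1 run_ntttcp sender eth0 10.0.0.4` (10.0.0.4 being a placeholder address) prints `ntttcp -s -m 64,*,10.0.0.4 --show-tcp-retrans --show-nic-packets eth0`, which is the sender command above with `$server_ip` filled in.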

Network latency was measured with the Linux sockperf tool, after setting the busy-polling sysctls (net.core.busy_poll and net.core.busy_read, in microseconds):

/usr/sbin/sysctl -w net.core.busy_poll=50

/usr/sbin/sysctl -w net.core.busy_read=50

sockperf server -i $server_ip --tcp -p 8201 (on receiver)

sockperf ping-pong -i $server_ip -p 8201 -t 20 --tcp --pps=max (on sender)
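The latency procedure (set busy polling, start the server, run the ping-pong client) can be scripted as one function. This is a sketch under stated assumptions: `run_sockperf` and `DRY_RUN` are hypothetical names, the sysctl settings are assumed to be applied on whichever VM runs the function (root is required for sysctl), and port 8201 and the 20-second duration are the values used above.

```shell
# Hypothetical wrapper for the sockperf latency test described above.
# Usage: run_sockperf server|client SERVER_IP [PORT]
# Requires root for sysctl; DRY_RUN=1 prints the sockperf command only
# (no sysctl changes are made in that case).
run_sockperf() {
  local role="$1" server_ip="$2" port="${3:-8201}"
  local usage="usage: run_sockperf server|client SERVER_IP [PORT]"
  [ -n "$server_ip" ] || { echo "$usage" >&2; return 2; }
  case "$role" in
    server) set -- sockperf server -i "$server_ip" --tcp -p "$port" ;;
    client) set -- sockperf ping-pong -i "$server_ip" -p "$port" -t 20 --tcp --pps=max ;;
    *) echo "$usage" >&2; return 2 ;;
  esac
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
    return 0
  fi
  # Let sockets busy-poll the device queue for about 50 microseconds
  # instead of sleeping immediately (lower latency, higher CPU use).
  /usr/sbin/sysctl -w net.core.busy_poll=50 || return 1
  /usr/sbin/sysctl -w net.core.busy_read=50 || return 1
  "$@"
}
```

Run `run_sockperf server "$server_ip"` on the receiver first, then `run_sockperf client "$server_ip"` on the sender.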

NOTE: CentOS-HPC 7.8 was used on HB, HC and HBv2, and CentOS-HPC 8.1 gen2 was used on HBv3. With the network tuning described below applied, the HBv2 bandwidth test on CentOS-HPC 7.8 did not reach the expected ~38 Gbps, whereas on CentOS-HPC 7.7 it reached ~38 Gbps. An updated version of CentOS-HPC 7.8 will be released at a later date to correct this performance problem.

Ethernet network tuning for HBv3, HB120_v2 and HB60

On HBv3, HB120_v2 and HB60, some manual network tuning is needed to see the performance benefits of Accelerated Networking.

NOTE: This network tuning will be included in future Marketplace HPC images.

To find the network device associated with the Accelerated Networking virtual function, look for the mlx5_an0 entry in the ibdev2netdev output (in this example, DEVICE=enP45424s1):

[hpcadmin@hbv3vmss000004 ~]$ ibdev2netdev | grep an0

mlx5_an0 port 1 ==> enP45424s1 (Up)
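To script this lookup, one option is to parse the same ibdev2netdev output with awk. This minimal sketch assumes the Accelerated Networking IB device name ends in `an0`, as `mlx5_an0` does in the example above; `an_netdev` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ibdev2netdev` output on stdin and print the
# network device mapped to the Accelerated Networking VF (IB device *an0).
# Example input line: "mlx5_an0 port 1 ==> enP45424s1 (Up)"
#   $1 = IB device, $4 = "==>", $5 = network device
an_netdev() {
  awk '$1 ~ /an0$/ && $4 == "==>" { print $5 }'
}
```

On the VM shown above, `DEVICE=$(ibdev2netdev | an_netdev)` should set DEVICE to enP45424s1.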

The manual network tuning steps are:

- Change the number of multi-purpose channels for the Accelerated Networking network device. The default number of multi-purpose channels on HBv3, HBv2, HB and HC SKUs is 31. In our testing, 4 multi-purpose channels gave the best performance.

ethtool -L $DEVICE combined 4
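Reading the setting back confirms that the change took effect and lets the step be skipped when it is already applied. The sketch below assumes the usual `ethtool -l` layout, in which a `Combined:` line appears under both "Pre-set maximums:" and "Current hardware settings:"; the function names `combined_channels` and `set_combined_channels` are hypothetical.

```shell
# Hypothetical helper: read `ethtool -l DEVICE` output on stdin and print the
# "Combined" value from the "Current hardware settings" section only.
combined_channels() {
  awk '/^Current hardware settings/ { cur = 1 }
       cur && /^Combined:/ { print $2; exit }'
}

# Hypothetical helper: set the combined channel count only if it differs,
# so the tuning step is safe to rerun (requires root).
set_combined_channels() {
  local dev="$1" want="${2:-4}" cur
  cur=$(ethtool -l "$dev" | combined_channels)
  if [ "$cur" != "$want" ]; then
    ethtool -L "$dev" combined "$want"
  fi
}
```

After tuning, `ethtool -l "$DEVICE" | combined_channels` should print 4.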

- Pin the first four multi-purpose channels of the device to vNUMA 0.

To list the IRQ numbers used by the device's channels:

ls /sys/class/net/$DEVICE/device/msi_irqs

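Store the channel IRQ numbers in ascending order, skipping the first msi_irqs entry, then map the first four channels to CPUs 0-3 in vNUMA 0:

irq_index=($(ls /sys/class/net/$DEVICE/device/msi_irqs | sort -n | tail -n +2))

echo "0" > /proc/irq/${irq_index[0]}/smp_affinity_list

echo "1" > /proc/irq/${irq_index[1]}/smp_affinity_list

echo "2" > /proc/irq/${irq_index[2]}/smp_affinity_list

echo "3" > /proc/irq/${irq_index[3]}/smp_affinity_list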

NOTE: Skip the first msi_irqs entry; it is used for a different IRQ. The map_irq_to_numa.sh script in the azurehpc git repo does this automatically.
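The pinning step can be generalized to any number of channels. This is a minimal sketch of the same idea, not the azurehpc map_irq_to_numa.sh script: it assumes the channel IRQs are the msi_irqs entries in ascending numeric order after the first one, and that CPUs 0 to N-1 belong to vNUMA 0. `pin_channel_irqs` and the `SYSFS_ROOT`/`PROCFS_ROOT` overrides (there only so it can be tested against a fake directory tree) are hypothetical.

```shell
# Hypothetical sketch: pin the first N channel IRQs of DEVICE to CPUs 0..N-1.
# Assumes the channel IRQs are the msi_irqs entries in ascending numeric
# order, skipping the first entry (used for a different IRQ).
# SYSFS_ROOT / PROCFS_ROOT exist only for testing on a fake tree; on a real
# VM leave them unset and run as root.
pin_channel_irqs() {
  local dev="$1" nchan="${2:-4}"
  local sys="${SYSFS_ROOT:-/sys}" proc="${PROCFS_ROOT:-/proc}"
  local msi_dir="$sys/class/net/$dev/device/msi_irqs"
  local cpu=0 irq
  [ -d "$msi_dir" ] || { echo "no msi_irqs directory for $dev" >&2; return 1; }
  # ls sorts names as text ("100" before "99"), so re-sort numerically.
  for irq in $(ls "$msi_dir" | sort -n | tail -n +2); do
    [ "$cpu" -lt "$nchan" ] || break
    echo "$cpu" > "$proc/irq/$irq/smp_affinity_list" || return 1
    cpu=$((cpu + 1))
  done
}
```

On a tuned VM this would be run as root with `pin_channel_irqs "$DEVICE" 4`, after the channel count has been reduced.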

- Pin your executable (e.g., ntttcp) to the same cores in vNUMA 0.

taskset -c 0-3 ntttcp <ntttcp_args>
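`taskset -c 0-3` assumes CPUs 0 to 3 are in vNUMA 0. Instead of hardcoding the range, the first N CPUs of NUMA node 0 can be taken from sysfs. This is a sketch assuming the standard range format of `/sys/devices/system/node/node0/cpulist` (for example `0-29` or `0-1,4-7`); `first_cpus` is a hypothetical helper.

```shell
# Hypothetical helper: print the first N CPUs of a Linux cpulist string
# (comma-separated ranges such as "0-29" or "0-1,4-7") as a comma list.
first_cpus() {
  local n="$2" out="" count=0 part lo hi c
  for part in $(printf '%s\n' "$1" | tr ',' ' '); do
    lo=${part%-*}   # "4-7" -> "4"; a single CPU "5" -> "5"
    hi=${part#*-}   # "4-7" -> "7"; a single CPU "5" -> "5"
    c=$lo
    while [ "$c" -le "$hi" ] && [ "$count" -lt "$n" ]; do
      out="${out:+$out,}$c"
      count=$((count + 1))
      c=$((c + 1))
    done
  done
  printf '%s\n' "$out"
}
```

Usage: `taskset -c "$(first_cpus "$(cat /sys/devices/system/node/node0/cpulist)" 4)" ntttcp <ntttcp_args>`. When node 0 starts at CPU 0 this yields `0,1,2,3`, the same cores the IRQs were pinned to.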

I/O Performance benchmark

We ran synthetic I/O benchmarks (FIO) on HC44 and HB120_v2 clients connected to an NFS server to determine the impact of Accelerated Networking on network storage I/O performance.

NFS server configuration

- VM size: D64s_v4 (6 x P30 disks)

- OS: CentOS 7.8 on the NFS server, CentOS-HPC 7.8 on the HPC I/O clients

- Expected theoretical peak I/O performance: ~1200 MB/s (due to D64s_v4 and P30 disk limits)
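The ~1200 MB/s ceiling follows from the two limits, assuming Azure's published limits of 200 MB/s per P30 disk and 1200 MB/s of disk throughput for a D64s_v4 VM:

```latex
\begin{aligned}
B_{\text{disks}} &= 6 \times 200~\text{MB/s} = 1200~\text{MB/s} \\
B_{\text{peak}} &= \min\left(B_{\text{disks}},\ B_{\text{D64s\_v4}}\right) = \min(1200,\ 1200)~\text{MB/s} = 1200~\text{MB/s} \\
&\approx 1200 \times 8~\text{Mb/s} = 9.6~\text{Gb/s}
\end{aligned}
```

Because both limits are equal, raising this ceiling would require lifting both of them. In network terms the ceiling corresponds to roughly 9.6 Gb/s, ignoring NFS and TCP overhead.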

NOTE: In this I/O benchmark, the D64s_v4 VM and P30 disk limits of the NFS server restricted I/O performance, even though the network had bandwidth to spare. With a network storage solution that uses faster disks or offers higher throughput, enabling Accelerated Networking would be expected to give greater gains in I/O performance.

Summary

- Enabling Accelerated Networking on HPC VMs significantly improves front-end network performance (latency and bandwidth).

- HBv3, HB120_v2 and HB60 SKUs require network tuning to benefit from Accelerated Networking.

- Accelerated Networking improves network storage I/O performance, especially read I/O at lower client counts.
