How To: Send requests to Azure Storage from Azure API Management

Overview

In this How To, I will show a simple mechanism for writing a payload to Azure Blob Storage from Azure API Management (APIM). Some examples where this approach is useful:

- Claim-Check

The Claim-Check pattern splits a large message into a claim check and a payload. The claim check, which holds a reference to the location of the payload, is sent to a downstream system. The downstream system then retrieves the payload for handling. You might want to do this when working with technology that is not suited to very large messages (such as Service Bus), whether because of a size limit or the associated cost, or when you do not need to process all messages.

- Message Logging

Application Insights is awesome, and though you can send payloads as part of your telemetry, this can result in a surprisingly high usage charge. As a way to address this, you might want to store the payloads in Azure Storage instead.

There are more scenarios of course, but those are the two top ones that jump out at me.

Example Use Case

In this example, I will amend the policy of an API to write the payload it receives to a blob container in a storage account. The example shows how to modify the policy and how to establish the required permissions using a managed identity and RBAC. This is my preferred way of handling authentication and authorisation when working with Azure Storage. Storage account keys and SAS tokens are possible, but I find them challenging for most teams to manage and code against.

Setup

This How To starts after an Azure Storage account and an APIM service have been provisioned. Thanks to the APIM team, the service provides a sample API, Echo API, that is suitable for our purposes.

This example will update the POST operation Create resource.

Permissions

The next step is to enable the APIM to access blob storage. To do this, navigate to the Managed identities blade:

You will want the System assigned identity switched on:

Using Azure role assignments, create an assignment for Storage Blob Data Contributor over your storage account.

The entry above shows access for my APIM, tmp-apim-ase, over the sttempase Azure Storage account.

Policy

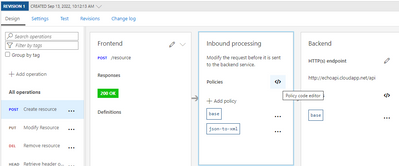

Now back on the Echo API, select the Create resource operation and click the Policy code editor:

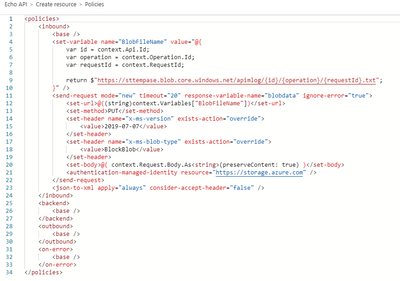

This will provide an XML editor. Insert the following XML in the inbound element.

You will need to update the value for [yourstorage] to your Azure Blob Storage account. The result should look something like this:
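As a minimal sketch of what such an inbound policy might look like: the block below uses the `send-request` policy to PUT the request body to a blob, authenticating with the APIM managed identity. The container name `payloads`, the variable name `blobResponse`, the 20 second timeout, the `.json` extension and the choice of API and operation ids for the folder structure are all assumptions made for illustration; `[yourstorage]` is the placeholder to replace with your storage account name.

```xml
<inbound>
    <base />
    <!-- Sketch only: container "payloads", variable "blobResponse" and the timeout are assumed values -->
    <send-request mode="new" response-variable-name="blobResponse" timeout="20" ignore-error="true">
        <!-- Folder structure: api id / operation id / request id (assumed layout) -->
        <set-url>@($"https://[yourstorage].blob.core.windows.net/payloads/{context.Api.Id}/{context.Operation.Id}/{context.RequestId}.json")</set-url>
        <set-method>PUT</set-method>
        <!-- Token-based authorisation needs a storage service version of 2017-11-09 or later -->
        <set-header name="x-ms-version" exists-action="override">
            <value>2017-11-09</value>
        </set-header>
        <!-- Put Blob requires the blob type to be stated -->
        <set-header name="x-ms-blob-type" exists-action="override">
            <value>BlockBlob</value>
        </set-header>
        <!-- preserveContent keeps the body readable for the backend call -->
        <set-body>@(context.Request.Body.As<string>(preserveContent: true))</set-body>
        <!-- Uses the system-assigned identity granted Storage Blob Data Contributor -->
        <authentication-managed-identity resource="https://storage.azure.com/" />
    </send-request>
</inbound>
```

The ids are used here rather than display names because a name such as Echo API contains a space, which would need encoding in the blob URL.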

A couple of things to note. I set the file name to the unique RequestId generated by APIM, and I created a folder structure that reflects the API and operation called. Have a look at the supported context variables and pick what suits your purpose.

Also note that the ignore-error attribute is set to true. In my case, I do not want a storage failure to fail the message, but this might not be suitable if you are implementing the Claim-Check pattern. Also, when reading the request body, be sure to set preserveContent to true so that the body is still available when the request is forwarded to the backend.
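For a Claim-Check style flow, where a lost payload should stop the request, one possible approach is to inspect the storage response before continuing. The sketch below assumes the `send-request` result was stored in a variable named `blobResponse` (a hypothetical name, set through `response-variable-name`) and that a successful Put Blob call returns 201 Created. The 502 status and the message text are illustrative choices, not part of the original policy.

```xml
<!-- Place after the send-request policy in the inbound section -->
<choose>
    <!-- The variable is null when send-request failed with ignore-error="true";
         any status other than 201 means the blob was not created -->
    <when condition="@(context.Variables.GetValueOrDefault<IResponse>("blobResponse") == null || context.Variables.GetValueOrDefault<IResponse>("blobResponse").StatusCode != 201)">
        <return-response>
            <set-status code="502" reason="Bad Gateway" />
            <set-body>Payload could not be stored.</set-body>
        </return-response>
    </when>
</choose>
```

For plain message logging this check is usually unnecessary, since the request should carry on even when the write fails.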

Test

Testing the API is nice and simple using the Test tab.

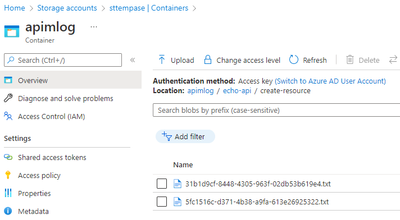

If the Azure gods are with you, you should not see an error and instead see a new file created in your storage account.

If something goes wrong, or if you are not familiar with the Trace feature, read on.

Troubleshooting

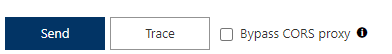

The best way to troubleshoot is to use Trace, which is located next to the Send button.

This provides more detail as to the steps involved. For example, you can investigate the step where the file is created:

Trace is your friend.

Summary

This How To illustrated a simple way to publish to Azure Storage. I explored other approaches, including publishing the claim to Event Hub and the payload to the backend, but I thought this approach was the simplest and made for a good How To. Let me know if this saves you some time!

Cheers
