Performance Tuning Synapse Dedicated Pools - Understanding the Query Lifecycle

Author(s): Sarath Sasidharan is a Program Manager in Azure Synapse Customer Success Engineering (CSE) team.

Introduction: Understanding Query Lifecycle to Maximize Performance

Synapse Dedicated pools have been battle tested at enterprise customers across the globe. We deal with data in the magnitude of PetaBytes. Synapse can provide you with the scale of the cloud and the high performance required for your enterprise-grade requirements. The key to maximizing your performance is to follow best practices, check out best practices for dedicated SQL pools in Azure Synapse Analytics

Failure to do so causes performance issues. In such scenarios, is it important to understand where the bottlenecks are. This blog focuses on the different steps a query goes through; from the time the query is fired from the client until it returns back. Delay caused in any of the steps would impact the overall run-time of the query and hence indicate degraded performance.

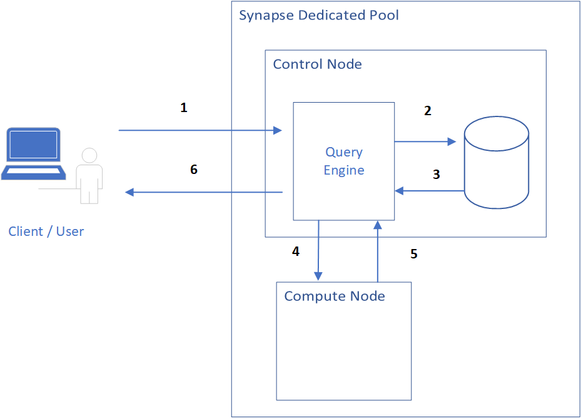

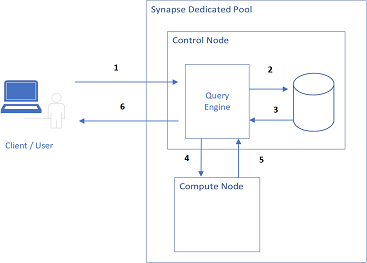

High-Level Architecture

This architecture represents a query submission done via a user/client application. The following steps are highlighted in bold numbers :

- Query submitted from a user /client application. The query lands on a control node that hosts the distributed query engine. Refer to our Synapse architecture document to revisit the duties of the control node.

- The Query Engine then forwards the request to an internal Database service for validation/schema checks etc.

- Database service returns the inputs (which we will discuss in detail below), which are required for the query service to plan the execution

- The execution commands are sent to the compute nodes to be run.

- Compute nodes return the result set to the control node after the run is complete.

- The control node then returns the data to the client/user application.

In order to understand this, let's dive into each of these steps in detail.

Query engine request towards internal SQL Database Service

This is the first major step in your query lifecycle. Different steps are executed here:

- Parsing: The query is parsed for T-SQL syntax errors

- Binding: Schema checks are done.

- Memo Compilation: Search Space for the query plans, and statistics are returned to the query engine for further processing.

What can cause a performance impact?

Parsing and binding are usually quick and done in no time. The third step could at times be a bottleneck. If you have a very complicated query, with a large number of tables involved the search space calculation can cause delays. The algorithms are smart enough to handle many complicated scenarios and have thresholds on when to stop calculations. However, in some scenarios, bad queries can impact this.

Remediation

The best fix for this step is to see if the query quality can be improved. If this has already been done, you may consider adding the FORCE ORDER hint to your query. Force order will enforce the order of joins to be executed as written as opposed to the optimizer reordering joins to attempt optimization. For memo compilation, this hint also reduces the search space of possible plans so there is less work to do in this step. This needs to be used with caution since in most scenarios it's best to leave it to the Query optimizer to decide the best plan.

Query Engine Processing output from Internal SQL Database Engine

Memo compilation results from the previous step are used as a starting point for this stage. The results are used to :

- Acquire Object Resources / Apply Locks as applicable

- Rebind: Checks if the state of objects has not changed as compared to the start of the query

- Plan Generation: Based on the search space data and statistics, the Query engine analyses the result and creates a distributed SQL plan to be executed on the compute nodes.D-SQL plan is different than a regular SQL Server plan since it also takes into account the data movement involved to create the final plan.

- Acquire System Resources: Resources that are required to run the query are acquired during this phase.

What can cause a performance impact?

- Acquiring Locks: If there are locks on tables/objects used in the query this can lead to delays.

- Plan Generation: Complex/bad queries can lead to a larger plan generation time.

- Acquiring System Resources: If there are no resources available for the query to run, this can lead to a resource wait.

Remediation:

- Acquiring Locks: If this is occurring during ETL/ELT loads, plan to look to schedule the workloads accordingly to make sure that there are no locking issues.

- Plan Generation:

- Look for data skew and make sure the distributions are done in the right way. Incompatible joins can cause shuffle moves, the goal should be to reduce the number of steps and minimize data movement operations / DMS operations.

- Try to clean up the query in case of bad queries. Force the Query Optimizer to use the order inside the query to generate the plan using FORCE ORDER hints. This asks the Query Optimizer to use the order inside the query to generate the query plan.

- Acquiring System Resources: Usage of WorkloadManagement /WLM should help. However, this needs a good understanding of the system and workloads run on the engine.

Execution of Query

This step is where the actual execution of the query starts. The DSQL plan generated in the previous step contains SQL/DMS statements which are sent to the compute nodes. Based on the plan, there are instructions on where these statements need to run and if it is a data movement step or a SQL step.

What can cause a performance impact?

This step contributes to a majority of query performance issues. They are mostly caused due to the wrong usage of the platform and poor query quality.

- Bad Data distribution / Data Skew: Choosing the wrong distribution key can cause data skews due which in turn leads to hot-spotting. Synapse uses 60 distributions under the hood. A bad distribution can result in most of the data going to a few distributions, which leads to load on a few distributions which can massively hit performance.

- Incompatible joins: Incompatible joins can cause data movements across nodes.

- TempDB fills: TmpDB is used to move data between computes, due to the points listed above this can fill up the temp DB at a quick pace, which can cause temp DB issues. This also impacts performance.

- Outdated Statistics: Synapse does has the auto stats feature on, however, there are no automatic updates on stats. Non Stats maintenance leads to bad plan generation which can introduce inefficient data movement steps in the query.

- Wrong Indexing Strategies: Synapse is an MPP engine and differs from SQL Server, In many implementations, customers /users do use clustered indexes on large tables with Non-Clustered Indexes on other predicate columns. Synapse benefits largely in using Clustered Column Store indexes for larger tables, since it leads to larger compression and lesser IO being moved over the wire. This is a common bottleneck that causes performance issues.

- Unhealthy Indexes: Clustered Column store indexes algorithms use effective compression methods and have thresholds (100K min - 1Million max) where row groups are compressed to get the best compression ratio. However, this can be impacted due to frequent updates /inserts/deletes or a low amount of resources available during compression, etc. This can lead to low-quality segments, which in turn will result in higher IO.

- Over Partitioning: Partitioning is a good strategy to get rid of unwanted data segments while running queries on larger tables, however, on CCI tables it can backfire if there are not enough amount of rows, which can lead to bad segment quality.

- Slow Inserts / Deletes: Inserts and deletes can be slow at times. This is a fully logged operation due to which this delay might happen.

- Slow Return Operation: In some cases, a slow return operation is seen, this could be related to IO Contention.

Remediation:

- For bad data distribution/data skew you could use the distribution advisor to find a better distribution key. You could also use the command dbcc pdw_showspaceused to find data skew on tables

- Incompatible Joins: Review the query and in particular the join conditions. Check if the datatypes are the same.

- TempDB fills: Follow this document which quite clearly explains how to identify TempDB fills. Eliminating data skews and incompatible joins will help to release Temp DB pressure.

- Outdated Statistics: Maintenance of stats can give your superior performance. For larger tables when undergoing updates /loads, it is highly recommended to update statistics.

- Wrong Indexing Strategies: Synapse is an MPP engine that massively benefits from Clustered column store indexes for larger tables. Clustered indexes on larger tables cannot leverage the higher level of compression which CCI offers on all columns. This reduces IO, which is a major factor in query performance degradation.

- Unhealthy Indexes: CCI tables need a considerable amount of memory to compress data into optimal sets. If there are not enough resources this can lead to bad segment quality which in turn can increase IO. Follow this document, which explains how to maintain index quality for CCI tables.

- Over-Partitioning: Over-partitioning can also lead to too few rows in compressed segments, which can lead to bad performance. The best practice is to have at least 1 Million rows per partition for CCI tables.

- Slow Inserts / Deletes: Using CTAS instead of Insert and delete can be an effective step to counter full logging. The concept of partition switching can also be used to replace these operators to see better performance. These best practices can be found on our transaction best practices page

- Slow Return Operation: Check the workload window and see if there are other queries that are also IO-heavy and running at the same time. Try to see if this is intelligently scheduled and if they are regular ETL/ELT jobs.

Summary

This concludes the query lifecycle in Synapse. These steps and remediations have been applied to our customers and we have seen massive improvements in performance and efficient usage of the platform.

Published on:

Learn more