Why the infamous 'Let's make a list of use cases' is THE way to kill AI innovation

AI is here. Everyone knows it. Every leadership team wants a piece of the action. And so, the first instinct? “Let’s make a list of use cases!” Sounds logical, right? Compile a list, prioritize, evaluate, and eventually pilot the best ones. In theory. Because in practice, this approach rarely leads to anything meaningful. Instead, it becomes a corporate graveyard for AI ideas.

The illusion of progress

On the surface, making a list of AI use cases feels productive. It creates the illusion of momentum. Meetings are scheduled, brainstorming sessions are held, and a massive spreadsheet is filled with potential AI applications. But then… nothing. The energy fizzles out, and AI remains a PowerPoint slide rather than a business reality.

So what’s the problem?

Glad you asked. So many!

1. The black hole of stakeholder alignment

AI use cases often require input from multiple departments: IT, business units, compliance, legal, finance, and operations. And if you are in Germany, like I am, add the works council, the data protection officer, a union, and several more representative bodies. Getting all these stakeholders to the table is an exercise in herding cats. When they do finally meet, discussions stall because:

- Some people don’t understand the technology well enough to make an informed decision

- Others overcomplicate things, insisting on massive, transformative AI projects instead of quick wins

- No one knows who actually “owns” the initiative

Result? Endless debates and no decisions.

2. Paralysis by analysis

Once the list is created, the next step is evaluation. This means assigning scores, defining impact metrics, and analyzing feasibility. But AI is complex. Many factors, like data availability, model accuracy, and regulatory concerns, are unpredictable. Decision-makers who are unfamiliar with AI (or equipped only with very superficial knowledge gained from private ChatGPT usage) struggle to evaluate viability.

So they delay. And delay. And delay. (Or have more meetings. Or both.)

Meanwhile, competitors who skipped the endless evaluation phase have already implemented something, learned from it, and moved forward.

3. Low cloud maturity: flying blind

One of the biggest, yet least discussed, blockers to AI success is low cloud maturity. Many organizations don’t have the foundational systems in place to even see where AI could be useful. They are essentially flying blind.

Take customer service as an example. In many companies, customer inquiries land in shared mailboxes, where service reps do their best to process them manually. But because these emails remain locked inside Outlook instead of being routed into a CRM or case management system, there’s no way to measure key metrics like:

- When a ticket is opened

- When it is resolved

- What category of inquiry it falls under

- How much back-and-forth is required to resolve it

Without this basic visibility, organizations can’t even begin to evaluate which categories of inquiries take the longest or where AI could automate processes. Instead of fixing this foundational issue by integrating emails into structured workflows (something that requires cloud maturity and organizational buy-in), companies stay in the dark, making AI decisions based on assumptions rather than data. And so, the “use case list” exercise becomes meaningless.
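To make the point concrete, here is a minimal sketch of what becomes measurable once inquiries live in a structured system instead of a shared mailbox. Everything in it is an assumption for illustration: the `Ticket` record, its field names, and the sample data are hypothetical and not taken from any particular CRM or case management product.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Ticket:
    # Hypothetical record; field names are illustrative only.
    category: str
    opened_at: datetime
    resolved_at: datetime
    message_count: int  # emails exchanged until the case was resolved


def summarize_by_category(tickets):
    """Return {category: (avg_hours_to_resolve, avg_messages)}, slowest first."""
    grouped = defaultdict(list)
    for ticket in tickets:
        grouped[ticket.category].append(ticket)

    summary = {}
    for category, items in grouped.items():
        hours = mean(
            (t.resolved_at - t.opened_at).total_seconds() / 3600 for t in items
        )
        messages = mean(t.message_count for t in items)
        summary[category] = (round(hours, 1), round(messages, 1))

    # Sort by average resolution time so the slowest category comes first.
    return dict(sorted(summary.items(), key=lambda kv: kv[1][0], reverse=True))


# Made-up sample data, for illustration only.
tickets = [
    Ticket("billing", datetime(2024, 3, 1, 9), datetime(2024, 3, 1, 17), 3),
    Ticket("billing", datetime(2024, 3, 2, 9), datetime(2024, 3, 2, 13), 5),
    Ticket("address change", datetime(2024, 3, 1, 10), datetime(2024, 3, 1, 11), 2),
]
summary = summarize_by_category(tickets)
print(summary)
```

None of this is AI. It is the plain bookkeeping that has to exist before anyone can say which category of inquiry is worth automating, and it is exactly what a shared mailbox cannot give you.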

4. Skipping the homework: AI needs a strong foundation

Before AI can deliver value, organizations need to do their homework. Many companies want AI to solve their problems but haven’t built the necessary infrastructure to support it. This includes:

- Cloud adoption - Without cloud-based workflows, AI cannot efficiently integrate into business processes

- Governance frameworks - AI initiatives need clear rules on data access, compliance, and security

- Data readiness - AI is only as good as the data it processes. If data is siloed, messy, or unstructured, AI will fail

- Change management and adoption - Employees need training to understand and trust AI solutions, rather than resisting automation

Skipping these steps means organizations are trying to apply AI to broken systems. This will lead to inefficiencies, frustration, and failed projects.

Ask me how I know!

5. Overcomplexity kills buy-in

Most organizations, when creating AI use case lists, aim high. They propose game-changing, business-critical, high-impact AI applications:

- AI-powered supply chain optimization for global operations

- Predictive maintenance across thousands of assets

- Fully automated customer support with zero human intervention

Sounds great. But these require major investment, long implementation timelines, and buy-in from too many decision-makers. Getting approval for these is like pushing a huge boulder uphill.

Meanwhile, simpler, practical AI applications, like document automation, intelligent search, and email triage, are overlooked because they don’t sound as exciting in a strategy meeting. Ironically, these are the projects that could actually deliver quick ROI and build momentum for larger AI initiatives.

6. Users don’t know what they need

Business users and decision-makers often struggle to articulate their AI needs because they don’t know what’s possible. They don’t understand the nuances of AI capabilities, nor do they have a clear picture of how different technologies (NLP, computer vision, machine learning) work together.

As a result, many use cases are either:

- Overly generic (“Let’s use AI for customer service”)

- Technically unrealistic (“Can AI predict exactly when each customer will churn?”)

- Too vague to act on (“We need AI to improve efficiency”)

This leads to further delays as teams struggle to turn these ideas into actionable projects.

A better approach: Think small, move fast

Instead of making long lists, organizations should focus on rapid experimentation:

- Skip the big list and start small - Pick one simple, tangible use case that solves a specific, well-defined problem

- Build a quick Proof of Concept (PoC) - Instead of overanalyzing, test something small within weeks (or even days), not months

- Measure impact fast - Focus on metrics that prove immediate value (e.g., time savings, cost reduction, accuracy improvements)

- Expand based on learnings - Use insights from small wins to refine and scale AI initiatives
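"Measure impact fast" can be as simple as comparing handling time before and during the pilot. The sketch below assumes you logged minutes per item for a manual baseline and for the PoC; the function name and all numbers are made up for illustration.

```python
from statistics import mean


def time_savings(baseline_minutes, pilot_minutes):
    """Compare average handling time before and during a pilot.

    Returns (minutes_saved_per_item, percent_saved).
    """
    before = mean(baseline_minutes)
    after = mean(pilot_minutes)
    saved = before - after
    return round(saved, 1), round(100 * saved / before, 1)


# Made-up numbers, for illustration only.
baseline = [12, 15, 9, 14]  # minutes per email, handled manually
pilot = [7, 8, 6, 9]        # minutes per email, with AI-assisted triage

saved, percent = time_savings(baseline, pilot)
print(f"Saved {saved} minutes per email ({percent}%)")
```

A handful of data points like this will not survive a statistician, but it is enough to decide whether the next small step is worth taking.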

The reality: execution beats ideation

Most companies don’t fail at AI because of a lack of ideas. They fail because they get stuck in analysis mode. The longer they debate use cases, the further behind they fall. AI rewards action, not committee-driven wish lists.

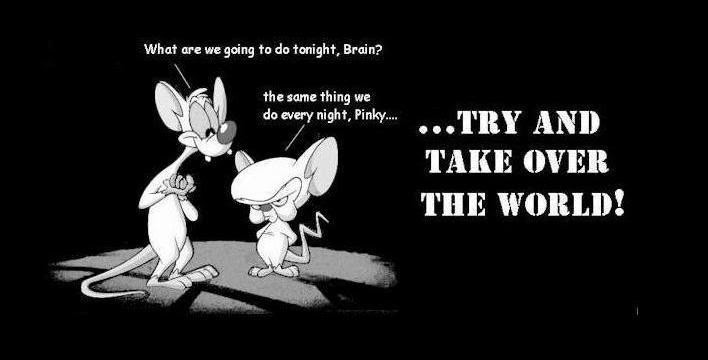

Companies that fail to understand this always remind me a bit of a cartoon I used to watch when I was way younger… These orgs try to “take over the world”, and for some reason (see above) these nefarious world domination plans fail.

The question isn’t “What are all the ways we could use AI?” but “What’s one thing we can implement next month that delivers value?”

Narf.
