Copilot Studio: Part 1 – when automation bites back – autonomy ≠ chaos

Autonomy scares people. Not because they’re against AI. They’re against losing control, and rightly so. Most organizations aren’t afraid of what their Copilot Studio agent might do. They’re afraid of what it might do without asking. And yet, that’s the whole point of agents. If you need a human in the loop for every decision, you didn’t build an agent. You built a clunky wizard. Autonomy, done well, is not chaos. Autonomy, done well, is clarity. But clarity takes work. And most deployments skip that part.

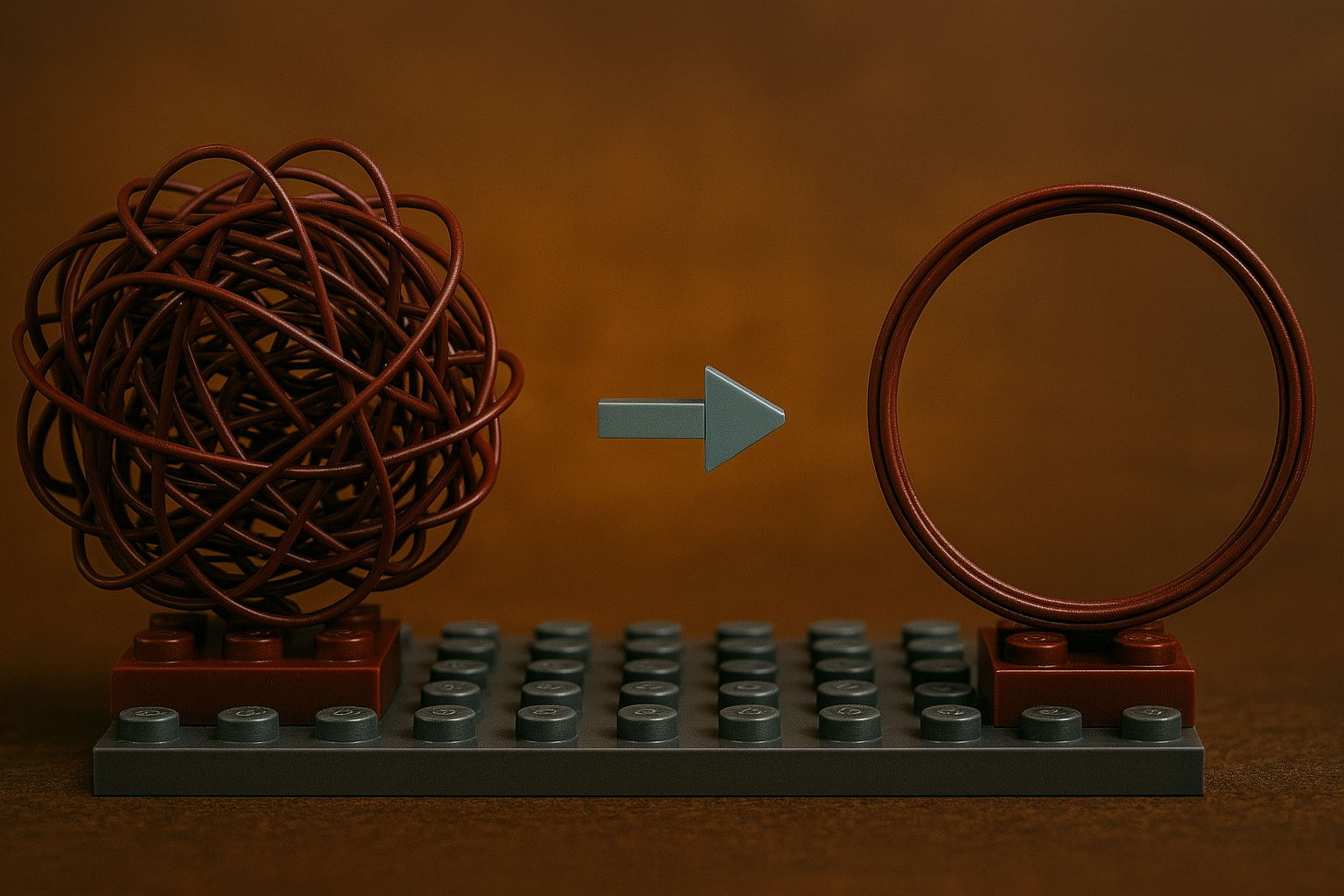

[Sidenote: I hope you know this character.]

Autonomy is not a vibe

There’s a misconception that autonomy is a checkbox or a personality trait. As if some agents are independent and others are obedient. But autonomy isn’t about tone. It’s about timing, trust, and triggers. An autonomous agent doesn’t ask before it acts. It listens for signals. It builds a plan. It executes. Ideally, it also knows when to escalate. Not because you told it to in a script, but because it understood the stakes. That’s not advanced AI. That’s just responsible system design. Never heard of it? Might be a good idea to talk :-)

Agents don’t hallucinate: people hallucinate them

Here’s the uncomfortable truth: most agent hallucinations aren’t the model’s fault. They’re design failures. Someone told the agent it could retrieve knowledge and take action, but never clarified when to do which, or how to resolve conflicts between instructions and context.

The mess isn’t in the model. The mess is in the instructions we shipped and forgot to version.

[💡 So in case you haven’t talked to me in a while: everything is code and should be in source control for versioning and traceability.]

This is where autonomy becomes dangerous: not because the agent is too powerful, but because the environment around it is too fuzzy. No escalation logic. No fallback plans. No logging that anyone actually checks. It’s not that the agent acts alone. It’s that no one takes responsibility when it does.

Autonomy needs a job description

Want autonomy that doesn’t backfire? Treat your agents like new hires. They need:

- Clear goals

- Explicit limits

- A decision framework

- Supervision that kicks in only when needed

In fact, the best mental model might be onboarding a junior colleague. You teach them how to handle 80% of cases. They ask for help on edge cases. Eventually, they escalate less because they’ve learned more. The difference? Your agents won’t magically learn unless you build for that too.
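To make the job description less abstract, here is a minimal Python sketch of those four ingredients as code: a goal, explicit limits (allowed and forbidden actions, an approval ceiling), a decision framework, and supervision that only kicks in when a limit or a confidence threshold is crossed. This is not a Copilot Studio schema or API; `JobDescription`, `decide`, the action names and the thresholds are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACT = "act"            # within limits: proceed without asking
    ESCALATE = "escalate"  # not clearly covered or too risky: ask a human
    REFUSE = "refuse"      # explicitly out of bounds: never do it


@dataclass(frozen=True)
class JobDescription:
    """Hypothetical 'job description' for an agent (not a Copilot Studio schema)."""
    goal: str                          # clear goal, documented alongside the limits
    allowed_actions: frozenset[str]    # explicit limit: what it may do on its own
    forbidden_actions: frozenset[str]  # explicit limit: what it must never do
    max_amount: float                  # explicit limit: e.g. an approval ceiling
    min_confidence: float = 0.8        # below this, supervision kicks in


def decide(job: JobDescription, action: str, amount: float, confidence: float) -> Decision:
    """Decision framework: refuse the forbidden, escalate the unclear or risky, act on the rest."""
    if action in job.forbidden_actions:
        return Decision.REFUSE
    if action not in job.allowed_actions:
        return Decision.ESCALATE  # unknown actions are never assumed to be fine
    if amount > job.max_amount or confidence < job.min_confidence:
        return Decision.ESCALATE
    return Decision.ACT


if __name__ == "__main__":
    helpdesk = JobDescription(
        goal="Resolve hardware requests for employees",
        allowed_actions=frozenset({"create_ticket", "order_accessory"}),
        forbidden_actions=frozenset({"delete_user"}),
        max_amount=200.0,
    )
    print(decide(helpdesk, "order_accessory", 45.0, 0.93))   # Decision.ACT
    print(decide(helpdesk, "order_accessory", 900.0, 0.95))  # Decision.ESCALATE
    print(decide(helpdesk, "delete_user", 0.0, 0.99))        # Decision.REFUSE
```

The design choice worth copying is that escalation is the default for anything not explicitly allowed, so a new capability has to be granted on purpose rather than discovered by accident.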

“Let’s start with retrieval” is how you stay stuck

Organizations love to “start simple.” Let’s build a retrieval agent. Let’s just do FAQs. Let’s just surface policy links. That’s fine, if the goal is to stall. Because retrieval agents don’t scale business value. They reduce helpdesk noise, maybe. But they don’t change how work gets done. The moment you want the agent to take action (submit a request, assign a case, file a report), you’ve stepped into task or autonomous territory. And if your architecture, data model, and governance aren’t ready? You’re back to waiting for a human to fix it.

Build trust into the agent, not around it

We don’t need to wrap every agent in disclaimers and safety rails. We need to build trust into the agent’s behavior. That means:

- Explainability: show users how the agent reached a conclusion

- Escalation: hand over control gracefully when the agent isn’t confident

- Containment: don’t let one bad action cascade across systems

- Auditability: store not just what the agent did, but why
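These four properties can be sketched as a thin wrapper around every action an agent takes. Again, this is a hypothetical Python illustration, not a Copilot Studio feature: `GuardedExecutor`, `AuditRecord`, the 0.8 confidence threshold and the halt-on-first-failure rule (a simple circuit breaker) are assumptions. Each record stores the rationale and evidence behind a decision (explainability and auditability), low confidence is returned as an escalation instead of a guess, and one failed action stops the rest of the run (containment).

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass
class AuditRecord:
    """One entry in the audit trail: what happened *and* why."""
    timestamp: float
    action: str
    outcome: str         # "executed", "escalated", "failed" or "blocked"
    rationale: str       # the agent's stated reason for the decision
    evidence: list[str]  # sources the decision relied on (explainability)


class GuardedExecutor:
    """Hypothetical wrapper: escalation, containment and auditability around actions."""

    def __init__(self, min_confidence: float = 0.8) -> None:
        self.min_confidence = min_confidence
        self.halted = False  # containment: set after the first failure
        self.audit_log: list[AuditRecord] = []

    def _record(self, action: str, outcome: str, rationale: str, evidence: list[str]) -> None:
        self.audit_log.append(AuditRecord(time.time(), action, outcome, rationale, list(evidence)))

    def run(self, action: str, confidence: float, rationale: str,
            evidence: list[str], execute: Callable[[], None]) -> str:
        # Containment: once one action has failed, block everything after it.
        if self.halted:
            self._record(action, "blocked", "run halted after an earlier failure", evidence)
            return "blocked"
        # Escalation: below the threshold, return "escalated" so the caller routes it to a human.
        if confidence < self.min_confidence:
            self._record(action, "escalated",
                         f"confidence {confidence:.2f} < {self.min_confidence:.2f}; {rationale}", evidence)
            return "escalated"
        try:
            execute()
        except Exception as exc:
            self.halted = True
            self._record(action, "failed", f"{rationale}; error: {exc}", evidence)
            return "failed"
        self._record(action, "executed", rationale, evidence)
        return "executed"

    def export(self) -> str:
        """Serialize the audit trail so it can be stored and actually reviewed."""
        return json.dumps([asdict(r) for r in self.audit_log], indent=2)


if __name__ == "__main__":
    guard = GuardedExecutor()

    def ticketing_down() -> None:
        raise RuntimeError("ticketing system unavailable")

    print(guard.run("assign_case", 0.92, "matches VPN routing rule", ["kb:vpn-routing"], lambda: None))  # executed
    print(guard.run("close_case", 0.55, "user may have confirmed the fix", ["chat:turn-7"], lambda: None))  # escalated
    print(guard.run("file_report", 0.90, "weekly summary due", ["calendar"], ticketing_down))  # failed
    print(guard.run("notify_manager", 0.95, "report filed", ["log"], lambda: None))  # blocked
    print(guard.export())
```

Note that the blocked and escalated actions are logged too: an audit trail that only records successes can't tell you why the agent stopped.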

Autonomy isn’t the enemy. It’s the maturity test. And right now, too many orgs are failing it.

Coming up next

- Part 0: Everything is an agent, until it isn’t

- Part 1: When automation bites back – autonomy ≠ chaos [📍 You are here]

- Part 2: Good agents die in default environments – ALM or bust [to be published soon™️]

- Part 3: The cost of (in)action – what you’re really paying for with Copilot Studio [to be published soon™️]

- Part 4: Agents that outlive their creators – governance, risk, and the long tail of AI [to be published soon™️]

- Part 5: From tool to capability – making Copilot Studio strategic [to be published soon™️]
