Azure Synapse Spark Notebook – Unit Testing

Author: Arun Sethia, Program Manager in the Azure Synapse Customer Success Engineering (CSE) team.

Introduction

In this blog post, we cover how to create and run unit test cases for Spark jobs developed in Synapse Notebooks. This post extends my previous blog, Synapse - Choosing Between Spark Notebook vs Spark Job Definition, which discussed how to choose between a Spark Notebook and a Spark Job Definition. Unit testing is an automated approach that developers use to test individual, self-contained units of code. Because it verifies code behavior early, it helps streamline development practices for larger systems.

With a Spark job definition, developers usually write the code in their preferred IDE and deploy the compiled package binaries through the Spark job definition. They can also use the unit test framework of their choice (ScalaTest, pytest, etc.) to create test cases as part of the project codebase.

This blog focuses on writing unit test cases for Notebooks so that you can test them before rolling them out to higher environments. The most common languages in Synapse Notebooks are Python and Scala, and both support functional and object-oriented programming paradigms.

I will not go deep into choosing the best programming paradigm for Spark here; we may pick up that topic another day.

Code organization

Besides being scalable and performant, enterprise systems should be modular, maintainable, configurable, and easy to test. In this blog, we focus on creating unit test cases for Synapse Notebooks in a modular and maintainable way.
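One common way to keep Notebook code easy to test is to put business logic in pure functions and keep reading and writing at the edges, so tests can pass data in and check data out. The sketch below assumes plain Scala collections in place of Spark DataFrames so it runs without a cluster; the `Customer` record and the `CustomerPipeline` object are hypothetical and not part of the example project.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical record type standing in for a DataFrame row.
final case class Customer(id: Int, name: String, country: String)

object CustomerPipeline {
  // Pure business logic: no reads or writes, so it is easy to unit test.
  def normalize(rows: Seq[Customer]): Seq[Customer] =
    rows
      .filter(_.name.trim.nonEmpty) // drop rows with a blank name
      .map(c => c.copy(name = c.name.trim, country = c.country.toUpperCase))

  // Orchestration: I/O is injected as functions, so a test can pass
  // in-memory stand-ins instead of real storage.
  def run(read: () => Seq[Customer], write: Seq[Customer] => Unit): Unit =
    write(normalize(read()))
}

// Test-style usage: in-memory input, captured output, no storage involved.
val captured = ArrayBuffer.empty[Customer]
CustomerPipeline.run(
  read = () => Seq(Customer(1, "  Ada ", "uk"), Customer(2, "   ", "us")),
  write = rows => captured ++= rows
)
assert(captured.toList == List(Customer(1, "Ada", "UK")))
```

In a real Notebook, `read` and `write` would wrap the actual Spark reads and writes, while tests keep using in-memory data.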

Azure Synapse offers several ways to organize Notebook code:

- External Library - Libraries provide reusability and modularity to your application. They also help share business functions and enterprise code across multiple applications. Azure Synapse lets you configure dependencies through library management, and Notebooks can use the installed packages in their jobs. We should avoid writing unit test cases for such an installed library inside the Notebook; plenty of test frameworks are available for unit testing those libraries within the library source code (or outside it). The Notebook then uses APIs from the installed libraries to orchestrate the business process.

Pros and cons of this approach:

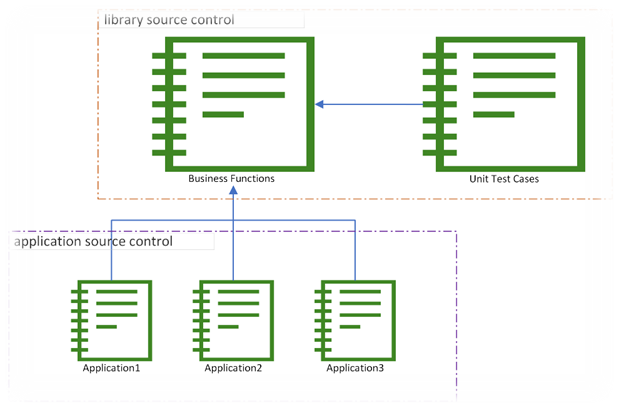

- Functions and unit tests in different Notebooks – Azure Synapse allows you to run (load) one Notebook from another. You can therefore keep reusable code in one Notebook and write its test cases in another. With continuous integration and source control, you can manage versions and releases.

Pros and cons of this approach:

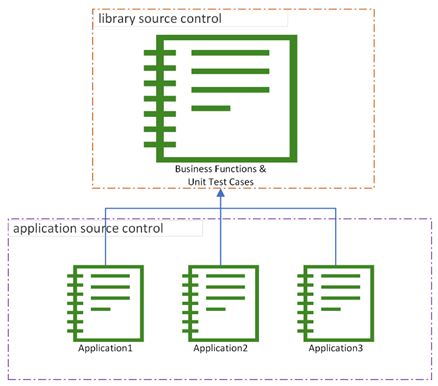

- Functions and unit tests in the same Notebook – Unlike the previous approach, the functions and their test cases live in the same Notebook. You can still use continuous integration and source control for versioning and releases.

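A minimal sketch of the same-Notebook approach, with the business function, its tests, and the business process in one Notebook. It assumes a hypothetical `runUnitTests` flag declared in a parameters cell so a pipeline run could switch the tests off; `Transformations.ageSegment` is an invented example, and plain Scala values stand in for DataFrames.

```scala
// Parameters cell (hypothetical flag). A pipeline run could override it
// to false so that production runs skip the test cell.
val runUnitTests: Boolean = true

// Business function cell.
object Transformations {
  // Maps an age to a hypothetical customer segment label.
  def ageSegment(age: Int): String =
    if (age < 0) "unknown"
    else if (age < 18) "minor"
    else if (age < 65) "adult"
    else "senior"
}

// Unit test cell: same Notebook, guarded by the flag.
if (runUnitTests) {
  assert(Transformations.ageSegment(-1) == "unknown")
  assert(Transformations.ageSegment(17) == "minor")
  assert(Transformations.ageSegment(18) == "adult")  // boundary value
  assert(Transformations.ageSegment(65) == "senior") // boundary value
  println("All Transformations tests passed")
}

// Business process cell: uses the tested function.
val segments = Seq(12, 30, 70).map(Transformations.ageSegment)
```

Guarding the tests with a flag is only one possible design choice; the trade-off of this approach is that the test code ships inside the production Notebook.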

Unit test examples

This blog covers example code for the first two approaches: the external library, and functions and unit tests in different Notebooks. The examples are written in Scala; PySpark examples will be added in the future.

The example project is available on GitHub; you can clone the repository to your local computer.

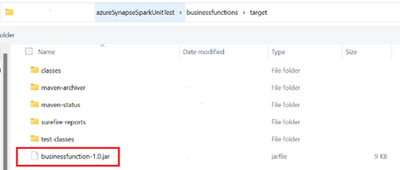

- The businessfunctions folder contains the code for the external library (package) approach. The business function APIs and their unit test cases are part of the same module.

- The Notebook folder contains the Synapse Notebooks used in these examples.

Unit test with external library

As described earlier, this approach does not require writing any unit test cases in the Notebook. Instead, the library source code and its unit test cases live together outside the Notebook.

You can run the test cases and build the library package with Maven (for example, mvn clean package, which runs the test phase before packaging). The target folder will contain the business function jar, and the console output shows the executed test cases. Alternatively, you can download the pre-built jar.
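The sketch below illustrates the shape of the external-library approach: business function APIs plus test cases that run at build time, outside any Notebook. The `Sale` type and the `BusinessFunctions` functions are hypothetical, and plain `assert` calls stand in for a test framework such as ScalaTest so the snippet has no dependencies.

```scala
import scala.util.Try

// Hypothetical types and functions for the business function library.
final case class Sale(category: String, amountCents: Long)

object BusinessFunctions {
  // Applies a percentage discount, truncating to whole cents.
  def applyDiscount(amountCents: Long, percent: Int): Long = {
    require(percent >= 0 && percent <= 100, s"percent out of range: $percent")
    amountCents * (100 - percent) / 100
  }

  // Sums sale amounts per category.
  def totalByCategory(sales: Seq[Sale]): Map[String, Long] =
    sales.groupBy(_.category).map { case (category, rows) =>
      category -> rows.map(_.amountCents).sum
    }
}

// Test cases kept next to the library source (for example under src/test/scala)
// so they run during the build rather than inside a Notebook.
object BusinessFunctionsSpec {
  def runAll(): Unit = {
    assert(BusinessFunctions.applyDiscount(1000L, 10) == 900L)
    assert(BusinessFunctions.applyDiscount(999L, 50) == 499L) // 499.5 truncated
    assert(
      BusinessFunctions.totalByCategory(
        Seq(Sale("books", 500L), Sale("toys", 250L), Sale("books", 100L))
      ) == Map("books" -> 600L, "toys" -> 250L)
    )
    // Out-of-range input is rejected by require.
    assert(Try(BusinessFunctions.applyDiscount(100L, 120)).isFailure)
  }
}

BusinessFunctionsSpec.runAll()
```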

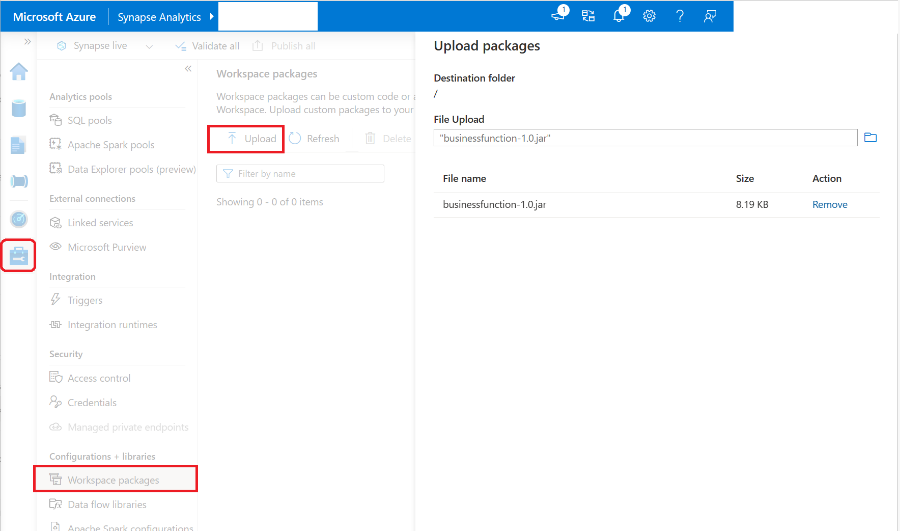

In Synapse Studio, you can add the business function library to your workspace.

Within Azure Synapse, an Apache Spark pool can leverage custom libraries that are uploaded as Workspace Packages.

Once the package is installed on the Spark pool, the Notebook can call the business library functions to build business processes. The source code of this Notebook is available on GitHub.
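With the library installed, the Notebook mainly orchestrates calls into it. In this sketch a small `BusinessLib` object stands in for the installed workspace package (the real package and function names are not shown in this post), and `dailyRevenueReport` is a hypothetical Notebook function; in Synapse you would import from the installed jar instead of defining the stand-in.

```scala
// Stand-in for the installed workspace package (hypothetical names). In Synapse
// the real jar is on the Spark pool classpath and is imported instead.
object BusinessLib {
  final case class Order(id: Int, region: String, amountCents: Long)

  def isValid(o: Order): Boolean = o.amountCents > 0 && o.region.nonEmpty

  def revenueByRegion(orders: Seq[Order]): Map[String, Long] =
    orders.groupBy(_.region).map { case (region, os) => region -> os.map(_.amountCents).sum }
}

import BusinessLib._

// Notebook cell: orchestrates the process by calling library APIs only;
// the business rules themselves stay in the separately tested library.
def dailyRevenueReport(orders: Seq[Order]): List[(String, Long)] =
  revenueByRegion(orders.filter(isValid)).toList.sortBy(_._1)

val report = dailyRevenueReport(Seq(
  Order(1, "east", 1200L), Order(2, "west", 800L), Order(3, "east", 300L),
  Order(4, "", 500L),    // rejected: no region
  Order(5, "west", -50L) // rejected: non-positive amount
))
println(report)
```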

Unit test with functions and unit test Notebooks

As described earlier, this approach requires at least two Notebooks: one for the business functions and one for the unit test cases. The example code is in the notebook folder of the cloned repository.

The business functions are in the BusinessFunctionsLibrary Notebook, and the corresponding test cases are in the UnitTestBusinessFunctionsLibrary Notebook.
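The split between the two Notebooks might look roughly like the following. The first part stands in for a cell of BusinessFunctionsLibrary and the second for UnitTestBusinessFunctionsLibrary; `StringFunctions`, `maskEmail`, `isValidZip`, and the `runTests` helper are hypothetical, not taken from the example project. In Synapse, the test Notebook would first load the functions Notebook (for example with the `%run` magic command), so both parts are shown in one block here only to keep the sketch self-contained.

```scala
import scala.util.Try

// ---- BusinessFunctionsLibrary Notebook (hypothetical functions) ----
object StringFunctions {
  // Keeps the first character of the local part and the domain; masks the rest.
  def maskEmail(email: String): String = email.split("@", 2) match {
    case Array(local, domain) if local.nonEmpty =>
      local.take(1) + "*" * (local.length - 1) + "@" + domain
    case _ => email // not an email address: leave unchanged
  }

  // US-style ZIP code check: exactly five digits.
  def isValidZip(zip: String): Boolean = zip.length == 5 && zip.forall(_.isDigit)
}

// ---- UnitTestBusinessFunctionsLibrary Notebook ----
// Hypothetical minimal harness: runs every case, prints PASS/FAIL,
// and returns the names of failed cases. A thrown exception counts as a failure.
def runTests(cases: Seq[(String, () => Boolean)]): Seq[String] = {
  val results = cases.map { case (name, check) =>
    val passed = Try(check()).getOrElse(false)
    println(s"${if (passed) "PASS" else "FAIL"}: $name")
    (name, passed)
  }
  results.collect { case (name, false) => name }
}

val failed = runTests(Seq(
  "maskEmail masks the local part" ->
    (() => StringFunctions.maskEmail("john.doe@contoso.com") == "j*******@contoso.com"),
  "maskEmail leaves non-email input unchanged" ->
    (() => StringFunctions.maskEmail("not-an-email") == "not-an-email"),
  "isValidZip accepts five digits" -> (() => StringFunctions.isValidZip("98052")),
  "isValidZip rejects letters" -> (() => !StringFunctions.isValidZip("98O52"))
))

// Fail the Notebook run if any case failed.
assert(failed.isEmpty, s"Failed tests: ${failed.mkString(", ")}")
```

Reporting every case before the final assertion lets one run show all broken functions, while the assertion still makes the test Notebook fail when something is wrong.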

Summary

Whether you choose the multiple-Notebook approach or the library approach depends on your enterprise guidelines, personal preference, and timelines.

My next blog will explore code and data quality in Azure Synapse in more depth.
