Structured streaming in Synapse Spark

Author: Ryan Adams is a Program Manager on the Azure Synapse Customer Success Engineering (CSE) team.

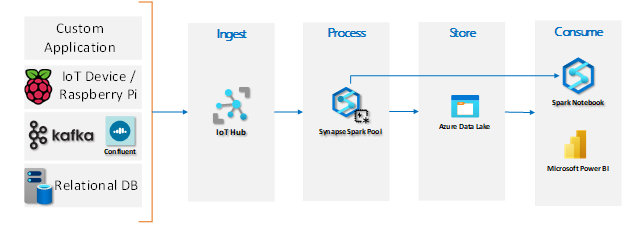

In this post we are going to look at an example of streaming IoT temperature data in Synapse Spark. I have an IoT device that will stream temperature data from two sensors to IoT Hub. We’ll use Synapse Spark to process the data and then write the output to persistent storage. Here is what our architecture will look like:

Prerequisites

Our focus for this post is on streaming the data in Synapse Spark, so a device that sends data and a configured IoT Hub are considered prerequisites. Their setup will not be covered in this article.

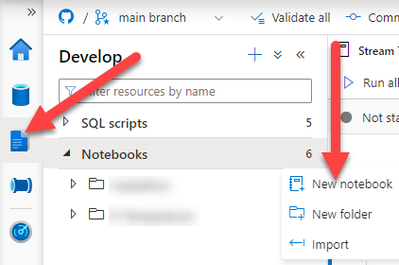

Step 1 – Create a new notebook

The first thing you need to do is create a new notebook in Synapse. Go to the Develop hub and click the ellipsis that appears when you hover your cursor over the Notebooks heading. That will display a drop-down menu where you can select “New notebook”. The first cell of the notebook will initialize the variables and do some setup, which is covered in Step 2.

Step 2 – Setup and configuration

In this step you fill everything in with the service names and keys of your own services. You need to set up your source by providing the name of your IoT Hub or Event Hub along with the connection string and consumer group (if you have one). Your destination will be Azure Data Lake Storage (ADLS), so you need to supply the container and folder path where you want to land the streaming data.

There are two pieces of sensitive information that you do not want to expose in plain text, so you’ll store the key for the storage account and the Event Hub connection string in Azure Key Vault. You can easily call these using mssparkutils once you create a linked service to your Key Vault.
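The setup cell could be sketched roughly as follows. This is a minimal sketch, not the original notebook code: the helper names `adls_path` and `build_eventhub_conf` are hypothetical, every value in angle brackets is a placeholder, and it assumes the Azure Event Hubs connector for Spark is available on the pool. When the source is IoT Hub, the connection string would be the one for its Event Hub-compatible endpoint.

```python
def adls_path(account, container, folder):
    """Build the abfss:// URI for a folder in an ADLS Gen2 container."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder.strip('/')}"


def build_eventhub_conf(connection_string, consumer_group="$Default", encrypt=None):
    """Return the options dictionary for the Event Hubs Spark connector.

    The connector expects an encrypted connection string, so pass its
    encrypt helper as `encrypt` when running on a Spark pool.
    """
    if encrypt is not None:
        connection_string = encrypt(connection_string)
    return {
        "eventhubs.connectionString": connection_string,
        "eventhubs.consumerGroup": consumer_group,
    }


# In a Synapse notebook the pieces would be wired together roughly like this
# (every value in angle brackets is a placeholder to replace with your own):
#
# from notebookutils import mssparkutils
# conn = mssparkutils.credentials.getSecret(
#     "<key-vault-name>", "<connection-string-secret>", "<key-vault-linked-service>")
# storage_key = mssparkutils.credentials.getSecret(
#     "<key-vault-name>", "<storage-key-secret>", "<key-vault-linked-service>")
# eh_conf = build_eventhub_conf(
#     conn, "<consumer-group>",
#     encrypt=sc._jvm.org.apache.spark.eventhubs.EventHubsUtils.encrypt)
# output_path = adls_path("<storage-account>", "<container>", "<folder>")
```

Keeping the secrets in Key Vault means the notebook only ever contains secret names, never the values themselves.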

Step 3 – Start fresh and wipe out ADLS

In case you want to run this action multiple times, you can delete everything in your ADLS container for a fresh start.
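One way to do the cleanup is a recursive delete with `mssparkutils.fs.rm`. The sketch below wraps it in a hypothetical helper, `reset_landing_folder`, that takes the file-system utility as a parameter; the path shown in the usage comment is a placeholder.

```python
def reset_landing_folder(fs, path):
    """Recursively delete everything under `path`.

    `fs` is expected to be `mssparkutils.fs` in a Synapse notebook; the
    second argument to rm() turns on recursive deletion.
    """
    fs.rm(path, True)
    return path


# In a Synapse notebook (placeholder path, replace with your own):
#
# from notebookutils import mssparkutils
# reset_landing_folder(
#     mssparkutils.fs,
#     "abfss://<container>@<storage-account>.dfs.core.windows.net/<folder>")
```

This permanently removes the data, so double-check the path before running the cell. If the folder does not exist yet, the call may raise an error, which is safe to ignore on a first run.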

Step 4 – Start streaming the data

Now that you have everything set up and configured, it’s time to read the data stream and then write it out to ADLS. First you create a data frame that uses the Event Hub format and the Event Hub configuration created in Step 2.
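Reading the stream might look like the sketch below. It assumes the Azure Event Hubs connector for Spark is installed on the pool and that `eh_conf` is the dictionary holding the encrypted connection string and consumer group; the function name `read_event_hub_stream` is hypothetical.

```python
def read_event_hub_stream(spark, eh_conf):
    """Create a streaming data frame from an Event Hub-compatible endpoint.

    `spark` is the SparkSession that Synapse notebooks provide, and
    `eh_conf` is a dict of "eventhubs.*" connector options.
    The resulting data frame carries the message payload in a binary
    column named "body" alongside metadata such as "enqueuedTime".
    """
    return spark.readStream.format("eventhubs").options(**eh_conf).load()


# In a Synapse notebook:
#
# raw_df = read_event_hub_stream(spark, eh_conf)
# raw_df.printSchema()
```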

The next part gets a little tricky because the entire JSON payload of the stream is stored in a single column called “body”. To handle this, we define the schema we want for the landed data and use it to parse “body” into a final data frame. Last, we write the data out to ADLS in parquet format.
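A minimal sketch of the parse-and-write step follows. The field names in `SENSOR_FIELDS` are hypothetical, since the actual payload depends on what your device sends, and the function names are illustrative only. The checkpoint path is an extra setting not mentioned above; the streaming parquet sink needs one to track its progress.

```python
# Hypothetical payload fields; replace with the fields your device sends.
SENSOR_FIELDS = [
    ("deviceId", "STRING"),
    ("sensor", "STRING"),
    ("temperature", "DOUBLE"),
]


def ddl_schema(fields):
    """Turn (name, type) pairs into a DDL schema string for from_json."""
    return ", ".join(f"{name} {dtype}" for name, dtype in fields)


def parse_body(raw_df, schema_ddl):
    """Cast the binary "body" column to a string and expand its JSON fields."""
    # Imported here so the helpers above can be used without Spark installed.
    from pyspark.sql.functions import col, from_json

    parsed = raw_df.select(
        from_json(col("body").cast("string"), schema_ddl).alias("data"))
    return parsed.select("data.*")


def write_parquet_stream(df, output_path, checkpoint_path):
    """Start a streaming query that appends parquet files to ADLS."""
    return (
        df.writeStream.format("parquet")
        .option("path", output_path)
        .option("checkpointLocation", checkpoint_path)
        .outputMode("append")
        .start()
    )


# In a Synapse notebook (raw_df is the streaming data frame read from the
# Event Hub; both paths are abfss:// URIs of your choosing):
#
# final_df = parse_body(raw_df, ddl_schema(SENSOR_FIELDS))
# query = write_parquet_stream(final_df, output_path, checkpoint_path)
```

The query keeps running until it is stopped, for example with `query.stop()`.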

Step 5 – Read the data streamed to ADLS with Spark Pool

Now that we have the data streaming to ADLS in parquet format, we are going to want to read it and validate the output. You could use the Azure portal or Azure Storage Explorer, but it would be much easier to do it right here using Spark in the same notebook.

This part is super easy! We simply configure a few variables for connecting to our ADLS account, read the data into a data frame, and then display the data frame.
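The read-back could be sketched as follows, assuming access to the storage account is granted with its account key. The function names are hypothetical and the values in angle brackets are placeholders.

```python
def account_key_setting(account):
    """Name of the Spark setting that holds an ADLS Gen2 account key."""
    return f"fs.azure.account.key.{account}.dfs.core.windows.net"


def read_landed_data(spark, account, account_key, container, folder):
    """Read the parquet files the stream landed in ADLS into a data frame."""
    spark.conf.set(account_key_setting(account), account_key)
    path = f"abfss://{container}@{account}.dfs.core.windows.net/{folder.strip('/')}"
    return spark.read.parquet(path)


# In a Synapse notebook (storage_key is the secret fetched from Key Vault):
#
# df = read_landed_data(
#     spark, "<storage-account>", storage_key, "<container>", "<folder>")
# display(df)
```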

Conclusion

Spark Pools in Azure Synapse support Spark structured streaming so you can stream data right in your Synapse workspace where you can also handle all your other data streams. This makes managing your data estate much easier. You also have the option of four different analytics engines to suit various use-cases or user personas.

Our team publishes blogs regularly, and you can find them all here: https://aka.ms/synapsecseblog

For a deeper understanding of Synapse implementation best practices, check out our Success by Design (SBD) site at https://aka.ms/Synapse-Success-By-Design.
