Using OpenAI GPT in Synapse Analytics

Azure OpenAI hardly needs an introduction, but for those who managed to evade all tech news lately, let me give you a brief overview. Azure OpenAI provides access to a suite of natural language processing (NLP) models developed by OpenAI. The models can be used in a wide range of applications, including text generation, summarization and translation. Microsoft offers Azure OpenAI as an Azure Cognitive Service, which means that it comes with the same feature set as the existing cognitive services: it is available as an API, and it supports private connectivity and Azure RBAC. In this post I’ll show you how to access Azure OpenAI’s GPT models from within Synapse Spark.

The key to accessing OpenAI from Synapse is SynapseML. If you’ve never heard of SynapseML, you should really check it out! It’s an open-source library that helps you build scalable machine learning pipelines. SynapseML is built on the Apache Spark framework and provides APIs for a variety of machine learning tasks, including text analytics, vision, anomaly detection, and many others. To learn more about its features, check out the Microsoft documentation page or the SynapseML GitHub page.

One of SynapseML’s capabilities is providing simple APIs for pre-built intelligent services, such as Azure Cognitive Services. As I mentioned before, Azure OpenAI is part of the Cognitive Services stack, which makes it accessible from within Synapse Spark pools. So that's exactly what we’re going to do!

To use Azure OpenAI in Synapse Spark, we need three components. Setting them up is out of scope for this article.

- A Synapse Analytics workspace with a Spark Pool

- An Azure OpenAI cognitive service with the text-davinci-003 model deployed

- An Azure Key Vault to store the OpenAI API key

Of course, we’ll need some data to work with. I want to do sentiment analysis on restaurant reviews, so I decided to ask ChatGPT to generate some for me! I put the result in a delimited text file and uploaded it to my workspace’s primary ADLS account.

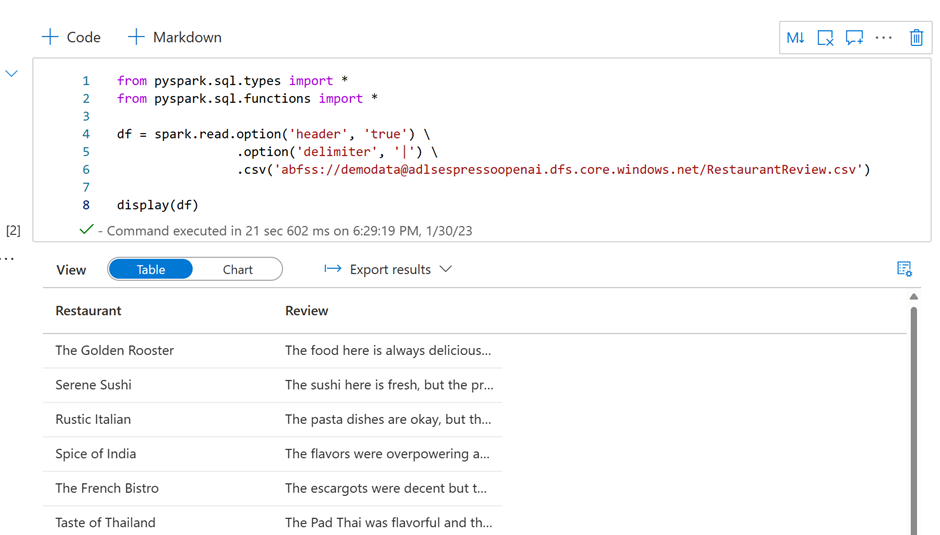

The file that I uploaded to ADLS contains two columns, one with restaurant names and another with their respective reviews. Again, it’s important to note this data is generated by ChatGPT and is not based on actual reviews!

For those interested in using Azure OpenAI and Synapse Spark to generate test data, check out our latest video.

Once we have our data and all necessary components set up, it's time to start writing some code! First up, we need to define some variables. These include the name of your Azure OpenAI service, the name of the deployed GPT model (in this case, I'm using the text-davinci-003 model), and the secret containing your OpenAI API key. It's important to ensure that the managed identity of your Synapse Workspace has the appropriate permissions to access the secret!
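As a rough illustration, the variables described above might be defined as follows. This is a minimal sketch: the resource names are placeholders I made up, and `get_openai_key` is a hypothetical helper that wraps `mssparkutils.credentials.getSecret`, which is only available inside a Synapse notebook.

```python
# Placeholder values (assumptions): replace them with your own resource names.
service_name = "my-openai-service"      # name of the Azure OpenAI resource
deployment_name = "text-davinci-003"    # name given to the deployed GPT model
key_vault_name = "my-key-vault"         # Key Vault that holds the API key
secret_name = "openai-api-key"          # secret containing the OpenAI API key


def get_openai_key(vault: str = key_vault_name, secret: str = secret_name) -> str:
    """Read the OpenAI API key from Azure Key Vault.

    Hypothetical helper. It only works inside a Synapse notebook, where the
    notebookutils module is available, and it authenticates with whatever
    identity the notebook runs under.
    """
    from notebookutils import mssparkutils  # imported lazily: Synapse-only module

    return mssparkutils.credentials.getSecret(vault, secret)
```

In a notebook cell you would then call `key = get_openai_key()` once and reuse `key` when configuring the client.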

Next, we load the data into a dataframe and display it to confirm that everything has been loaded as expected.

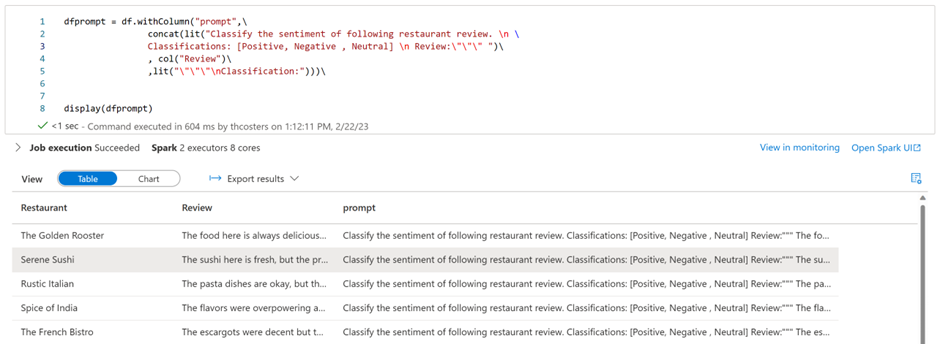
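The load step could look roughly like the sketch below. The container, account and file names are up to you, and the header row and semicolon delimiter are assumptions about the file layout; `adls_path` and `load_reviews` are hypothetical helper names.

```python
def adls_path(container: str, account: str, relative_path: str) -> str:
    """Build an abfss:// URI for a file in an ADLS Gen2 account."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{relative_path.lstrip('/')}"


def load_reviews(spark, path: str, delimiter: str = ";"):
    """Read the delimited review file into a Spark dataframe.

    `spark` is the SparkSession that Synapse notebooks provide by default.
    """
    return (
        spark.read
        .option("header", True)          # assumption: first line holds column names
        .option("delimiter", delimiter)  # assumption: semicolon-delimited file
        .csv(path)
    )
```

In a notebook, `df = load_reviews(spark, adls_path(container, account, file_name))` followed by `display(df)` lets you confirm that both columns came through as expected.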

Now that we have our data in a dataframe, we can begin working with it. To use the Azure OpenAI GPT models, we need to provide them with textual instructions, or prompts. The Azure OpenAI playground is a great place to start developing and testing these prompts. The playground is part of the Azure OpenAI Studio, and to access it you will need an Azure OpenAI resource first. Below you can find the prompt that we’ll be using in Synapse to classify the reviews. We'll be providing OpenAI with three classification options: Positive, Negative, and Neutral.

This translates to the following PySpark code. We create a new dataframe that adds a column called “prompt” to the reviews. This column will be sent to Azure OpenAI as the prompt for classification.

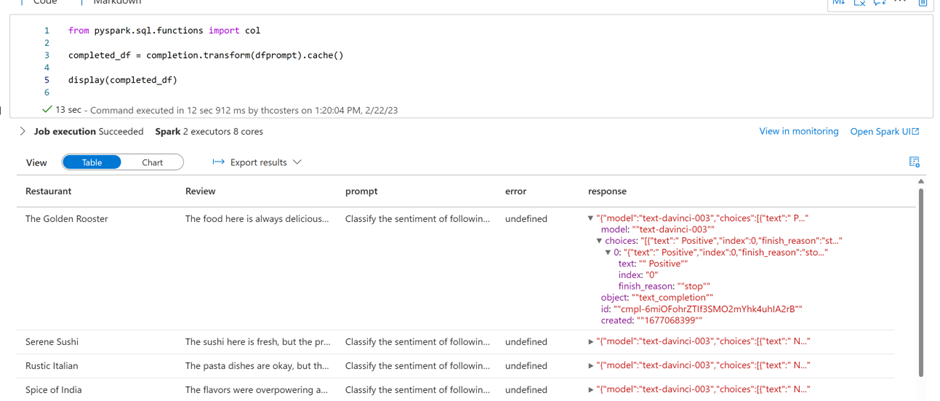
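For readers who cannot see the screenshot, here is a minimal sketch of how such a prompt column might be built. The prompt wording and the `review` column name are my assumptions, not necessarily the ones used in the original notebook.

```python
# Hypothetical prompt wording (assumption); tune it in the playground first.
PROMPT_PREFIX = (
    "Classify the sentiment of the following restaurant review as "
    "Positive, Negative, or Neutral.\n\nReview: "
)
PROMPT_SUFFIX = "\nSentiment:"


def build_prompt(review: str) -> str:
    """Plain-Python version of the prompt, handy for testing in the playground."""
    return f"{PROMPT_PREFIX}{review}{PROMPT_SUFFIX}"


def add_prompt_column(df, review_col: str = "review"):
    """Return a new dataframe with a 'prompt' column built from each review."""
    from pyspark.sql import functions as F  # imported lazily: needs a Spark runtime

    return df.withColumn(
        "prompt",
        F.concat(F.lit(PROMPT_PREFIX), F.col(review_col), F.lit(PROMPT_SUFFIX)),
    )
```

Ending the prompt with "Sentiment:" nudges a completion model to answer with just the label, which keeps the later parsing step simple.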

Next, we'll use the SynapseML library to apply the OpenAI completion service to the dataframe. To do this, we create an OpenAICompletion object, which will serve as a distributed client. It uses the variables we created earlier to define the connection settings. In addition, the following settings are particularly important:

- setPromptCol: The name of the column that contains the prompt

- setErrorCol: The name of the column that will be created to store any errors returned

- setOutputCol: The name of the column that will be created to store the response
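Put together, the client configuration might look like the sketch below. It follows the setter names from the SynapseML OpenAI examples; the import path shown is the one used by older SynapseML releases (newer ones expose the class under `synapse.ml.services.openai`), and the `setMaxTokens` value is my assumption for a one-word answer.

```python
PROMPT_COL = "prompt"      # column holding the prompt text
ERROR_COL = "error"        # column that receives any error returned by the API
OUTPUT_COL = "response"    # column that receives the completion response


def make_completion_client(key: str, service_name: str, deployment_name: str):
    """Create a distributed OpenAICompletion client (requires SynapseML on the pool)."""
    # Imported lazily; adjust the module path to your SynapseML version.
    from synapse.ml.cognitive import OpenAICompletion

    return (
        OpenAICompletion()
        .setSubscriptionKey(key)                # API key read from Key Vault
        .setDeploymentName(deployment_name)     # e.g. the text-davinci-003 deployment
        .setCustomServiceName(service_name)     # name of the Azure OpenAI resource
        .setMaxTokens(10)                       # assumption: a single label is enough
        .setPromptCol(PROMPT_COL)
        .setErrorCol(ERROR_COL)
        .setOutputCol(OUTPUT_COL)
    )
```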

Now that we've set up the OpenAICompletion client, we can start using it. We do this by calling the “transform” function and passing in the dataframe with the prompt column as input. This will create two new columns: "error", which should be empty, and "response", which contains the JSON response from our API call.

The only remaining step is parsing the JSON and extracting the sentiment classification from it.
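The transform and parsing steps could be sketched as follows. I am assuming the response column is a struct containing a `choices` array whose items have a `text` field, which is how SynapseML's completion examples read it. `normalize_label` and `classify` are hypothetical helpers, and mapping unexpected answers to null is my own choice.

```python
LABELS = ("Positive", "Negative", "Neutral")


def normalize_label(raw):
    """Map the raw completion text to one of the three labels, or None."""
    if raw is None:
        return None
    cleaned = raw.strip().strip(".").capitalize()
    return cleaned if cleaned in LABELS else None


def classify(client, prompt_df, output_col: str = "response"):
    """Call the completion service and add a 'sentiment' column."""
    from pyspark.sql import functions as F  # imported lazily: needs a Spark runtime
    from pyspark.sql.types import StringType

    # One API call per row; cache so later actions do not repeat the calls.
    completed_df = client.transform(prompt_df).cache()

    # Assumed response shape: <output_col>.choices[0].text holds the answer.
    first_text = F.col(f"{output_col}.choices.text").getItem(0)
    to_label = F.udf(normalize_label, StringType())
    return completed_df.withColumn("sentiment", to_label(first_text))
```

Rows where "sentiment" is null are worth inspecting together with the "error" column, since they indicate either a failed call or an answer outside the three expected labels.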

That's all you need to do to start using the Azure OpenAI GPT models within Synapse Spark! The GPT models are incredibly versatile, and I'm excited to see what kind of use cases you can come up with. If you need some inspiration, check out the notebooks used in Synapse Espresso, all of which are available on GitHub. Now it's up to you to start scripting and have fun!

References:

- Azure OpenAI: https://learn.microsoft.com/en-us/azure/cognitive-services/openai/overview

- SynapseML: https://microsoft.github.io/SynapseML/

- Azure Cognitive Services: https://learn.microsoft.com/en-us/azure/cognitive-services/what-are-cognitive-services

- GitHub: https://github.com/thcosters/SynapseSparkGPT

- Synapse Analytics Channel: https://www.youtube.com/@AzureSynapse
