Enabling Synapse and Azure ML pipeline workflows

As more customers standardize on the Synapse data platform, enabling machine learning workflows through Azure Machine Learning (Azure ML) becomes particularly interesting. This is especially true as more customers look to bring their data engineering and data science practices together and mature capabilities on both sides.

The goal of this blog post is to highlight how Synapse and Azure ML can work well together to deliver key insights. This is motivated by a scenario where a customer modernized their data platform on Azure Synapse but was looking to improve their data science practices through Azure ML. The focus of this blog is to expose existing functionality, and it is not a “hardened” solution with security or other cloud best practice implementations. The workflow steps also assume some level of comfort with Python and working with the Azure Python SDKs.

The Scenario

Dan Adams LLC is a restaurant business that receives customer reviews from a third-party source. They want to segment customers based on their reviews and use that feedback to improve their services. The reviews will be stored in a data lake, and the final outputs of a machine learning process will be written back to the data lake, where downstream consumers such as Power BI will read them.

To achieve this, the workflow consists of the following steps:

- Set up the basic infrastructure by provisioning a Synapse workspace, a data lake, and an Azure Machine Learning workspace.

- Load parquet files containing customer reviews into the data lake and convert them into the Delta table format.

- Trigger an Azure ML pipeline process with two stages:

  - The first stage makes a REST call to a sentiment API to classify reviews into positive, negative, or neutral categories.

  - The second stage uses a clustering algorithm to classify customers based on their feedback.

By following this workflow, Dan Adams LLC can better understand their customers' feedback and make data-driven decisions to improve their services.

The workflow steps

To follow along with the steps, you can clone this repository. Most steps run locally using the Python SDKs of the various services; only the Delta table conversion step requires running code snippets in a Synapse Studio notebook.

Setup local environment and Azure infrastructure

- A virtual environment should be provisioned on a compute of your choice (for example, your own client machine). You can set up a local virtual environment with various package managers; for this workflow, we will use Conda. If Conda is installed, run the following command:

This will create a Conda environment called synapseops and then activate it in the same command.

- Once activated, install the required dependencies by running:

pip install -r requirements.txt

Installing these dependencies should take a few minutes.

Note: If you run the scripts on an Apple M1 device, you may hit package conflicts with the mltable library. Use an Intel-based machine instead.

The next step is to provision the required Azure resources: a data lake, a Synapse workspace, and an Azure ML workspace, all within the same resource group. The script's default location is the eastus region.

Note: As mentioned in the introduction, this is a minimal viable setup for this workflow. Some aspects, such as assigning the Storage Blob Data Contributor role to enable access, are automated. However, other cloud best practices, such as using managed identities or private networks, are not included.

- First create a local file to store your subscription information. Do this by running the following command:

echo "SUB_ID=<enter your subscription ID>" > sub.env

This creates a file containing your subscription ID, which the bash setup script uses when authenticating.

Note: It may help to sign in to the Azure CLI beforehand by running az login and authenticating to the right tenant and subscription.

- Run the setup script by executing the following command:

This script goes through the following steps:

- Creates an ADLS Gen 2 account (the data lake) with a container called reviews.

- Creates a Synapse workspace with a small Spark pool.

- Creates an Azure ML workspace with associated resources.

- Creates an Azure Cognitive Service for Language (text analytics) resource for sentiment analysis.

If the script completes successfully, a variables.env file is created at the root of the directory. It contains details such as the resource group, location, and workspace names, along with service principal credentials.
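Several later steps read variables.env to authenticate with the service principal credentials. As a minimal sketch, assuming the file holds simple `KEY=VALUE` lines, a Python script could load it with only the standard library. The function name `load_env_file` is hypothetical; the repository's scripts may use a package such as python-dotenv instead.

```python
from pathlib import Path


def load_env_file(path: str) -> dict[str, str]:
    """Parse a simple KEY=VALUE file (such as sub.env or variables.env) into a dict.

    Assumes one assignment per line. Blank lines, comment lines starting with '#',
    and lines without '=' are skipped. Matching quotes around a value are stripped.
    """
    values: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip matching surrounding quotes, e.g. SUB_ID="1234" -> 1234
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values
```

For example, `load_env_file("variables.env")` returns a dict of the stored settings; the exact key names depend on what the setup script writes.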

Note: If there are intermittent failures during provisioning, delete the resource group from the existing run, and re-execute the script.

If all resources were created successfully, you should see resources similar to the following, all contained in the same resource group.

Note: You can see two storage accounts created – the adlsamlwfstrorageacct is the ADLS Gen 2 Data Lake, while the other storage account is created as part of the default provisioning experience for Azure ML to store experiment runs, code snapshots and other Azure ML artifacts.

Sample data and converting to Delta table format

Next, we will run a Python script locally to generate some sample data; the resulting dataset also merges in some static customer review data. The script should create a local folder called generated-data containing 10 Parquet files of 100 records each. Each file has the following schema:

- Name (name of the customer)

- Address (address of the customer)

- IPv4 (IP address of the customer)

- Customer_review (review by the customer)
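The generator script itself is not reproduced in this post. The following is a hypothetical, dependency-free sketch of the same output shape: 10 batches of 100 records, each with the Name, Address, IPv4 and Customer_review fields. The placeholder names, addresses, and review strings are invented for illustration; the repository's script merges in its own static review data.

```python
import ipaddress
import random

FIELDS = ("Name", "Address", "IPv4", "Customer_review")

# Hypothetical placeholder values, used only to illustrate the schema.
_FIRST = ["Ana", "Ben", "Chen", "Dana", "Eli"]
_LAST = ["Lopez", "Smith", "Wong", "Patel", "Novak"]
_STREETS = ["Main St", "Oak Ave", "Pine Rd", "Lake Dr"]
_REVIEWS = [
    "The food was excellent and the staff were friendly.",
    "Service was slow and my order was cold.",
    "It was fine, nothing special.",
]


def make_record(rng: random.Random) -> dict:
    """Build one review record matching the four-column schema."""
    return {
        "Name": f"{rng.choice(_FIRST)} {rng.choice(_LAST)}",
        "Address": f"{rng.randint(1, 9999)} {rng.choice(_STREETS)}",
        # A random 32-bit integer rendered as a dotted IPv4 address.
        "IPv4": str(ipaddress.IPv4Address(rng.getrandbits(32))),
        "Customer_review": rng.choice(_REVIEWS),
    }


def generate_batches(num_files: int = 10, records_per_file: int = 100,
                     seed: int = 0) -> list[list[dict]]:
    """Return num_files batches of records_per_file records (one batch per Parquet file)."""
    rng = random.Random(seed)
    return [[make_record(rng) for _ in range(records_per_file)] for _ in range(num_files)]
```

Each batch could then be written to its own file, for example with pandas `DataFrame(batch).to_parquet(path)`, which needs pyarrow or fastparquet installed; the file names are up to you.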

To generate these files, run the following command:

Confirm that the generated-data folder has been created.

Once the files have been generated, upload them to the data lake by running the following command:

This script uses the Azure Storage SDK for Python to upload the files to the appropriate container and file path. The output should look similar to the screenshot below, with the files stored under the folder path reviews/listings.

Note: The parquet files are numbered from 0 to 9.
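An upload like this can be sketched with the `azure-storage-blob` package, assuming the files sit in generated-data and should land under listings in the reviews container. The helper names are hypothetical, `account_url` would take the form `https://<storage-account>.blob.core.windows.net`, and `credential` could be any Azure credential object, for example an `azure.identity.ClientSecretCredential` built from the service principal values in variables.env.

```python
from pathlib import Path


def blob_names(local_dir: str, folder: str = "listings") -> dict[Path, str]:
    """Map each local .parquet file to its target blob name under `folder`."""
    return {p: f"{folder}/{p.name}" for p in sorted(Path(local_dir).glob("*.parquet"))}


def upload_listings(account_url: str, credential, local_dir: str = "generated-data",
                    container: str = "reviews", folder: str = "listings") -> None:
    """Upload the generated Parquet files to <container>/<folder> in the data lake."""
    # Imported here so blob_names() can be used without the Azure SDK installed.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient(account_url=account_url, credential=credential)
    container_client = service.get_container_client(container)
    for local_path, name in blob_names(local_dir, folder).items():
        with open(local_path, "rb") as data:
            # overwrite=True makes re-running the upload idempotent.
            container_client.upload_blob(name=name, data=data, overwrite=True)
```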

Now that the files are in the data lake, the next step is to convert them to the Delta table format. This is done by running a series of code snippets in a Synapse Studio notebook.

- Open Synapse Studio, navigate to Data, and select Linked. Under Linked, there should be the ADLS Gen 2 account entitled reviews. Select this source and expand listings. On any one of the files, select New Notebook and Load to Dataframe. This should open a notebook with the first cell pre-populated with PySpark code to load the selected file into a data frame.

- Notebook operations:

  - In the notebook, replace the pre-populated code with the following and run it. The only change is that it loads all 10 Parquet files into the dataframe instead of only the selected one.

  - Then, check the row count. Each Parquet file should have 100 records, so 1000 records in total should be loaded into the dataframe.

  - Finally, save this dataframe in the Delta table format using the code snippet below. Note that this will be saved to a new file path in the data lake called customer-reviews:

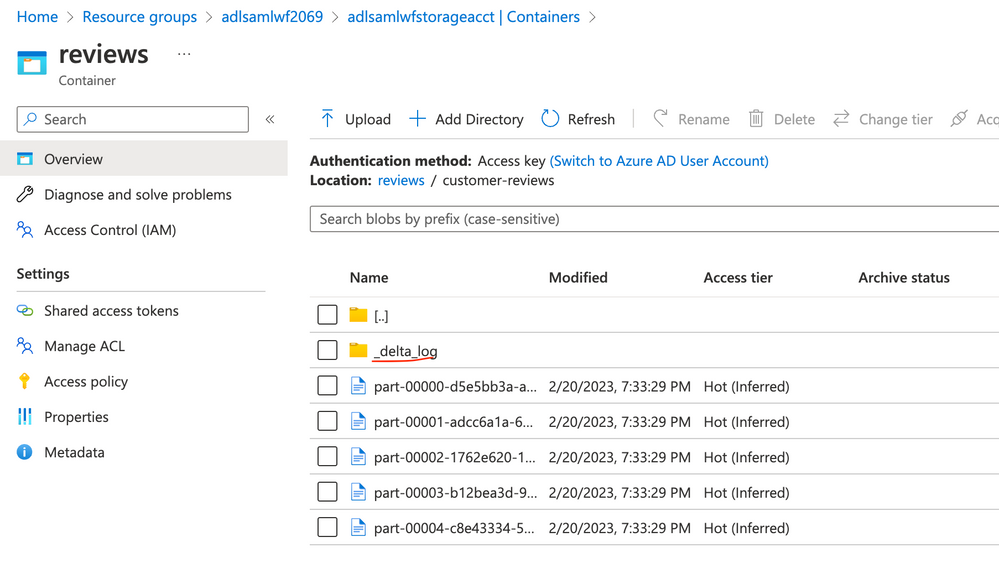

When this step completes, the new customer-reviews file path should contain the data as Parquet files in Delta table format, as shown below.
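The notebook code is not reproduced in text here, so the following is a hedged PySpark sketch of the same three operations (load all files, count rows, save as Delta). It assumes the `spark` session that Synapse notebooks predefine, the account name adlsamlwfstrorageacct from the resource list (yours may differ), and that customer-reviews sits in the same reviews container. The function names are hypothetical.

```python
# Intended for a Synapse notebook, where the `spark` session is predefined.
ACCOUNT = "adlsamlwfstrorageacct"  # assumed ADLS Gen 2 account name; check your deployment
CONTAINER = "reviews"


def abfss(account: str, container: str, path: str) -> str:
    """Build an ADLS Gen 2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"


def convert_to_delta(spark, account: str = ACCOUNT, container: str = CONTAINER):
    """Load all listings Parquet files and save them as a Delta table under customer-reviews."""
    # The wildcard picks up all 10 files instead of only the one selected in Synapse Studio.
    df = spark.read.load(abfss(account, container, "listings/*.parquet"), format="parquet")
    print(df.count())  # 10 files x 100 records each should give 1000
    df.write.format("delta").mode("overwrite").save(abfss(account, container, "customer-reviews"))
    return df
```

In the notebook, calling `convert_to_delta(spark)` runs all three steps.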

Note: Why convert to Delta table format? In short, to bring ACID transactions and time travel to Parquet files. This is particularly useful in data science scenarios where you may want to compare datasets at various points in time. To learn more, check out What is Delta Lake.
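To make the time travel point concrete: Delta Lake lets a reader pin a table version with the `versionAsOf` read option (or `timestampAsOf` for a point in time). A small sketch, again assuming a Synapse `spark` session and hypothetical helper names, that compares row counts between two versions of a Delta table:

```python
def read_delta_version(spark, path: str, version: int):
    """Read a Delta table as it existed at a given version (Delta Lake time travel)."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)


def compare_versions(spark, path: str, old: int, new: int) -> tuple[int, int]:
    """Return (row count at `old`, row count at `new`) for a quick snapshot comparison."""
    return (read_delta_version(spark, path, old).count(),
            read_delta_version(spark, path, new).count())
```

Version 0 is the table as first written; each later overwrite or append creates a new version.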

Running the Azure ML pipeline

Next, we head to Azure ML to register the data lake as a ‘datastore’.

Note: This does not create any new storage. It only stores a reference to the existing data lake, along with the service principal credentials used to access it.

This is done by running the following command at the root of the repository:

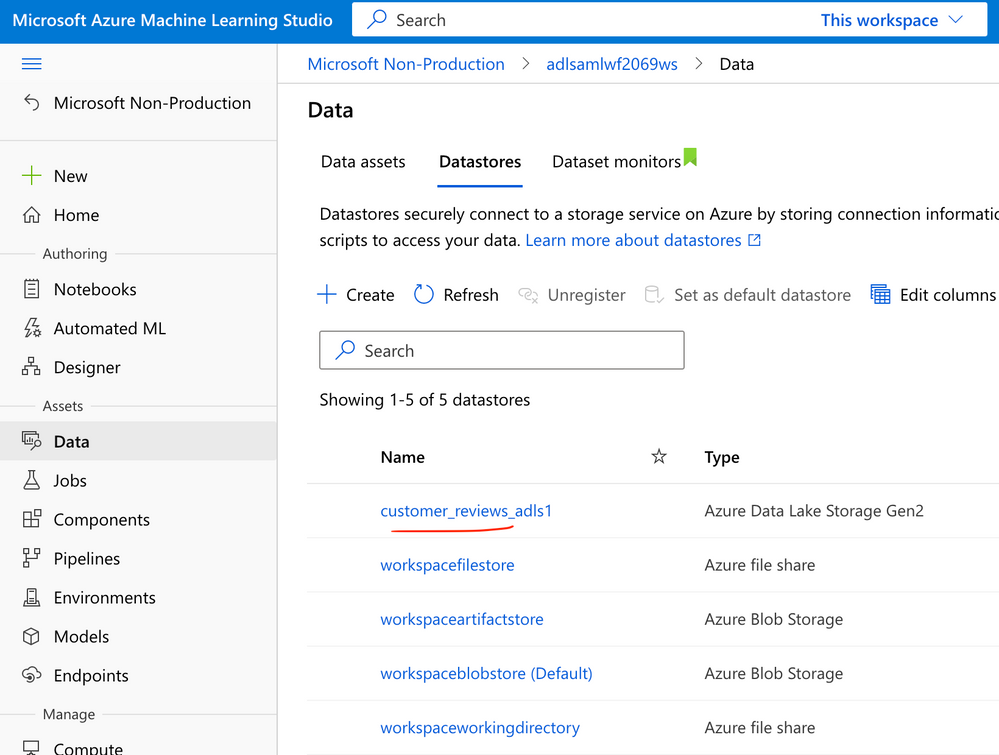

This command also uses the variables.env file created by the setup script to authenticate to Azure ML with the service principal credentials. If it succeeds, open the Azure ML Studio workspace; under Data, you should see a datastore called customer_reviews_adls1.
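For reference, registering an ADLS Gen 2 datastore with the Azure ML Python SDK v2 (`azure-ai-ml`) looks roughly like the sketch below. It is not the repository's script: the dictionary keys (`TENANT_ID`, `AML_WORKSPACE`, and so on) are hypothetical names for the values the setup script stores in variables.env, and the filesystem is assumed to be the reviews container.

```python
# Hypothetical key names for values loaded from variables.env.
REQUIRED_KEYS = ("SUB_ID", "RESOURCE_GROUP", "AML_WORKSPACE", "ADLS_ACCOUNT",
                 "TENANT_ID", "CLIENT_ID", "CLIENT_SECRET")


def missing_keys(env: dict) -> list[str]:
    """List required settings that are absent or empty."""
    return [k for k in REQUIRED_KEYS if not env.get(k)]


def register_adls_datastore(env: dict, name: str = "customer_reviews_adls1",
                            filesystem: str = "reviews"):
    """Register an existing ADLS Gen 2 filesystem as an Azure ML datastore."""
    missing = missing_keys(env)
    if missing:
        raise ValueError(f"missing settings: {', '.join(missing)}")

    # Imported here so the validation above works without azure-ai-ml installed.
    from azure.ai.ml import MLClient
    from azure.ai.ml.entities import AzureDataLakeGen2Datastore, ServicePrincipalConfiguration
    from azure.identity import ClientSecretCredential

    credential = ClientSecretCredential(env["TENANT_ID"], env["CLIENT_ID"], env["CLIENT_SECRET"])
    ml_client = MLClient(credential, env["SUB_ID"], env["RESOURCE_GROUP"], env["AML_WORKSPACE"])
    store = AzureDataLakeGen2Datastore(
        name=name,
        account_name=env["ADLS_ACCOUNT"],
        filesystem=filesystem,
        # Only a reference plus credentials is stored; no new storage is created.
        credentials=ServicePrincipalConfiguration(
            tenant_id=env["TENANT_ID"],
            client_id=env["CLIENT_ID"],
            client_secret=env["CLIENT_SECRET"],
        ),
    )
    return ml_client.datastores.create_or_update(store)
```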

To support the Azure ML pipeline, we also need a compute cluster and an environment (a Docker image plus a Conda file) to run our code. Azure ML provides pre-built environments that make deployment faster, but because we need to add the Azure Cognitive Service for Language capability, we'll build a custom image. First, create the compute cluster by executing the following command:

This will create a cluster in Azure ML with a maximum capacity of 4 nodes and a minimum capacity of 1 node.

Note: A best practice is to set the minimum capacity to 0 so that the cluster scales down to zero nodes once its tasks are complete and idle nodes do not keep running.

If this succeeds, the output should look like the following screenshot.
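Creating such a cluster with `azure-ai-ml` can be sketched as follows, using the best practice of a minimum of 0 nodes (the script in this walkthrough uses 1) and a maximum of 4. The cluster name, VM size, and 120-second idle timeout are assumptions, and `ml_client` is assumed to be an authenticated `MLClient`.

```python
def cluster_spec(name: str = "cpu-cluster", size: str = "STANDARD_DS3_V2",
                 min_nodes: int = 0, max_nodes: int = 4) -> dict:
    """Keyword arguments for an AmlCompute cluster; min_nodes=0 lets it scale to zero when idle."""
    if not 0 <= min_nodes <= max_nodes:
        raise ValueError("require 0 <= min_nodes <= max_nodes")
    return {
        "name": name,  # hypothetical cluster name
        "size": size,  # hypothetical VM size
        "min_instances": min_nodes,
        "max_instances": max_nodes,
        "idle_time_before_scale_down": 120,  # seconds an idle node waits before release
    }


def create_cluster(ml_client, **kwargs):
    """Create or update the cluster and block until provisioning finishes."""
    # Imported here so cluster_spec() can be used without azure-ai-ml installed.
    from azure.ai.ml.entities import AmlCompute

    cluster = AmlCompute(**cluster_spec(**kwargs))
    return ml_client.compute.begin_create_or_update(cluster).result()
```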

After this, create a custom environment. To do this, run the following command:

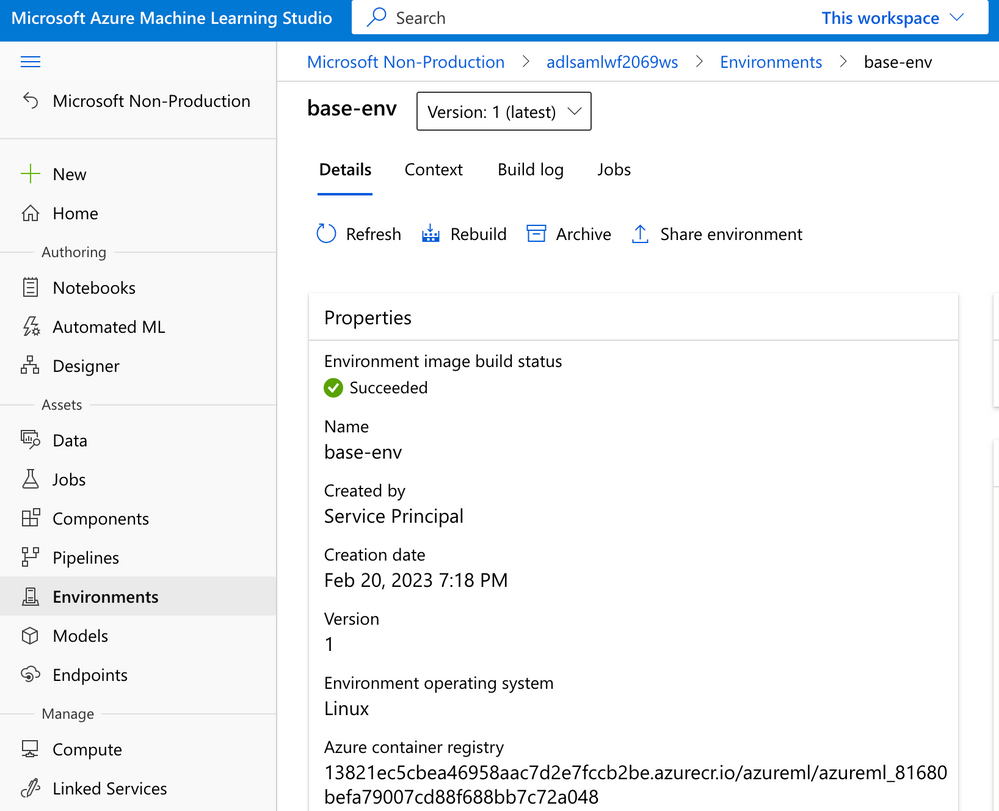

This builds a container image from the environment specification in the script and saves it to a container registry in the same resource group. When an Azure ML pipeline is triggered, this image runs on the provisioned compute to provide the runtime for the Python scripts. A successfully built environment looks like the following:
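A custom environment of this kind can be defined in `azure-ai-ml` as a Conda file layered on an Azure ML base image; a hedged sketch follows. The package list, environment name, and base image tag are assumptions rather than the repository's actual specification. `azure-ai-textanalytics` is the Python client library for the Language service's sentiment API.

```python
def conda_yaml(python_version: str = "3.9",
               pip_packages=("azure-ai-textanalytics", "mltable", "scikit-learn", "pandas")) -> str:
    """Render a minimal Conda environment file as YAML text (package list is an assumption)."""
    lines = [
        "name: pipeline-env",
        "channels:",
        "  - conda-forge",
        "dependencies:",
        f"  - python={python_version}",
        "  - pip",
        "  - pip:",
    ]
    lines += [f"      - {pkg}" for pkg in pip_packages]
    return "\n".join(lines) + "\n"


def register_environment(ml_client, conda_path: str = "conda.yml", name: str = "reviews-env"):
    """Write the Conda file and register an environment built on an Azure ML base image."""
    # Imported here so conda_yaml() can be used without azure-ai-ml installed.
    from azure.ai.ml.entities import Environment

    with open(conda_path, "w", encoding="utf-8") as f:
        f.write(conda_yaml())
    env = Environment(
        name=name,
        image="mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest",  # assumed base image
        conda_file=conda_path,
    )
    return ml_client.environments.create_or_update(env)
```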

One recent addition to Azure ML that helps structure data loading and parse multiple files is the MLTable file: essentially, a specification of how to read data. Check out Working with tables in Azure Machine Learning to learn more.

This is particularly helpful because the MLTable specification supports Delta tables. The MLTable file must sit in the same directory as the Delta table files. To create it there, execute the following command:

If this completes successfully, the Delta table file path should look similar to the screenshot below.
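The `mltable` package can also build such a specification programmatically. A minimal sketch, assuming `mltable.from_delta_lake` and `MLTable.save` from that package, the reviews container, and a hypothetical local output folder. In this workflow the resulting MLTable file still has to be placed alongside the Delta files.

```python
def delta_uri(account: str, container: str = "reviews", folder: str = "customer-reviews") -> str:
    """ADLS Gen 2 URI of the Delta table folder (container and folder are assumptions)."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder}"


def write_delta_mltable(account: str, local_dir: str = "./customer-reviews-mltable",
                        version=None):
    """Create an MLTable spec that reads the Delta table and save it to `local_dir`."""
    # Imported here so delta_uri() can be used without the mltable package installed.
    import mltable

    # version=None reads the latest version; an int pins a specific Delta version.
    tbl = mltable.from_delta_lake(delta_uri(account), version_as_of=version)
    tbl.save(local_dir)
    return tbl
```

Once saved, `tbl.to_pandas_dataframe()` materializes the table for a quick local check.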

The final step is to trigger the Azure ML pipeline. Do this by executing the following command:

As mentioned previously, this has two stages:

- The first stage rolls up the delta files and then uses the sentiment API as part of the Azure Cognitive Service for Language to classify each customer review.

- The second stage takes those customer reviews and clusters each customer into discrete groups using a K-Means clustering algorithm.
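The first stage's sentiment call can be sketched with the `azure-ai-textanalytics` client. Assumptions: the endpoint and key come from the Language resource created by the setup script, and reviews are sent in batches of 10, the per-request document limit assumed here for sentiment analysis. `classify_reviews` and `batched` are hypothetical names.

```python
from collections.abc import Iterator


def batched(items: list, size: int) -> Iterator[list]:
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def classify_reviews(reviews: list[str], endpoint: str, key: str,
                     batch_size: int = 10) -> list[str]:
    """Return one label per review: 'positive', 'neutral', 'negative', 'mixed', or 'error'."""
    # Imported here so batched() can be used without the Azure SDK installed.
    from azure.ai.textanalytics import TextAnalyticsClient
    from azure.core.credentials import AzureKeyCredential

    client = TextAnalyticsClient(endpoint=endpoint, credential=AzureKeyCredential(key))
    labels: list[str] = []
    for chunk in batched(reviews, batch_size):
        # Results come back in the same order as the input documents.
        for doc in client.analyze_sentiment(chunk):
            labels.append("error" if doc.is_error else doc.sentiment)
    return labels
```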

Note: This is not a sophisticated use of K-Means clustering. Each review has already been labeled positive, neutral, or negative, so the algorithm essentially recovers that split when segmenting customers into clusters. The point is mainly to demonstrate how to orchestrate multi-stage pipelines, whether for data preparation or model building.
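To illustrate the note above, here is a dependency-free K-Means (Lloyd's algorithm) sketch; it is not the pipeline's own clustering code, which is not shown in this post. When each customer is represented only by a one-hot encoding of their sentiment label, there are exactly three distinct points, so with k = 3 every cluster simply collects one label.

```python
SENTIMENTS = ("positive", "neutral", "negative")


def one_hot(label: str) -> tuple[float, ...]:
    """Encode a sentiment label as a one-hot vector."""
    return tuple(1.0 if label == s else 0.0 for s in SENTIMENTS)


def sq_dist(a, b) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: list[tuple[float, ...]], k: int, iters: int = 20) -> list[int]:
    """Plain Lloyd's algorithm; returns a cluster index for each point.

    Centroids start at the first k distinct points (a simple deterministic choice,
    not the k-means++ seeding most libraries use).
    """
    centroids = list(dict.fromkeys(points))[:k]
    if len(centroids) < k:
        raise ValueError("need at least k distinct points")
    assignment: list[int] = []
    for _ in range(iters):
        # Assignment step: each point goes to its nearest centroid.
        new = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new == assignment:
            break  # converged: no point changed cluster
        assignment = new
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return assignment
```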

If the pipeline completes successfully, it should look like the following screenshot.

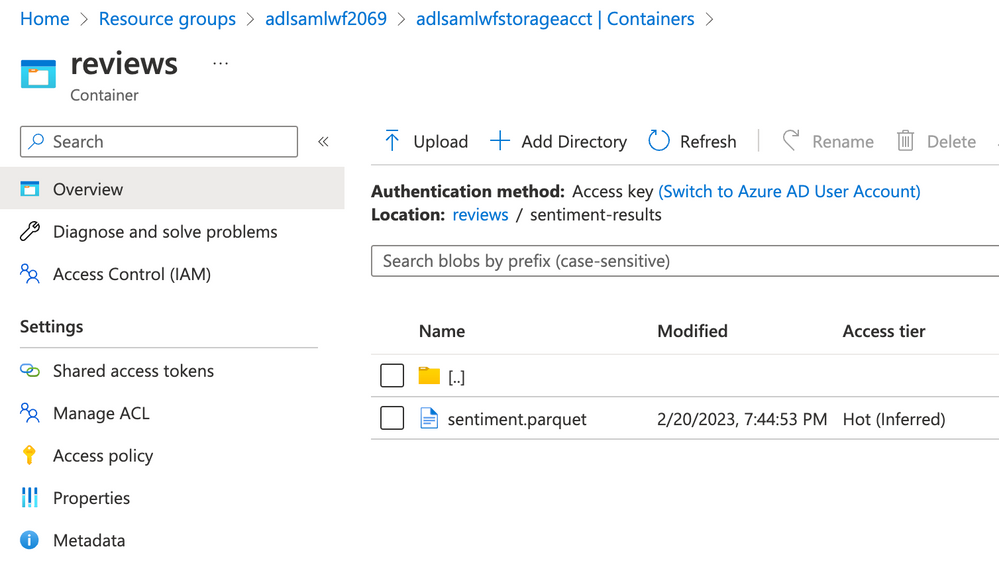

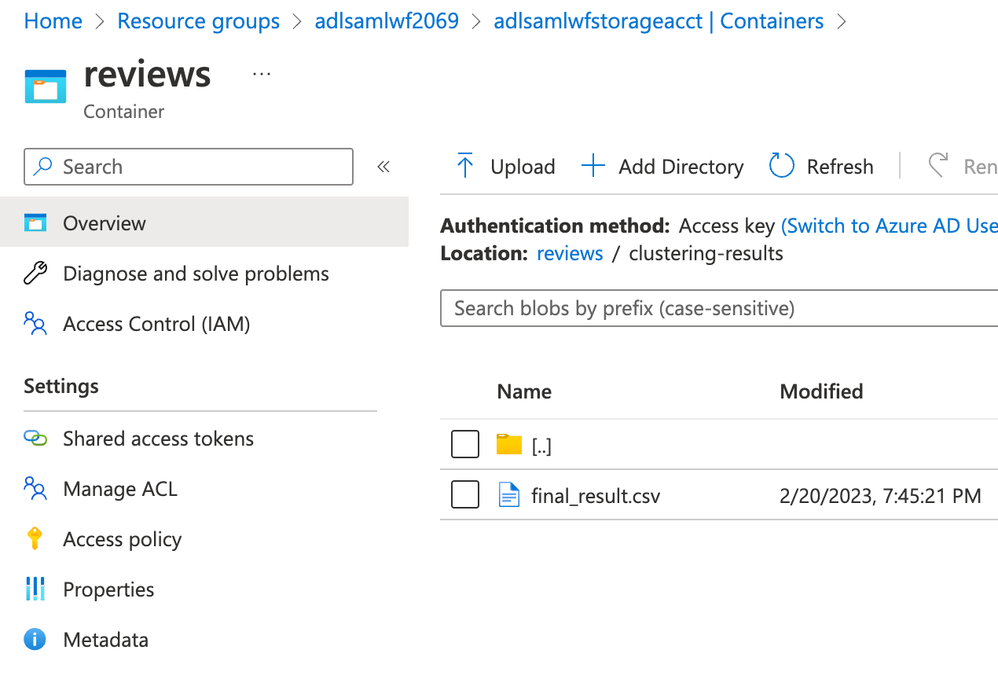

The outputs of the pipeline stages are also written to the reviews/sentiment-results and reviews/clustering-results file paths (see screenshots below). The latter is the final dataset consumed by downstream processes.

Final points

As demonstrated above, it's straightforward to orchestrate workflows using Azure Synapse and Azure ML to build robust data engineering and data science practices. To summarize, we demonstrated a workflow that leverages Delta table files from Synapse in Azure ML. This workflow includes the following steps:

- Provisioning the required infrastructure and access between services

- Generating data and converting it to the Delta table format

- Using this data to enrich and further analyze with machine learning workflows in Azure ML

This is a common workflow that many customers embrace when adopting a Delta Lake architecture. It is a key step to delivering timely insights and helping customers improve their services.

To learn more about either service, check out the following documentation:
