Synapse - Choosing Between Spark Notebook vs Spark Job Definition

Author(s): Arun Sethia and Arshad Ali are Program Managers in the Azure Synapse Customer Success Engineering (CSE) team.

Introduction

Businesses use Apache Spark applications for big data processing (ELT/ETL loads), machine learning, and complex analytics. Spark applications broadly fall into three categories:

- Batch Application – Execution of a series of job(s) on a Spark runtime without manual intervention, such as long-running processes for data transformation and load/ingestion.

- Interactive Application – An application that requests user input or visualizes output, for example, visualizing data during model training.

- Stream Application – Continuous data processing coming from an upstream system.

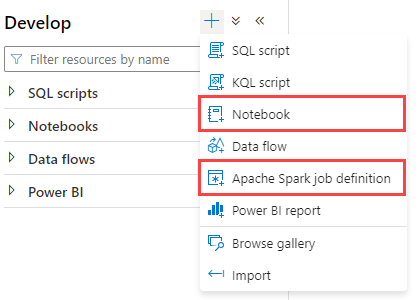

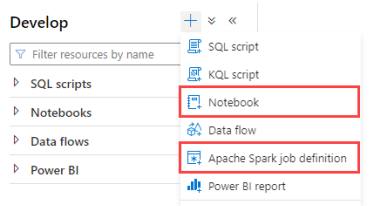

You can develop Spark applications using the Notebook integrated into Synapse Studio (a web-based interactive interface), or develop them locally in your preferred IDE (Integrated Development Environment) and deploy them using a Spark Job Definition (SJD).

This blog will help you decide between Notebooks and Spark Job Definition (SJD) for developing and deploying Spark applications with Azure Synapse Spark Pool.

Synapse Spark Development Using Notebook

Overview

A Synapse Spark Notebook is a web-based (HTTP/HTTPS) interactive interface for creating files that contain live code, narrative text, and visualized output, with rich libraries for Spark-based applications. Data engineers can collaborate on, schedule, run, and test their Spark application code using Notebooks. Notebooks are a good place to validate ideas and run quick experiments to get insight into the data. You can also integrate a Synapse Notebook into a Synapse pipeline.

The Notebook allows you to combine programming code with markdown text and perform simple visualizations (using Synapse Notebook chart options and open-source libraries). In addition, running code will supply immediate feedback, output, and progress tracking within Notebook.

Notebooks consist of cells, which are individual blocks of code or text. Each block of code is executed on the serverless Apache Spark Pool remotely and provides real-time job progress indicators to help you to understand execution status.

Development

Synapse Notebooks support five Apache Spark languages: PySpark (Python), Spark (Scala), Spark SQL, .NET Spark (C#), and R. You can set the primary language for a Notebook. In addition, the Notebook supports line magic commands (denoted by a single % prefix, operating on a single line of input) and cell magic commands (denoted by a double %% prefix, operating on multiple lines of input). You can list all available magic commands in the Synapse Notebook using the %lsmagic command. Magic commands are fully compatible with IPython (see Azure Synapse Analytics July Update 2022 - Microsoft Community Hub).

You can use multiple languages in one Notebook by specifying the correct language magic command at the beginning of a cell. The following table lists the magic commands to switch cell languages.

| Magic Command | Description |
|---|---|
| %%pyspark | Python code |
| %%spark | Scala code |
| %%sql | SparkSQL code |
| %%csharp | .NET for Spark C# |
| %%sparkr | R Language (currently in Public Preview) |

Synapse Notebooks are integrated with the Monaco editor to bring IDE-style IntelliSense (syntax highlighting, error markers, and automatic code completion) to the cell editor. This helps developers write code and identify issues more quickly.

Because Notebooks allow different languages in different cells, data or variables created in one language can be shared with cells written in another language through temporary ("temp") tables.
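As a minimal sketch of the temp table approach, the PySpark helpers below register a DataFrame under a name that cells in other languages can query. The function names `share_dataframe` and `read_shared` and the view name `shared_orders` are hypothetical and chosen for illustration; `createOrReplaceTempView` and `spark.table` are standard Spark APIs.

```python
def share_dataframe(df, view_name):
    """Register `df` as a session-scoped temporary view and return the view name.

    Other cells in the same Spark session (for example %%sql or %%spark cells)
    can then refer to the data by this name.
    """
    # Hypothetical guard: keep the name a simple identifier so it is safe in SQL.
    if not view_name.isidentifier():
        raise ValueError(f"{view_name!r} is not a simple identifier")
    df.createOrReplaceTempView(view_name)
    return view_name


def read_shared(spark, view_name):
    """Load a DataFrame back from a temporary view in the same Spark session."""
    return spark.table(view_name)


# Intended use inside a Notebook (shown as comments because it needs a live session):
#
#   %%pyspark cell:
#       share_dataframe(orders_df, "shared_orders")   # orders_df is a placeholder DataFrame
#
#   %%sql cell:
#       SELECT COUNT(*) FROM shared_orders
```

Temporary views live only as long as the Spark session, so they suit hand-offs between cells rather than long-term storage.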

Spark Session Configuration

You can configure or modify Spark Session settings using multiple methods.

- %%configure magic command - The Spark session needs to restart for the settings to take effect. Therefore, running %%configure at the beginning of your Notebook is recommended.

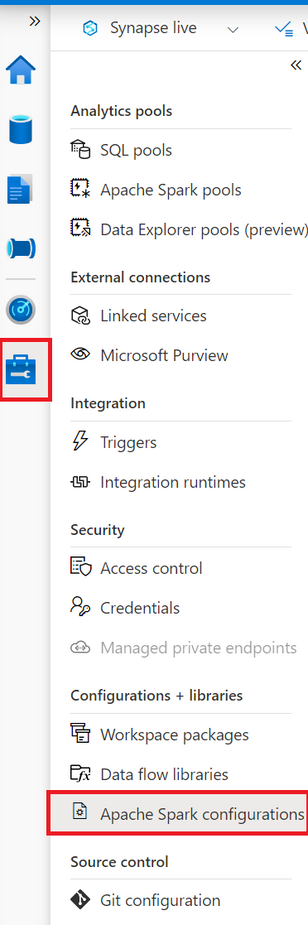

- Spark Configuration provided by Synapse Studio - You can create a Spark configuration using Synapse Studio -> Manage -> Spark Configuration and assign it to a Notebook.

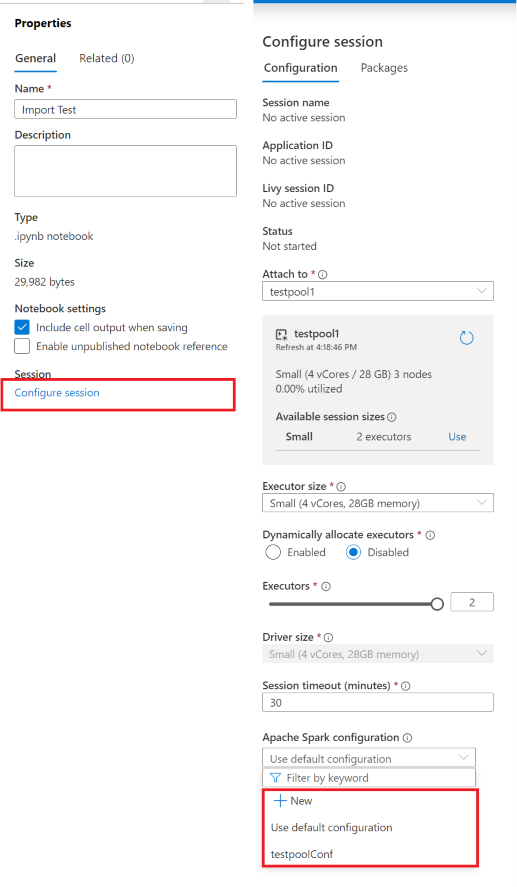

- You can select an existing Spark Configuration from the Notebook or create a new one.

Screenshots: Create Spark Configuration; Configure Session from Notebook
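The %%configure option listed above takes a JSON body. The sketch below builds the text of such a cell in Python so the shape is easy to see; the helper name `build_configure_cell` is hypothetical, and the sizes and the Spark property shown are placeholder values, not recommendations. The field names follow the Livy-style session properties (`driverMemory`, `executorCores`, `conf`, and so on).

```python
import json


def build_configure_cell(session_settings, spark_conf):
    """Return the text of a %%configure cell for the given settings.

    session_settings: Livy-style session properties (memory, cores, executors).
    spark_conf: Spark property names mapped to string values.
    """
    body = dict(session_settings)
    body["conf"] = dict(spark_conf)
    return "%%configure\n" + json.dumps(body, indent=4)


# Placeholder values for illustration only.
cell_text = build_configure_cell(
    {
        "driverMemory": "28g",
        "driverCores": 4,
        "executorMemory": "28g",
        "executorCores": 4,
        "numExecutors": 2,
    },
    {"spark.sql.shuffle.partitions": "200"},
)
print(cell_text)
```

Pasting the printed text into the first cell of a Notebook keeps the session restart at the start of the run, before any other cell has executed.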

Note: Synapse pipeline allows you to create and schedule complex ETL workflows from disparate data stores. Only the following magic commands are supported in Synapse pipeline: %%pyspark, %%spark, %%csharp, %%sql.

Deploy

You can save a single Notebook or all Notebooks in your workspace. You can run a Notebook without saving it; saving a Notebook publishes a version to the cluster. To save the changes you made to a single Notebook, select the Publish button on the Notebook command bar.

By default, Synapse Studio authors directly against the Synapse service. You can also collaborate using Git for source control; Synapse Studio allows you to associate your workspace with a Git repository in Azure DevOps or GitHub.

Modular & Reusable

Code modularity and reusability are core design principles for developing an application. In Synapse Spark Notebook, you can achieve the same with a couple of options:

- Using Custom or third-party Libraries/packages

- Reuse Notebook

You can configure and organize third-party libraries or your custom project/enterprise libraries and packages at the Synapse workspace (default), Spark Pool, and Spark session (Notebook) level. Synapse also allows you to configure libraries without visiting the user interface, using PowerShell and the REST API.

Session scoped installed packages will be available only for the current Notebook. Packages are installed on top of the base runtime and pool-level libraries. Therefore, Notebook libraries will take the highest precedence.

To refer to another Notebook within the current Notebook, you can use the %run <notebook absolute path or notebook name> line magic command. This helps with code reusability and modularity.

Synapse Spark Development with Job Definition

Using a Spark Job Definition, you can run Spark batch and stream applications on clusters and monitor their status. Synapse Studio makes it easier to create Apache Spark job definitions and then submit them to a serverless Apache Spark Pool. You can integrate a Spark job definition into a Synapse pipeline.

Code Development

You can develop your Spark application (batch and stream) locally using your preferred IDE (for example, VSCode or IntelliJ). In addition, you can use a Synapse-provided plug-in for IntelliJ or VSCode to connect to your serverless Spark Pool for local development.

The VSCode plug-in allows you to create and submit Apache Hive batch jobs, interactive Hive queries, and PySpark scripts for Apache Spark. In addition, you can submit jobs to Spark and Hive from your local environment.

The IntelliJ plug-in allows you to develop Apache Spark applications and submit them to a serverless Spark Pool directly from the IntelliJ integrated development environment (IDE). You can develop and run a Spark application locally. To develop your code locally, you can use Scala, Java, PySpark, or .NET C#/F#.

Spark Session Configuration

You can configure the Spark Session settings using multiple methods.

- Spark Configuration in Spark Pool – You can define a custom Spark Configuration in a Spark Pool and assign it to a Spark Job Definition.

- Config Property in SJD - You can specify Spark configuration-related parameters in the config property of the Spark Job Definition (SJD).

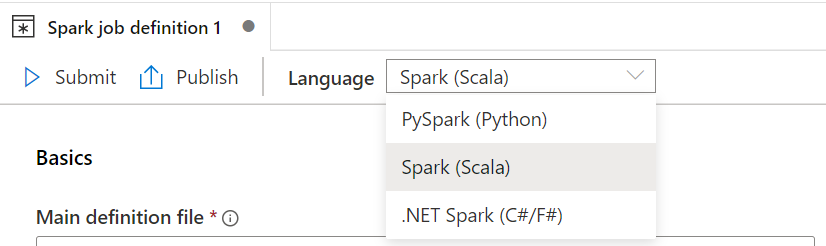

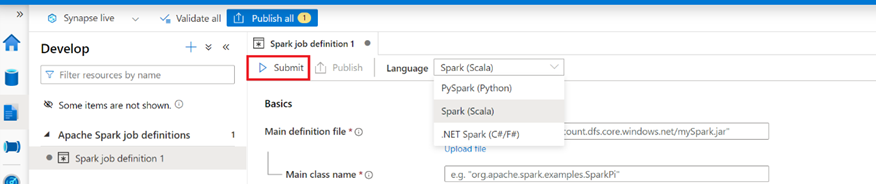

Deploy

Before you can publish or submit the Spark application to the Spark Pool, the compiled package binaries must be available in the ADLS Gen2 storage attached to the workspace, or in any other storage linked to Synapse Studio.

A Synapse Spark job definition is specific to the language used to develop the Spark application.

There are multiple ways to define a Spark job definition (SJD):

- User Interface – You can define an SJD with the Synapse workspace user interface.

- Import JSON file – You can define an SJD in JSON format. The SJD JSON file is fully compatible with the Livy POST Session API; you can specify Spark configuration-related parameters in the config property.

- Using PowerShell
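To make the JSON option above more concrete, here is a small sketch that assembles Livy-style job properties in Python and serializes them. It shows only the Livy-style property names (`file`, `className`, `args`, `conf`, and the driver/executor sizing fields); it does not reproduce the full layout of an exported SJD file. The storage path, class name, arguments, and the helper `check_job_properties` are all hypothetical placeholders.

```python
import json

# Livy-style properties; every value below is a placeholder, not a real resource.
job_properties = {
    "name": "sample-batch-job",
    "file": "abfss://<container>@<account>.dfs.core.windows.net/jobs/sample-job.jar",
    "className": "com.example.SampleJob",
    "args": ["--run-date", "2023-01-01"],
    "conf": {"spark.sql.shuffle.partitions": "200"},
    "driverMemory": "28g",
    "driverCores": 4,
    "executorMemory": "28g",
    "executorCores": 4,
    "numExecutors": 2,
}


def check_job_properties(props):
    """Return a list of problems found in a Livy-style property dict (illustrative checks)."""
    problems = []
    if not props.get("file"):
        problems.append("'file' (the main definition file) is missing")
    if not isinstance(props.get("conf", {}), dict):
        problems.append("'conf' must map Spark property names to values")
    return problems


problems = check_job_properties(job_properties)
job_json = json.dumps(job_properties, indent=2)
print(job_json if not problems else problems)
```

Keeping the definition as a JSON file also means it can be stored in source control next to the application code.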

You can submit the job to the Spark Pool without publishing it. Publishing does not submit the job to the pool; to do that, use the Submit button.

Modular & Reusable

You can use the design principles offered by Scala, Java, .NET (C#/F#), and Python while developing code for the Spark application. For example, code written in Java and Scala can be organized into multiple Maven modules, and PySpark code can be organized into Python modules (wheels).

You can configure third-party libraries or your custom project/enterprise libraries and packages at the Synapse workspace and Spark Pool level. In addition, Synapse allows you to configure libraries without visiting the user interface, using PowerShell and the REST API.
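One possible way to keep a PySpark job modular is sketched below: argument parsing and the transformation are separate functions, so each can be exercised from a test suite without submitting the job. This is an illustration under stated assumptions, not a prescribed layout. The argument names, the `amount` column, and the function names are hypothetical; the Spark calls used (`SparkSession.builder`, `read.parquet`, `filter`, `write.mode`) are standard PySpark APIs.

```python
import argparse


def parse_args(argv):
    """Parse the command-line arguments passed to the job (hypothetical names)."""
    parser = argparse.ArgumentParser(description="Sample batch job")
    parser.add_argument("--input-path", required=True)
    parser.add_argument("--output-path", required=True)
    parser.add_argument("--min-amount", type=float, default=0.0)
    return parser.parse_args(argv)


def keep_large_orders(df, min_amount):
    """Keep rows whose `amount` column (assumed to exist) is at least `min_amount`."""
    return df.filter(df["amount"] >= min_amount)


def main(argv=None):
    """Entry point: read Parquet input, filter it, and write Parquet output."""
    # Imported here so the helpers above can be imported and tested without Spark.
    from pyspark.sql import SparkSession

    args = parse_args(argv)
    spark = SparkSession.builder.appName("sample-batch-job").getOrCreate()
    try:
        orders = spark.read.parquet(args.input_path)
        result = keep_large_orders(orders, args.min_amount)
        result.write.mode("overwrite").parquet(args.output_path)
    finally:
        spark.stop()


# In the main definition file, the script would end by calling main(), typically
# under an `if __name__ == "__main__":` guard.
```

A unit test can then call `parse_args(["--input-path", "in", "--output-path", "out"])` directly, and `keep_large_orders` can be tested against a small local DataFrame.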

Comparison

The following table compares Notebook and SJD (Spark Job Definition):

| Development Tool → Dimension ↓ | Notebook | Job Definition |
|---|---|---|
| Setup Effort | Zero | You need to build the application using an IDE and package it. For example, in the case of Java and Scala, we need to package it in a jar file using Maven/Gradle/Scala-sbt. |
| Data Visualization | Built-in, used for data exploration, data science and machine learning. | You can use server-side libraries to create output on the driver and store output to linked or associated storage. |
| Interactive Query | Yes | No |
| Debugging | Immediate feedback and associated outputs are part of Notebook execution. | You need to use Azure Log Analytics. |
| Collaboration | Zero setup is required for any code collaboration. | You would require source control or a centralized repository for collaboration. |
| Deployment | Online publish a Notebook | jar, py file, dll |
| Programming | Need to know programming language skills, like Python/Scala/.NET | Need to know use of IDE, programming language and build/packaging tool (Maven/Gradle/Scala-sbt/etc.) |
| Multiple Languages | Quick and easy - You can use multiple languages in one Notebook by specifying the correct language magic command at the beginning of a cell. | Need a few additional steps: You can practically include multiple languages; for example, create a UDF function in Scala (jar file) and call it from PySpark. |
| Development Cycle | Quick and easy to do iterative development. | Need to build package binary file, upload to storage account or use IDE plugins (VSCode/IntelliJ). |
| Test Suites | Couple of options: | You can use language-specific testing frameworks (like JUnit 5, ScalaTest, Python unittest, etc.) to create functional testing, unit testing, integration testing, end-to-end testing. These test suites can be part of the CI/CD pipeline. |
| Code Coverage & Profiling | No direct option | Code coverage and profilers are integrated with IDE |
| Development Box | Lightweight Browser | IDE + Compiler + Optional run time environment for testing |
| Type of Jobs | Interactive and Batch | Batch, Stream |
| Data Exploration | Quick and easy to validate | You must create output to the storage account. |
| Integrate with Synapse Pipeline | Yes | Yes |
| Package Dependencies | You can manage the package by uploading custom or private wheel or jar files for the Workspace/Spark Pool/Notebook session. | Similar to Notebook + language specific build tools. For example, you can create Uber-JAR for Java/Scala application. Easy to bundle dependencies within the application (Uber-JAR). |
| Monitoring | Interactive monitoring after each cell execution. | Monitor from Synapse Studio and Spark History/UI. |

Summary

The choice between Notebook and SJD (Spark Job Definition) can vary from one user to another. Based on the comparison above, we can conclude the following:

- Notebook-based development requires zero setup time and is quick, interactive, and easy to collaborate on, whereas SJD requires expertise in packaging tools and an IDE. In addition, SJD allows you to write stream applications and run test suites before you release them to your environment.

- Notebooks are an effective choice for data exploration and machine learning workloads, where data scientists can do iterative development.

Our team publishes blog(s) regularly and you can find all these blogs here: https://aka.ms/synapsecseblog

For a deeper understanding of Synapse implementation best practices, please refer to our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design
