All you need to know about Replicated Tables in Synapse dedicated SQL pool

Author(s): Pedro Martinez is a Program Manager in Azure Synapse Customer Success Engineering (CSE) team.

Introduction

If you have ever used Azure Synapse Analytics dedicated SQL pool you would know there are multiple table types to choose from, for your workload. You might ask yourself, “when can I use Replicated table type and how I can efficiently use them”?

This blog is going to talk in detail about replicated table type, when to use and what are best practices for its usage. But before that, let's start by understanding the different table types:

Round Robin Table Type

Round robin is the default table type available in Azure Synapse dedicated SQL Pool. When using round robin, all data will be evenly distributed across all distributions. Wonderful way to get started and quickly load your data to Synapse dedicated SQL Pool.

Hash Distributed Table Type

Hash table distributes data based on a column (or multiple columns with Multiple Column Distribution support now). Hash tables are good for big fact tables with over 60 million rows.

Replicated Table Type

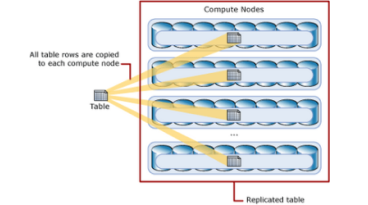

A replicated table appears as a single table, but it's replicated across your compute nodes with the main intent of minimizing data movement across compute nodes. So, it has a full copy of your table on each compute node, for example a dedicated SQL pool with an SLO of DW7500c has 15 compute nodes, in this case a replicated table is replicated 15 times - one copy on the each of the compute nodes.

Before choosing a replicated table you first need to answer the following questions:

- How large is the table?

- How often is the table refreshed?

- How many replicated tables will my workload include?

- If the replicate tables are refreshed frequently, by when will they need to be available?

Replicated tables are great for reducing data movement and will end up being more beneficial than harmful when they are properly used. But take the following into consideration: replicated tables are actually stored as round robin and therefore are not optimized for a first time read. Any initial read will result in a full table scan when accessing the data on disk. A replicated table is cached for performance as an in-memory table and therefore should perform better than a scan across 60 distributions even if all distributions are on a single compute node

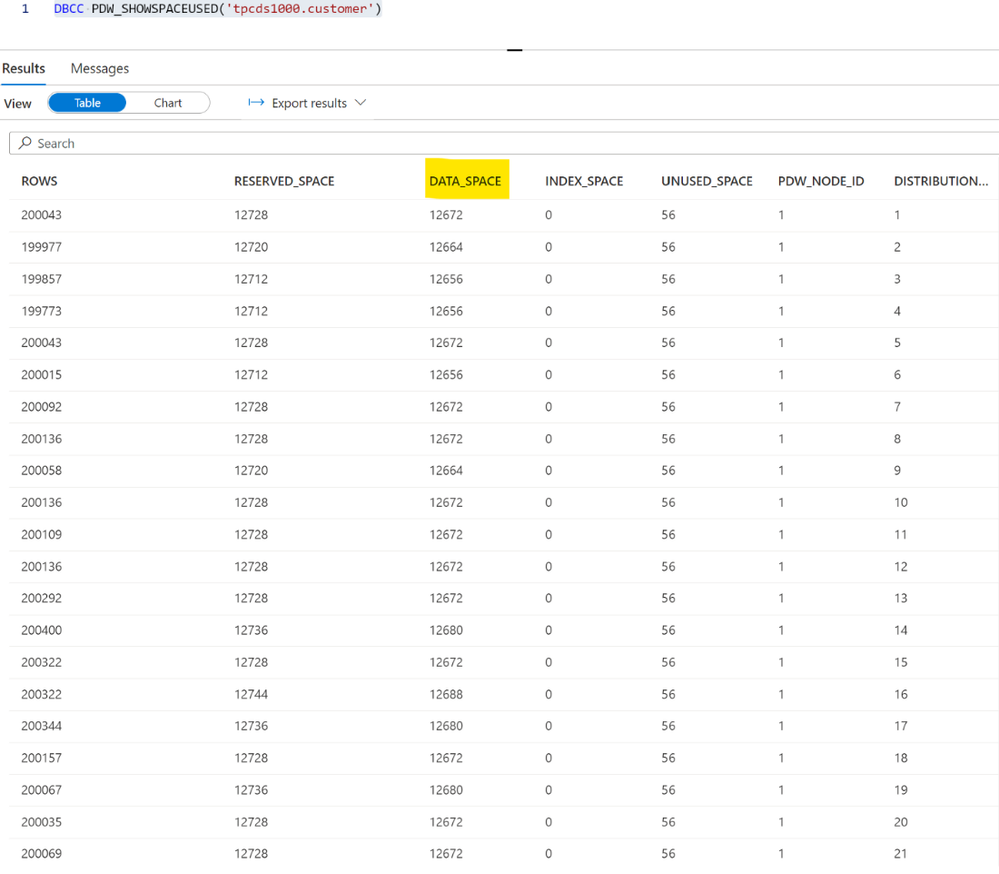

This is not a hard limit, but it's recommended to choose tables less than 2GB total size on disk. To find the size of a table, you can use the command DBCC

From the sample below I’m using table customer from TPCDS 1TB. To get the total size you will need to add all the 60 rows and convert it from KB to GB. 12670x60= 760,200 or .76GB so this means this table qualifies for replicated table.

It is also important to understand how often a table is refreshed/updated. Replicated tables are meant for tables with infrequent refresh or static tables as the engine will need to replicate the data to all compute nodes every time you refresh the data.

If you have plenty of replicated tables, you need to be careful. Having too many replicated tables could potentially lead to performance issues. There are 2 main reasons, the first one is because the engine needs a trigger to replicate the table. When you create a replicated table and load the data, the table is in a “Not replicate ready” state and the first query that reads from this table will use this table as a ROUND ROBIN and will end up with heavy broadcast move operations.

Whenever there is data change in the replicated table, we recommend running a SELECT top 100 from replicate_table to trigger the replication process so the final user does not pay for the time to replicate.

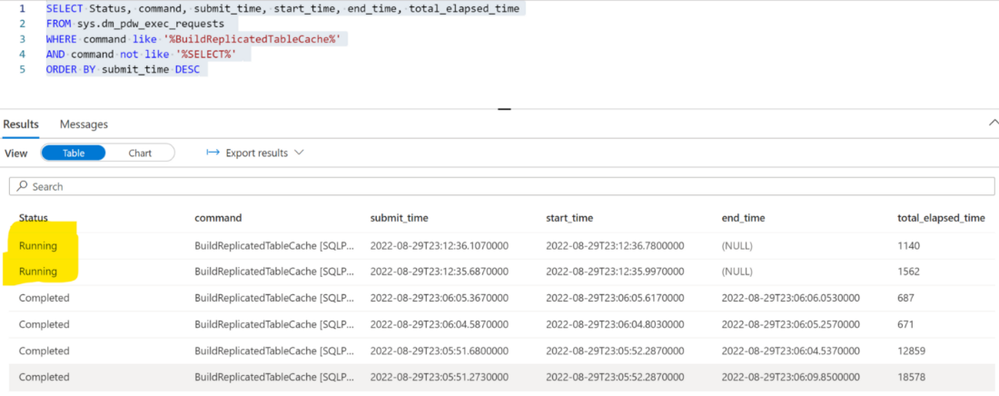

The second reason is you can only replicate two tables at the same time. The engine, after receiving a trigger, will start the replication process FIFO (First In First Out) for first two requests, more than that, requests will be queued. The engine will automatically use a staticrc20 to minimize the impact to other workloads in your instance.

So now let me share with you some extremely useful SQL Queries to identify all the replicated tables in your instance, which tables are replicated or not ready and how to monitor which queries are currently in the replication process.

This query will list all tables from the instance and will also help you identify the state of your replicated tables. If the State column is marked as “Ready” then it means it has successfully been replicated. If is marked as “NotReady" then this table, it will be round robin and expect broadcast move operations.

This next query will help you identify which replicated tables are currently in the process of replication, start/end time and total time. This will help you prioritize during your ETL (extract, transform, load) process which tables will need to be triggered first based on size and priority.

Summary

In this blog post, I explained different types of tables we can create in Azure Synapse Analytics dedicated SQL pool. Further, I explained creating a replicated table, different considerations to use when using it and best practices to optimize it's performance.

Our team publishes blog(s) regularly and you can find all these blogs here: https://aka.ms/synapsecseblog

For deeper level understanding of Synapse implementation best practices, please refer our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design

Published on:

Learn more