Writing data using Azure Synapse Dedicated SQL Pool Connector for Apache Spark

Summary

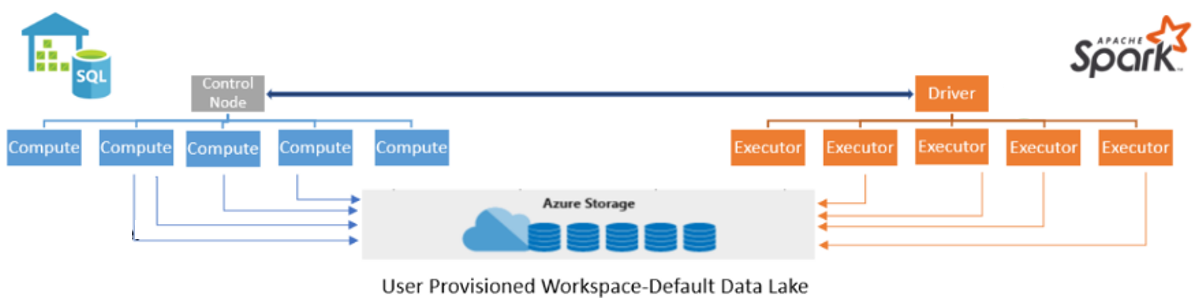

A common data engineering task is explore, transform, and load data into data warehouse using Azure Synapse Apache Spark. The Azure Synapse Dedicated SQL Pool Connector for Apache Spark is the way to read and write a large volume of data efficiently between Apache Spark to Dedicated SQL Pool in Synapse Analytics. The connector supports Scala and Python language on Synapse Notebooks to perform this operations.

The intention of this Guide is not explain all the Connector features if you require a deeper understanding of how this connector works start here.

Scenario

Usually, customers do this kind of operation using Synapse Apache Spark to load data to Dedicated Pool within Azure Synapse Workspace, but today, I would like to reproduce a different scenario that I was working on one of my support cases. Consider a scenario where you are trying to load data from Synapse Spark to Dedicated pool (formerly SQL DW) using Synapse Pipelines, and additionally you are using Synapse Workspace deployed with Managed Virtual Network.

The intention of this guide is to help you with which configuration will be required if you need to load data from Azure Synapse Apache Spark to Dedicated SQL Pool (formerly SQL DW). If you prefer take advantage of the new feature-rich capabilities now available via the Synapse workspace and Studio and load data directly from Azure Apache Spark to Dedicated Pool in Azure Synapse Workspace is recommended that you enable Synapse workspace features on an existing dedicated SQL pool (formerly SQL DW).

Before we start, here is some initial considerations.

To write data to internal tables, the connector now uses COPY statement instead of CETAS/CTAS approach. It also requires storage permissions.

- If reading/writing to storage via pipeline, Synapse Workspace MSI would be the security principal performing any operation (Read, Write, Delete) on the storage.

- Make sure the Workspace MSI account has Storage Blob Data Contributor role to perform all action.

- If your storage account is associated with a VNet or if firewall protected storage, you must authenticate using MSI (Managed Identity).

- If notebooks are used to access storage account, logged in account will be used unless accessing storage via linked services.

- Logged in user account should Storage Blob Data Contributor role to perform all action.

- Make sure Logged in account have the correct SQL Permissions to read and write on dedicated pool.

- If reading/writing to Dedicated SQL Pool (formerly SQL DW) via pipeline, SQL Server System assigned managed identity needs to be enabled from SQL Server side. t

- Make sure SQL Server System assigned managed identity is turned-on from sql server side.

- Make sure that application registered at Azure Active Directory is assigned Storage Blob Data Contributor on the Azure Storage.

- If reading/writing to Dedicated SQL Pool in Azure Synapse Workspace via pipeline, Synapse Workspace MSI would be the security principal performing any operation on the storage and/or on the Dedicated SQL Pool.

- Make sure the Workspace MSI account has Storage Blob Data Contributor role on storage account to perform all action.

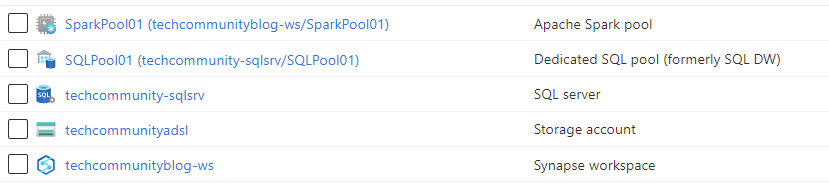

Environment

There are 3 deployments needed to make this configuration possible

- Create an Azure Synapse Workspace Deployment with Managed Virtual Network enabled. Start here.

- Create an Azure Apache Spark pool using Synapse Studio. Start here.

- Create a Dedicated SQL pool (formerly SQL DW). Start here.

Fig 1 - Resources

Implementation

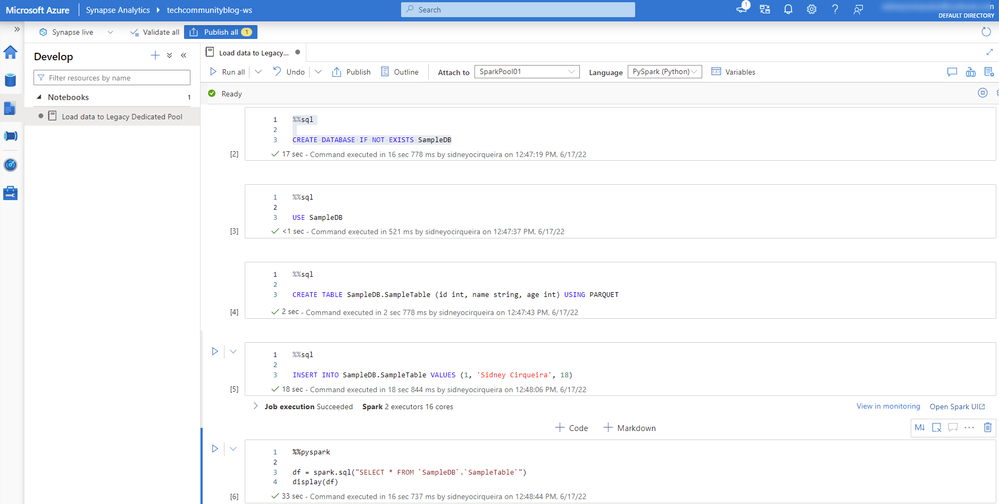

Step 1 - Let's create a Synapse Notebook that will perform read and write operation to be executed on the Synapse Pipeline.

Fig 2 - Creating an Azure Synapse Notebook

Here is Spark script used within my sample notebook to generate data:

Below is the sample to write using AAD Authentication (Internal Table) using python connector:

Here is the sample of Synapse Notebook with the code:

Fig 3 - Synapse Notebook with sample code

After creating synapse notebook, click Publish all to save your modifications.

Fig 4 - Publish all

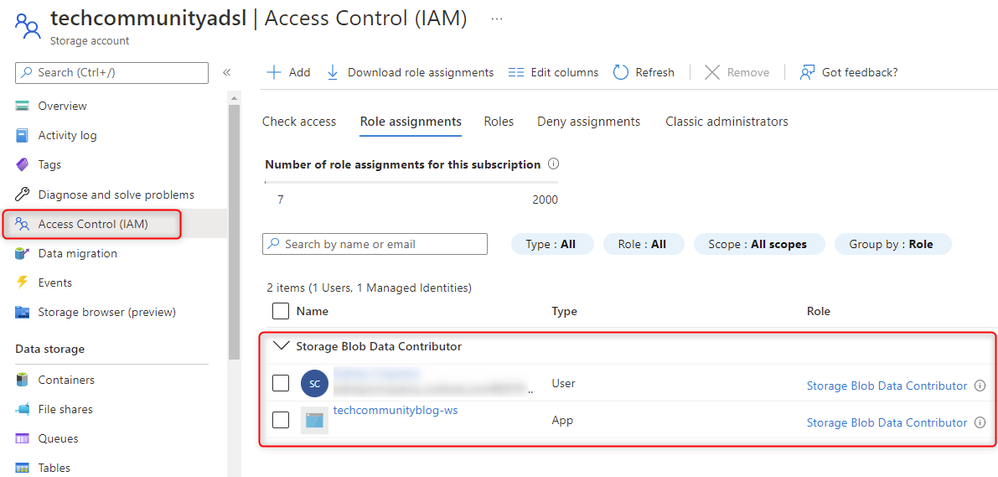

Step 2 - Granting Storage Permissions

Go to Storage account access control -> Click +Add -> add role assigned -> select Storage Blob Data Contributor role -> Assign access to -> Select your Synapse Workspace Managed Identity and also your logged account -> Review + assign.

Fig 5 - Storage account permissions

Step 3 - Granting SQL Permissions to logged in account

If you are trying to read or write on dedicated pool using synapse notebook and you are not SQL Active Directory Admin, following SQL authorization is necessary on Dedicated SQL Pool for user logged in account:

Read:

Write:

Now, let's Run the Synapse Notebook to test if logged in user account will have right permissions to perform all actions.

When the notebook was executed, the error below was throw:

Fig 6 - Error when notebook try to access SQL Server

This error is pretty clear: Synapse Pipeline IP is not allowed to access the server.

We need a allow Azure services and resources to access SQL Server.

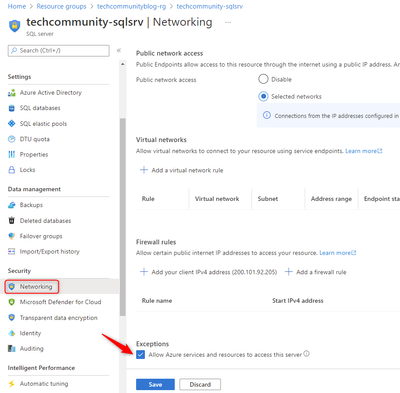

Step 4 - Allowing Azure services and resources on SQL Server

To allow this configuration: Go to your SQL Server -> Security -> Networking - > Exceptions -> Allow Azure services and resources to access this server -> Save

Fig 7 - Allowing Azure services and resources to access SQL Server

Let's re-run the code that loads data into Dedicated SQL Pool (formerly SQL DW).

Fig 8 - Error related to Storage permissions

Now we can see a different error throwed:

ERROR: 'Not able to validate external location because The remote server returned an error: (403) Forbidden.

This generic error means that now we can get on SQL Server, but System assigned managed identity that will be used to perform this operation may not be enabled.

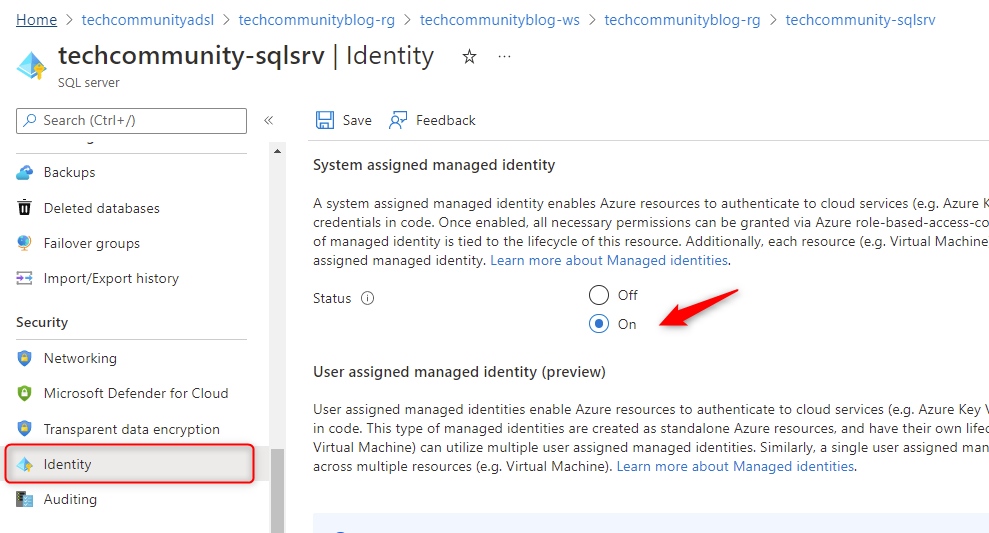

Step 5 - Allowing System assigned managed identity on SQL Server

To allow this configuration: Go to your SQL Server Security -> Identity- > Turn Status On -> Save

Fig 9 - Allowing System assigned managed identity from SQL Server

Now when we run the write cell again, it will work because logged in user account have Storage Blob Data Contributor role to perform all action.

Fig 10 - Write on Dedicated SQL Pool succeeded

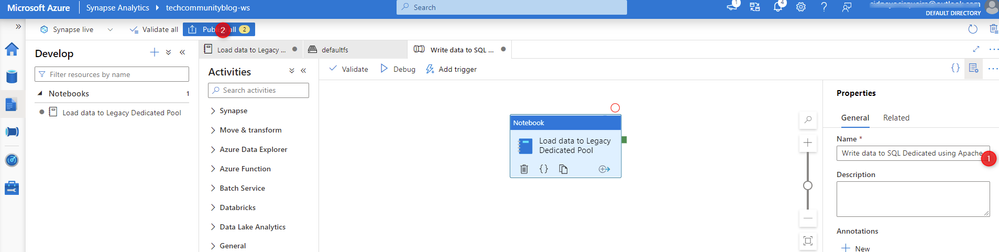

Step 6 - Adding Synapse Notebook activity within Synapse Pipeline to perform data load into Dedicated SQL Pool (formerly SQL DW).

To allow this configuration: Go to your Synapse Notebook -> On the upper right side Click on Add pipeline -> New pipeline.

Fig 11 - Adding Synapse Notebook to Pipeline

Next screen:

1 - On properties, choose a Name for you pipeline.

2 - Publish your pipeline.

Fig 12 - Naming and publishing.

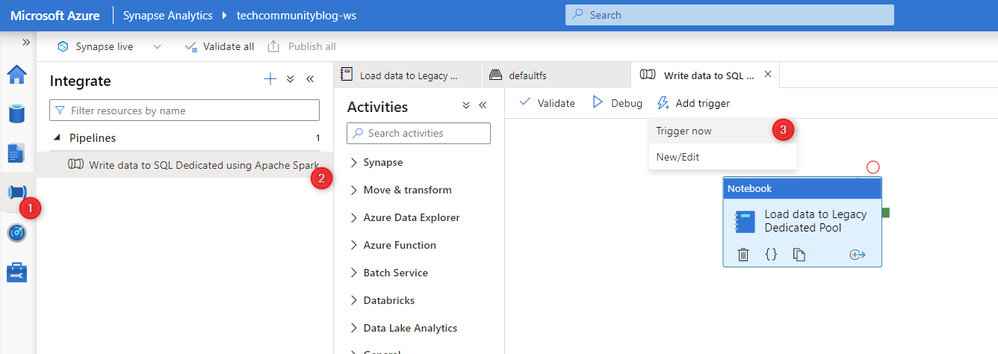

Now let's execute our pipeline to ensure that all configuration is done.

Go to Integrate -> Select the pipeline that was created on the last step -> Click on +Add trigger -> Trigger now -> Ok.

Fig 13 - Triggering Pipeline

Go to Monitor-> integration -> Pipeline runs.

As we can see on the screenshot below, now we have an error when executing from Synapse Pipeline.

Fig 14 - Monitoring Pipeline

Click on Error to see the complete error:

: com.microsoft.spark.sqlanalytics.SQLAnalyticsConnectorException: Internal error - Attempt to run SQL Transaction failed with {ErrorNumber - 105215, ErrorMessage - Not able to validate external location because The remote server returned an error: (403) Forbidden.}

This error is saying that System assigned managed identity doesn't have permission to perform this operation.

It means that the System assigned managed identity should be assigned Storage Blob Data Contributor on the Azure Storage.

Usually, the name of the System assigned managed identity is the same of the SQL Server name, as shown on the screenshot below.

Fig 15 - Checking system assigned managed identity name

Go back to Step 2 and grant Storage permissions for System assigned managed identity.

Fig 16 - Checking system assigned managed identity Storage permissions

Again, Let's trigger the pipeline.

Now it works. ![]()

Fig 17 - Pipeline succeeded.

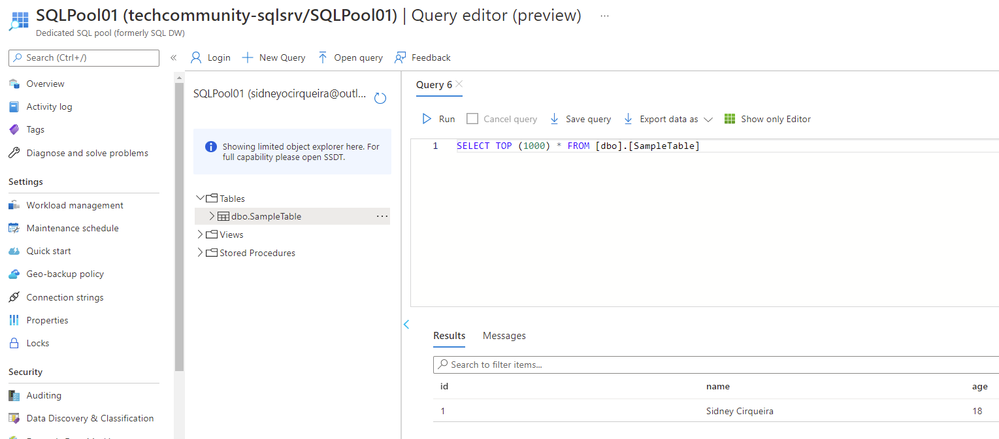

Step 7 - Checking the data loaded into Dedicated SQL Pool (formerly SQL DW).

Fig 18 - Checking data on Dedicated SQL Pool table.

Solution Overview

The new Azure Synapse Dedicated SQL Pool Connector for Apache Spark is designed to efficiently transfer data between Spark pools and dedicated SQL Pools in Azure Synapse Analytics.

The solution allows Synapse notebooks read and write data from or to Dedicated SQL Pool.

The Azure Synapse Apache Spark to SQL Connector works on a dedicated SQL pools only.

The Synapse Workspace Managed Identity allows you to perform more secure operations on the storage and dedicated pool and no longer requires user details or storage keys to be shared.

The System assigned managed identity needs to be enabled from Logical Server.

The Storage account security is streamlined, and we now grant RBAC permissions to the Managed Service Identity for the Logical Server and Synapse Workspace.

To write data to internal tables, the connector now uses COPY statement instead of CETAS/CTAS approach.

Conclusion

When using The Azure Synapse Dedicated SQL Pool Connector for Apache Spark, users can take advantage of read and write a large volume of data efficiently between Apache Spark to Dedicated SQL Pool in Synapse Analytics. The connector supports Scala and Python language on Synapse Notebooks to perform these operations.

If you are unable to complete the steps in the article, please do reach out to us by logging a Support Request.

SIDNEY CIRQUEIRA

Synapse Analytics Support Engineer

Published on:

Learn moreRelated posts

Accelerate Your Cosmos DB Infrastructure with GitHub Copilot CLI and Azure Cosmos DB Agent Kit

Modern infrastructure work is increasingly agent driven, but only if your AI actually understands the platform you’re deploying. This guide sh...

Accelerate Your Cosmos DB Infrastructure with GitHub Copilot CLI and Azure Cosmos DB Agent Kit

Modern infrastructure work is increasingly agent driven, but only if your AI actually understands the platform you’re deploying. This guide sh...

SharePoint: Migrate the Maps web part to Azure Maps

The SharePoint Maps web part will migrate from Bing Maps to Azure Maps starting March 2026, completing by mid-April. Key changes include renam...

Microsoft Azure Maia 200: Scott Guthrie EVP

Azure Cosmos DB TV Recap: Supercharging AI Agents with the Azure Cosmos DB MCP Toolkit (Ep. 110)

In Episode 110 of Azure Cosmos DB TV, host Mark Brown is joined by Sajeetharan Sinnathurai to explore how the Azure Cosmos DB MCP Toolkit is c...

Introducing the Azure Cosmos DB Agent Kit: Your AI Pair Programmer Just Got Smarter

The Azure Cosmos DB Agent Kit is an open-source collection of skills that teaches your AI coding assistant (GitHub Copilot, Claude Code, Gemin...