Getting started with REST APIs for Azure Synapse Analytics - Apache Spark Pool

Author: Abid Nazir Guroo is a Program Manager in the Azure Synapse Customer Success Engineering (CSE) team.

Introduction

Azure Synapse Analytics Representational State Transfer (REST) APIs are secure HTTP service endpoints that support creating and managing Azure Synapse resources using Azure Resource Manager and Azure Synapse web endpoints. This article explains how to set up and use Synapse REST endpoints and describes the Apache Spark pool operations supported by the REST APIs.

Authentication

To perform any operation using Azure REST APIs, you need to authenticate the request with an Azure Active Directory (Azure AD) authentication token. This token can be generated by various interactive and non-interactive methods. In this tutorial, we use an Azure AD service principal to generate a token, with the following steps:

- Register an application with Azure AD and create a service principal. You can create a new service principal in the Azure portal by following the steps outlined in Create an Azure AD app and service principal.

- Create a secret for the registered service principal and store it securely for later use. Instructions for creating a secret are documented in Create a secret for an application.

- Assign an appropriate role to the registered service principal on the Synapse workspace, based on the operations you want to perform using the REST APIs. You can find detailed information on all available Azure Synapse Analytics roles and their permissions in Azure Synapse RBAC roles.

REST API Client

You can use various command-line utilities such as cURL or PowerShell, programming languages, or GUI clients to interact with REST API endpoints. In this article, we use Postman, a widely used GUI application, for its simplicity and ease of setup. Postman can be downloaded from this link.

Example: How to get an Azure AD Access Token

The following parameters are needed to successfully get an Azure AD access token:

| Parameter | Description |
|---|---|
| Tenant ID | The Azure Active Directory (tenant) ID where the application is registered. |
| Client ID | The Application (client) ID for the application registered in Azure AD. |
| Client secret | The value of the client secret for the application registered in Azure AD. |

On the Postman UI

- Create a new HTTP request (File > New > HTTP Request or using the new tab (+) icon).

- In the HTTP verb drop-down list, select POST.

- For Enter request URL, enter https://login.microsoftonline.com/<tenant_id>/oauth2/token, where <tenant_id> is your Active Directory (tenant) ID.

- On the Headers tab, enter a new key Content-Type with value application/x-www-form-urlencoded.

- On the Body tab, select x-www-form-urlencoded and enter the following key-value pairs:

  - grant_type: client_credentials
  - client_id: <client_id>
  - client_secret: <client_secret>
  - resource: https://management.azure.com/

- Click Send. You will receive the bearer token in the JSON response to the request. Below is an example of the response.

Note: The "resource" parameter value should be "https://management.azure.com/" or "https://management.core.windows.net/" for control plane operations, and "https://dev.azuresynapse.net" for data plane operations (the data plane requests themselves are then sent to "https://{workspace name}.dev.azuresynapse.net").
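The same client-credentials request can also be scripted. The following minimal Python sketch, using only the standard library, builds the POST request described in the steps above and reads the bearer token from the access_token field of the JSON response. The helper names build_token_request and get_access_token are hypothetical, and the argument values you pass in should come from your own app registration.

```python
import json
import urllib.parse
import urllib.request

# Token endpoint used in the Postman steps above.
TOKEN_URL_TEMPLATE = "https://login.microsoftonline.com/{tenant_id}/oauth2/token"

# Resource value for control plane (Azure Resource Manager) operations.
MANAGEMENT_RESOURCE = "https://management.azure.com/"


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        resource: str = MANAGEMENT_RESOURCE) -> urllib.request.Request:
    """Build (but do not send) the client-credentials token request (hypothetical helper)."""
    # Form-encode the same four key-value pairs entered on Postman's Body tab.
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": resource,
    }).encode("utf-8")
    return urllib.request.Request(
        TOKEN_URL_TEMPLATE.format(tenant_id=tenant_id),
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def get_access_token(request: urllib.request.Request) -> str:
    """Send the request and return the bearer token (hypothetical helper)."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # The token is returned in the "access_token" field of the JSON response.
    return payload["access_token"]
```

For example, get_access_token(build_token_request("<tenant_id>", "<client_id>", "<client_secret>")) returns the token string that Postman expects in its Bearer Token field. Keeping the client secret out of source code (for example, in an environment variable) is in line with the advice to store it securely.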

Azure Synapse Analytics Apache Spark REST APIs

Apache Spark pools in Azure Synapse Analytics support the following REST API operations, which can be invoked through an HTTP endpoint:

| Operation | Description | Method |
|---|---|---|
| Create Or Update | Create a new Apache Spark pool or modify the properties of an existing pool. | PUT |
| Delete | Delete an Apache Spark pool. | DELETE |
| Get | Get the properties of an Apache Spark pool. | GET |
| List By Workspace | List all provisioned Apache Spark pools in a workspace. | GET |
| Update | Update the properties of an existing Apache Spark pool. | PATCH |

Detailed documentation of all REST API operations supported by Azure Synapse Analytics is available at this link.
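To make the operations above concrete, here is a hedged Python sketch that maps each operation to its HTTP method and Resource Manager URL. It assumes the usual Resource Manager convention that List By Workspace targets the bigDataPools collection while the other four operations target an individual pool, and it assumes the api-version from the create or update example in this article applies to every operation. The function name pool_operation_url is hypothetical.

```python
from typing import Optional, Tuple

ARM_BASE = "https://management.azure.com"
API_VERSION = "2021-06-01-preview"  # assumed to apply to every operation

# Operation name (as in the table above) -> HTTP method.
OPERATIONS = {
    "Create Or Update": "PUT",
    "Delete": "DELETE",
    "Get": "GET",
    "List By Workspace": "GET",
    "Update": "PATCH",
}


def pool_operation_url(operation: str, subscription_id: str, resource_group: str,
                       workspace: str, pool_name: Optional[str] = None) -> Tuple[str, str]:
    """Return (HTTP method, request URL) for a Spark pool operation (hypothetical helper)."""
    method = OPERATIONS[operation]  # raises KeyError for an unknown operation name
    collection = (f"{ARM_BASE}/subscriptions/{subscription_id}"
                  f"/resourceGroups/{resource_group}"
                  f"/providers/Microsoft.Synapse/workspaces/{workspace}/bigDataPools")
    if operation == "List By Workspace":
        path = collection  # the collection URL carries no pool name
    elif pool_name:
        path = f"{collection}/{pool_name}"
    else:
        raise ValueError(f"{operation} requires a pool name")
    return method, f"{path}?api-version={API_VERSION}"
```

The method and URL returned here are what you would enter in Postman's verb drop-down and request URL field.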

Example: How to invoke an Apache Spark REST endpoint to create or update a Spark pool

The Create Or Update REST API can be used to create a new Apache Spark pool or to change the configuration of an existing pool, including upgrading or downgrading the pool's Spark runtime version. For example, an existing Apache Spark pool with Spark runtime version 3.1 can be upgraded to Spark version 3.2 without deleting the existing pool.
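Because Create Or Update is a PUT, the request carries the complete pool definition. One way to change only the runtime version is therefore a read-modify-write: fetch the pool with Get, change sparkVersion, and send the result back with Create Or Update. The Python sketch below shows only the "modify" step on a plain dictionary. The helper name build_runtime_change_body and the list of read-only fields it strips are assumptions to verify against the Big Data Pools API reference, not part of the original article.

```python
import copy

# Fields reported by the service that a client does not set itself.
# ASSUMPTION: check this list against the Big Data Pools API reference.
READ_ONLY_TOP_LEVEL = ("id", "name", "type")
READ_ONLY_PROPERTIES = ("provisioningState", "creationDate", "lastSucceededTimestamp")


def build_runtime_change_body(existing_pool: dict, spark_version: str) -> dict:
    """Return a PUT body that keeps the pool's settings but changes sparkVersion."""
    body = copy.deepcopy(existing_pool)  # leave the Get response untouched
    for key in READ_ONLY_TOP_LEVEL:
        body.pop(key, None)
    props = body.setdefault("properties", {})
    for key in READ_ONLY_PROPERTIES:
        props.pop(key, None)
    props["sparkVersion"] = spark_version  # e.g. "3.1" -> "3.2"
    return body
```

Starting from the Get response, rather than writing a new body by hand, keeps settings such as node size and auto-scale unchanged when only the runtime version is meant to change.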

On the Postman UI

- Create a new HTTP request (File > New > HTTP Request or using the new tab (+) icon).

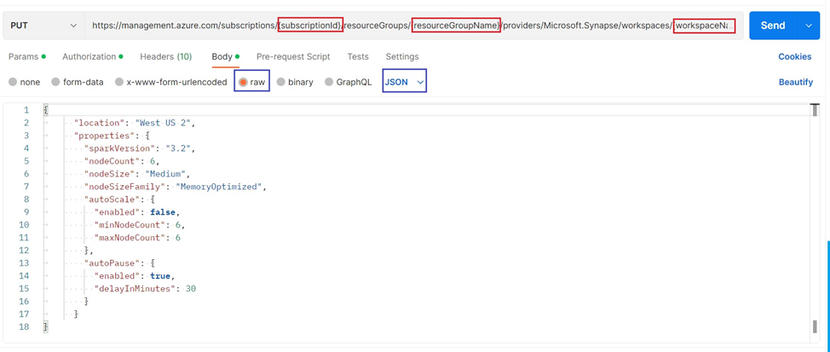

- In the HTTP verb drop-down list, select PUT.

- For Enter request URL, enter https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Synapse/workspaces/{workspaceName}/bigDataPools/{bigDataPoolName}?api-version=2021-06-01-preview, where {subscriptionId} is the ID of the subscription in which the Azure Synapse workspace is provisioned, {resourceGroupName} is the resource group of the workspace, {workspaceName} is the name of the Synapse workspace, and {bigDataPoolName} is the name of the Apache Spark pool.

- On the Headers tab, enter a new key Content-Type with value application/json.

- Click the Authorization tab, select Bearer Token in the Type drop-down menu, and enter the Azure AD token generated in the previous example.

- On the Body tab, select raw, choose JSON from the drop-down menu, and enter the target Spark pool JSON definition in the body.

Example:

A detailed list of the configuration properties supported by Apache Spark pools is documented at this link.
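As an illustration of what such a body can contain, the Python sketch below builds a pool definition with auto-scale and auto-pause enabled and prints it as JSON that can be pasted into Postman's raw Body tab. The property names (sparkVersion, nodeSizeFamily, nodeSize, autoScale, autoPause) reflect the Big Data Pool resource schema as I understand it and should be checked against the properties reference above; the helper name build_pool_definition and all default values are hypothetical.

```python
import json


def build_pool_definition(location: str, spark_version: str, node_size: str = "Small",
                          min_nodes: int = 3, max_nodes: int = 10,
                          auto_pause_minutes: int = 15) -> dict:
    """Return a hypothetical Spark pool definition with auto-scale and auto-pause."""
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")
    return {
        # Azure region of the pool (assumed here to match the workspace region).
        "location": location,
        "properties": {
            "sparkVersion": spark_version,        # Spark runtime version, e.g. "3.2"
            "nodeSizeFamily": "MemoryOptimized",  # node family
            "nodeSize": node_size,                # e.g. "Small" or "Medium"
            "autoScale": {
                "enabled": True,
                "minNodeCount": min_nodes,
                "maxNodeCount": max_nodes,
            },
            "autoPause": {
                "enabled": True,
                "delayInMinutes": auto_pause_minutes,  # idle minutes before pausing
            },
        },
    }


if __name__ == "__main__":
    # Print the JSON body to paste into Postman.
    print(json.dumps(build_pool_definition("eastus", "3.2"), indent=2))
```

Generating the body from a function keeps repeated requests consistent; changing spark_version alone yields the body for a runtime upgrade of an otherwise identical pool.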

- Click Send. A successful create or update operation returns a detailed provisioning JSON response like the sample below:

Note: You can include the optional Boolean (true/false) force parameter in the request URL to stop all currently running sessions and jobs on the target Spark pool.

The request URL with forced termination of sessions is: https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Synapse/workspaces/{workspaceName}/bigDataPools/{bigDataPoolName}?api-version=2021-06-01-preview&force=true
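Outside Postman, the same create or update request, including the optional force flag, can be assembled with Python's standard library. This sketch only builds the request; passing it to urllib.request.urlopen performs the call, and a non-success status then raises urllib.error.HTTPError. The helper name build_pool_put_request is hypothetical, and the token is assumed to have been obtained for the https://management.azure.com/ resource as in the authentication example.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2021-06-01-preview"


def build_pool_put_request(token: str, subscription_id: str, resource_group: str,
                           workspace: str, pool_name: str, definition: dict,
                           force: bool = False) -> urllib.request.Request:
    """Build the Create Or Update (PUT) request for a Spark pool (hypothetical helper)."""
    url = (f"https://management.azure.com/subscriptions/{subscription_id}"
           f"/resourceGroups/{resource_group}/providers/Microsoft.Synapse"
           f"/workspaces/{workspace}/bigDataPools/{pool_name}")
    query = {"api-version": API_VERSION}
    if force:
        # Stop running sessions and jobs on the pool, as described in the note above.
        query["force"] = "true"
    return urllib.request.Request(
        f"{url}?{urllib.parse.urlencode(query)}",
        data=json.dumps(definition).encode("utf-8"),  # pool definition as the JSON body
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # same as Postman's Bearer Token option
        },
        method="PUT",
    )
```

Leaving force at its default of False omits the parameter entirely, so running sessions are only interrupted when the caller asks for it explicitly.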

Summary

Azure Synapse Analytics REST APIs can be used to operate various Synapse services. For Apache Spark pools, they can be used to upgrade or downgrade the Spark runtime version, change the auto-scale configuration, manage libraries, and more.

Our team publishes blogs regularly, and you can find all of them here: https://aka.ms/synapsecseblog

For a deeper understanding of Synapse implementation best practices, please refer to our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design
