Retrieve Azure Synapse role-based access control (RBAC) Information using PowerShell

Azure Synapse Analytics is a limitless analytics service that brings together data integration, enterprise data warehousing and big data analytics. It gives you the freedom to query data on your terms, using either serverless or dedicated resources—at scale. Azure Synapse brings these worlds together with a unified experience to ingest, explore, prepare, manage and serve data for immediate BI and machine learning needs.

Synapse RBAC extends the capabilities of Azure RBAC for Synapse workspaces and their content.

Synapse RBAC is used to manage who can:

- Publish code artifacts and list or access published code artifacts.

- Execute code on Apache Spark pools and Integration runtimes.

- Access linked (data) services protected by credentials.

- Monitor or cancel job execution, and review job output and execution logs.

Azure Synapse RBAC has built-in roles and scopes that help to manage permissions in Azure Synapse Analytics:

| Role | Permissions | Scopes |
| --- | --- | --- |
| Synapse Administrator | Full Synapse access to SQL pools, Data Explorer pools, Apache Spark pools, and Integration runtimes. Includes create, read, update, and delete access to all published code artifacts. Includes Compute Operator, Linked Data Manager, and Credential User permissions on the workspace system identity credential. Includes assigning Synapse RBAC roles. In addition to Synapse Administrator, Azure Owners can also assign Synapse RBAC roles. Azure permissions are required to create, delete, and manage compute resources. Can read and write artifacts. Can do all actions on Spark activities. Can view Spark pool logs. Can view saved notebook and pipeline output. Can use the secrets stored by linked services or credentials. Can assign and revoke Synapse RBAC roles at current scope. | Workspace, Spark pool, Integration runtime, Linked service, Credential |
| Synapse Apache Spark Administrator | Full Synapse access to Apache Spark pools. Create, read, update, and delete access to published Spark job definitions, notebooks and their outputs, and to libraries, linked services, and credentials. Includes read access to all other published code artifacts. Doesn't include permission to use credentials and run pipelines. Doesn't include granting access. Can do all actions on Spark artifacts. Can do all actions on Spark activities. | Workspace, Spark pool |
| Synapse SQL Administrator | Full Synapse access to serverless SQL pools. Create, read, update, and delete access to published SQL scripts, credentials, and linked services. Includes read access to all other published code artifacts. Doesn't include permission to use credentials and run pipelines. Doesn't include granting access. Can do all actions on SQL scripts. Can connect to SQL serverless endpoints with SQL db_datareader, db_datawriter, connect, and grant permissions. | Workspace |
| Synapse Contributor | Full Synapse access to Apache Spark pools and Integration runtimes. Includes create, read, update, and delete access to all published code artifacts and their outputs, including credentials and linked services. Includes compute operator permissions. Doesn't include permission to use credentials and run pipelines. Doesn't include granting access. Can read and write artifacts. Can view saved notebook and pipeline output. Can do all actions on Spark activities. Can view Spark pool logs. | Workspace, Spark pool, Integration runtime |
| Synapse Artifact Publisher | Create, read, update, and delete access to published code artifacts and their outputs. Doesn't include permission to run code or pipelines, or to grant access. Can read published artifacts and publish artifacts. Can view saved notebook, Spark job, and pipeline output. | Workspace |
| Synapse Artifact User | Read access to published code artifacts and their outputs. Can create new artifacts but can't publish changes or run code without additional permissions. | Workspace |
| Synapse Compute Operator | Submit Spark jobs and notebooks and view logs. Includes canceling Spark jobs submitted by any user. Requires additional use credential permissions on the workspace system identity to run pipelines, view pipeline runs and outputs. Can submit and cancel jobs, including jobs submitted by others. Can view Spark pool logs. | Workspace, Spark pool, Integration runtime |
| Synapse Monitoring Operator | Read published code artifacts, including logs and outputs for notebooks and pipeline runs. Includes ability to list and view details of serverless SQL pools, Apache Spark pools, Data Explorer pools, and Integration runtimes. Requires additional permissions to run/cancel pipelines, Spark notebooks, and Spark jobs. | Workspace |
| Synapse Credential User | Runtime and configuration-time use of secrets within credentials and linked services in activities like pipeline runs. To run pipelines, this role is required, scoped to the workspace system identity. Scoped to a credential, permits access to data via a linked service that is protected by the credential (also requires compute use permission). Allows execution of pipelines protected by the workspace system identity credential (with additional compute use permission). | Workspace, Linked service, Credential |
| Synapse Linked Data Manager | Creation and management of managed private endpoints, linked services, and credentials. Can create managed private endpoints that use linked services protected by credentials. | Workspace |
| Synapse User | List and view details of SQL pools, Apache Spark pools, Integration runtimes, and published linked services and credentials. Doesn't include other published code artifacts. Can create new artifacts but can't run or publish without additional permissions. Can list and read Spark pools, Integration runtimes. | Workspace, Spark pool, Linked service, Credential |

There are multiple ways to configure the RBAC roles.

The easiest and most user-friendly way is to perform this action from the Azure Synapse workspace.

In PowerShell, a number of cmdlets help to manage or retrieve Synapse RBAC information.

Example:

- Get-AzSynapseRoleAssignment - Gets a Synapse Analytics role assignment.

- New-AzSynapseRoleAssignment - Creates a Synapse Analytics role assignment.

- Remove-AzSynapseRoleAssignment - Deletes a Synapse Analytics role assignment.

A common difficulty when retrieving or assigning role-based access control in Azure Synapse Analytics is that the PowerShell cmdlet "Get-AzSynapseRoleAssignment" does not return usernames, group names, or service principal names. The cmdlet provides only limited information, and its output is hard to interpret because principals are identified by object IDs.

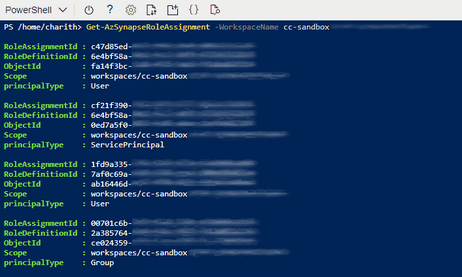

Example: (The output is captured from "Get-AzSynapseRoleAssignment")

To retrieve the additional information, you can use the following example scripts, which provide the username and other relevant information for Azure Synapse RBAC.

Get all the Synapse RBAC Information:

The PowerShell script below maps the RBAC object IDs to usernames, groups, and service principals.

The script output lists all the RBAC information for the Azure Synapse Analytics workspace.

Note: This is only an example of how to retrieve the information; it is not production code.

Script Name: GetSynapseRBACInfo.ps1

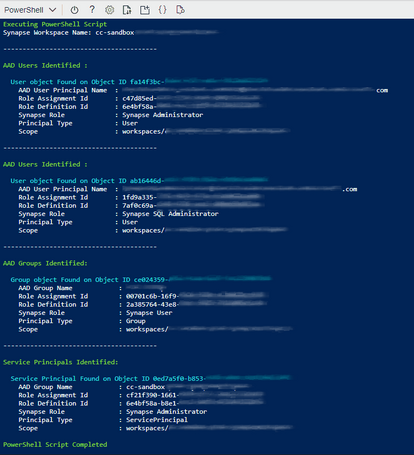

Example: (The output of the above script)
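The mapping described in this section can be sketched roughly as follows. This is not the GetSynapseRBACInfo.ps1 script itself, only a minimal illustration under stated assumptions: the Az.Synapse and Az.Resources modules are installed, Connect-AzAccount has already been run, and the role assignment and role definition objects expose the properties used here (ObjectId, RoleDefinitionId, Scope, Id, Name). The function names Resolve-SynapsePrincipal and Get-SynapseRbacReport are hypothetical.

```powershell
# Sketch only. Assumes Az.Synapse and Az.Resources are installed and that
# Connect-AzAccount has already been run in this session.

function Resolve-SynapsePrincipal {
    # Hypothetical helper: tries to resolve an object ID as a user, then a
    # group, then a service principal, and returns a name and a type.
    param([Parameter(Mandatory)][string]$ObjectId)

    $lookups = @(
        @{ Type = 'User';             Query = { param($id) Get-AzADUser -ObjectId $id -ErrorAction Stop } },
        @{ Type = 'Group';            Query = { param($id) Get-AzADGroup -ObjectId $id -ErrorAction Stop } },
        @{ Type = 'ServicePrincipal'; Query = { param($id) Get-AzADServicePrincipal -ObjectId $id -ErrorAction Stop } }
    )

    foreach ($lookup in $lookups) {
        # A failed lookup is treated as "not this kind of principal".
        try { $found = & $lookup.Query $ObjectId } catch { $found = $null }
        if ($found) {
            # Users have a UserPrincipalName; groups and service principals
            # fall back to DisplayName.
            $name = if ($found.UserPrincipalName) { $found.UserPrincipalName } else { $found.DisplayName }
            return [pscustomobject]@{ Name = $name; Type = $lookup.Type }
        }
    }

    # Nothing matched, for example a deleted principal or missing directory read rights.
    return [pscustomobject]@{ Name = '<unresolved>'; Type = 'Unknown' }
}

function Get-SynapseRbacReport {
    # Hypothetical function: joins each role assignment to its role name
    # and to the resolved principal name.
    param([Parameter(Mandatory)][string]$WorkspaceName)

    # Lookup table from role definition ID to role name.
    $roleNames = @{}
    foreach ($def in Get-AzSynapseRoleDefinition -WorkspaceName $WorkspaceName) {
        $roleNames[[string]$def.Id] = $def.Name
    }

    foreach ($assignment in Get-AzSynapseRoleAssignment -WorkspaceName $WorkspaceName) {
        $principal = Resolve-SynapsePrincipal -ObjectId $assignment.ObjectId
        [pscustomobject]@{
            RoleName      = $roleNames[[string]$assignment.RoleDefinitionId]
            PrincipalName = $principal.Name
            PrincipalType = $principal.Type
            ObjectId      = $assignment.ObjectId
            Scope         = $assignment.Scope
        }
    }
}
```

With a placeholder workspace name, a call could look like "Get-SynapseRbacReport -WorkspaceName 'myworkspace' | Format-Table". Each object ID triggers up to three directory lookups, so the sketch may be slow on workspaces with many assignments.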

Get Specific user RBAC information:

The PowerShell script below maps a specific principal to its Synapse RBAC roles.

The script returns the information for a specific username, group, or service principal.

Note: This is only an example of how to retrieve the information; it is not production code.

Script Name: GetSynapseRBACUser.ps1

Example: (The output of the above script)
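A per-principal lookup along these lines might look like the sketch below. It is not the GetSynapseRBACUser.ps1 script, and it rests on several assumptions: the Az.Synapse and Az.Resources modules are installed, Connect-AzAccount has been run, users are looked up by user principal name while groups and service principals are looked up by display name, and the returned directory objects expose an Id property. The function name Get-SynapseRbacForPrincipal is hypothetical.

```powershell
# Sketch only. Assumes Az.Synapse and Az.Resources are installed and that
# Connect-AzAccount has already been run in this session.

function Get-SynapseRbacForPrincipal {
    # Hypothetical function: resolves a name to an object ID, then lists the
    # Synapse role assignments made directly to that object ID.
    param(
        [Parameter(Mandatory)][string]$WorkspaceName,
        [Parameter(Mandatory)][string]$PrincipalName
    )

    $queries = @(
        @{ Type = 'User';             Query = { param($n) Get-AzADUser -UserPrincipalName $n -ErrorAction Stop } },
        @{ Type = 'Group';            Query = { param($n) Get-AzADGroup -DisplayName $n -ErrorAction Stop } },
        @{ Type = 'ServicePrincipal'; Query = { param($n) Get-AzADServicePrincipal -DisplayName $n -ErrorAction Stop } }
    )

    $principal = $null
    $principalType = $null
    foreach ($q in $queries) {
        # Take the first match; a failed lookup counts as no match.
        try { $found = @(& $q.Query $PrincipalName)[0] } catch { $found = $null }
        if ($found) {
            $principal = $found
            $principalType = $q.Type
            break
        }
    }
    if (-not $principal) {
        throw "No user, group, or service principal named '$PrincipalName' was found."
    }

    # Lookup table from role definition ID to role name.
    $roleNames = @{}
    foreach ($def in Get-AzSynapseRoleDefinition -WorkspaceName $WorkspaceName) {
        $roleNames[[string]$def.Id] = $def.Name
    }

    foreach ($assignment in Get-AzSynapseRoleAssignment -WorkspaceName $WorkspaceName -ObjectId $principal.Id) {
        [pscustomobject]@{
            PrincipalName = $PrincipalName
            PrincipalType = $principalType
            RoleName      = $roleNames[[string]$assignment.RoleDefinitionId]
            Scope         = $assignment.Scope
        }
    }
}
```

Note that this sketch lists only assignments made directly to the resolved object ID; roles that a user holds through group membership would need a separate lookup for each group.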

How to execute the scripts?

There are multiple ways you can execute the scripts.

- From a local host:

  1. Copy the commands to a PowerShell script.

  2. Save the PowerShell scripts as "GetSynapseRBACInfo.ps1" and "GetSynapseRBACUser.ps1".

  3. Execute the PowerShell script.

  Note: The Az.Synapse and Az.Resources modules need to be installed.

- From Azure Cloud Shell:

  1. Upload both scripts to Azure Cloud Shell.

  2. Execute the scripts in the same way as from a local host.

The scripts require the workspace name and, for the user script, the AAD username, AAD user group, or service principal name as parameters.

Example: (The output was captured while the script was prompting for the necessary information)
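The module prerequisite mentioned in the steps above can be checked before running either script. The following is a minimal sketch; the helper name Get-MissingModule is hypothetical, and it only reports which of the given modules are not available on the machine.

```powershell
function Get-MissingModule {
    # Hypothetical helper: returns the names of modules that are not installed.
    param([Parameter(Mandatory)][string[]]$Name)

    foreach ($moduleName in $Name) {
        if (-not (Get-Module -ListAvailable -Name $moduleName)) {
            $moduleName
        }
    }
}

# Check the two modules that the scripts depend on.
$missing = @(Get-MissingModule -Name 'Az.Synapse', 'Az.Resources')

if ($missing.Count -gt 0) {
    Write-Host "Install the missing modules first: Install-Module $($missing -join ', ') -Scope CurrentUser"
} else {
    Write-Host 'Prerequisites found. Sign in with Connect-AzAccount, then run .\GetSynapseRBACInfo.ps1'
}
```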
