Demystifying Data Explorer

Author(s): Gilles L'Hérault is a Program Manager in the Azure Synapse Customer Success Engineering (CSE) team.

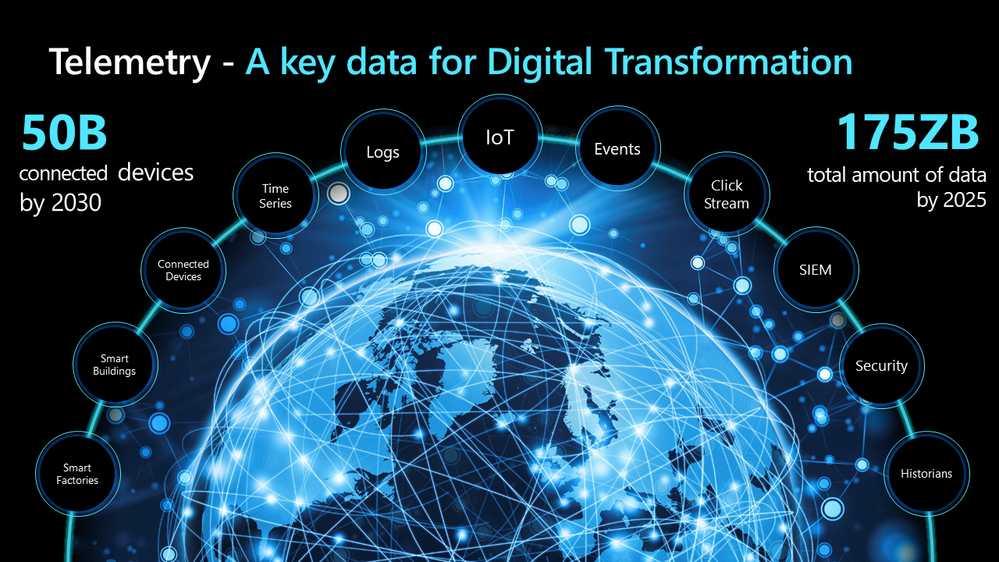

Enterprises today generate massive amounts of data on a continuous basis from systems, applications, processes, devices, events, interactions, and so on. You will recognize this as telemetry, IoT data, security logs, application and system logs, and the like. This data is valuable for many purposes, including auditing, security, understanding user patterns, analyzing system behavior and trends, IT and operational monitoring, anomaly detection, troubleshooting and diagnostics, and enhancing the user experience. However, this data is also time sensitive, and in many cases it needs long-term storage.

This calls for a service that can handle large volumes of data, provide near real-time analysis at blazing speed, and remain highly cost efficient. This is where Azure Data Explorer, or Synapse Data Explorer, comes to the rescue.

Note: Azure Data Explorer (ADX) is the name of the standalone Data Explorer service available in Azure. The same underlying technology that runs ADX is available in Synapse as Synapse Data Explorer (SDX) pools. SDX complements the existing SQL (Data Warehouse) and Spark (Data Engineering and Data Science) Synapse services, and together they form an integrated analytics platform.

The contents of this blog are applicable to both ADX and SDX and hence these terms are used interchangeably.

Azure Data Explorer at a glance

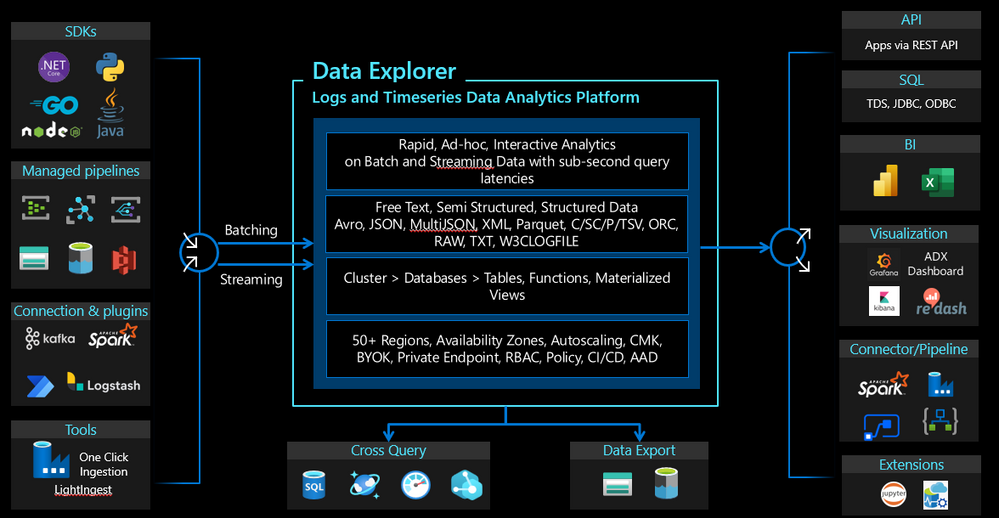

Azure Data Explorer (ADX) is a high-performance, fully managed big data analytics platform for near real-time analysis of large volumes of fresh and historical data (including streaming data) to generate insights. It offers powerful ingestion, optimized and distributed storage, and intuitive querying using the Kusto Query Language (KQL). It supports free-text, structured, and semi-structured data. Its data compression, columnar storage, full-text indexing, and storage format deliver cost efficiency along with lightning-fast performance.

The infographic gives a high-level view of Data Explorer.

On the left you have all options to get data into Data Explorer:

- SDKs for programmatically ingesting data with most of the popular languages:
  - .NET
  - Python
  - Java
  - And many more
- Managed pipeline services like:
  - Event Hubs, IoT Hub
  - Event Grid combined with Storage Accounts
- Third-party integrations such as:
  - Kafka topics
  - Spark connector
  - Logstash
- Traditional batch services like:
  - Azure Data Factory, Synapse Pipelines
  - Logic Apps

On the right you see the options to egress data out of Data Explorer or to present it through the many visualization tools we support:

- APIs and SDKs (see above)
- BI visualization tools such as:
  - Power BI and Excel
  - ADX Dashboards, Grafana, and Redash
  - Legacy support for other tools through ODBC
- Interoperability with Spark through:
  - The Spark connector
  - Continuous export of Parquet files
- Traditional batch services like:
  - Azure Data Factory, Synapse Pipelines
  - Logic Apps

Stay tuned for a deep dive article on data ingestion and all the other ways to integrate with data explorer.

Nature of Data: Append-only immutable!

Azure Data Explorer began its journey as a data store for logs, and logs are by definition immutable: you only add or delete them. This philosophy underpins the foundation of ADX. The traditional expectations of CRUD (create-read-update-delete) operations do not apply, and the focus is on how quickly the data can be queried and read interactively.

One of the biggest adaptations is that there is no update command in ADX. This may seem like a showstopper but there are many ways to model your data in such a way that you never really need to run an update. In fact, updates are inherently difficult to run at scale and the sooner we stop doing them the better.

Here, you may want to ramp up on two very important architectural patterns that are exquisitely well suited to ADX: Event Sourcing and CQRS.
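To make the "no update" idea concrete, here is a minimal sketch in plain Python of the event-sourcing style of modeling. It is not ADX code: `DeviceEvent`, `AppendOnlyLog`, and the sample device names are hypothetical, and the in-memory list only stands in for an append-only table. A change is recorded as a new event, and the current state is derived at read time by keeping the newest event per key.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DeviceEvent:
    """One immutable record; a correction is a new event, never an edit."""
    device_id: str
    timestamp: datetime
    status: str


class AppendOnlyLog:
    """Hypothetical stand-in for an append-only table (illustration only)."""

    def __init__(self) -> None:
        self._events: list[DeviceEvent] = []

    def append(self, event: DeviceEvent) -> None:
        # The only write operation: add a new event to the end of the log.
        self._events.append(event)

    def latest_by_device(self) -> dict[str, DeviceEvent]:
        """Read side: derive current state by keeping the newest event per device."""
        latest: dict[str, DeviceEvent] = {}
        for event in self._events:
            current = latest.get(event.device_id)
            if current is None or event.timestamp > current.timestamp:
                latest[event.device_id] = event
        return latest


log = AppendOnlyLog()
log.append(DeviceEvent("mixer-1", datetime(2022, 1, 1, 8, 0, tzinfo=timezone.utc), "idle"))
log.append(DeviceEvent("mixer-1", datetime(2022, 1, 1, 9, 0, tzinfo=timezone.utc), "running"))
log.append(DeviceEvent("vat-7", datetime(2022, 1, 1, 8, 30, tzinfo=timezone.utc), "filling"))

current_state = log.latest_by_device()
```

Nothing was overwritten: the earlier "idle" event for `mixer-1` is still in the log, and the "update" is simply the later event winning at read time. In KQL, the same read-side idea is commonly expressed with `summarize arg_max(...)` grouped by the key column.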

Getting deeper under the hood

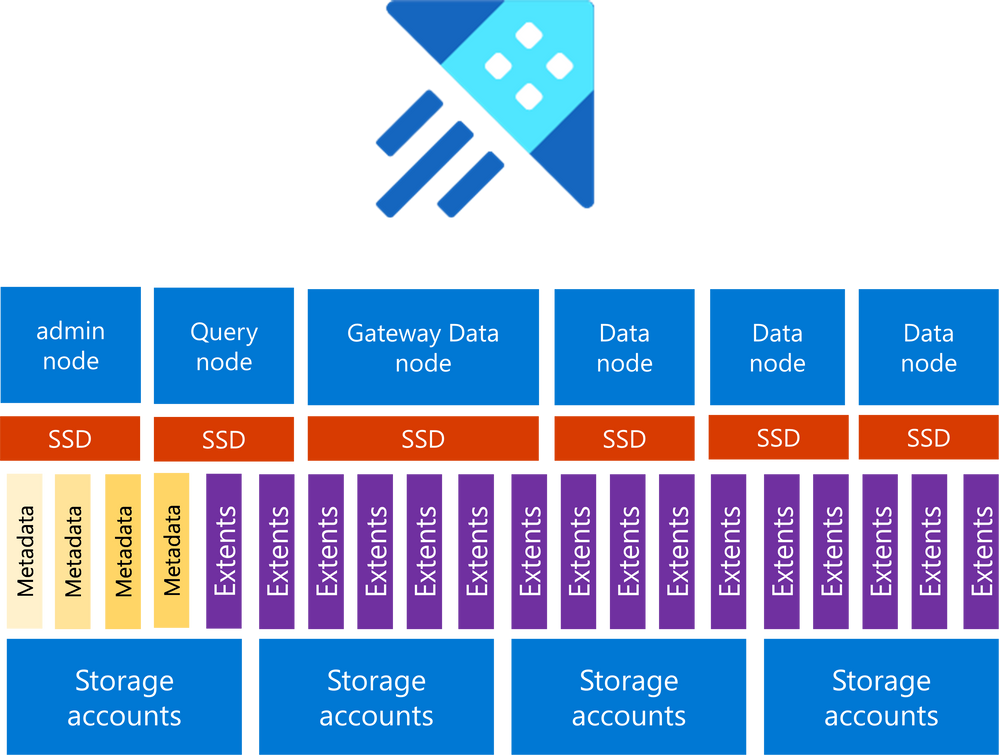

Azure Data Explorer, just like its brethren in the intelligence platform, is a distributed data platform. As expected, storage is separate from compute, and we leverage a columnar format we call "extents" to store data.

What makes ADX different is that data is persisted on blob storage but also mounted on the cluster nodes' solid-state drives (SSDs), resulting in ultra-fast response times. This is called the "hot cache". How much of the data stays in the cache is controlled, because SSDs are not cheap. The infographic shows what a typical ADX cluster looks like at a high level.

As you can see, most of the compute nodes are dedicated "data nodes" whose only purpose in life is to hold as much data on their SSDs as possible so they can rapidly serve all the incoming queries. The other specialized nodes take on additional duties such as endpoint traffic management, query plan building and execution, metadata maintenance, and administrative tasks such as security policy enforcement.
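One way to picture the hot cache is as a time window over the extents: recent extents are kept on SSD, while older ones are served from blob storage only. The sketch below is a simplified illustration in Python, not the service's actual mechanism. The function name, the extent names, and the 7-day window are all assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def split_by_cache_window(
    extents: dict[str, datetime], now: datetime, hot_window: timedelta
) -> tuple[list[str], list[str]]:
    """Return (hot, cold) extent names based on how recently each was ingested."""
    cutoff = now - hot_window
    hot = [name for name, ingested in extents.items() if ingested >= cutoff]
    cold = [name for name, ingested in extents.items() if ingested < cutoff]
    return hot, cold


# Hypothetical extents keyed by name, with their ingestion times.
extents = {
    "extent-a": datetime(2022, 1, 30, tzinfo=timezone.utc),
    "extent-b": datetime(2022, 1, 25, tzinfo=timezone.utc),
    "extent-c": datetime(2022, 1, 1, tzinfo=timezone.utc),
}
now = datetime(2022, 1, 31, tzinfo=timezone.utc)

hot, cold = split_by_cache_window(extents, now, hot_window=timedelta(days=7))
```

Widening the window keeps more data on SSD (faster queries over older data, higher cost); narrowing it does the opposite.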

Extents are a highly compressed columnar file format, and every bit of data ingested is heavily indexed when the files are created. Thus, the cluster knows exactly where every piece of data is. You can therefore literally search for a needle in a haystack and find it within milliseconds: the cluster knew ahead of time where the needle was, regardless of how big the haystack is.

Compression helps save cost on both blob storage and SSD consumption, with compression ratios of roughly 10x and much higher ratios observed in the field. Once you have a good idea of how much compressed space your data requires, you can size your cluster accordingly and save cost there as well.
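The "index at creation time" idea can be illustrated with a toy inverted index. This is a deliberately simplified sketch in Python and makes no claim about the real extent or index format: the `Extent` class, the whitespace tokenizer, and the sample rows are assumptions for illustration. The point is that the term-to-row mapping is built once when the extent is created, so a lookup never scans the rows.

```python
from collections import defaultdict


class Extent:
    """Toy extent: a batch of rows plus a term index built once, at creation."""

    def __init__(self, rows: list[str]) -> None:
        self.rows = rows
        self.index: dict[str, list[int]] = defaultdict(list)
        for row_number, row in enumerate(rows):
            # Index each distinct lowercase term of the row exactly once.
            for term in set(row.lower().split()):
                self.index[term].append(row_number)

    def find(self, term: str) -> list[str]:
        """Look the term up in the index; no row is scanned."""
        return [self.rows[i] for i in self.index.get(term.lower(), [])]


def search(extents: list[Extent], term: str) -> list[str]:
    """Collect matching rows from every extent via its index."""
    hits: list[str] = []
    for extent in extents:
        hits.extend(extent.find(term))
    return hits


haystack = [
    Extent(["hay hay hay", "hay straw"]),
    Extent(["hay needle hay"]),
]
needle_rows = search(haystack, "needle")
```

The cost of finding the needle depends on the index lookup, not on how many "hay" rows surround it.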
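As a back-of-the-envelope illustration of that sizing exercise, the sketch below estimates how many data nodes are needed to keep a given hot-cache window on SSD. Every input is a hypothetical placeholder (daily volume, cache window, compression ratio, usable SSD per node), and the formula ignores real-world factors such as index overhead, headroom, and minimum cluster sizes. Treat it as arithmetic, not as official sizing guidance.

```python
import math


def estimate_data_nodes(
    raw_gb_per_day: float,
    hot_cache_days: int,
    compression_ratio: float,
    ssd_gb_per_node: float,
) -> int:
    """Rough node count needed to hold the compressed hot-cache window on SSD."""
    compressed_hot_gb = raw_gb_per_day * hot_cache_days / compression_ratio
    return max(1, math.ceil(compressed_hot_gb / ssd_gb_per_node))


# Hypothetical workload: 1,000 GB/day raw, 30 days hot, ~10x compression,
# and 1,000 GB of usable SSD per data node.
nodes = estimate_data_nodes(
    raw_gb_per_day=1000,
    hot_cache_days=30,
    compression_ratio=10,
    ssd_gb_per_node=1000,
)
```

With these made-up inputs, 30,000 GB of raw data compresses to about 3,000 GB, which fits on three such nodes. A higher observed compression ratio or a shorter hot window reduces the count proportionally.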

Where does it fit across the data stream?

There is a diverse toolset available across the data stream and ADX is very friendly to both upstream and downstream services that need to interact with it. For append-only data streams, to truly benefit from ADX you should put it up front at the very beginning of the journey that the data takes through the intelligence platform.

To understand why ADX is best positioned up front, you must consider the data "temperature": how fast the data is coming in, how much of it you need to hang on to immediately, and how fast you need to reason over it. If you have a lot of incoming data (many gigabytes per second), you need terabytes or even petabytes of data to complete your workload, and you have precious little time to complete the reasoning, then only Data Explorer can accomplish this within a reasonable budget. Therefore, by putting it upstream of traditional data platforms, you can accommodate workloads that have these characteristics and continue to reason over that data later using traditional data platforms.

Data Explorer Pools in Synapse

The native integration of SDX enables analytics of log and time series workloads in Synapse. SDX pools offer close integration with the other Synapse engines, ease network connectivity, and automatically inherit Synapse workspace configurations.

SDX will traditionally be positioned upstream from either a Spark engine like Synapse Spark or a Synapse Dedicated SQL pool. This is best described with an end-to-end scenario.

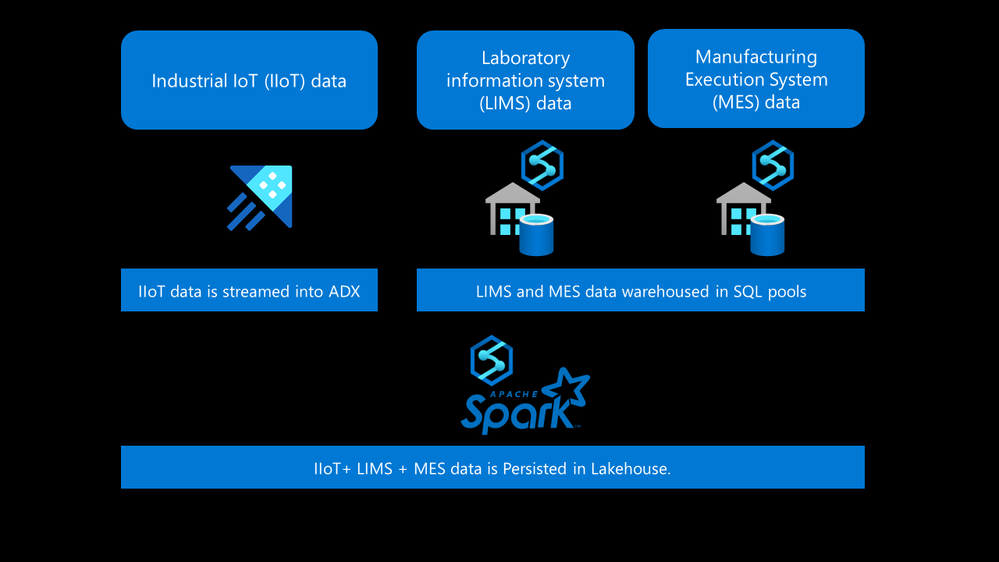

Consider the following: ACME chemical wants to leverage the Intelligence Platform with all its pillars to improve their chemical production plant. Specifically, they want to predict the properties of a particular end product and find optimal recipes to maximize customer satisfaction and profit.

They have three major data sources to consider:

- Telemetry from the chemical plant where the mixers, vats and other industrial IoT (IIoT) devices are reporting in a constant stream of important data.

- Manufacturing Execution Systems (MES) that manage core business domains like production batches, shipping and handling, crew shifts, etc.

- Laboratory Information Management Systems (LIMS) that manage the core scientific domain objects such as experiments, recipes, and what is typically referred to as "crown jewel data".

An end-to-end intelligence platform for this scenario would stream the IIoT data into SDX, warehouse the LIMS data, and pass the MES data into Synapse Dedicated SQL pool(s). Finally, all three datasets would be merged in the Lakehouse using Synapse Spark pools to train the machine learning model that ACME needs.

To conclude, ADX or SDX is certainly the right choice if:

- You must reason over telemetry, IoT data, logs, or text files.

- You have complex time series operations to perform.

- Your log data is ever-expanding, and you want cost-efficient storage.

- Your data is heavily partitioned on time, and you need fast access to it.

There are many more, but this should get you started!

Stay tuned for more content and deep dives into the nuts and bolts of Data Explorer.

In the meantime, please refer to https://aka.ms/learn.adx

Our team publishes blogs regularly, and you can find them all here: https://aka.ms/synapsecseblog

For a deeper understanding of Synapse implementation best practices, please refer to our Success By Design (SBD) site: https://aka.ms/Synapse-Success-By-Design
