Programmatically documenting table columns in Microsoft Purview with the Purview Python SDK

In an organization's ever-growing data strategy projects, data governance is a top priority: data artifacts must be governed, harmonized, and well documented so that business users can understand and interpret the data meaningfully.

As part of this effort, data stewards need to maintain the data glossary or dictionary for the data entities. With Microsoft Purview, once a data source is registered, data owners can start documenting the entities.

There are several ways to work with Microsoft Purview entities programmatically, which is especially useful for bulk operations such as documenting a large number of tables and columns dynamically.

This article shows how to use the Python SDK for Purview to document Purview table columns in bulk. It assumes there are many tables and columns to be documented automatically from a reference table, which in this example is a data dictionary maintained in Excel.

Alternatively, the Purview REST APIs can be called directly. The Python SDK for Purview is a wrapper that makes it easier to interact programmatically with the Purview Atlas REST APIs in the backend.

For other SDK options, please refer to the following documentation:

.NET: Azure Purview SDK for .NET - Azure for .NET Developers | Microsoft Learn

PowerShell: Az.Purview Module | Microsoft Learn

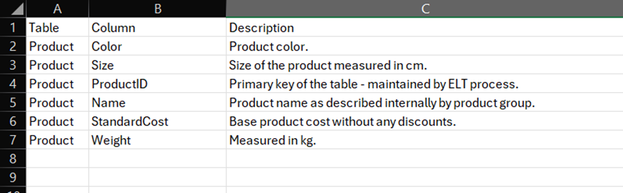

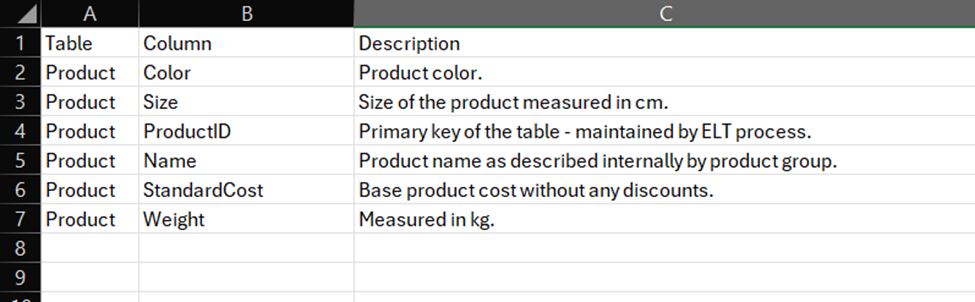

Assume the data dictionary is maintained in an Excel spreadsheet. It could equally be a text file or a table in a database, but this write-up uses an Excel file, which the data owners can update as needed. The schema is simple, with three columns defining the table name, the column name, and the column description. The spreadsheet can be located anywhere; at the time of writing, it is maintained in Fabric OneLake.

Next, the file is loaded into a Pandas dataframe in a PySpark notebook in Fabric.

The following Python SDK packages must be installed on the compute; you can install them with the pip install command:
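A minimal sketch of this loading step follows. It assumes the three spreadsheet columns are named TableName, ColumnName, and Description; the article does not give the actual headers, so these names are hypothetical. Note that pandas.read_excel needs an Excel engine such as openpyxl to read .xlsx files.

```python
import pandas as pd

# Hypothetical column names; adjust to match the headers in the actual spreadsheet.
DICTIONARY_COLUMNS = ["TableName", "ColumnName", "Description"]


def normalize_dictionary(df: pd.DataFrame) -> pd.DataFrame:
    """Trim stray whitespace and drop rows that lack any of the three values."""
    df = df.rename(columns=lambda name: str(name).strip())
    df = df.dropna(subset=DICTIONARY_COLUMNS).copy()
    for column in DICTIONARY_COLUMNS:
        df[column] = df[column].astype(str).str.strip()
    return df.reset_index(drop=True)


def load_data_dictionary(path: str) -> pd.DataFrame:
    """Read the Excel data dictionary into a Pandas dataframe."""
    return normalize_dictionary(pd.read_excel(path))
```

In a Fabric notebook with a default lakehouse attached, the call might look like `load_data_dictionary("/lakehouse/default/Files/data_dictionary.xlsx")`, where the file name is a placeholder.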

- azure-purview-scanning

- azure-identity

- azure-purview-administration

- azure-purview-catalog

- azure-purview-account

- azure-core

Next, the packages are imported as below:

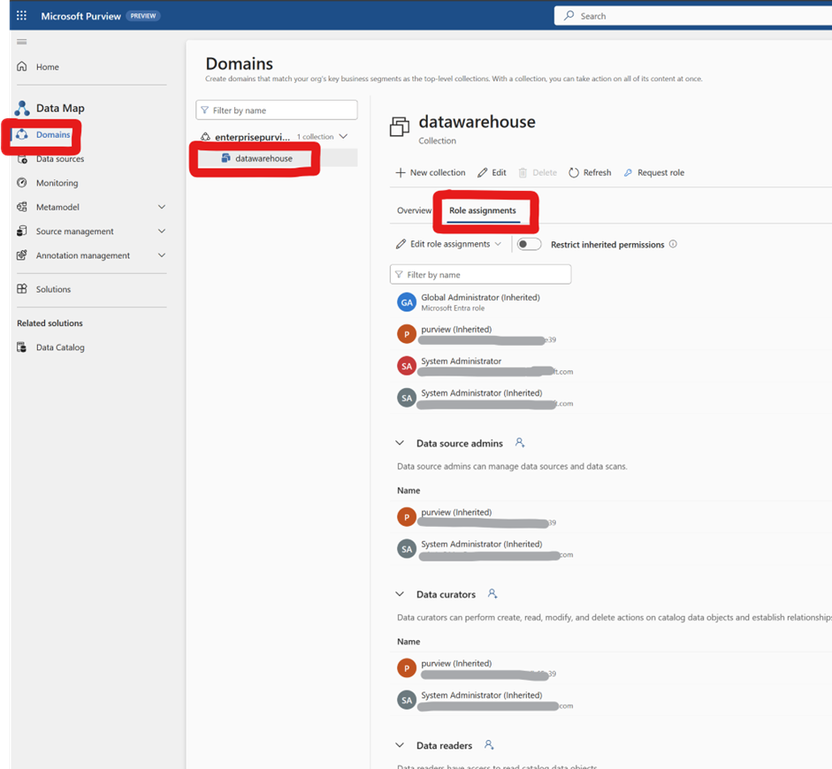

On the Purview side, ensure that the service principal is assigned the following Purview roles at the collection level. The Python script authenticates through the service principal, and these roles are required for the SDK operations in this scenario.

- Role: Collection admins

- Role: Data source admins

- Role: Data curators

- Role: Data readers

Returning to the notebook, the required variables are initialized for the service principal authentication.

These values can be obtained from the application registered in Microsoft Entra. Refer to the following documentation on creating a service principal:

Create a Microsoft Entra app and service principal in the portal - Microsoft identity platform | Microsoft Learn
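As an illustration of initializing these variables, the sketch below reads the tenant ID, client ID, and client secret from environment variables rather than hard-coding them in the notebook. The environment variable names are hypothetical, and reading from the environment is one possible choice, not something the article prescribes.

```python
import os
from typing import Mapping

# Hypothetical environment variable names; the article does not prescribe any.
REQUIRED_SETTINGS = {
    "tenant_id": "PURVIEW_TENANT_ID",
    "client_id": "PURVIEW_CLIENT_ID",
    "client_secret": "PURVIEW_CLIENT_SECRET",
}


def load_service_principal_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Collect the three service principal values, failing early if any is missing."""
    missing = [name for name in REQUIRED_SETTINGS.values() if not env.get(name)]
    if missing:
        raise KeyError(f"Missing settings: {', '.join(missing)}")
    return {key: env[name] for key, name in REQUIRED_SETTINGS.items()}
```

Failing early on a missing value tends to give a clearer error than an authentication failure later in the run.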

With the required packages installed and the service principal variables initialized, the first step in the orchestration is to read the source data dictionary Excel file.

Next, the functions that make up the majority of the script are defined.

The get_credentials() function initializes the ClientSecretCredential object that is used to authenticate against the Purview APIs.

The get_catalog_client(reference_name_purview) function initializes the PurviewCatalogClient object, which primarily accesses the Purview catalog interface. This client wrapper is used for most of the work, including updating the column descriptions.

The get_admin_client(reference_name_purview) function initializes the client wrapper used for admin-privileged operations, such as querying the collection objects.

The get_collection_id(collection_name) function looks up the collection ID for the specified collection name; note the collection_name variable, which was initialized earlier.

The collection name is the friendly name assigned when the collection was created. The collection ID, however, is what is needed to access the collection items programmatically, which is done through the following function:
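The three helpers described above might be sketched as follows. This is an approximation under stated assumptions, not the article's exact code: the credential is passed in explicitly instead of being read from notebook variables, purview_endpoint is a hypothetical helper, and the admin client is assumed to be PurviewAccountClient from the azure-purview-administration package. The imports are deferred into the functions so the cell can be defined before the packages are installed; moving them to the top of the notebook works equally well.

```python
def purview_endpoint(reference_name_purview: str) -> str:
    """Build the account endpoint from the Purview account name."""
    return f"https://{reference_name_purview}.purview.azure.com"


def get_credentials(tenant_id: str, client_id: str, client_secret: str):
    """Create the service principal credential used by both clients."""
    from azure.identity import ClientSecretCredential  # deferred import

    return ClientSecretCredential(
        tenant_id=tenant_id, client_id=client_id, client_secret=client_secret
    )


def get_catalog_client(reference_name_purview: str, credential):
    """Client for catalog operations such as reading and updating entities."""
    from azure.purview.catalog import PurviewCatalogClient  # deferred import

    return PurviewCatalogClient(
        endpoint=purview_endpoint(reference_name_purview), credential=credential
    )


def get_admin_client(reference_name_purview: str, credential):
    """Client for account-level operations such as listing collections."""
    from azure.purview.administration.account import PurviewAccountClient  # deferred import

    return PurviewAccountClient(
        endpoint=purview_endpoint(reference_name_purview), credential=credential
    )
```

Constructing the clients does not contact the service; the first network call happens when an operation is invoked.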
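The lookup can be sketched as a small function over the collection records. It assumes each record is a dictionary with a friendlyName key (the display name) and a name key (the system-generated ID), which is how the collections listing is understood to return them; find_collection_id is a hypothetical name, not the article's function.

```python
from typing import Iterable, Optional


def find_collection_id(collections: Iterable[dict], collection_name: str) -> Optional[str]:
    """Return the collection's system name (its ID) for a given friendly name."""
    wanted = collection_name.strip().lower()
    for collection in collections:
        if collection.get("friendlyName", "").lower() == wanted:
            return collection["name"]
    return None  # no collection with that friendly name
```

With an admin client at hand, the records would typically come from `admin_client.collections.list_collections()`.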

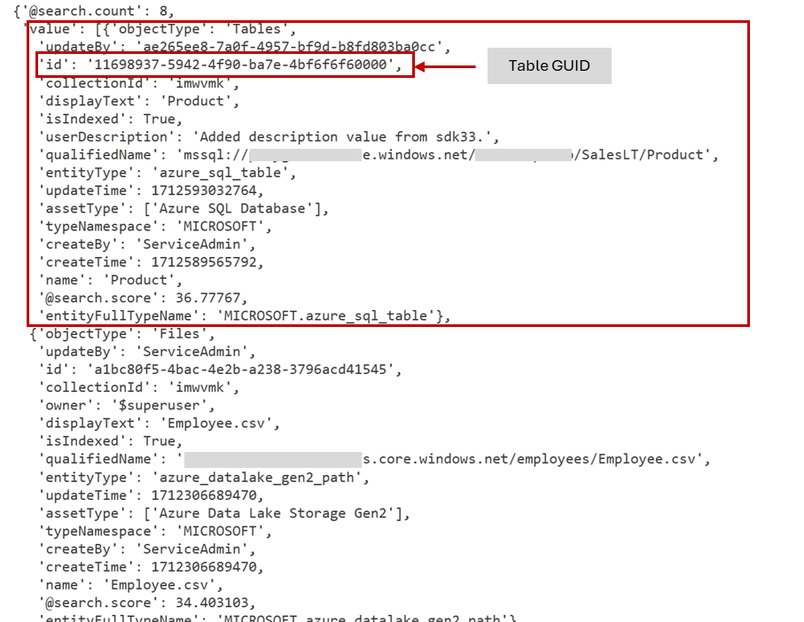

Calling the queryCollection function with two parameters, the collection name and the reference name (the Purview account name), returns a JSON object with the search results.

Tip: This function can be enhanced with filter parameters to select specific entities, which could be parameterized from the data dictionary file. If the table names are maintained in the data dictionary file, the function can be called in a loop to pick up every table and iterate over its columns dynamically.

The returned JSON object lists all the items found in the specified collection. The item of interest here is the Product table, identifiable by the entityType azure_sql_table on one of the objects in the payload; its GUID is what is needed.

The table in this context is the entity. Its GUID is used to query the entity, which lists the columns for the given entity (table).

Another helper function returns the column description for the table and column names passed in as parameters. The lookup is based on the data dictionary mapping table, which is loaded into a Pandas dataframe.

The next cell calls get_catalog_client(reference_name_purview) to initialize the catalog client wrapper object that serves as the client interface. The client is then used to call the entity methods to query the entity; note the GUID of the table specified in line 2.
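One way the query and the filter enhancement from the tip could look is sketched below. The function names are hypothetical, and the payload shape (a keywords string plus an and-filter on collectionId and, optionally, entityType) is an assumption about the discovery query request rather than the article's exact payload.

```python
from typing import Optional


def build_collection_query(collection_id: str,
                           entity_type: Optional[str] = None,
                           keywords: str = "*") -> dict:
    """Build a search payload restricted to one collection and, optionally, one entity type."""
    conditions = [{"collectionId": collection_id}]
    if entity_type:
        conditions.append({"entityType": entity_type})
    return {"keywords": keywords, "filter": {"and": conditions}}


def query_collection(catalog_client, collection_id: str,
                     entity_type: Optional[str] = None):
    """Run the search through the catalog client's discovery operations."""
    payload = build_collection_query(collection_id, entity_type)
    return catalog_client.discovery.query(payload)
```

Passing `entity_type="azure_sql_table"` would narrow the results to tables, which keeps the response small when a collection holds many asset types.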
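Picking the table GUID out of the search results can be sketched as below. It assumes the results hold a value list whose items carry id, name, and entityType keys; find_entity_guid is a hypothetical helper name.

```python
from typing import Optional


def find_entity_guid(search_result: dict, table_name: str,
                     entity_type: str = "azure_sql_table") -> Optional[str]:
    """Return the GUID of the first search hit matching the table name and entity type."""
    for item in search_result.get("value", []):
        if item.get("entityType") == entity_type and item.get("name") == table_name:
            return item.get("id")
    return None  # the table was not found in this collection
```

Matching on both the name and the entity type avoids picking up a column or view that happens to share the table's name.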
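A possible shape for this lookup is sketched below. It works on plain row dictionaries, such as those produced by `DataFrame.to_dict("records")`, and assumes the hypothetical column names TableName, ColumnName, and Description; the function names are also illustrative rather than the article's own.

```python
from typing import Dict, Iterable, Optional, Tuple


def build_description_index(records: Iterable[dict]) -> Dict[Tuple[str, str], str]:
    """Index descriptions by (table, column), compared case-insensitively."""
    index = {}
    for row in records:
        key = (str(row["TableName"]).lower(), str(row["ColumnName"]).lower())
        index[key] = row["Description"]
    return index


def lookup_column_description(index: Dict[Tuple[str, str], str],
                              table_name: str, column_name: str) -> Optional[str]:
    """Return the description for a table column, or None if it is not in the dictionary."""
    return index.get((table_name.lower(), column_name.lower()))
```

Building the index once and reusing it avoids filtering the dataframe again for every column.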

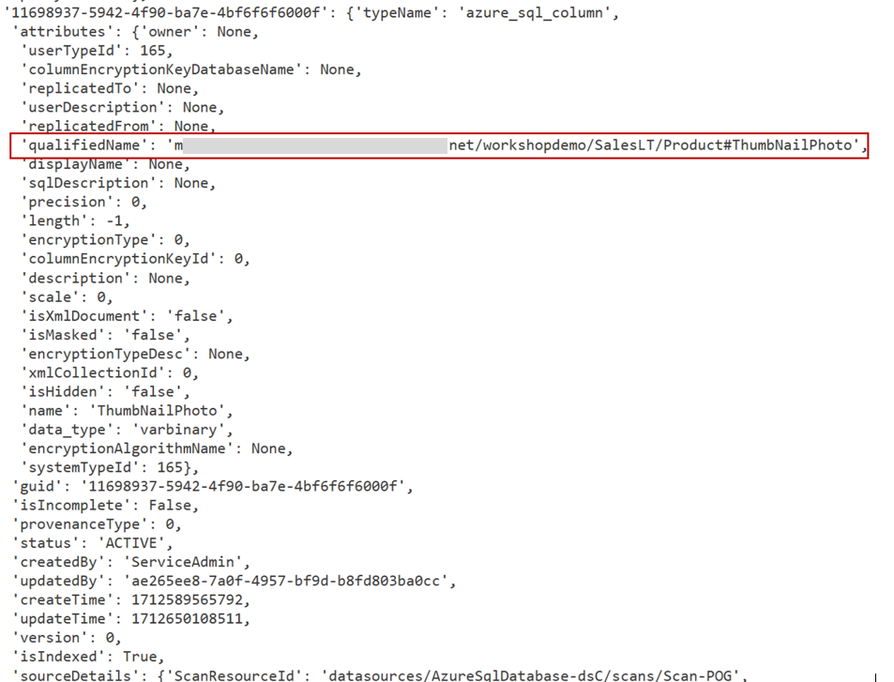

The new cell above iterates over the GUIDs of the referred entities held in the response_Entities variable, which was initialized in the earlier cell. The referredEntities element holds the column information for the given entity (table).

Each GUID is used to access the referredEntity item and programmatically set its userDescription attribute, which is the column description.

The code above extracts the column and table names from each enumerated referredEntity item and passes them as parameters to the getColumnDescription function, which returns the column description based on the mapping table.

The qualifiedName holds both the table name and the column name, so the script extracts each value individually.

The entity_response assignment in line 5 updates the JSON payload returned for the entity, in this case the column description (userDescription).

The last line of cell 7 calls create_or_update(entity_response).
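The iteration over the referred entities can be sketched as follows. It assumes the table response is a dictionary whose referredEntities element maps each GUID to an entity with typeName and attributes keys, and that columns carry the type azure_sql_column; list_referred_columns is a hypothetical helper.

```python
from typing import List, Tuple


def list_referred_columns(entity_response: dict,
                          column_type: str = "azure_sql_column") -> List[Tuple[str, str]]:
    """Return (guid, qualifiedName) pairs for the columns referred to by a table entity."""
    columns = []
    for guid, referred in entity_response.get("referredEntities", {}).items():
        if referred.get("typeName") == column_type:
            columns.append((guid, referred["attributes"]["qualifiedName"]))
    return columns
```

The input would typically be the result of `catalog_client.entity.get_by_guid(table_guid)`.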
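As an illustration, the split might be done as below. The sketch assumes the column's qualifiedName ends in the pattern `.../<table>#<column>`, as is typical for Azure SQL columns; the server and database names in the usage line are made up, and split_column_qualified_name is a hypothetical helper.

```python
from typing import Tuple


def split_column_qualified_name(qualified_name: str) -> Tuple[str, str]:
    """Split a qualified name ending in '<table>#<column>' into (table, column)."""
    table_path, separator, column = qualified_name.rpartition("#")
    if not separator or not column:
        raise ValueError(f"No column part found in: {qualified_name!r}")
    # The table name is the last path segment before the '#'.
    table = table_path.rstrip("/").rsplit("/", 1)[-1]
    return table, column
```

For example, `split_column_qualified_name("mssql://myserver.database.windows.net/mydb/SalesLT/Product#Name")` returns `("Product", "Name")`.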
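The update step described above can be sketched as follows, assuming the payload returned by get_by_guid has an entity element with an attributes dictionary. Both helper names are hypothetical; only get_by_guid and create_or_update are calls on the catalog client's entity operations.

```python
import copy


def with_user_description(entity_response: dict, description: str) -> dict:
    """Return a copy of the entity payload with userDescription set."""
    updated = copy.deepcopy(entity_response)
    updated["entity"].setdefault("attributes", {})["userDescription"] = description
    return updated


def update_column_description(catalog_client, column_guid: str, description: str):
    """Fetch the column entity, set its description, and write it back."""
    entity_response = catalog_client.entity.get_by_guid(column_guid)
    payload = with_user_description(entity_response, description)
    return catalog_client.entity.create_or_update(payload)
```

Working on a copy keeps the original response untouched, which is convenient when logging what changed.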

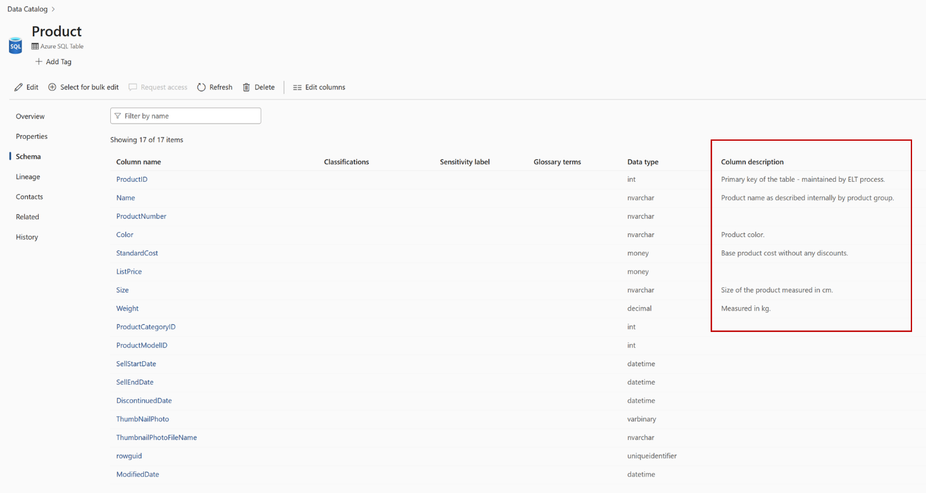

Once the script has finished running the create_or_update method, head over to the Purview portal and look up the Product table’s columns with their descriptions documented. Note that only the subset of columns listed in the Excel mapping file is documented.

The full code:

In conclusion, the whole program can be automated to document Purview entities dynamically from a data dictionary reference table. This article is a how-to guide for using the Python SDK for Purview to document entities programmatically, which is often needed when there is a large number of entities to document. The queryCollection function is the place to add further parameters so that the script loops dynamically through the entities to be documented. The Python script can be orchestrated by deploying the code to Azure Functions or by scheduling a notebook in Fabric to run at a set interval; there are several options to consider.

Read more on the entity payload here when working with Python SDK: azure.purview.catalog.operations package — Azure SDK for Python 2.0.0 documentation (windows.net).

Thank you to my colleague Samarendra Panda for sharing the design patterns.

GitHub repo maintained here: purview-playground/metadata_extract_for_reporting at main · Sam-Panda/purview-playground · GitHub.

Additional article on exploring Purview's REST API: Exploring Purview’s REST API with Python (microsoft.com)

For REST API documentation, please refer here: Entity - REST API (Azure Purview) | Microsoft Learn.
