Patient Referral Document Summarization using Azure OpenAI

Introduction

This article explores how the healthcare industry can use Generative AI, Large Language Model (LLM) evaluation metrics, and Machine Learning to streamline the patient referral process. Delays in reviewing referral documents can affect patient outcomes, so timely diagnosis and treatment are crucial. Generative AI Summarization enables hospitals to condense referral documents efficiently, speeding up patient admission and diagnosis while reducing physician review time. LLM evaluation metrics help build trust in the summarization pipeline, increasing physician confidence in AI-generated content.

Imagine the following scenario:

In a busy hospital setting, Dr. Jon Doe, a specialist, receives a referral for a critically ill patient. Typically, Dr. Doe would spend days reading through multiple referral documents to gather the pertinent information. With Generative AI Summarization powered by Azure technologies, Dr. Doe instead receives a concise summary of the patient's medical history, symptoms, and relevant tests within minutes. This accelerated process allows Dr. Doe to promptly assess the situation and initiate lifesaving treatment, ultimately improving patient outcomes. Because the summarization pipeline is backed by LLM evaluation metrics, Dr. Doe can trust that the AI-generated summary captures the essential details accurately. This scenario illustrates how AI and ML technologies can enable faster decision-making and better patient care.

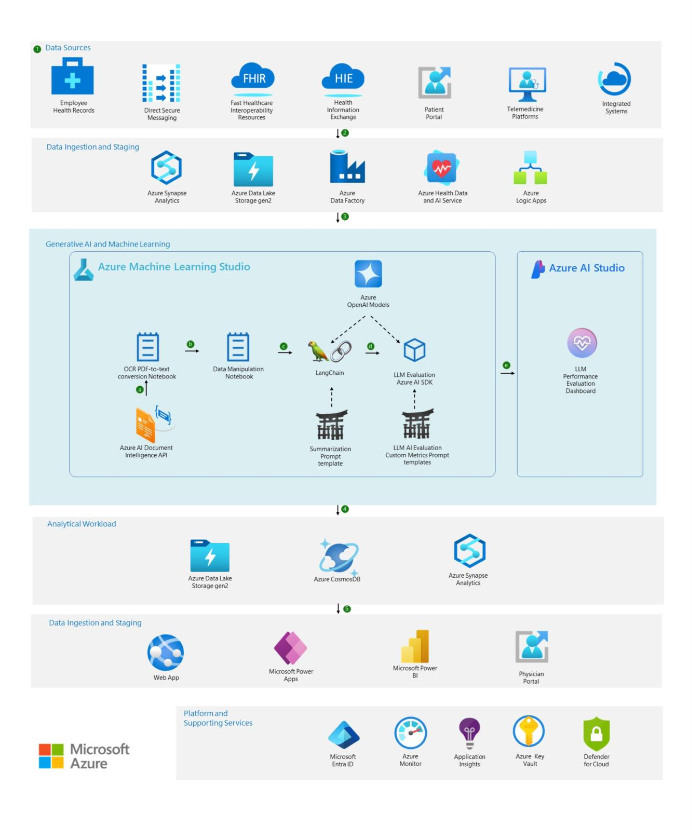

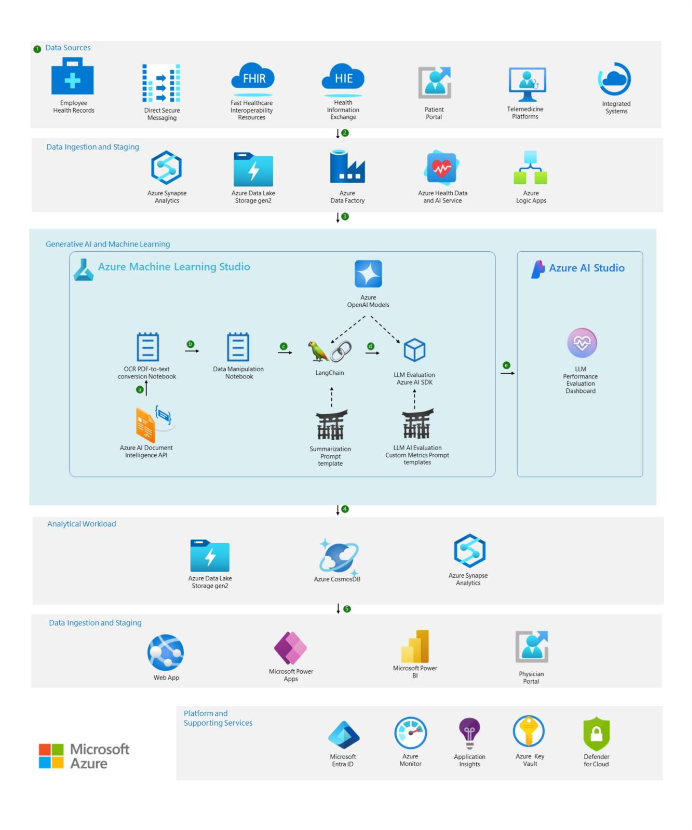

Architecture:

Use case workflow:

1. Data Sources

Efficient patient referral systems in healthcare rely on access to diverse sources of patient information, encompassing handwritten notes and digitized data from various sources. These include Electronic Health Record (EHR) systems, enabling secure electronic transmission of referral documents, and Direct Secure Messaging facilitated by Health Information Service Providers (HISPs). Health Information Exchange (HIE) networks allow for the seamless sharing of patient data among different healthcare entities, while patient portals offer secure document exchange between patients and providers. Adoption of interoperability standards like HL7 or FHIR enhances data exchange between systems, complemented by the integration of telemedicine platforms for secure document sharing. Hospitals within integrated healthcare systems benefit from centralized patient information management. Analyzing these diverse data sources provides physicians with comprehensive patient health insights, guiding treatment decisions prior to admission.

2. Data Ingestion and Staging

Data ingestion is the process of moving data from different sources to a specific location. This process requires using specific connectors for each data source and target location.

Azure Health Data Services enables rapid exchange of data through FHIR APIs, backed by a managed PaaS offering in the cloud. This service makes it easier for teams working with health data to ingest, manage, and persist Protected Health Information (PHI).
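To make the FHIR exchange more concrete, the following minimal sketch wraps a referral PDF in a FHIR R4 `DocumentReference` resource, which is one way a document could be represented before being sent to a FHIR API. The function name, patient ID, and title are hypothetical, and the HTTP call to the FHIR endpoint is intentionally omitted.

```python
import base64
import json


def build_document_reference(pdf_bytes: bytes, patient_id: str, title: str) -> dict:
    """Wrap a referral PDF in a minimal FHIR R4 DocumentReference resource."""
    return {
        "resourceType": "DocumentReference",
        "status": "current",
        "subject": {"reference": f"Patient/{patient_id}"},
        "content": [
            {
                "attachment": {
                    "contentType": "application/pdf",
                    # FHIR carries binary attachments as base64-encoded text.
                    "data": base64.b64encode(pdf_bytes).decode("ascii"),
                    "title": title,
                }
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes and identifiers, for illustration only.
    resource = build_document_reference(b"%PDF-1.7 example", "example-123", "Cardiology referral")
    print(json.dumps(resource, indent=2))
```

In practice, large PDFs would more likely be referenced by URL than embedded inline; the inline form is used here only to keep the sketch self-contained.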

Azure Data Factory offers a comprehensive range of connectors that can be leveraged to extract data from various sources, including databases, file systems, and cloud services. The health documents, usually in the form of PDFs, could be ingested using Azure Data Factory and placed inside a secure and private container inside Azure Data Lake Storage.

Logic Apps offer a visual designer to automate workflows and connect applications, data, and services across on-premises and cloud environments. By incorporating Logic Apps into the workflow, organizations can streamline data movement and processing tasks, further enhancing the efficiency and reliability of the data ingestion process.

3. Generative AI and Machine Learning

Azure Machine Learning Studio is a cloud-based service offering a user-friendly graphical interface (GUI) for constructing and operationalizing machine learning workflows within the Azure environment. Tailored to streamline the entire machine learning lifecycle, it facilitates tasks ranging from data preparation to model deployment and management, providing an integrated environment for building, training, and deploying machine learning models.

a. Data Extraction with Azure AI Document Intelligence

Referral documents, generally in unstructured PDF format, are securely stored in a private container within Azure Data Lake Storage (ADLS), with encryption at rest to safeguard sensitive information. In the workflow, an Azure ML Studio Python notebook retrieves the documents from ADLS and invokes the Azure AI Document Intelligence API. Its OCR (Optical Character Recognition) capabilities convert the referral PDFs into text, which makes retrieval and summarization easier in subsequent steps.
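The OCR step described above could look roughly like the sketch below. It assumes the `azure-ai-formrecognizer` Python package and its general-purpose `prebuilt-read` model; the function names, and the `endpoint` and `key` parameters, are placeholders, and downloading the PDF bytes from ADLS is left out.

```python
def is_pdf(data: bytes) -> bool:
    """Cheap pre-check: PDF files start with the '%PDF-' magic bytes."""
    return data.startswith(b"%PDF-")


def extract_text(pdf_bytes: bytes, endpoint: str, key: str) -> str:
    """Run OCR on one referral PDF and return its plain-text content."""
    if not is_pdf(pdf_bytes):
        raise ValueError("expected a PDF document")

    # Imported lazily so is_pdf() can be used without the Azure SDK installed.
    from azure.ai.formrecognizer import DocumentAnalysisClient
    from azure.core.credentials import AzureKeyCredential

    client = DocumentAnalysisClient(endpoint=endpoint, credential=AzureKeyCredential(key))
    # Analysis is a long-running operation, so the SDK returns a poller.
    poller = client.begin_analyze_document("prebuilt-read", document=pdf_bytes)
    return poller.result().content
```

In a production notebook, the key would typically come from Key Vault or be replaced by a Microsoft Entra ID credential rather than being passed around as a string.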

b. Data Manipulation for Summarization

Preparing data is a vital step in utilizing Generative AI foundational models for summarization. Prior to summarizing referral documents, it's essential to cleanse the text by eliminating irrelevant characters like special symbols, punctuation marks, or HTML tags that might hinder the summarization process. Furthermore, expanding abbreviations and acronyms to their full forms is crucial to maintain clarity and coherence in the summarization output. Additionally, tokenizing the text into smaller units, such as words or sub-words, helps create a structured input suitable for the OpenAI model.
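A minimal sketch of these preparation steps, using only the Python standard library, is shown below. The abbreviation map is a hypothetical three-entry example (a real deployment would need a curated clinical glossary), and splitting on whitespace is only a rough stand-in for the model's actual sub-word tokenizer.

```python
import html
import re

# Hypothetical abbreviation map, for illustration only.
ABBREVIATIONS = {
    "pt": "patient",
    "hx": "history",
    "htn": "hypertension",
}

_TAG_RE = re.compile(r"<[^>]+>")
_WORD_RE = re.compile(r"\b[A-Za-z]+\b")
_WS_RE = re.compile(r"\s+")


def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, expand abbreviations, collapse whitespace."""
    text = _TAG_RE.sub(" ", raw)
    text = html.unescape(text)

    def expand(match: re.Match) -> str:
        word = match.group(0)
        # Lookup is case-insensitive; unknown words are returned unchanged.
        return ABBREVIATIONS.get(word.lower(), word)

    text = _WORD_RE.sub(expand, text)
    return _WS_RE.sub(" ", text).strip()


def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
```

For example, `clean_text("<p>Pt has hx of HTN &amp; diabetes.</p>")` returns `"patient has history of hypertension & diabetes."`.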

c. Prompt Engineering & Summarization

To achieve precise summarization using Large Language Models (LLMs), prompt engineering is indispensable. Jinja templates offer a solution for crafting these prompts, which can also be conveniently stored in ADLS storage. Users have the flexibility to finely tune various prompts to invoke Azure OpenAI Chat Completion models, thereby generating customized summaries tailored to different types of referral documents.
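The idea of keeping one prompt template per referral type can be sketched as follows. To stay dependency-free, this example uses Python's built-in `string.Template` as a stand-in for Jinja; the template texts, document types, and function name are all hypothetical. The returned list follows the role/content message shape that chat completion models accept.

```python
from string import Template

# Hypothetical prompt templates, keyed by referral document type.
PROMPTS = {
    "cardiology": Template(
        "Summarize the cardiology referral below in at most $max_words words. "
        "Cover history, symptoms, and test results.\n\n$document"
    ),
    "default": Template(
        "Summarize the referral below in at most $max_words words.\n\n$document"
    ),
}

SYSTEM_MESSAGE = (
    "You summarize patient referral documents for physicians. "
    "Use only facts stated in the document."
)


def build_messages(document: str, doc_type: str = "default", max_words: int = 150) -> list[dict]:
    """Render the prompt for a document type into chat-style messages."""
    # Unknown document types fall back to the generic template.
    template = PROMPTS.get(doc_type, PROMPTS["default"])
    user_prompt = template.substitute(document=document, max_words=max_words)
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": user_prompt},
    ]
```

With Jinja, the same structure would apply, with the added benefit of loops and conditionals inside the template and templates loaded from files in ADLS.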

LangChain serves as an open-source orchestration framework designed to streamline the application-building process with LLMs. By leveraging the summary chain feature within LangChain, alongside OpenAI models and summary prompts, one can efficiently generate the required summaries for referral documents in the desired format.
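Long referral documents often exceed a model's context window, which is why summary chains commonly use a map-reduce pattern: summarize each chunk, then summarize the summaries. The sketch below illustrates that pattern in plain Python rather than LangChain's own API; the `llm` parameter is a hypothetical callable that takes a prompt and returns the model's text, and the prompt wording is illustrative.

```python
from typing import Callable


def split_into_chunks(text: str, max_chars: int) -> list[str]:
    """Greedily pack paragraphs into chunks of roughly max_chars characters.

    A single paragraph longer than max_chars is kept whole.
    """
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def map_reduce_summarize(text: str, llm: Callable[[str], str], max_chars: int = 4000) -> str:
    """Summarize each chunk, then merge the partial summaries."""
    chunks = split_into_chunks(text, max_chars)
    if not chunks:
        return ""
    # Map step: summarize each chunk independently.
    partials = [llm(f"Summarize this section of a referral document:\n\n{c}") for c in chunks]
    if len(partials) == 1:
        return partials[0]
    # Reduce step: combine the partial summaries into one.
    combined = "\n".join(partials)
    return llm(f"Combine these partial summaries into one summary:\n\n{combined}")
```

Passing the model as a callable keeps the chunking logic testable with a fake model and independent of any particular SDK version.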

d. LLM Evaluation using Azure AI SDK

Developing an evaluation strategy is crucial for instilling trust among users, stakeholders, and the broader hospital community in the output generated through summarizing referral documents using Generative AI Large Language Models (LLMs).

The Azure AI SDK offers a solution, providing both out-of-the-box AI-assisted metrics such as Groundedness, Coherence, Fluency, and Relevance, as well as the capability to create custom AI-assisted metrics tailored to business and stakeholder requirements.

These standardized and customized metrics help verify that the generated summaries meet quality standards for coherence, relevance, and groundedness. They also enable researchers and developers to compare different models or prompt variations and identify the most effective one. Evaluation also gauges how well LLMs generalize to unseen data formats, indicating their resilience and practical applicability. Ultimately, insights gathered from evaluation can guide iterative improvements to model selection, training methodologies, or prompt tuning strategies, thereby improving summarization performance.
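Once per-summary scores are available, comparing prompt variations reduces to simple aggregation. The sketch below assumes each metric is reported as a score on a 1-to-5 scale and that scores have already been collected into dictionaries; the function names, the variant names, and the groundedness threshold of 4.0 are illustrative assumptions, not part of the Azure AI SDK.

```python
from statistics import mean
from typing import Optional

METRICS = ("groundedness", "coherence", "fluency", "relevance")


def average_scores(rows: list[dict]) -> dict:
    """Average each metric over all evaluated summaries for one variant."""
    return {metric: mean(row[metric] for row in rows) for metric in METRICS}


def best_variant(results: dict, min_groundedness: float = 4.0) -> Optional[str]:
    """Pick the variant with the highest overall average score.

    Variants whose average groundedness falls below the threshold are
    rejected outright. Returns None if every variant is rejected.
    """
    best_name, best_score = None, float("-inf")
    for name, rows in results.items():
        averages = average_scores(rows)
        if averages["groundedness"] < min_groundedness:
            continue
        overall = mean(averages.values())
        if overall > best_score:
            best_name, best_score = name, overall
    return best_name
```

Treating groundedness as a hard gate rather than one term in an average reflects the clinical setting: a fluent summary that is not supported by the source document should not win on style alone.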

e. LLM Evaluation Dashboard for Performance Monitoring in Azure AI Studio

Azure AI Studio is a platform for developers building generative AI applications in an enterprise-grade environment. Through the Azure AI SDK and Azure AI CLI, developers can work with their projects in a code-first way. The platform is designed to accommodate developers of varying abilities and preferences. Through Azure AI Studio, users can explore, build, evaluate, and deploy AI tools and machine learning models while adhering to responsible AI practices. The platform also supports collaborative development, providing enterprise-grade security and a shared environment in which teams can access pre-trained models, data, and computational resources.

Azure AI Studio incorporates a Large Language Model (LLM) evaluation dashboard that streamlines monitoring. The dashboard simplifies tracking of the out-of-the-box and custom AI-assisted metrics used for evaluation. Developers and data scientists can use it to monitor these metrics continuously, so they can assess and respond to changes in the performance and reliability of the referral document summaries generated by the LLMs.

4. Analytical Workload

The summaries extracted from health documents can be stored in resilient analytics systems such as Azure Synapse Analytics, Azure Data Lake, or Azure Cosmos DB. The output produced in Azure Machine Learning (AML) notebooks, including results from the Large Language Model (LLM) evaluation workflow, is securely archived in any of these storage solutions. This storage strategy not only preserves the information securely but also supports future retrieval, in-depth analysis, and comparative assessment of the generated outputs.
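Storing each summary together with its evaluation scores makes later comparison straightforward. The sketch below shows one possible record shape serialized as a JSON document, as might be written to a document store or a data lake file; the class name, all field names, and the example path are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class SummaryRecord:
    """One stored summary plus the evaluation scores it received."""
    id: str
    patient_id: str
    source_document: str
    summary: str
    metrics: dict
    # Defaults to the current UTC time in ISO 8601 format.
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_json(record: SummaryRecord) -> str:
    """Serialize a record to a JSON string with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    record = SummaryRecord(
        id="ref-001",
        patient_id="example-123",
        source_document="referrals/ref-001.pdf",
        summary="Example summary text.",
        metrics={"groundedness": 5, "coherence": 4},
    )
    print(to_json(record))
```

Keeping the source document path and timestamp in the record allows a summary to be traced back to its input when metrics are compared across pipeline versions.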

5. End-user Consumption

Physicians can view the summaries in their portal. Instead of going through hundreds of documents, they read a single summary, which streamlines the patient referral process and speeds up admission for a potential patient. If needed, web apps and Power BI visualizations can also be built on top of the stored summaries.

Components

- Azure Synapse Analytics: Accelerates data insight with SQL, Spark, and Azure services integration.

- Azure Data Factory: Automates data movement and transformation in the cloud.

- Azure Data Lake: Limitless storage with easy integration and enterprise-grade security.

- Azure Health Data Services: Managed suite for healthcare data management and compliance.

- Azure AI Document Intelligence: Extracts text and data from documents with advanced machine learning.

- Azure OpenAI Service: Provides advanced language models for various AI use cases.

- Azure Machine Learning: Enterprise-grade service for model development and deployment.

- LangChain: Open-source framework for language model-powered applications.

- Power BI: Business analytics with rich connectors and visualization.

- Power Apps: Rapid development of custom business apps connecting to data sources.

- Azure AI Studio: Simplifies AI application development in the cloud.

- Microsoft Entra ID: Identity and access management for resource authentication.

- Key Vault: Key management solution for securing sensitive data.

- Azure Monitor: Comprehensive monitoring solution for cloud environments.

- Application Insights: Monitors web application availability and performance.

- Defender for Cloud: Cloud-native platform for application protection.

Potential Use Cases:

The integration of Generative Artificial Intelligence (GenAI) has the potential to bring transformative benefits across various industries. Here are alternative use cases in different sectors:

- Finance: Streamline financial report analysis for quicker decision-making.

- Retail: Summarize product reviews to understand customer sentiments rapidly.

- Manufacturing: Analyze quality control reports for efficient issue identification.

- Education: Automatically summarize educational content for quicker comprehension.

- Customer Service: Summarize emails to improve response times and communication.

- Human Resources: Streamline resume screening for efficient talent acquisition.

- Legal: Summarize complex legal documents for faster information extraction.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal authors:

- Manasa Ramalinga | Principal Cloud Solution Architect – US Customer Success

- Oscar Shimabukuro Kiyan | Senior Cloud Solution Architect – US Customer Success

- Abed Sau | Senior Cloud Solution Architect – US Customer Success
