Azure IaaS, Silk Platform, and Silk Instant Extracts: Relational Databases to Azure AI

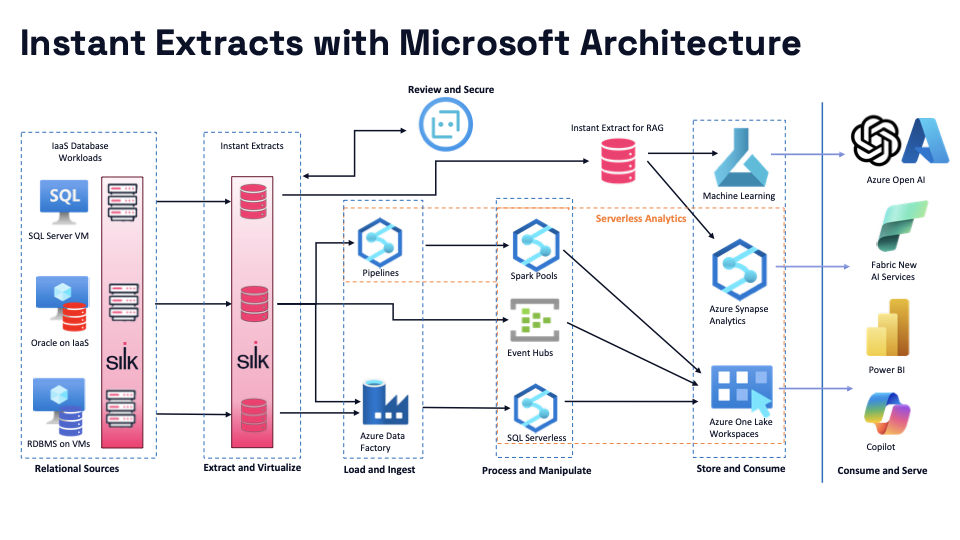

This guide explains how to integrate Oracle, SQL Server, and other database platforms running on an Azure Virtual Machine (VM), with Silk virtualizing the storage layer, and how to use Silk Instant Extracts to feed Azure AI services. It covers how to architect for success with Instant Extracts, how to connect with Azure Data Factory, Event Hubs, and Azure Synapse Analytics, and how to integrate with Azure services such as Fabric, Power BI, and Azure Machine Learning.

Organizations in the cloud often run legacy databases that are either in the process of modernizing or unable to modernize because of application or resource requirements, so these databases must run on Infrastructure as a Service (IaaS). These workloads can take advantage of advanced features in third-party services like Silk, which enable AI services without data leaving the relational database when SLAs demand it, and which support AI and ML initiatives without placing excess demand on the original RDBMS resources.

This document covers how to use Silk’s Instant Extract snapshot technology to provide additional read/write database copies for serverless ML/AI services and advanced analytics in Azure. For background on the RAG pattern referenced in section 5.4, see https://learn.microsoft.com/en-us/azure/search/retrieval-augmented-generation-overview

Prerequisites

- An active Azure subscription.

- An Azure Virtual Machine (VM) with Oracle, SQL Server, or another database platform installed.

- Silk Intelligent Data Platform in the Azure subscription and current tenant, supporting the underlying storage layer, compression, deduplication, and database virtualization.

- Basic knowledge of the Azure cloud, PowerShell, Azure Data Factory, Event Hubs, Azure Synapse Analytics, Fabric, Power BI, and Azure Machine Learning.

Step 1: Setting Up Your Environment

1.1 Azure VM Configuration

Ensure that your Azure VM is running and that the database (Oracle, SQL Server, etc.) is correctly installed and configured. Confirm that the recommended Azure network connectivity is in place and that network security groups (NSGs) restrict access appropriately.
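A quick way to confirm that the NSG rules allow the traffic you expect is a plain TCP reachability check against the database listener. The sketch below is illustrative only: it assumes the default listener ports (1433 for SQL Server, 1521 for Oracle), and the function name `can_connect` is hypothetical, not part of any Silk or Azure tooling.

```python
import socket

# Default listener ports; adjust if your instances use non-default ports.
DEFAULT_PORTS = {"sqlserver": 1433, "oracle": 1521}


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Calling `can_connect("10.0.1.4", DEFAULT_PORTS["sqlserver"])` from a machine in the same virtual network (the IP address here is a placeholder) returns `False` when an NSG rule or a stopped listener blocks the connection. A successful TCP connection shows only that the port is open, not that logins work.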

1.2 Install Silk Virtualization

Follow Silk's documentation in Azure Marketplace to install and configure Silk Intelligent Data Platform as part of your tenant, and attach each Azure VM to the Silk Data Pod(s). All database files should reside on Silk to ensure optimal performance and to take advantage of advanced features such as advanced compression, deduplication, and zero-footprint, read/write Instant Extracts.

Step 2: Creating Instant Extracts

2.1 Configure Silk Instant Extracts

- Collect vital information about the VM, such as the IP address or host name, database name(s), and the logins used, including certificates for SSH/RDP.

- Use the automated Instant Extract PowerShell scripts to produce an Instant Extract: a read/write database replica for analytics, machine learning, and AI that adds no workload pressure to the primary relational database.

- Execute the PowerShell script:

PS >.\New-VolumeSnapshot.ps1 -volumeGroupName prodsqldb -targetHostName sql -snapshotName InstantExtract01 -retentionPolicyName Analytics

- Specify the disk number and whether the extract is created with the source offline or online (most Instant Extracts are performed online):

PS >Set-Disk -Number 3 -IsOffline $false

With just a few arguments supplied to the script, the Instant Extract completes in a matter of minutes.
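If you schedule extracts from an orchestration host, it can help to assemble the script invocation programmatically. The following is a minimal sketch that only builds the argument list; it does not run anything. The parameter names come from the command shown above, while the helper names, the date-based naming scheme, and the use of `powershell.exe -NoProfile -File` are assumptions made for illustration.

```python
from datetime import date


def snapshot_name(prefix: str, day: date, sequence: int) -> str:
    """Build a name such as 'InstantExtract2026013001' (hypothetical scheme)."""
    return f"{prefix}{day:%Y%m%d}{sequence:02d}"


def build_snapshot_command(volume_group: str, target_host: str, name: str,
                           retention_policy: str,
                           script: str = r".\New-VolumeSnapshot.ps1",
                           shell: str = "powershell.exe") -> list:
    """Return the argument list for the snapshot script without executing it."""
    return [
        shell, "-NoProfile", "-File", script,
        "-volumeGroupName", volume_group,
        "-targetHostName", target_host,
        "-snapshotName", name,
        "-retentionPolicyName", retention_policy,
    ]
```

The resulting list can be passed to `subprocess.run(...)` on the host where the Silk scripts are installed. Keeping the arguments as a list rather than one string avoids shell quoting problems if a name ever contains spaces.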

2.2 Attach the Database and Validate

Using another PowerShell script, attach the extract:

PS >.\attachdatabases.ps1

Attaching database Instant Extract1…Done!

You can now use SQL Server Management Studio (SSMS) to view the database, including objects, just as you would the original database.

Repeat these steps for as many databases or Instant Extracts as the organization needs to support analytics, machine learning, and AI.
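Beyond a visual check in SSMS, validation can be scripted by comparing an object inventory from the source with one from the extract. The sketch below assumes you have already collected a `{table_name: row_count}` mapping from each database (how you query them is up to you); the function names and the report shape are hypothetical.

```python
def compare_inventories(source: dict, extract: dict) -> dict:
    """Compare {table_name: row_count} maps from the source and the extract."""
    missing = sorted(set(source) - set(extract))      # in source, not in extract
    unexpected = sorted(set(extract) - set(source))   # in extract, not in source
    mismatched = {
        name: (source[name], extract[name])
        for name in sorted(set(source) & set(extract))
        if source[name] != extract[name]
    }
    return {
        "missing": missing,
        "unexpected": unexpected,
        "row_count_mismatch": mismatched,
    }


def objects_match(report: dict) -> bool:
    """True when both databases contain exactly the same table names."""
    return not report["missing"] and not report["unexpected"]
```

Row counts are reported separately from the pass/fail check because an extract taken while the source is online reflects a point in time, so the counts in a busy source will drift after the snapshot.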

Step 3: Integrating with Azure Data Factory

3.1 Set Up Azure Data Factory

Create an Azure Data Factory instance if you haven't already. Configure the Data Factory to access the Instant Extract just as you would for any SQL Server database.
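Because the attached extract behaves like any SQL Server database, the Data Factory connection is an ordinary SQL Server linked service. The sketch below builds such a definition as JSON. Every name in it (the linked service, the integration runtime, the Key Vault linked service, the secret, and the login) is a placeholder, and it assumes the VM is reached through a self-hosted integration runtime with the password held in Azure Key Vault.

```python
import json


def sql_server_linked_service(name: str, server: str, database: str,
                              integration_runtime: str, key_vault_service: str,
                              secret_name: str, user_name: str) -> dict:
    """Build an ADF linked-service definition pointing at the attached extract."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                "connectionString": (
                    f"Data Source={server};Initial Catalog={database};"
                    "Integrated Security=False;"
                ),
                "userName": user_name,
                # The password is referenced from Key Vault, never inlined.
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": key_vault_service,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                },
            },
            # Assumption: the VM is private, so a self-hosted IR is used.
            "connectVia": {
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


def to_json(definition: dict) -> str:
    """Serialize a definition for pasting into the ADF code view."""
    return json.dumps(definition, indent=2)
```

Pointing `database` at the extract (for example `InstantExtract01`) rather than the production database is what keeps pipeline reads off the primary workload.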

3.2 Create Data Pipelines

Develop Azure Data Factory pipelines that extract data from the snapshots, perform the necessary transformations, and load the data into formats suitable for downstream processing.
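As an illustration of such a pipeline, the sketch below generates a minimal definition with a single Copy activity that reads from the extract and writes Parquet. The pipeline, activity, and dataset names and the query are placeholders, the datasets are assumed to exist already, and a production pipeline would normally add store settings, retry policies, and transformations.

```python
import json


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str,
                  query: str) -> dict:
    """Build a one-activity ADF pipeline: SQL Server source to Parquet sink."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyExtractToParquet",
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        # Reads run against the extract, not the primary database.
                        "source": {"type": "SqlServerSource", "sqlReaderQuery": query},
                        "sink": {"type": "ParquetSink"},
                    },
                }
            ]
        },
    }


def pipeline_json(definition: dict) -> str:
    """Serialize the definition for the ADF code view or a deployment template."""
    return json.dumps(definition, indent=2)
```

Generating the definition from a function makes it straightforward to stamp out one pipeline per Instant Extract when several copies feed different workloads.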

Step 4: Feeding into Event Hubs and Azure Synapse Analytics

4.1 Configure Event Hubs

Azure Event Hubs ingests real-time data from streaming sources, so events can enter the platform alongside the batch data moved by Data Factory.
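Event Hubs accepts events in size-limited batches, so a producer typically serializes rows and groups them before sending. The sketch below shows only that grouping step using the standard library; the byte limit is a parameter you supply, the function names are hypothetical, and the actual send (for example with the `azure-eventhub` SDK's producer client) is deliberately left out.

```python
import json
from typing import Iterable, Iterator, List


def to_event_bytes(row: dict) -> bytes:
    """Serialize one row as compact UTF-8 JSON."""
    return json.dumps(row, separators=(",", ":"), default=str).encode("utf-8")


def batch_events(rows: Iterable[dict], max_batch_bytes: int) -> Iterator[List[bytes]]:
    """Greedily group serialized rows so each batch stays within the limit."""
    batch: List[bytes] = []
    size = 0
    for row in rows:
        payload = to_event_bytes(row)
        if len(payload) > max_batch_bytes:
            raise ValueError("a single event exceeds the batch size limit")
        # Close the current batch when the next payload would overflow it.
        if batch and size + len(payload) > max_batch_bytes:
            yield batch
            batch, size = [], 0
        batch.append(payload)
        size += len(payload)
    if batch:
        yield batch
```

This counts payload bytes only. A real client also accounts for per-event and per-batch overhead, so leave headroom below the service limit or let the SDK's own batch object enforce it.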

4.2 Integrate with Azure Synapse Analytics

Load the processed data into Azure Synapse Analytics for advanced analytics and data warehousing. Configure Synapse to ingest data from both Azure Data Factory and Event Hubs. When real-time dashboard requirements must be met, organizations can analyze the streaming data immediately with Azure Stream Analytics.

As the architecture diagram shows, data can be stored centrally in Azure Data Lake as part of workspaces for further analysis and reporting, or simply retained in Instant Extracts and used when needed.

Step 5: Leveraging Azure Services for Insights and Analytics

5.1 Integrate with Fabric

Use Microsoft Fabric as a unified analytics platform for data engineering, data warehousing, and reporting. Connect Fabric with Synapse to automate workflows based on the analytical insights.

5.2 Visualize Data with Power BI

Connect Power BI to Azure Synapse Analytics to create interactive reports and dashboards. Ensure that Power BI has the necessary permissions to access Synapse data.

5.3 Leverage Azure Machine Learning

Configure Azure Machine Learning to use the data stored in Azure Synapse for building, training, and deploying machine learning models. Set up serverless endpoints to operationalize the models without managing infrastructure. Instant Extracts can also feed deep analysis in Azure Databricks or Apache Spark pools, optionally with Kafka, or feed Data Lake Storage Gen2 for use with additional Fabric services.

5.4 Maximizing Data Value with RAG

Integrating a relational database through a Silk Instant Extract copy with Azure AI services adds substantial value to retrieval-augmented generation (RAG), in which a model's responses are grounded in data retrieved from an organization's own sources, and it enhances data management, analysis, and compliance across the organization. In a RAG solution, a relational database serves as a robust foundation: a structured, reliable, and scalable store for critical data. That structure maintains data integrity and consistency, which are fundamental for effective retrieval, analysis, and governance.

Enterprise RDBMS distributions now include vector database capabilities. Combined with Instant Extracts, which can supply as many copies as AI workloads require, this lets Azure's advanced analytics, AI capabilities, and security features build on the relational data and further amplify its value in the RAG process.
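To make the vector capability concrete, the following is a toy sketch of similarity search over embeddings, the kind of lookup a vector-enabled database performs internally. It is not how any particular RDBMS implements it: the embeddings are tiny hand-written lists, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], rows: Dict[str, Sequence[float]],
          k: int = 2) -> List[str]:
    """Return the keys of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in rows.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [key for _, key in scored[:k]]
```

In practice the embeddings would come from an embedding model and the search would run inside the database or a search service against an Instant Extract copy, so that the lookups never compete with the production workload.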

For example, Azure's AI and machine learning services can analyze the data in the database to uncover insights, predict trends, and automate decision-making, without critical data leaving the relational database platform that an organization’s security policy requires, while still furthering the democratization of data. This integration lets organizations move beyond traditional descriptive analytics to predictive and prescriptive analytics, improving their ability to make informed decisions quickly.

Step 6: Monitoring and Maintenance

Regularly monitor the performance and health of your integration. Ensure that data flows seamlessly across the components and that the storage, compute, and networking resources are optimized for cost and performance.

The Synapse Monitor Hub monitors Azure Synapse pipelines, and Azure Monitor can monitor Data Factory, along with other resources such as IaaS.
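Once run history is exported from the monitoring tools, a small script can flag pipelines that fail too often. The sketch below assumes each run record is a dictionary with `pipelineName` and `status` keys and that finished runs report `Succeeded` or `Failed`. The record shape, the threshold, and the function names are illustrative assumptions, not an Azure API.

```python
from collections import Counter
from typing import Dict, Iterable, List


def summarize_runs(runs: Iterable[dict]) -> Dict[str, Counter]:
    """Count run statuses per pipeline."""
    summary: Dict[str, Counter] = {}
    for run in runs:
        summary.setdefault(run["pipelineName"], Counter())[run["status"]] += 1
    return summary


def failing_pipelines(runs: Iterable[dict], max_failure_rate: float = 0.2) -> List[str]:
    """Return pipelines whose share of failed finished runs exceeds the threshold."""
    flagged = []
    for name, counts in summarize_runs(runs).items():
        finished = counts["Succeeded"] + counts["Failed"]
        if finished and counts["Failed"] / finished > max_failure_rate:
            flagged.append(name)
    return sorted(flagged)
```

Runs that are still in progress are counted in the summary but ignored when computing the failure rate, so a pipeline is not flagged merely because it is currently running.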

Conclusion

By following these steps, you can effectively integrate various database platforms on an Azure VM with Silk virtualization into the Azure ecosystem, leveraging Azure Data Factory, Event Hubs, Azure Synapse Analytics, Fabric, Power BI, and Azure Machine Learning to drive insights and value from your data. Always adhere to best practices for security, monitoring, and maintenance to keep the data integration architecture robust and reliable.

The Silk PowerShell scripts are available in the public Silk GitHub repository:

https://github.com/silk-us/scripts-and-configs/tree/main/PowerShell
