Why you shouldn’t say 'please' or 'thank you' to AI (and why it matters)

We’ve all been there: asking ChatGPT, Copilot, or some other AI for something and instinctively saying “please” or “thank you.” It feels polite, right? But AI doesn’t care. Talking to it like it’s a person isn’t helping anyone, and it might actually harm how we think about these systems.

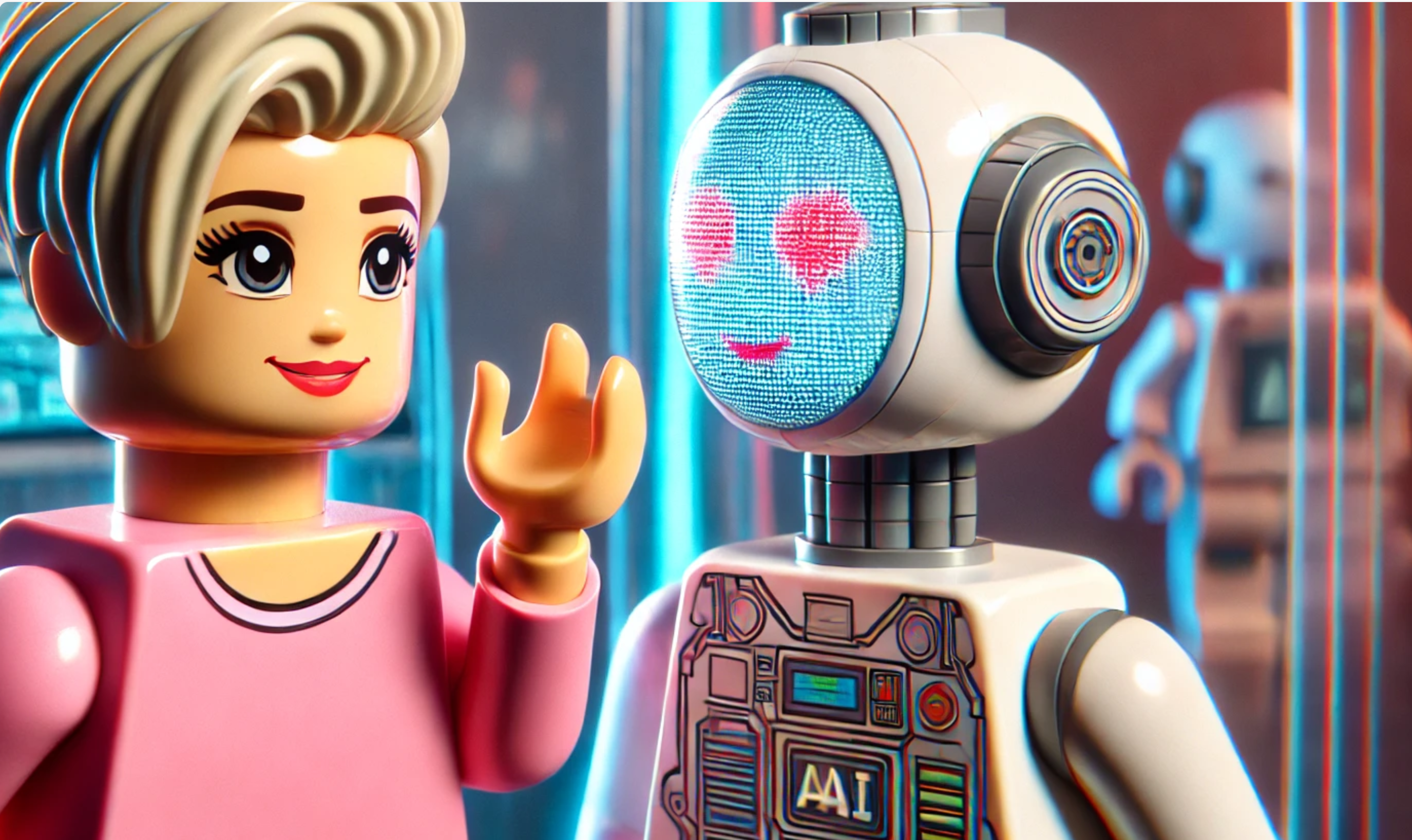

We humans love to project human traits onto things that aren’t human - a tendency called “anthropomorphism”. We name our cars, swear at our laptops when they freeze, and now we talk to AI as if it has thoughts and feelings. AI, however, doesn’t think, feel, or care about your mood. When we say “please” or “thank you” to it, we’re blurring the line between what’s human and what’s not.

This tendency to anthropomorphize AI is everywhere. We refer to AI as “learning”, “reasoning” or even “understanding”, but in reality, these systems aren’t experiencing anything like a human would. We talk about AI this way because it helps us make sense of the complex tech behind it. But in doing so, we risk overestimating its capabilities and assuming it has qualities - like empathy or understanding - that it simply doesn’t. This leads to problems:

- It creates false expectations. Every time we thank AI, we’re subtly reinforcing the idea that it understands us in the same way a human would. It doesn’t. AI processes data and performs tasks. It’s not reading between the lines, and it doesn’t “feel” appreciated when you’re polite.

- It distorts what AI can really do. Anthropomorphizing AI can make us think it’s more capable than it is. We might assume AI can “learn” like a human or make decisions with some form of understanding. But that’s not the case.

- It shifts responsibility away from us. The more we treat AI like it’s human, the more we might let go of our own responsibility. AI can’t be held accountable for its actions or biases - we can.

Oh yeah, how could I forget “Being Nice to the Future Overlords”? Some of us joke about being polite to AI “just in case they become our future robot overlords.” The idea is that if AI takes over, those of us who were nice to it will get a better deal. It’s a funny thought, but it also fuels this whole Terminator-style narrative where AI is a malevolent force waiting to exploit us. That’s not what AI is about - treating it like a potential overlord makes it harder to understand what AI really is: a tool designed by humans, for humans. When we treat AI like it’s something with power or agency, we buy into this dystopian idea of AI taking control, which really just distracts us from thinking about AI responsibly. The killer robot storyline harms our ability to grasp the real issues around AI, like bias, privacy, and ethical use.

If we want to use AI responsibly, we need to understand it better. It’s not enough to avoid anthropomorphizing it - we also need to know how to interact with it in ways that advance society.
