Your testing strategy is broken - let’s fix it

Test coverage is not quality

That’s a hard truth most teams don’t want to hear. But we need to. Because too many development teams are stuck in the wrong mindset: chasing 100% test coverage as if it guarantees safe deployments, stable apps, and happy users. Spoiler alert: It doesn’t.

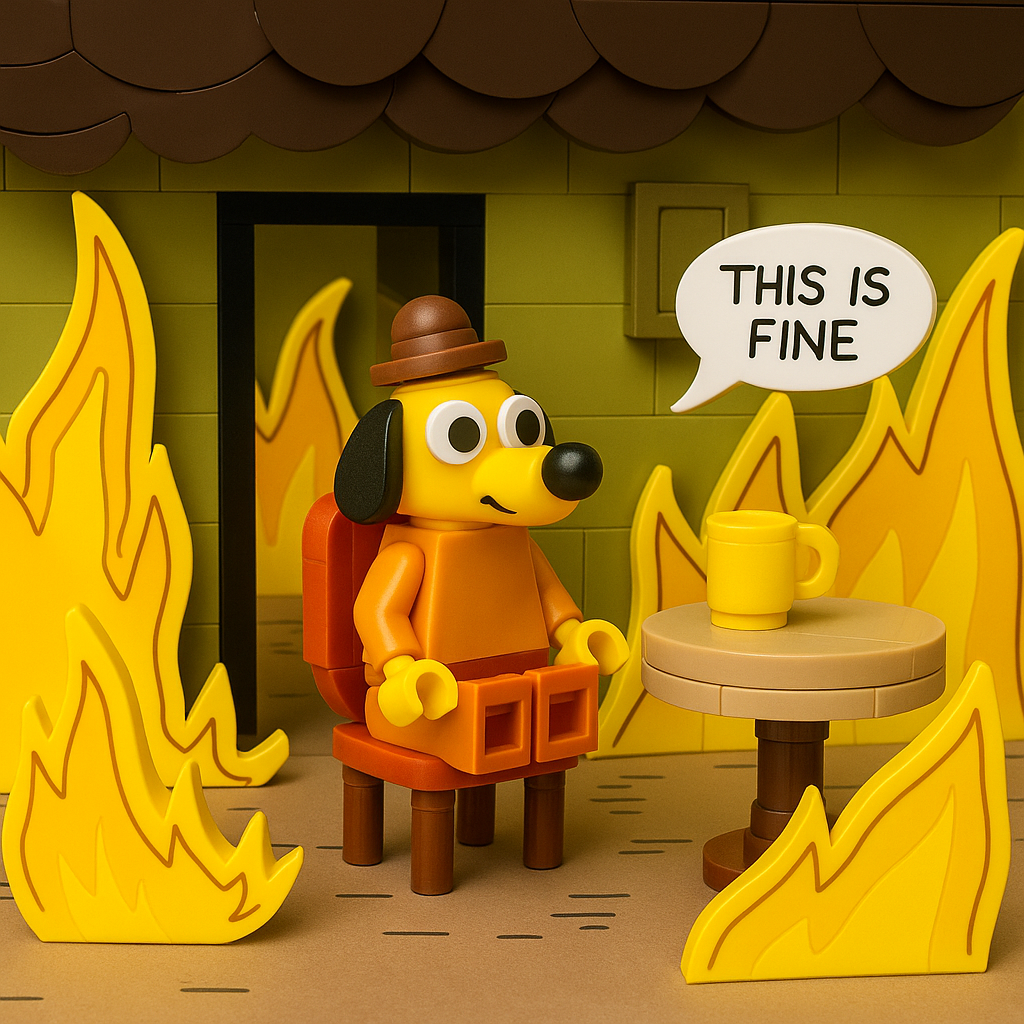

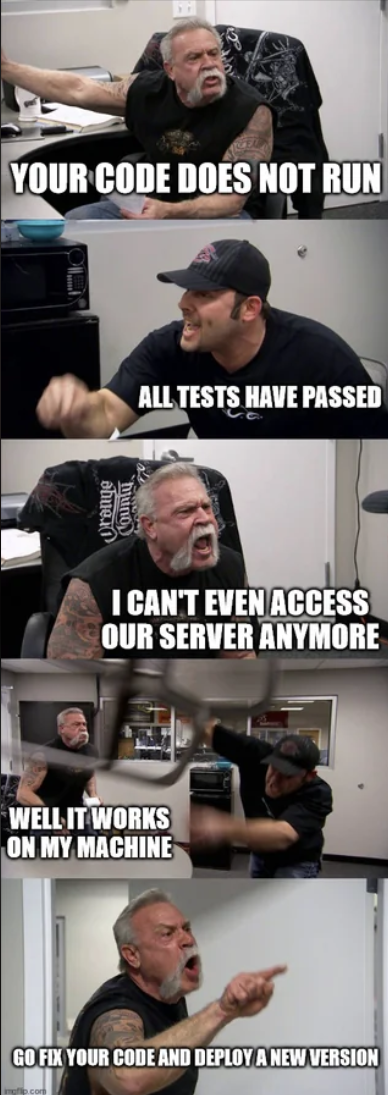

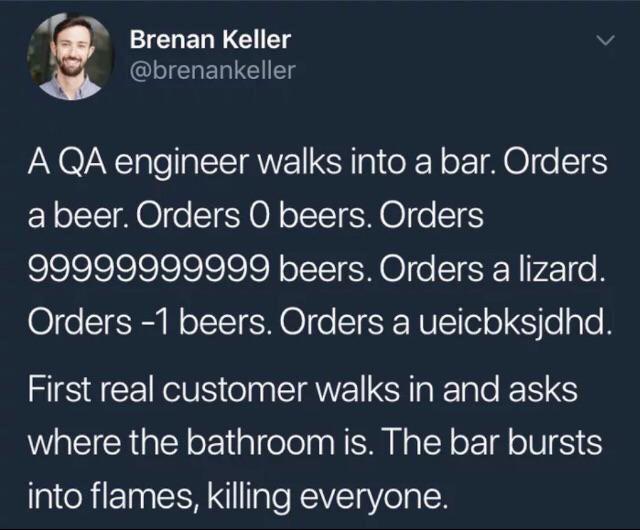

In fact, the more you worship coverage metrics, the more likely you are to miss the actual point of testing: to reduce risk and increase confidence in the system you ship. Most test suites in the wild aren’t robust safety systems. They’re duct-taped scaffolding, held together with mock data, over-simplified edge cases, and a hope that it probably won’t crash again 🤞🤞. You’ll find unit tests that mock everything except the actual behavior, stubs that assume the happy path, and assertions that check that a response exists rather than that it is correct.

While exact numbers vary, industry observations consistently show that many production defects stem from logic errors and integration issues, the kind that unit tests alone rarely catch. Reports from quality tooling vendors and enterprise case studies highlight how heavily mocked test suites miss real-world behavior and system contracts. The pattern is most common in integration-heavy systems whose test suites don’t include API contract validation. For example, many teams have reported incidents where upstream API changes, such as renamed fields or altered data types, slipped past their test suites because mocked data hid the real risk. Line coverage rarely catches these failures, and they often occur in systems that show high test coverage but lack true behavioral checks.

The coverage looks impressive. The confidence, in practice, is often misplaced.
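The renamed-field and altered-type failures described above are the kind of thing a contract check can surface. Here is a minimal sketch in Python, assuming a hypothetical user endpoint whose agreed fields are `id`, `email`, and `active`. The contract, the payload, and the `contract_violations` helper are all invented for illustration; a real project would more likely use a dedicated schema or contract-testing tool.

```python
from typing import Any, Dict, List

# Hypothetical contract: the fields and types our code relies on.
EXPECTED_CONTRACT: Dict[str, type] = {"id": int, "email": str, "active": bool}


def contract_violations(payload: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return a description of every field that is missing or has the wrong type."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        # Exact type comparison, so that True is not accepted where an int is expected.
        elif type(payload[field]) is not expected_type:
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


# Simulated upstream change: "email" was renamed and "id" became a string.
changed_payload = {"id": "42", "email_address": "a@example.com", "active": True}
violations = contract_violations(changed_payload, EXPECTED_CONTRACT)
```

Run against a real response from the upstream service (or a recorded one that is refreshed regularly), a check like this fails as soon as the provider changes shape. A hand-written mock would keep returning the old shape and stay green.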

The illusion of safety

Test coverage tells you which lines of code have been executed during testing. That’s it. Not whether they were tested with relevant inputs. Not whether they behaved correctly. Not whether they matter to users. This is how you end up with 92% coverage and still break production.
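To make the gap concrete, here is a minimal sketch in Python. The `apply_discount` helper, its deliberate bug, and both check functions are hypothetical and exist only to illustrate the point: the first check executes every line of the function and passes, while the second checks the value a customer would actually be charged.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical pricing helper with a deliberate bug."""
    if percent < 0 or percent > 100:
        raise ValueError("percent must be between 0 and 100")
    # BUG (deliberate): subtracts the raw percent instead of a share of the price.
    return price - percent


def weak_test() -> bool:
    """Executes every line of apply_discount but verifies almost nothing."""
    result = apply_discount(200.0, 10.0)
    try:
        apply_discount(200.0, 150.0)  # covers the validation branch
    except ValueError:
        pass
    return result is not None


def behavioral_test() -> bool:
    """Checks the outcome that matters: 10% off 200 should be 180."""
    return apply_discount(200.0, 10.0) == 180.0
```

Here `weak_test()` reports success with full line coverage of `apply_discount`, while `behavioral_test()` reports failure because the function returns 190. Note that a price of 100 would hide the bug entirely, which is why the choice of inputs matters as much as the lines they touch.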

Failures like that aren’t edge cases. They are blind spots: the kind you miss when your “quality gate” is a green bar glued on with test doubles and wishful thinking.

What coverage doesn’t tell you

Coverage can’t tell you if critical business flows actually work. It doesn’t confirm that your APIs behave correctly when the schema changes. It won’t catch performance regressions, accessibility issues, or security gaps. It tells you nothing about whether your tests reflect how real users interact with the system. You can hit every line and still fail when a third-party service returns null. Or break login for users with special characters in their email address. Or violate accessibility standards and expose your organization to lawsuits. If your strategy is built on coverage, you’re measuring activity, not assurance. You’re holding your production code together with duct tape and dashboard metrics.
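The null-response case above can be turned into an explicit check. The following Python sketch assumes a hypothetical `greeting_for` function that consumes a profile returned by a third-party service; the function, the field name `name`, and the sample inputs are invented for illustration.

```python
from typing import List, Optional


def greeting_for(profile: Optional[dict]) -> str:
    """Build a greeting from a (hypothetical) third-party profile response."""
    # The upstream service may return nothing, an empty object, or a null name.
    if not profile or not profile.get("name"):
        return "Hello!"
    return f"Hello, {profile['name']}!"


# Inputs chosen for risk, not for line coverage.
RISKY_PROFILES = [None, {}, {"name": None}, {"name": "Zoë O'Brien"}]


def run_risky_input_checks() -> List[str]:
    """Call greeting_for with each risky input and collect the results."""
    return [greeting_for(profile) for profile in RISKY_PROFILES]
```

A single happy-path call with a well-formed profile would cover only part of `greeting_for`, and even full line coverage would not show whether the inputs resemble what production actually sends. Listing the risky inputs explicitly keeps that question visible.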

Why business apps are especially at risk

Enterprise applications such as ERPs, CRMs, finance systems, and low-code tools amplify the problem. They integrate across systems. They rely on real-world, unpredictable data. They must meet compliance, access control, and auditing requirements. A green coverage report in this world is like saying the blueprint looks fine while the building has no plumbing. Or heating. Or working doors.

The real cost of bad testing

The cost of getting it wrong is massive. Gartner estimates the average cost of IT downtime at around $5,600 per minute, which works out to $336,000 per hour. In large enterprises, that number can spike much higher. Amazon once lost an estimated $2.6 million from just 13 minutes of downtime, or roughly $200,000 per minute. When it comes to data breaches, IBM’s 2023 Cost of a Data Breach Report puts the global average at $4.45 million.

These are just the direct financial losses. The indirect costs cut deeper: burned-out developers scrambling to patch things that should’ve been caught earlier, users losing trust after yet another “oops” moment, and a team culture where people quietly tape things back together instead of fixing the foundation.

Fix it at the source: requirements

If testing happens after coding, it’s already too late. At that point, you’re not validating understanding — you’re validating assumptions you hope were right. Testing should begin the moment you define the requirement.

- What’s the expected behavior?

- What’s the risk if it fails?

- How will we know it works — and how will we prove it?

Good teams test. Great teams integrate testing into the requirement process.

Modern engineering teams treat testing as part of requirements engineering. They don’t separate the “what” from the “how we’ll know it worked”. When a feature is proposed, so is its verification. A requirement without a testable outcome is a red flag 🚩.

This is where Behavior-Driven Development (BDD) and example mapping change the game. Instead of vague feature specs, teams define shared examples: edge cases, failure modes, and success criteria. A user story becomes a testable scenario: “Given a customer has no active subscription, when they log in, then they are redirected to the upgrade screen.” It’s not just about automation; it’s about alignment. When tests are derived from well-defined requirements:

- Developers know what success looks like

- QA doesn’t need to reverse-engineer intent

- Business stakeholders can read and understand the criteria

- Ambiguities are exposed before they become bugs
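The subscription scenario above maps almost line for line onto an executable test. This is a minimal Python sketch, not a prescribed implementation: the `Customer` class, the `landing_page_after_login` function, and the `/upgrade` and `/dashboard` paths are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    """Hypothetical customer model with only the field the scenario needs."""
    has_active_subscription: bool


def landing_page_after_login(customer: Customer) -> str:
    """Hypothetical routing rule: where a customer lands after logging in."""
    return "/dashboard" if customer.has_active_subscription else "/upgrade"


def test_customer_without_subscription_is_sent_to_upgrade() -> None:
    # Given a customer has no active subscription
    customer = Customer(has_active_subscription=False)
    # When they log in
    destination = landing_page_after_login(customer)
    # Then they are redirected to the upgrade screen
    assert destination == "/upgrade"
```

Because the test name and comments reuse the wording of the requirement, a product owner can read it and confirm that it says what they meant. Tools such as Cucumber or pytest-bdd go a step further and keep the scenario text in a separate, business-readable file.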

This practice shifts the energy from writing tests that merely touch the code to designing tests that challenge the logic, the integration, the value. It closes the gap between “we thought it worked” and “we proved it works”.

It also changes team dynamics. Now, QA isn’t just testing functionality, they’re challenging clarity. Product owners aren’t just writing features, they’re shaping outcomes. Developers aren’t just building to spec, they’re validating intent.

This is where you stop taping quality on at the end and start designing it in from the beginning.

What to do instead of chasing 100%

Shift your strategy. Focus on critical user flows: the parts of the system that matter most in production. Write tests that validate behavior and integration, not trivial implementation details. Treat accessibility and security as testable requirements, not afterthoughts. Use coverage as a signal, not a goal. And involve QA from the start of the process, not the end.
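As one illustration of treating accessibility as a testable requirement, the sketch below checks a single, deliberately simplified rule: every `<img>` element must carry an `alt` attribute. It uses only Python’s standard-library `html.parser`; the `MissingAltFinder` class and `images_missing_alt` function are hypothetical names, and a real accessibility audit needs dedicated tooling and manual review on top of checks like this.

```python
from html.parser import HTMLParser
from typing import List


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # An empty alt="" is allowed: it marks an image as decorative.
        if "alt" not in attr_map:
            self.missing.append(attr_map.get("src") or "<no src>")


def images_missing_alt(html: str) -> List[str]:
    """Return the sources of images that violate the simplified alt-text rule."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


sample_page = '<img src="logo.png" alt="Company logo"><img src="chart.png">'
missing = images_missing_alt(sample_page)
```

A check like this can run against rendered pages in the same pipeline as the functional tests, so an accessibility regression blocks a release the same way a failing login test would.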

Final thoughts

Testing isn’t about writing more tests. It’s about writing the right ones. Coverage makes you feel safe. Confidence means you are safe. Because if your test strategy is held together by tape and bubble gum, you can expect it to fall apart the moment anyone leans on it.

This blog post is the first part of a series on testing - stay tuned for the following parts or just hit the RSS button 💡
