Azure VMware Solution Performance Design Considerations

Overview

A global enterprise wants to migrate thousands of VMware vSphere virtual machines (VMs) to Microsoft Azure as part of their application modernization strategy. The first step is to exit their on-premises data centers and rapidly relocate their legacy application VMs to the Azure VMware Solution as a staging area for the first phase of their modernization strategy. What should the Azure VMware Solution look like?

Azure VMware Solution is a VMware-validated, first-party Azure service from Microsoft that provides private clouds containing VMware vSphere clusters built from dedicated bare-metal Azure infrastructure. It enables customers to leverage their existing investments in VMware skills and tools, allowing them to focus on developing and running their VMware-based workloads on Azure.

In this post, I will introduce the typical customer workload performance requirements, describe the Azure VMware Solution architectural components, and describe the performance design considerations for Azure VMware Solution private clouds.

In the next section, I will introduce the typical performance requirements of a customer’s workload.

Customer Workload Requirements

A typical customer has multiple application tiers that have specific Service Level Agreement (SLA) requirements that need to be met. These SLAs are normally named by a tiering system such as Platinum, Gold, Silver, and Bronze or Mission-Critical, Business-Critical, Production, and Test/Dev. Each SLA will have different availability, recoverability, performance, manageability, and security requirements that need to be met.

For the performance design quality, customers will normally have CPU, RAM, Storage and Network requirements. This is normally documented for each application and then aggregated into the total performance requirements for each SLA. For example:

| SLA Name | CPU | RAM | Storage | Network |
| --- | --- | --- | --- | --- |
| Gold | Low vCPU:pCore ratio (<1 to 2), Low VM to Host ratio (2-8) | No RAM oversubscription (<1) | High Throughput or High IOPS (for a particular I/O size), Low Latency | High Throughput, Low Latency |
| Silver | Medium vCPU:pCore ratio (5 to 8), Medium VM to Host ratio (10-15) | Medium RAM oversubscription ratio (1.1-1.3) | Medium Latency | Medium Latency |
| Bronze | High vCPU:pCore ratio (10-15), High VM to Host ratio (20+) | High RAM oversubscription ratio (1.5-2) | High Latency | High Latency |

Table 1 – Typical Customer SLA requirements for Performance

The performance concepts introduced in Table 1 have the following definitions:

- CPU: CPU model and speed (this can be important for legacy single threaded applications), number of cores, vCPU to physical core ratios.

- Memory: Random Access Memory size, Input/Output (I/O) speed and latency, oversubscription ratios.

- Storage: Capacity, Read/Write Input/Output per Second (IOPS) with Input/Output (I/O) size, Read/Write Input/Output Latency.

- Network: In/Out Speed, Network Latency (Round Trip Time).
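The CPU and RAM ratios used in Table 1 can be computed directly from a VM inventory. The following is a minimal Python sketch, not part of any Azure or VMware tooling: the `VM` record, the function names, and the sample inventory are all hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class VM:
    # Hypothetical inventory record, for illustration only.
    name: str
    vcpus: int
    ram_gb: int


def vcpu_to_pcore_ratio(vms, hosts, cores_per_host):
    """Total provisioned vCPUs divided by total physical cores."""
    return sum(vm.vcpus for vm in vms) / (hosts * cores_per_host)


def ram_oversubscription_ratio(vms, hosts, ram_gb_per_host):
    """Total provisioned VM RAM divided by total physical RAM."""
    return sum(vm.ram_gb for vm in vms) / (hosts * ram_gb_per_host)


def vm_to_host_ratio(vms, hosts):
    """Average number of VMs per host."""
    return len(vms) / hosts


# Hypothetical inventory: 24 VMs, each 8 vCPU / 64 GB, on 4 hosts
# with 36 physical cores and 576 GB of RAM each.
vms = [VM(f"vm{i:02d}", vcpus=8, ram_gb=64) for i in range(24)]

print(f"vCPU:pCore {vcpu_to_pcore_ratio(vms, 4, 36):.2f}")
print(f"RAM        {ram_oversubscription_ratio(vms, 4, 576):.2f}")
print(f"VM:Host    {vm_to_host_ratio(vms, 4):.1f}")
```

With these sample figures, all three ratios fall inside the Gold ranges of Table 1.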

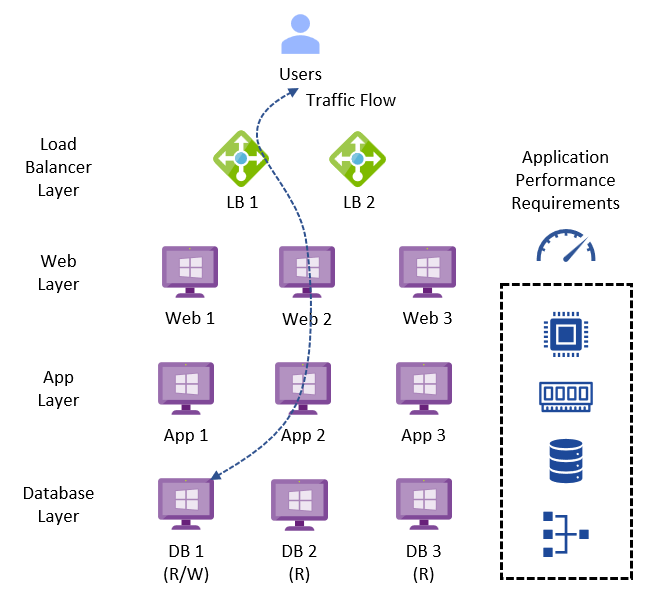

A typical legacy business-critical application will have the following application architecture:

- Load Balancer layer: Uses load balancers to distribute traffic across multiple web servers in the web layer to improve application availability.

- Web layer: Uses web servers to process client requests made via the secure Hypertext Transfer Protocol (HTTPS). Receives traffic from the load balancer layer and forwards to the application layer.

- Application layer: Uses application servers to run software that delivers a business application through a communication protocol. Receives traffic from the web layer and uses the database layer to access stored data.

- Database layer: Uses a relational database management system (RDBMS) cluster to store data and provide database services to the application layer.

The application can also be classified as OLTP or OLAP, which have the following characteristics:

- Online Transaction Processing (OLTP) is a type of data processing that consists of executing many transactions concurrently. Examples include online banking, retail shopping, and sending text messages. OLTP systems tend to have a performance profile that is latency sensitive, with choppy CPU demands and small amounts of data being read and written.

- Online Analytical Processing (OLAP) is a technology that organizes large business databases and supports complex analysis. It can be used to perform complex analytical queries without negatively impacting transactional systems (OLTP). Examples include data warehouse systems, business performance analysis, and marketing analysis. OLAP systems tend to have a performance profile that is latency tolerant, requires large amounts of storage for records processing, and has a steady state of CPU, RAM, and storage throughput.

Depending upon the performance requirements for each service, infrastructure design could be a mix of technologies used to meet the different performance SLAs with cost efficiency.

Figure 1 – Typical Legacy Business-Critical Application Architecture

In the next section, I will introduce the architectural components of the Azure VMware Solution.

Architectural Components

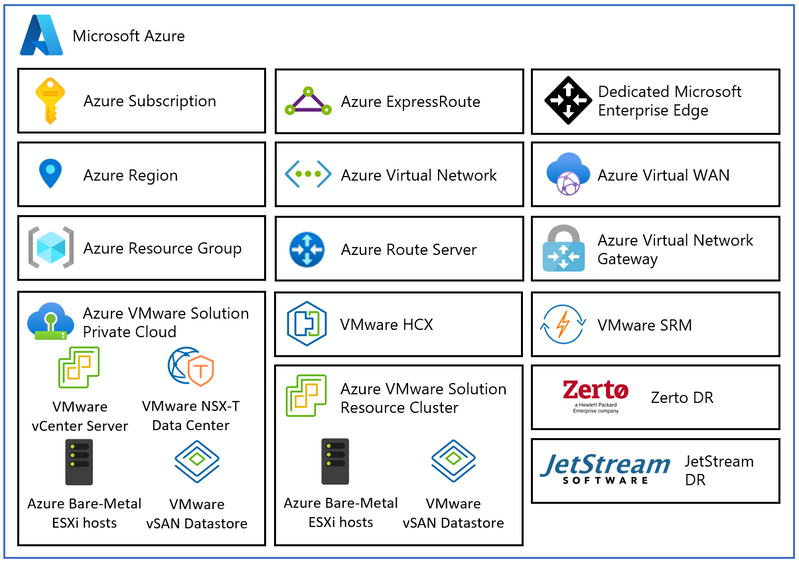

Figure 2 describes the architectural components of the Azure VMware Solution.

Figure 2 – Azure VMware Solution Architectural Components

Each Azure VMware Solution architectural component has the following function:

- Azure Subscription: Used to provide controlled access, budget, and quota management for the Azure VMware Solution.

- Azure Region: Physical locations around the world where we group data centers into Availability Zones (AZs) and then group AZs into regions.

- Azure Resource Group: Container used to place Azure services and resources into logical groups.

- Azure VMware Solution Private Cloud: Uses VMware software, including vCenter Server, NSX-T Data Center software-defined networking, vSAN software-defined storage, and Azure bare-metal ESXi hosts to provide compute, networking, and storage resources.

- Azure VMware Solution Resource Cluster: Uses VMware software, including vSAN software-defined storage, and Azure bare-metal ESXi hosts to provide compute, networking, and storage resources for customer workloads by scaling out the Azure VMware Solution private cloud.

- VMware HCX: Provides mobility, migration, and network extension services.

- VMware Site Recovery: Provides Disaster Recovery automation and storage replication services with VMware vSphere Replication. Third party Disaster Recovery solutions Zerto Disaster Recovery and JetStream Software Disaster Recovery are also supported.

- Dedicated Microsoft Enterprise Edge (D-MSEE): Router that provides connectivity between Azure cloud and the Azure VMware Solution private cloud instance.

- Azure Virtual Network (VNet): Private network used to connect Azure services and resources together.

- Azure Route Server: Enables network appliances to exchange dynamic route information with Azure networks.

- Azure Virtual Network Gateway: Cross premises gateway for connecting Azure services and resources to other private networks using IPSec VPN, ExpressRoute, and VNet to VNet.

- Azure ExpressRoute: Provides high-speed private connections between Azure data centers and on-premises or colocation infrastructure.

- Azure Virtual WAN (vWAN): Aggregates networking, security, and routing functions together into a single unified Wide Area Network (WAN).

In the next section, I will describe the performance design considerations for the Azure VMware Solution.

Performance Design Considerations

The architectural design process takes the business problem to be solved and the business goals to be achieved and distills these into customer requirements, design constraints and assumptions. Design constraints can be characterized by the following three categories:

- Laws of the Land – data and application sovereignty, governance, compliance, etc.

- Laws of Physics – data and machine gravity, network latency, etc.

- Laws of Economics – owning versus renting, total cost of ownership (TCO), return on investment (ROI), capital expenditure, operational expenditure, earnings before interest, taxes, depreciation, and amortization (EBITDA), etc.

Each design consideration will be a trade-off between availability, recoverability, performance, manageability, and security design qualities. The desired result is to deliver business value with the minimum of risk by working backwards from the customer problem.

Design Consideration 1 – Azure Region: Azure VMware Solution is available in 27 Azure Regions around the world. Select the relevant Azure Regions that meet your geographic requirements. These locations will typically be driven by your design constraints and the required Azure services that will be dependent upon the Azure VMware Solution.

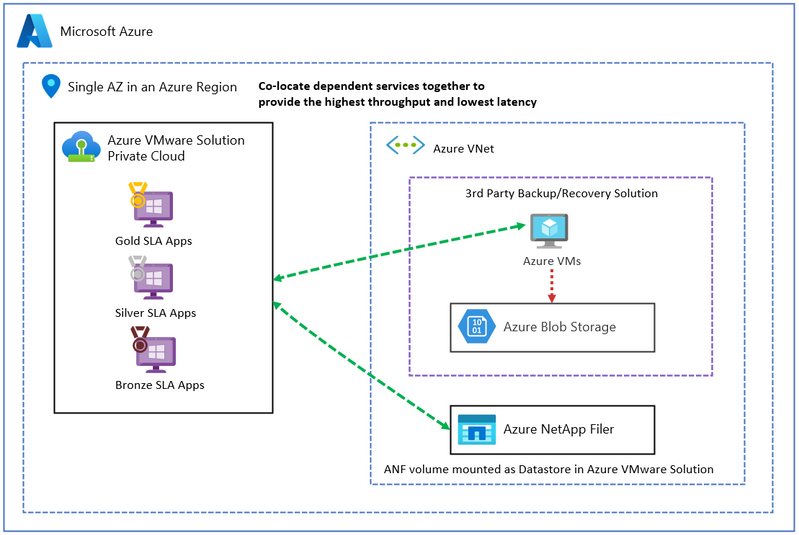

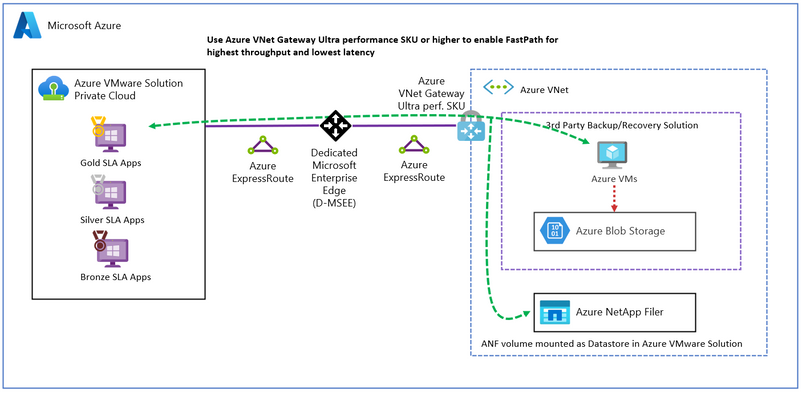

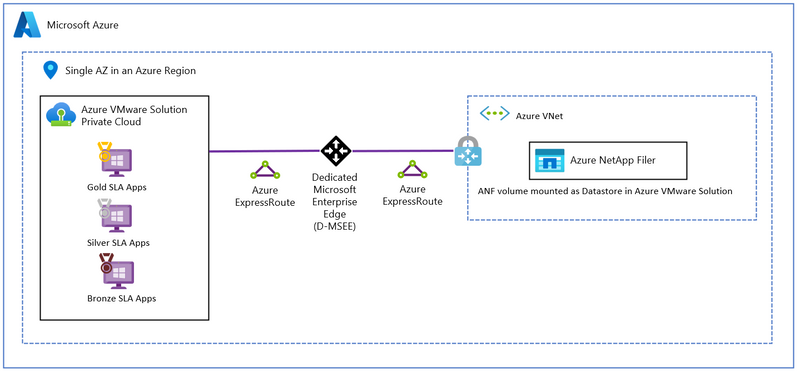

For the highest throughput and lowest network latency, the Azure VMware Solution and dependent Azure services, such as third-party backup/recovery and Azure NetApp Files volumes, should be placed in the same Availability Zone in an Azure Region.

Unfortunately, the Azure VMware Solution does not have a Placement Policy Group feature to allow Azure services to be automatically deployed in the same Availability Zone. You will have to work with your Microsoft account team to ensure that your Azure services are placed as closely together as possible.

In addition, the proximity of the Azure Region to the remote users and applications consuming the service should also be considered for network latency and throughput.

Figure 3 – Azure VMware Solution Availability Zone Placement for Performance

Design Consideration 2 – SKU type: Table 2 lists the three SKU types that can be selected for provisioning an Azure VMware Solution private cloud. Depending upon the workload performance requirements, the AV36 and AV36P nodes can be used for general purpose compute and the AV52 nodes can be used for compute intensive and storage heavy workloads.

The AV36 SKU is widely available in most Azure regions, while the AV36P and AV52 SKUs are limited to certain Azure regions. Azure VMware Solution does not support mixing different SKU types within a private cloud. You can check Azure VMware Solution SKU availability by Azure Region on the Azure Products by Region page.

Currently, Azure VMware Solution does not have SKUs that support GPU hardware.

For more information, refer to SKU types.

| SKU Type | Purpose | CPU (Cores/GHz) | RAM (GB) | vSAN Cache Tier (TB, raw) | vSAN Capacity Tier (TB, raw) | Network Interface Cards |
| --- | --- | --- | --- | --- | --- | --- |
| AV36 | General Purpose Compute | Dual Intel Xeon Gold 6140 CPUs (Skylake microarchitecture) with 18 cores/CPU @ 2.3 GHz, Total 36 physical cores (72 logical cores with hyperthreading) | 576 | 3.2 (NVMe) | 15.20 (SSD) | 4x 25 Gb/s NICs (2 for management & control plane, 2 for customer traffic) |
| AV36P | General Purpose Compute | Dual Intel Xeon Gold 6240 CPUs (Cascade Lake microarchitecture) with 18 cores/CPU @ 2.6 GHz / 3.9 GHz Turbo, Total 36 physical cores (72 logical cores with hyperthreading) | 768 | 1.5 (Intel Cache) | 19.20 (NVMe) | 4x 25 Gb/s NICs (2 for management & control plane, 2 for customer traffic) |
| AV52 | Compute/Storage heavy workloads | Dual Intel Xeon Platinum 8270 CPUs (Cascade Lake microarchitecture) with 26 cores/CPU @ 2.7 GHz / 4.0 GHz Turbo, Total 52 physical cores (104 logical cores with hyperthreading) | 1,536 | 1.5 (Intel Cache) | 38.40 (NVMe) | 4x 25 Gb/s NICs (2 for management & control plane, 2 for customer traffic) |

Table 2 – Azure VMware Solution SKUs
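The per-host figures in Table 2 can be turned into a rough host-count estimate for a given SLA. The sketch below is a simplified illustration, not a replacement for a proper sizing assessment: the function name, parameters, and sample demand are hypothetical, it considers only CPU and RAM, and it adds no headroom for host failures or maintenance.

```python
import math

# Physical cores and RAM per host, copied from Table 2.
SKUS = {
    "AV36": {"cores": 36, "ram_gb": 576},
    "AV36P": {"cores": 36, "ram_gb": 768},
    "AV52": {"cores": 52, "ram_gb": 1536},
}


def hosts_needed(sku, total_vcpus, total_ram_gb,
                 vcpu_per_core, ram_oversub=1.0, min_hosts=3):
    """Estimate hosts from CPU and RAM demand (hypothetical helper).

    vcpu_per_core and ram_oversub are the target ratios for the SLA.
    min_hosts defaults to 3, the smallest cluster size the service allows.
    """
    spec = SKUS[sku]
    by_cpu = math.ceil(total_vcpus / (spec["cores"] * vcpu_per_core))
    by_ram = math.ceil(total_ram_gb / (spec["ram_gb"] * ram_oversub))
    return max(by_cpu, by_ram, min_hosts)


# Hypothetical demand: 1,000 vCPUs and 6,000 GB RAM at a 4:1 vCPU:pCore
# ratio with no RAM oversubscription.
for sku in SKUS:
    print(sku, hosts_needed(sku, 1000, 6000, vcpu_per_core=4))
```

For this sample demand the AV36 estimate is bound by RAM rather than CPU, which shows why both dimensions need to be checked when comparing SKUs.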

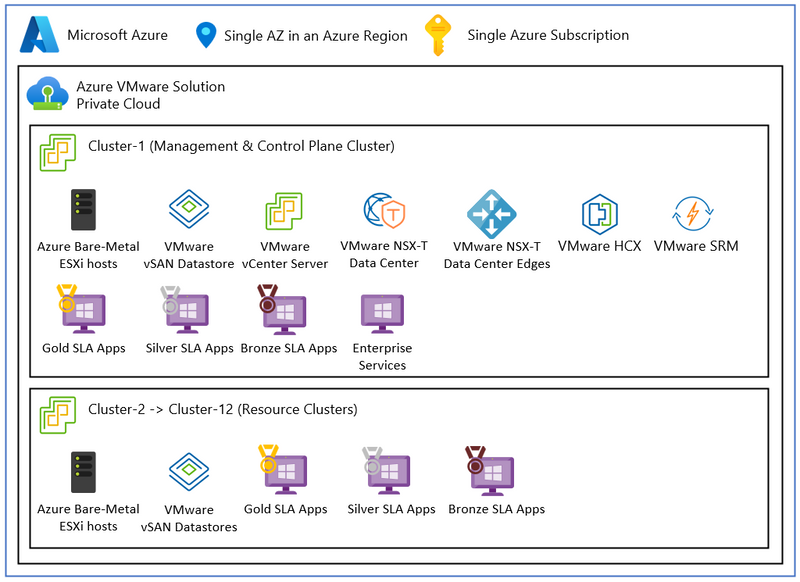

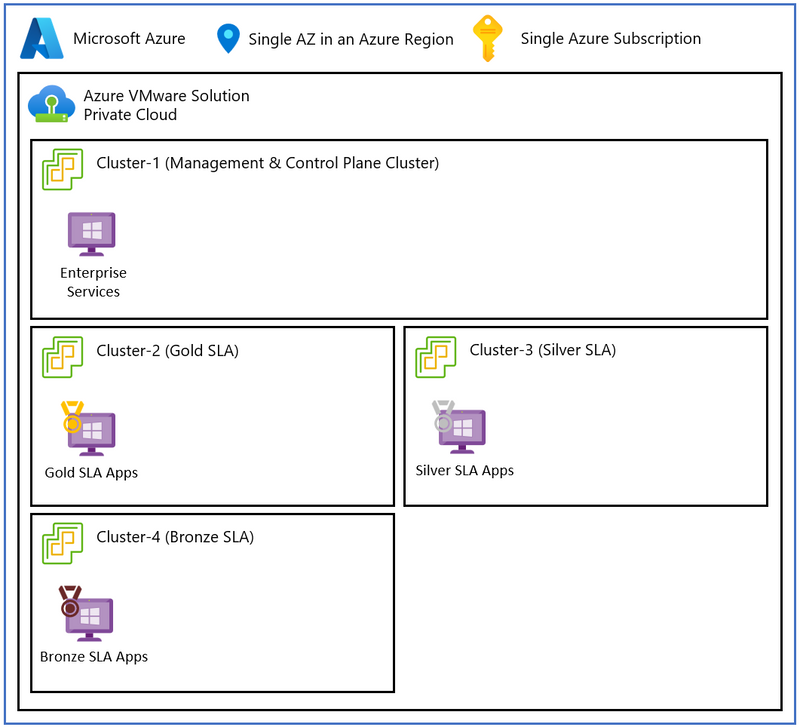

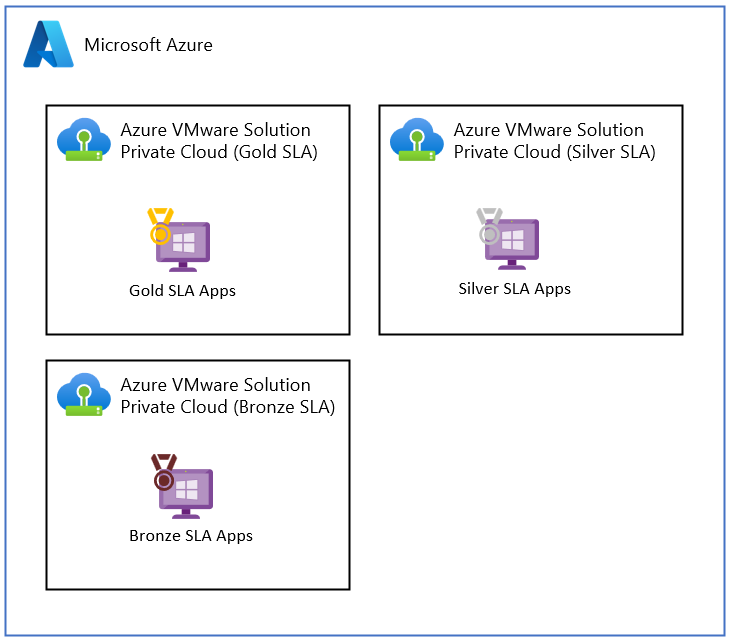

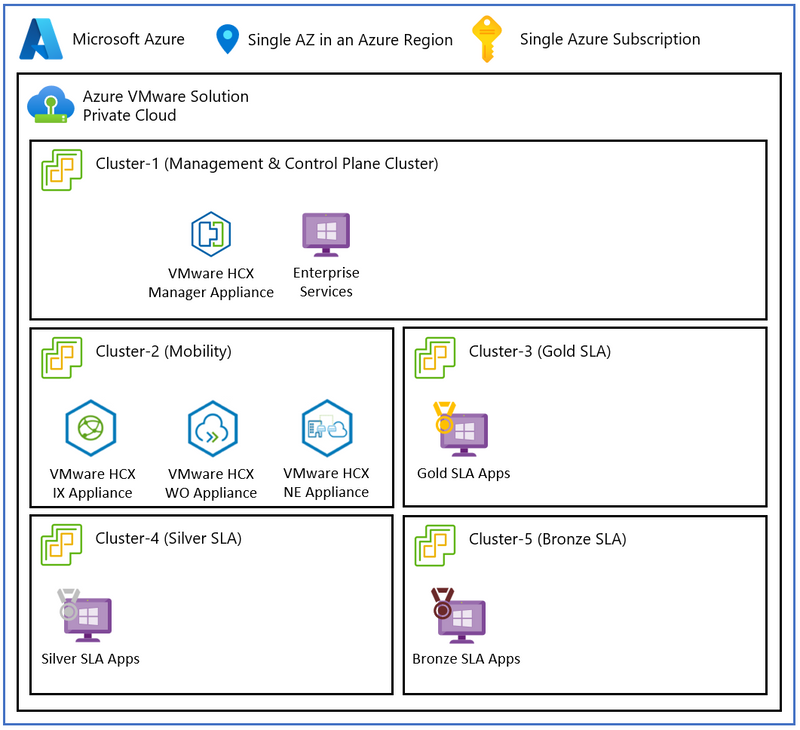

Design Consideration 3 – Deployment topology: Select the Azure VMware Solution topology that best matches the performance requirements of your SLAs. For very large deployments, it may make sense to have separate private clouds dedicated to each SLA for optimum performance.

The Azure VMware Solution supports a maximum of 12 clusters per private cloud. Each cluster supports a minimum of 3 hosts and a maximum of 16 hosts per cluster. Each private cloud supports a maximum of 96 hosts.
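A proposed topology can be checked against these limits before any deployment work starts. The following Python sketch assumes a hypothetical representation of a private cloud as a list of host counts, one per cluster; it encodes only the limits stated in this section.

```python
# Limits as stated in this section.
MAX_CLUSTERS = 12
MIN_HOSTS_PER_CLUSTER = 3
MAX_HOSTS_PER_CLUSTER = 16
MAX_HOSTS_PER_PRIVATE_CLOUD = 96


def validate_private_cloud(cluster_sizes):
    """Return a list of limit violations for per-cluster host counts."""
    problems = []
    if len(cluster_sizes) > MAX_CLUSTERS:
        problems.append(
            f"{len(cluster_sizes)} clusters exceeds the maximum of {MAX_CLUSTERS}"
        )
    for index, hosts in enumerate(cluster_sizes, start=1):
        if not MIN_HOSTS_PER_CLUSTER <= hosts <= MAX_HOSTS_PER_CLUSTER:
            problems.append(
                f"Cluster-{index} has {hosts} hosts; allowed range is "
                f"{MIN_HOSTS_PER_CLUSTER}-{MAX_HOSTS_PER_CLUSTER}"
            )
    total = sum(cluster_sizes)
    if total > MAX_HOSTS_PER_PRIVATE_CLOUD:
        problems.append(
            f"{total} hosts exceeds the maximum of {MAX_HOSTS_PER_PRIVATE_CLOUD}"
        )
    return problems


# Seven full clusters pass the per-cluster checks but exceed 96 hosts in total.
print(validate_private_cloud([16] * 7))
```

Note that 12 clusters of 16 hosts would be 192 hosts, so the 96-host private cloud limit is reached well before the cluster limits are.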

VMware vCenter Server, VMware HCX Manager, VMware SRM and VMware vSphere Replication Manager are individual appliances that run in Cluster-1.

VMware NSX-T Manager is a cluster of 3 unified appliances that have a VM-VM anti-affinity placement policy to spread them across the hosts of the cluster. The VMware NSX-T Data Center Edge cluster is a pair of appliances that also use a VM-VM anti-affinity placement policy.

All northbound customer traffic traverses the NSX-T Data Center Edge cluster. All vSAN storage traffic traverses the VLAN-backed Portgroup of the Management vSphere Distributed Switch, which is part of the management and control plane.

The management and control plane cluster (Cluster-1) can be shared with customer workload VMs or be a dedicated cluster for management and control, including customer enterprise services, such as Active Directory, DNS, & DHCP. Additional resource clusters can be added to support customer demand. This also includes the option of using dedicated clusters for each customer SLA.

Topology 1 – Mixed: Run mixed SLA workloads in each cluster of the Azure VMware Solution private cloud.

Figure 4 – Azure VMware Solution Mixed Workloads Topology

Topology 2 – Dedicated Clusters: Use separate clusters for each SLA in the Azure VMware Solution private cloud.

Figure 5 – Azure VMware Solution Dedicated Clusters Topology

Topology 3 – Dedicated Private Clouds: Use dedicated Azure VMware Solution private clouds for each SLA for optimum performance.

Figure 6 – Azure VMware Solution Dedicated Private Cloud Instances Topology

Design Consideration 4 – Network Connectivity: Azure VMware Solution private clouds can be connected using IPSec VPN and Azure ExpressRoute circuits, including a variety of Azure Virtual Networking topologies such as Hub-Spoke and Azure Virtual WAN with Azure Firewall and third-party Network Virtual Appliances (NVAs).

From a performance perspective, Azure ExpressRoute and AVS Interconnect should be used instead of Azure Virtual WAN and IPSec VPN. The following design considerations (5-9) elaborate on network performance design.

For more information, refer to the Azure VMware Solution networking and interconnectivity concepts. The Azure VMware Solution Cloud Adoption Framework also has example network scenarios that can be considered.

Design Consideration 5 – Azure VNet Connectivity: Use FastPath for connecting an Azure VMware Solution private cloud to an Azure VNet for highest throughput and lowest latency.

For maximum performance between Azure VMware Solution and Azure native services, a VNet Gateway with the Ultra Performance or ErGw3AZ SKU is needed to enable the FastPath feature when creating the connection. FastPath is designed to improve the data path performance to your VNet. When enabled, FastPath sends network traffic directly to virtual machines in the VNet, bypassing the gateway, resulting in 10 Gbps or higher throughput.

For more information, refer to Azure ExpressRoute FastPath.

Figure 7 – Azure VMware Solution connected to VNet Gateway with FastPath

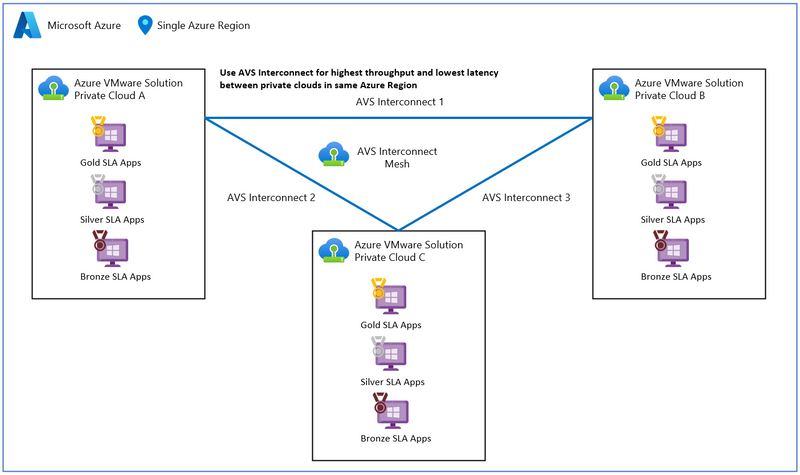

Design Consideration 6 – Intra-region Connectivity: Use AVS Interconnect for connecting Azure VMware Solution private clouds together in the same Azure Region for the highest throughput and lowest latency.

You can select Azure VMware Solution private clouds from another Azure Subscription or Azure Resource Group. The only constraint is that they must be in the same Azure Region. A maximum of 10 private clouds can be connected per private cloud instance.

For more information, refer to AVS Interconnect.
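The two AVS Interconnect constraints named above (same Azure Region, at most 10 connected private clouds) are easy to check when planning a multi-private-cloud design. This is a minimal planning sketch in Python; the dictionary keys and function name are hypothetical and it does not call any Azure API.

```python
MAX_INTERCONNECTS = 10  # per private cloud, as stated above


def can_interconnect(source, target, existing_links):
    """Check the region and connection-count constraints.

    source and target are dicts with hypothetical 'name' and 'region' keys.
    existing_links is the number of private clouds already linked to source.
    Returns a (bool, reason) tuple.
    """
    if source["region"] != target["region"]:
        return False, "private clouds must be in the same Azure Region"
    if existing_links >= MAX_INTERCONNECTS:
        return False, "source already has the maximum of 10 connections"
    return True, "ok"


gold = {"name": "avs-gold", "region": "westeurope"}
silver = {"name": "avs-silver", "region": "westeurope"}
print(can_interconnect(gold, silver, existing_links=2))
```

Subscription and Resource Group are deliberately not part of the check, since the section states they may differ.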

Figure 8 – Azure VMware Solution with AVS Interconnect

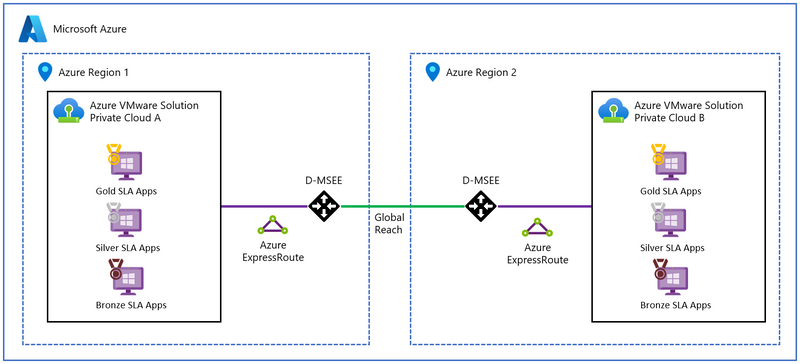

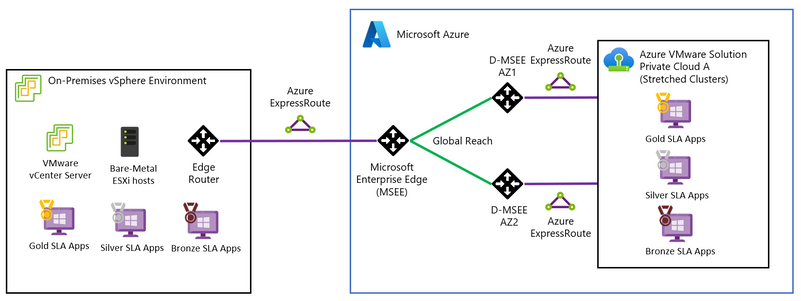

Design Consideration 7 – Inter-region/On-Premises Connectivity: Use ExpressRoute Global Reach for connecting Azure VMware Solution private clouds together in different Azure Regions or to on-premises vSphere environments for the highest throughput and lowest latency.

For more information, refer to Azure VMware Solution network design considerations.

Figure 9 – Azure VMware Solution with ExpressRoute Global Reach

Figure 10 – Azure VMware Solution with ExpressRoute Global Reach to On-premises vSphere infrastructure

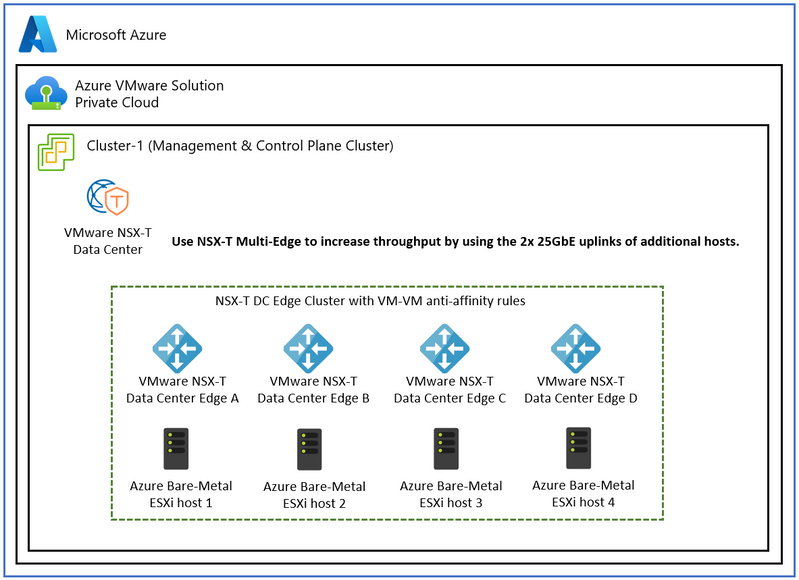

Design Consideration 8 – Host Connectivity: Use NSX-T Data Center Multi-Edge to increase the throughput of north/south traffic from the Azure VMware Solution private cloud.

This configuration is available for a management cluster (Cluster-1) with four or more nodes. The additional Edge VMs are added to the Edge Cluster and increase the amount of traffic that can be forwarded through the 25 Gb/s uplinks across the ESXi hosts. This feature needs to be configured by opening a Service Request (SR).

For more information, refer to Azure VMware Solution network design considerations.

Figure 11 – Azure VMware Solution Multi-Edge with NSX-T Data Center

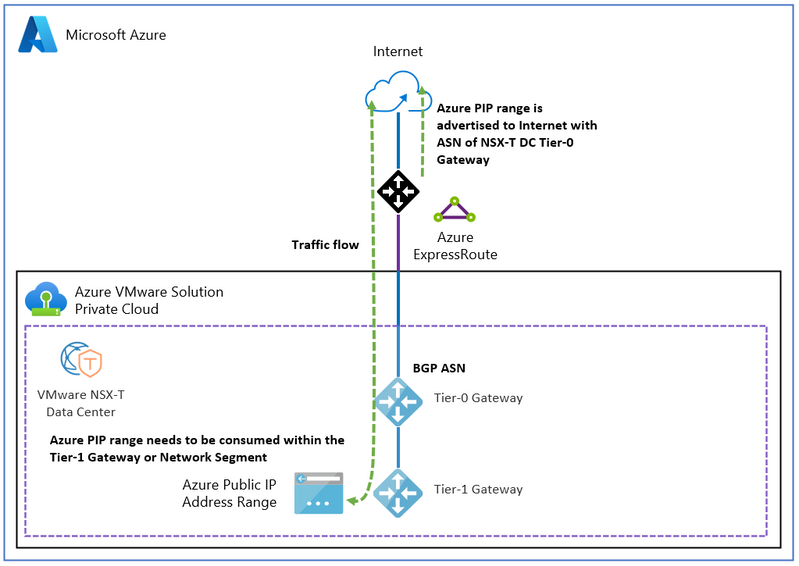

Design Consideration 9 – Internet Connectivity: Use Public IP on the NSX-T Data Center Edge if high speed internet access direct to the Azure VMware Solution private cloud is needed.

This allows you to bring an Azure Public IPv4 address range directly to the NSX-T Data Center Edge for consumption. You should configure this public range on a network virtual appliance (NVA) to secure the private cloud.

For more information, refer to Internet Connectivity Design Considerations.

Figure 12 – Azure VMware Solution Public IP Address with NSX-T Data Center

Design Consideration 10 – VM Optimization: Use VM Hardware tuning and Resource Pools to provide peak performance for workloads.

VMware vSphere Virtual Machine Hardware should be optimized for the required performance:

- vNUMA optimization for CPU and RAM

- Shares

- Reservations & Limits

- Latency Sensitive setting

- Paravirtual network & storage adapters

- Multiple SCSI controllers

- Spread vDisks across SCSI controllers

Resource Pools can be used to apply CPU and RAM QoS policies for each SLA running in a mixed cluster.

For more information, refer to Performance Best Practices.

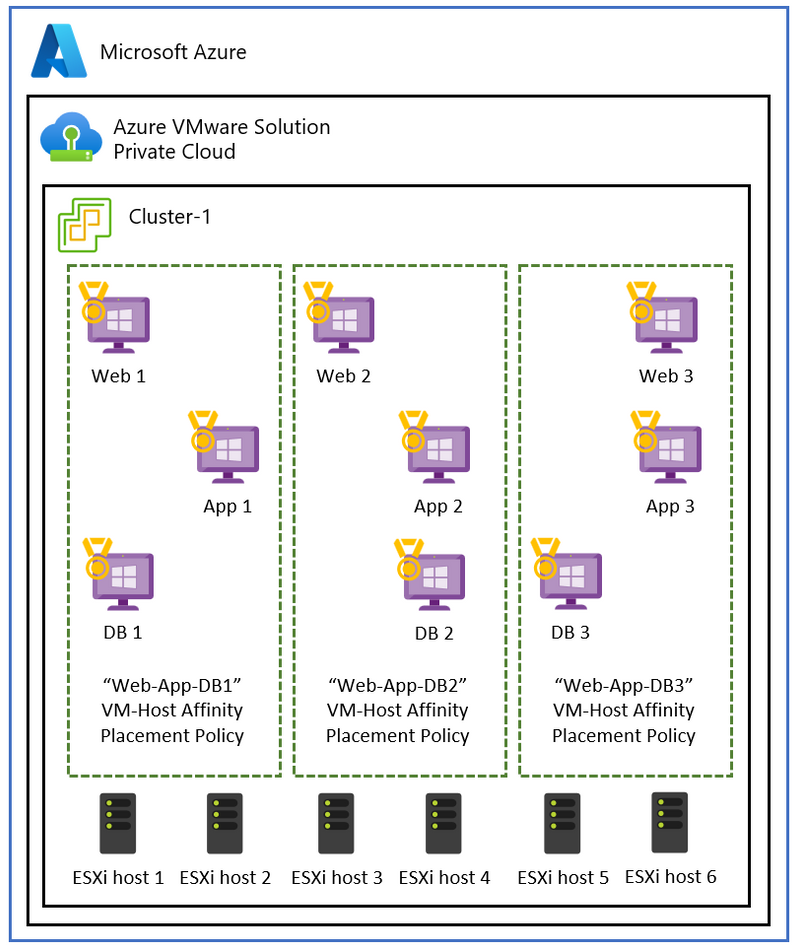

Design Consideration 11 – Placement Policies: Placement policies can be used to increase the performance of a service by separating the VMs in an application availability layer across ESXi hosts. This allows you to pin workloads to a particular host for exclusive access to CPU and RAM resources. Placement policies support VM-VM and VM-Host affinity and anti-affinity rules. The vSphere Distributed Resource Scheduler (DRS) is responsible for migrating VMs to enforce the placement policies.

For more information, refer to Placement Policies.
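To illustrate what a VM-VM anti-affinity rule is meant to guarantee, the Python sketch below checks a VM-to-host mapping for hosts that run more than one member of the same group. The mapping format and names are hypothetical; in a real private cloud DRS enforces the policy and this kind of check would only be a reporting aid.

```python
from collections import Counter


def anti_affinity_violations(placement, group):
    """Return hosts that run more than one VM from an anti-affinity group.

    placement maps VM name to ESXi host name (hypothetical format).
    group is the list of VM names that should be kept on separate hosts.
    """
    counts = Counter(placement[vm] for vm in group)
    return sorted(host for host, count in counts.items() if count > 1)


placement = {
    "web1": "esx1",
    "web2": "esx2",
    "web3": "esx2",  # shares a host with web2
    "db1": "esx3",
}

print(anti_affinity_violations(placement, ["web1", "web2", "web3"]))
```

An empty result means every VM in the group sits on its own host, which is the state the policy is intended to maintain.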

Figure 13 – Azure VMware Solution Placement Policies

Design Consideration 12 – External Datastores: Use a third-party storage solution to offload lower SLA workloads from VMware vSAN into a separate tier of storage.

Azure VMware Solution supports attaching Azure NetApp Files as Network File System (NFS) datastores for offloading virtual machine storage from VMware vSAN. This allows the VMware vSAN datastore to be dedicated to Gold SLA virtual machines.

For more information, refer to Azure NetApp Files datastores.

Figure 14 – Azure VMware Solution External Datastores with Azure NetApp Files

Design Consideration 13 – Storage Policies: Table 3 lists the pre-defined VM Storage Policies available for use with VMware vSAN. The appropriate redundant array of independent disks (RAID) and failures to tolerate (FTT) settings per policy need to be considered to match the customer workload SLAs. Each policy has a trade-off between availability, performance, capacity, and cost that needs to be considered.

The highest performing VM Storage Policy for enterprise workloads is the RAID-1 policy.

To comply with the Azure VMware Solution SLA, you are responsible for using an FTT=2 storage policy when the cluster has 6 or more nodes in a standard cluster. You must also retain a minimum slack space of 25% for backend vSAN operations.

For more information, refer to Configure Storage Policy.

| Deployment Type | Policy Name | RAID | Failures to Tolerate (FTT) | Site |
| --- | --- | --- | --- | --- |
| Standard | RAID-1 FTT-1 | 1 | 1 | N/A |
| Standard | RAID-1 FTT-2 | 1 | 2 | N/A |
| Standard | RAID-1 FTT-3 | 1 | 3 | N/A |
| Standard | RAID-5 FTT-1 | 5 | 1 | N/A |
| Standard | RAID-6 FTT-2 | 6 | 2 | N/A |
| Standard | VMware Horizon | 1 | 1 | N/A |

Table 3 – VMware vSAN Storage Policies
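The capacity side of the storage policy trade-off can be estimated with simple arithmetic. The Python sketch below combines the raw capacity figures from Table 2, the 25% slack space requirement, and the FTT values from Table 3. The raw-to-usable multipliers (2x, 3x, 4x for RAID-1 and roughly 1.33x and 1.5x for RAID-5 and RAID-6) are commonly cited vSAN figures and are an assumption here, not taken from this post. Deduplication, compression, metadata overhead, and the minimum host count each policy requires are not modelled.

```python
# Raw vSAN capacity tier per host in TB, copied from Table 2.
RAW_TB_PER_HOST = {"AV36": 15.20, "AV36P": 19.20, "AV52": 38.40}

# Policy name -> (FTT, raw-to-usable multiplier).
# FTT values come from Table 3; multipliers are assumed vSAN rules of thumb.
POLICIES = {
    "RAID-1 FTT-1": (1, 2.0),
    "RAID-1 FTT-2": (2, 3.0),
    "RAID-1 FTT-3": (3, 4.0),
    "RAID-5 FTT-1": (1, 4 / 3),
    "RAID-6 FTT-2": (2, 1.5),
}

SLACK_FRACTION = 0.25  # minimum slack space for backend vSAN operations


def usable_tb(sku, hosts, policy):
    """Approximate usable capacity after slack space and policy overhead."""
    raw = RAW_TB_PER_HOST[sku] * hosts
    _, multiplier = POLICIES[policy]
    return raw * (1 - SLACK_FRACTION) / multiplier


def meets_sla_ftt(hosts, policy):
    """Check the FTT=2 requirement for clusters with 6 or more nodes.

    Assumption: a policy with FTT higher than 2 also satisfies it.
    """
    ftt, _ = POLICIES[policy]
    return hosts < 6 or ftt >= 2


for name in POLICIES:
    print(f"{name}: {usable_tb('AV36', 6, name):.1f} TB, "
          f"SLA ok: {meets_sla_ftt(6, name)}")
```

On a six-node AV36 cluster, this estimate shows RAID-6 FTT-2 yielding about twice the usable capacity of RAID-1 FTT-2, which is the capacity cost of choosing the higher-performing RAID-1 policy.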

Design Consideration 14 – Mobility: VMware HCX can be tweaked to improve throughput and performance.

VMware HCX Manager can be upsized through Run Command. The number of network extension (NE) instances can be increased to allow Portgroups to be distributed over instances to increase L2E performance. You can also establish a dedicated Mobility Cluster, accompanied by a dedicated Service Mesh for each distinct workload cluster, thereby increasing mobility performance.

Application Path Resiliency and TCP Flow Conditioning are also options that can be enabled to improve mobility performance. TCP Flow Conditioning dynamically optimizes the segment size for traffic traversing the Network Extension path. Application Path Resiliency creates multiple Foo-Over-UDP (FOU) tunnels between the source and destination Uplink IP pair for improved performance, resiliency, and path diversity.

For more information, refer to VMware HCX Best Practices.

Figure 15 – VMware HCX with Dedicated Mobility Cluster

Design Consideration 15 – Anti-Patterns: Try to avoid using these anti-patterns in your performance design.

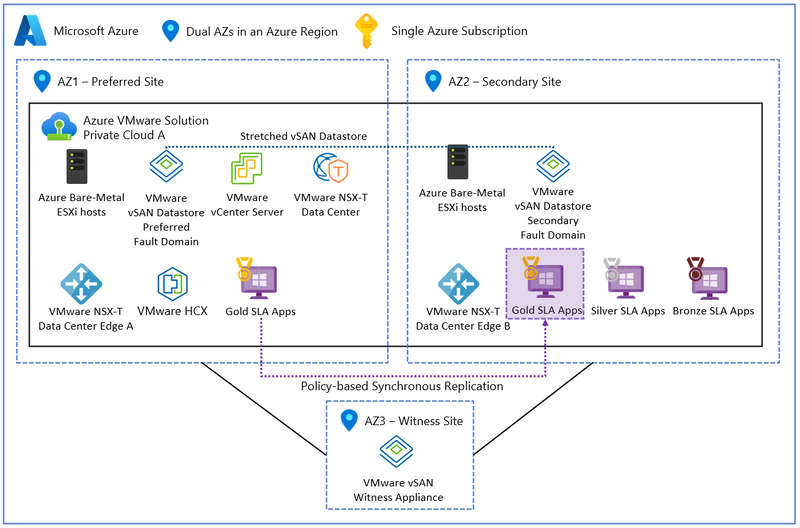

Anti-Pattern 1 – Stretched Clusters: Azure VMware Solution Stretched Clusters should primarily be used to meet a Multi-AZ or Recovery Point Objective of zero requirement. If stretched clusters are used, there will be a write throughput and write latency impact for all synchronous writes using the site mirroring storage policy.

For more information, refer to Stretched Clusters.

Figure 16 – Azure VMware Solution Private Cloud with Stretched Clusters

In the following section, I will describe the next steps that need to be made to progress this high-level design estimate towards a validated detailed design.

Next Steps

The Azure VMware Solution sizing estimate should be assessed using Azure Migrate. With large enterprise solutions for strategic and major customers, an Azure VMware Solution Solutions Architect from Azure, VMware, or a trusted VMware Partner should be engaged to ensure the solution is correctly sized to deliver business value with the minimum of risk. This should also include an application dependency assessment to understand the mapping between application groups and identify areas of data gravity, application network traffic flows and network latency dependencies.

Summary

In this post, we took a closer look at the typical performance requirements of a customer workload, the architectural building blocks, and the performance design considerations for the Azure VMware Solution. We also discussed the next steps to continue an Azure VMware Solution design.

If you are interested in the Azure VMware Solution, please use these resources to learn more about the service:

- Homepage: Azure VMware Solution

- Documentation: Azure VMware Solution

- SLA: SLA for Azure VMware Solution

- Azure Regions: Azure Products by Region

- Service Limits: Azure VMware Solution subscription limits and quotas

- SKU types: Introduction

- Storage policies: Configure storage policy

- VMware HCX: Configuration & Best Practices

- GitHub repository: Azure/azure-vmware-solution

- Well-Architected Framework: Azure VMware Solution workloads

- Cloud Adoption Framework: Introduction to the Azure VMware Solution adoption scenario

- Network connectivity scenarios: Enterprise-scale network topology and connectivity for Azure VMware Solution

- Enterprise Scale Landing Zone: Enterprise-scale for Microsoft Azure VMware Solution

- Enterprise Scale GitHub repository: Azure/Enterprise-Scale-for-AVS

- Azure CLI: Azure Command-Line Interface (CLI) Overview

- PowerShell module: Az.VMware Module

- Azure Resource Manager: Microsoft.AVS/privateClouds

- REST API: Azure VMware Solution REST API

- Terraform provider: azurerm_vmware_private_cloud Terraform Registry

- Learning Resources: Azure VMware Solution (AVS) (microsoft.github.io)

Author Bio

René van den Bedem is a Principal Technical Program Manager in the Azure VMware Solution product group at Microsoft. His background is in enterprise architecture with extensive experience across all facets of the enterprise, public cloud & service provider spaces, including digital transformation and the business, enterprise, and technology architecture stacks. In addition to being the first quadruple VMware Certified Design Expert (VCDX), he is also a Dell Technologies Certified Master Enterprise Architect, a Nutanix Platform Expert (NPX) and an NPX Panelist.
