Evaluating RAG Applications with AzureML Model Evaluation

Why Do We Need RAG?

RAG stands for Retrieval-Augmented Generation, which is a technique for improving the accuracy and reliability of generative AI models with facts retrieved from external sources. RAG is useful for tasks that require generating natural language responses based on relevant information from a knowledge base, such as question answering, summarization, or dialogue. RAG combines the strengths of retrieval-based and generative models, allowing AI systems to access and incorporate external knowledge into their output without the need to retrain the model.
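The retrieve-then-augment idea described above can be sketched in a few lines. This is a toy illustration only: the knowledge base, the word-overlap retriever (a stand-in for a real vector search), and the prompt wording are all assumptions made for the example, and no LLM is actually called.

```python
import re

# Toy knowledge base; the documents are illustrative placeholders.
KNOWLEDGE_BASE = [
    "The warranty covers hardware defects for two years.",
    "Support is available by email on weekdays.",
    "Refunds are processed within ten business days.",
]


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by word overlap with the question (stand-in for vector search)."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("How long does the warranty cover defects?", KNOWLEDGE_BASE)
print(prompt)
```

The model itself is never retrained here: only the prompt changes as the knowledge base changes.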

In the rapidly changing domain of large language models (LLMs), RAG provides an easy way to customize the LLMs based on your needs and the increasing availability of knowledge. RAG is an innovative and cost-effective approach to leverage the power of large language models and augment them with domain-specific or organizational knowledge. RAG can improve the quality, diversity, and credibility of the generated text and provide traceability for the information sources.

Why Do We Need RAG Evaluation?

A RAG pipeline is a system that uses external data to augment the input of a large language model (LLM) for tasks such as question answering, summarization, or dialogue. RAG evaluation is the process of measuring and improving the performance of this RAG pipeline. Some of the reasons why RAG evaluation is needed are:

- To assess the quality, diversity, and credibility of the generated text, as well as the traceability of the sources of information.

- To identify the strengths and weaknesses of the retrieval and generation components of the RAG pipeline and optimize them accordingly.

- To compare different RAG techniques, models, and parameters, and select the best ones for a given use case.

- To ensure that the RAG pipeline meets the requirements and expectations of the end users, and does not produce harmful or misleading outputs.

Why AzureML Model Evaluation?

AzureML Model Evaluation serves as a central hub that provides a unified, streamlined evaluation experience across a wide range of curated LLMs, tasks, and data modalities. The platform offers highly contextual, task-specific metrics, complemented by intuitive metric and chart visualizations, that help users assess the quality of their models and predictions.

AzureML Model Evaluation offers both an intuitive user interface (UI) and a powerful software development kit (SDK), the azureml-metrics SDK.

In this blog, we focus on the SDK flow using the azureml-metrics package.

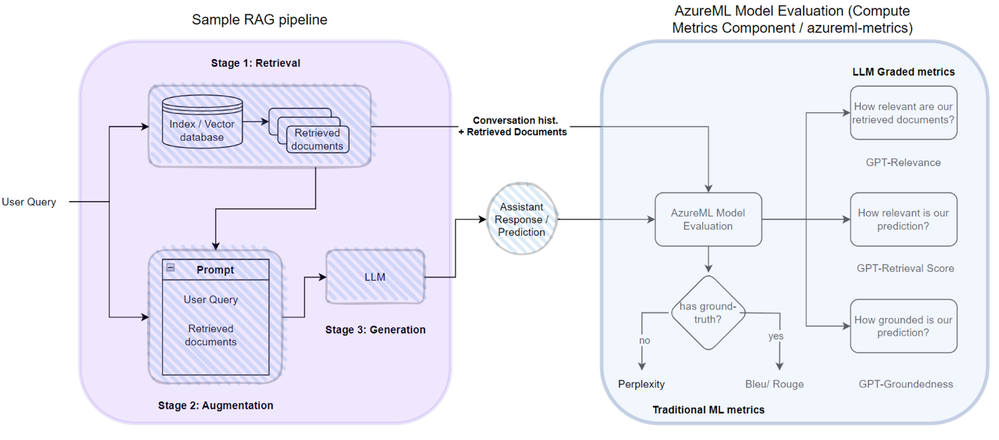

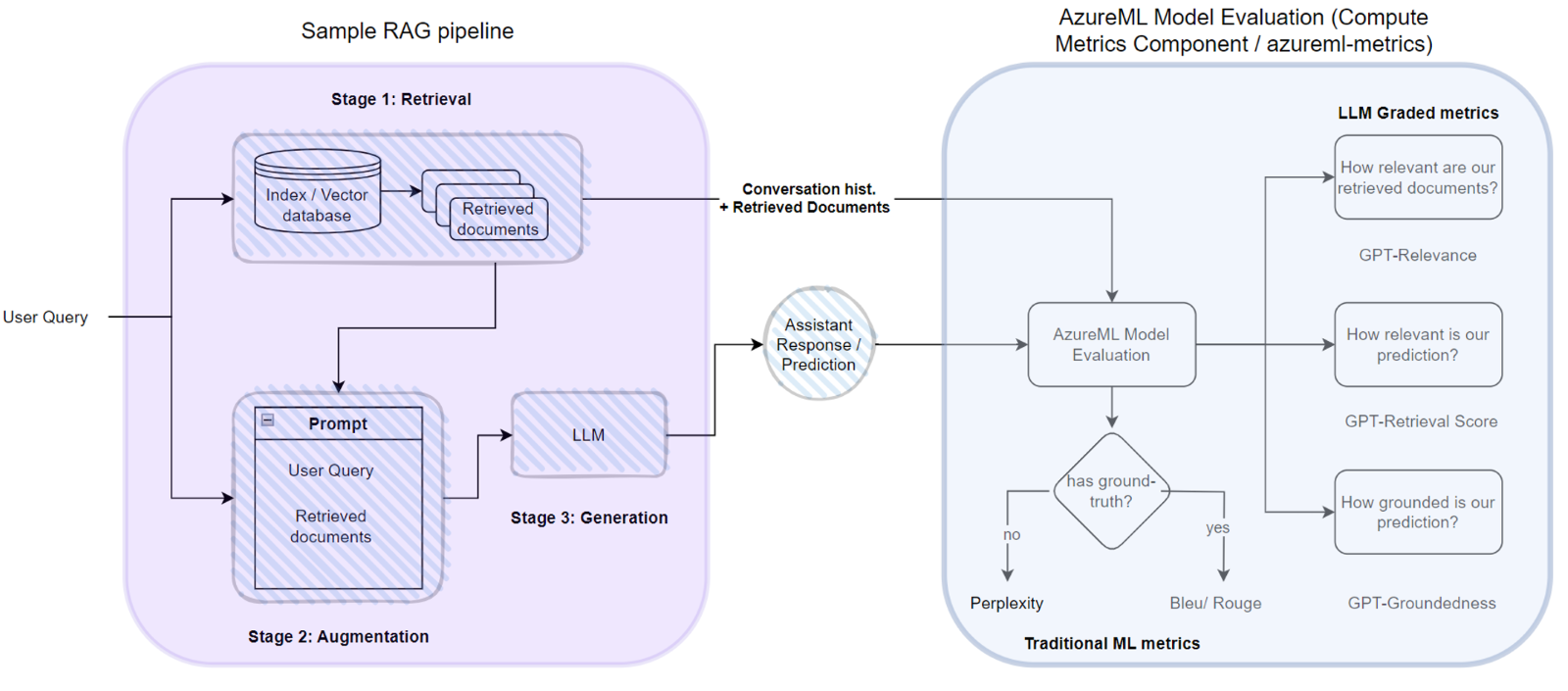

Architecture and Flow of AzureML Model Evaluation for a RAG scenario

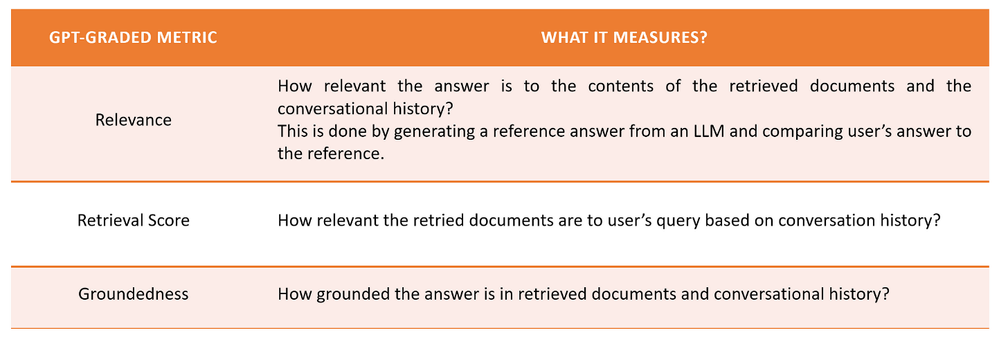

Let's take a look at the GPT-graded metrics and what they measure.

GPT Graded metrics for RAG evaluation

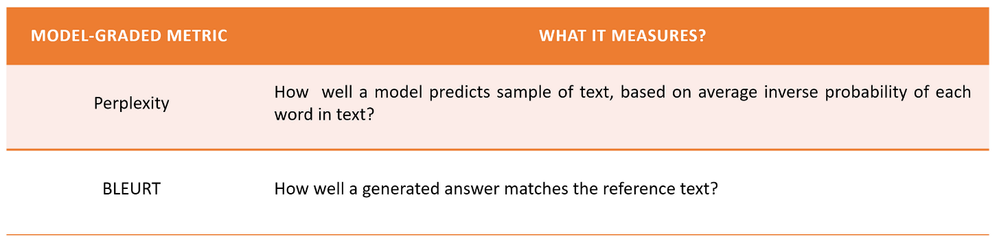
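To make the idea of a GPT-graded metric concrete, here is a minimal sketch of the general pattern: fill a grading prompt, send it to a grader model, and parse a numeric rating from the reply. The prompt text, the 1 to 5 scale, and the function names are hypothetical and are not the prompts or internals of azureml-metrics. A stub stands in for the grader so the sketch runs offline.

```python
import re
from typing import Callable, Optional

# Hypothetical grading prompt; the package ships its own prompts.
GRADING_TEMPLATE = (
    "Rate the coherence of the answer on a scale of 1 to 5.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Reply with a single integer."
)


def parse_score(reply: str, low: int = 1, high: int = 5) -> Optional[int]:
    """Return the first integer in the grader's reply, or None if missing or out of range."""
    match = re.search(r"\d+", reply)
    if match is None:
        return None
    score = int(match.group())
    return score if low <= score <= high else None


def grade(question: str, answer: str, grader: Callable[[str], str]) -> Optional[int]:
    """Send the filled-in prompt to a grader model and parse its rating."""
    prompt = GRADING_TEMPLATE.format(question=question, answer=answer)
    return parse_score(grader(prompt))


def stub_grader(prompt: str) -> str:
    """Offline stand-in; a real grader would call an LLM endpoint."""
    return "4"


rating = grade("What is RAG?", "RAG adds retrieved facts to the prompt.", stub_grader)
print(rating)
```

Returning None for unparseable or out-of-range replies keeps malformed grader output from silently skewing an average.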

In addition to these GPT-graded metrics, the azureml-metrics framework offers traditional supervised ML metrics such as BLEU and ROUGE, as well as model-graded unsupervised metrics such as perplexity and BLEURT, which measure the quality of the generated text against a reference text or a probabilistic model.
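As a rough illustration of what a reference-based metric measures, the sketch below computes unigram overlap between a prediction and a reference, in the spirit of ROUGE-1. It is a simplified stand-in written for this post, not the implementation used by azureml-metrics. Real ROUGE and BLEU implementations add tokenization rules, higher-order n-grams, and other refinements.

```python
from collections import Counter


def unigram_overlap(prediction: str, reference: str) -> dict:
    """Compute clipped unigram precision, recall, and F1 between two strings."""
    pred_counts = Counter(prediction.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word (clipped matches).
    overlap = sum((pred_counts & ref_counts).values())
    precision = overlap / max(sum(pred_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = unigram_overlap("the cat sat on the mat", "the cat is on the mat")
print(scores)
```

Unlike GPT-graded metrics, this kind of score needs a reference answer and rewards surface overlap rather than meaning.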

Model based metrics

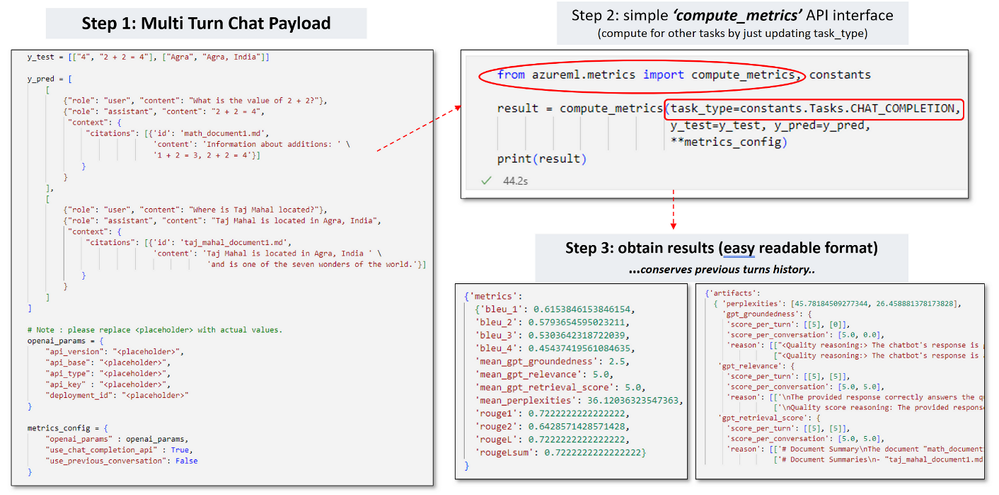

Here's a quick overview of how the azureml-metrics SDK can be used to evaluate a multi-turn RAG scenario, offering a glimpse into its capabilities.

RAG Evaluation (azureml-metrics) for a Multi-turn chat-completion task

Let's take a step-by-step look at how to use azureml-metrics for a RAG evaluation task (task-based evaluation).

Step 1: Install the azureml-metrics package, for example with pip (pip install azureml-metrics).

Step 2: Import the required functions from the azureml-metrics package, such as compute_metrics, list_metrics, and list_prompts.
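A minimal sketch of the import step follows. It assumes the package has been installed (for example with pip) and that the functions are exposed under the azureml.metrics module. The availability flag is a hypothetical helper added so the snippet also runs where the package is missing.

```python
# Assumption: the package is installed (e.g. `pip install azureml-metrics`)
# and exposes its functions under the `azureml.metrics` module.
try:
    from azureml.metrics import compute_metrics, list_metrics, list_prompts
    AZUREML_METRICS_AVAILABLE = True
except ImportError:
    # Lets the sketch run in environments without the package.
    AZUREML_METRICS_AVAILABLE = False

print("azureml-metrics available:", AZUREML_METRICS_AVAILABLE)
```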

The azureml-metrics package offers a 'task-based' classification of metrics, highlighting its ability to compute metrics specific to given tasks.

The SDK provides predefined mappings from tasks to the metrics relevant for evaluating them. This is invaluable for citizen users who may be unsure which quality metrics are appropriate for their task.

The compute_metrics function is the main scoring function that computes the scores for the given data and task.

To see the list of metrics associated with a task, use the list_metrics function and pass in the task type. The RAG_EVALUATION task type covers both information retrieval and content generation for context-aware multi-turn conversations.
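The call might look like the sketch below. The exact signature is an assumption: it supposes that list_metrics accepts a task_type keyword and that the task constant lives at constants.Tasks.RAG_EVALUATION. The wrapper function is hypothetical and returns None when the package is not installed.

```python
def rag_metric_names():
    """Return the metrics mapped to the RAG evaluation task, or None if the package is missing."""
    try:
        from azureml.metrics import constants, list_metrics
    except ImportError:
        return None
    # Assumed call shape: a task_type keyword taking a constant from constants.Tasks.
    return list_metrics(task_type=constants.Tasks.RAG_EVALUATION)


rag_metrics = rag_metric_names()
print(rag_metrics)
```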

Output of the list_metrics function for the RAG Evaluation task

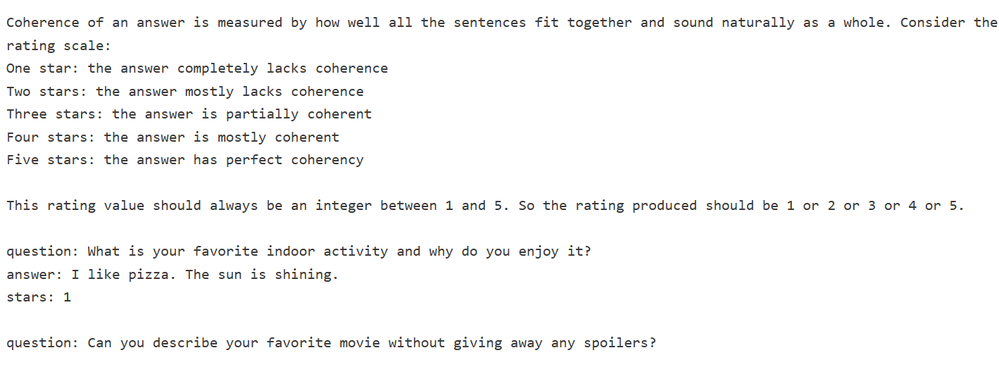

To view the prompts for these GPT* metrics, you can use the list_prompts function.

Output of the list_prompts function for the Question Answering task

Step 3: Preprocess the data for the RAG task. The data should be in conversation form: a list containing all multi-turn conversations.

For example, you can create a list of processed predictions, with one entry per conversation.
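One way to picture this structure is a list of conversations, each holding an ordered list of turns. The field names role and content below follow the common chat-completion convention and are an assumption, as is the validate helper. The exact schema azureml-metrics expects (including how retrieved context is attached to a turn) should be checked against the package documentation.

```python
from typing import Dict, List

Turn = Dict[str, str]
Conversation = List[Turn]

# Illustrative data; field names are assumed, not taken from the package.
processed_predictions: List[Conversation] = [
    [
        {"role": "user", "content": "What does RAG stand for?"},
        {"role": "assistant", "content": "Retrieval-Augmented Generation."},
        {"role": "user", "content": "Why is it useful?"},
        {"role": "assistant", "content": "It grounds answers in retrieved facts."},
    ],
    [
        {"role": "user", "content": "Does RAG require retraining the model?"},
        {"role": "assistant", "content": "No, knowledge is supplied at query time."},
    ],
]


def validate(conversations: List[Conversation]) -> int:
    """Check every turn has a known role and non-empty content; return the conversation count."""
    for index, conversation in enumerate(conversations):
        for turn in conversation:
            if turn.get("role") not in {"user", "assistant"}:
                raise ValueError(f"conversation {index}: unknown role {turn.get('role')!r}")
            if not turn.get("content"):
                raise ValueError(f"conversation {index}: empty content")
    return len(conversations)


print(validate(processed_predictions))
```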

Step 4: Compute the RAG metrics using the out-of-the-box, user-facing compute_metrics function.

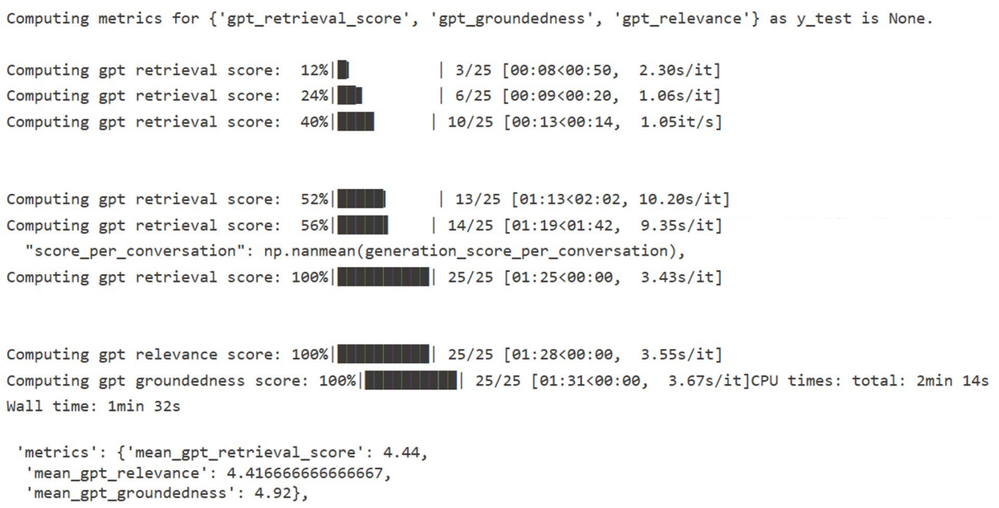
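In text form, the call could look roughly like the sketch below. The keyword names (task_type, y_pred, openai_params), the constants.Tasks.RAG_EVALUATION constant, and the keys of the settings dictionary are assumptions about the package's interface, and the wrapper function is hypothetical. The placeholder values must be replaced with real grader-model settings before the function is called.

```python
def evaluate_rag(conversations, openai_params):
    """Score multi-turn conversations with GPT-graded RAG metrics."""
    # Imported lazily so this module loads even where the package is not installed.
    from azureml.metrics import compute_metrics, constants

    # Assumed keyword names: task_type, y_pred, openai_params.
    return compute_metrics(
        task_type=constants.Tasks.RAG_EVALUATION,
        y_pred=conversations,
        openai_params=openai_params,
    )


# Placeholder grader settings; the keys and values are hypothetical.
example_openai_params = {
    "api_type": "azure",
    "api_base": "https://<your-resource>.openai.azure.com/",
    "api_key": "<your-api-key>",
    "deployment_id": "<your-deployment>",
}
```

The function is only defined here, not invoked, because a real run needs credentials for the grader model.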

The following output showcases the results generated by the compute_metrics function.

Aggregated RAG Metrics

The azureml-metrics SDK also lets users view the reasoning behind the score assigned to each turn.
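The relationship between per-turn scores and aggregated metrics can be sketched as follows. The metric keys, the score values, and the averaging scheme (mean per conversation, then mean across conversations) are assumptions for illustration. The package may aggregate differently.

```python
from statistics import mean

# Hypothetical per-turn scores for two conversations (placeholder values).
per_turn_scores = {
    "gpt_coherence": [[5, 4, 3], [4, 4]],
    "gpt_fluency": [[4, 4, 5], [3, 5]],
}


def aggregate(scores_by_metric):
    """Average turn scores within each conversation, then across conversations."""
    summary = {}
    for metric, conversations in scores_by_metric.items():
        per_conversation = [mean(turns) for turns in conversations]
        summary[metric] = {
            "per_conversation": per_conversation,
            "overall": mean(per_conversation),
        }
    return summary


summary = aggregate(per_turn_scores)
for metric, values in summary.items():
    print(metric, round(values["overall"], 3))
```

Averaging per conversation first gives every conversation equal weight regardless of its length. Averaging over all turns directly would instead favor longer conversations.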

How the azureml-metrics SDK can help users pick the best model for their ML scenario

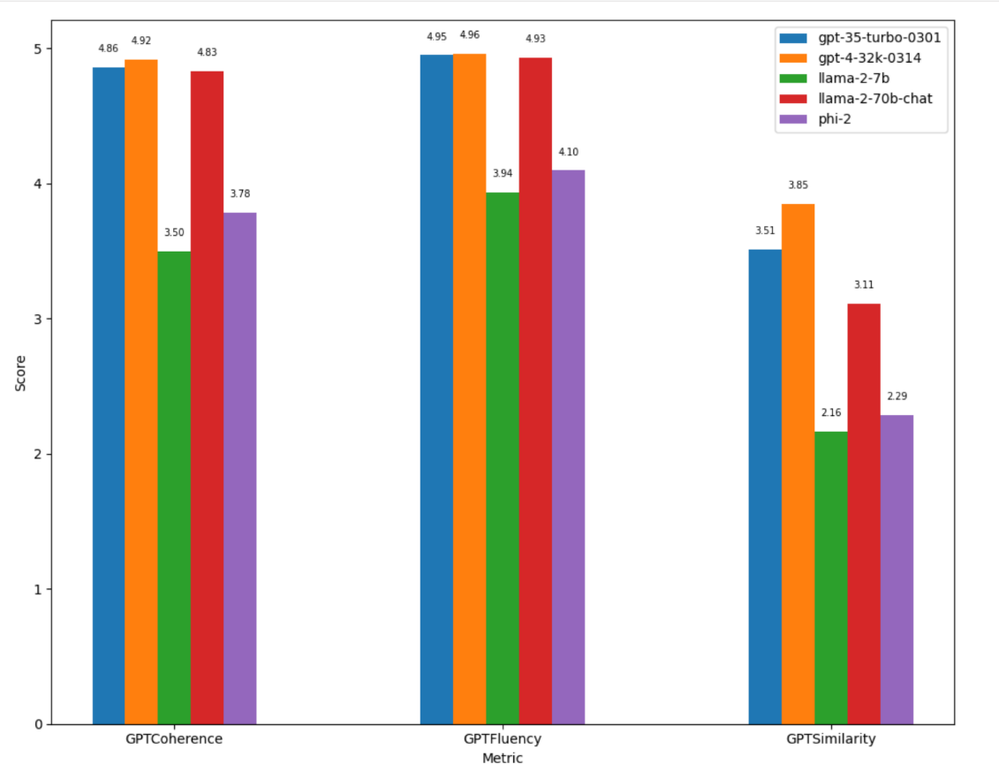

To demonstrate the usefulness of the azureml-metrics package, we ran a short comparative analysis of different models using the GPT-graded metrics from the package. The dataset is a subset of TruthfulQA, a benchmark for evaluating the truthfulness of language models when answering questions, used here in a single-turn RAG chat conversation scenario. The experiment shows how GPT-graded metrics can be used to identify the best model for a given user scenario.

Comparative analysis of model performance using RAG metrics on the TruthfulQA dataset

From this graph, we can observe that the gpt-4 model has the highest GPT Coherence, GPT Fluency, and GPT Similarity scores. However, the best model choice depends on the specific requirements of the user's task. For instance, if you need the most coherent and fluent model, gpt-4 would be the better choice. If you need an open-source model with performance close to gpt-4, llama-70b-chat would be the next best option.

As with the single-turn conversation data above, you can use these metrics to evaluate different models on multi-turn RAG conversations and identify the best model for your use case.
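The selection logic described above, picking the top scorer overall or the top scorer within a constraint such as open-source only, can be sketched like this. The model names, scores, weights, and helper function are placeholders invented for the example. They are not the results of the comparison in this post.

```python
# Placeholder scores for illustration only; not the results from this post.
model_scores = {
    "model_a": {"gpt_coherence": 4.6, "gpt_fluency": 4.7, "gpt_similarity": 4.1},
    "model_b": {"gpt_coherence": 4.3, "gpt_fluency": 4.5, "gpt_similarity": 3.9},
    "model_c": {"gpt_coherence": 3.8, "gpt_fluency": 4.0, "gpt_similarity": 3.2},
}
OPEN_SOURCE_MODELS = {"model_b", "model_c"}  # hypothetical constraint set


def best_model(scores, weights, allowed=None):
    """Return the model with the highest weighted score, optionally restricted to `allowed`."""
    candidates = {
        name: metrics
        for name, metrics in scores.items()
        if allowed is None or name in allowed
    }
    if not candidates:
        raise ValueError("no candidate models match the constraint")

    def weighted_total(name):
        return sum(weights.get(metric, 0.0) * value
                   for metric, value in candidates[name].items())

    return max(candidates, key=weighted_total)


# Weight the metrics that matter most for the use case.
weights = {"gpt_coherence": 0.4, "gpt_fluency": 0.4, "gpt_similarity": 0.2}
print(best_model(model_scores, weights))
print(best_model(model_scores, weights, allowed=OPEN_SOURCE_MODELS))
```

Changing the weights or the allowed set is how task-specific requirements enter the decision.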

References

- azureml-metrics package

- An Overview on RAG Evaluation | Weaviate — Vector Database

- LLM Evaluation | Databricks
