Create an ADF Storage Event Trigger that runs an ADF pipeline in response to Azure Storage events.

The Storage Event Trigger in Azure Data Factory is the building block for an event-driven ETL/ELT architecture. Data Factory's native integration with Azure Event Grid lets you trigger processing pipelines based on specific storage events. Currently, Storage Event Triggers support Blob Created and Blob Deleted events from Azure Data Lake Storage Gen2 and General-purpose version 2 (GPv2) storage accounts.

Event-driven architecture (EDA) is a common data integration pattern that involves production, detection, consumption, and reaction to events. Data integration scenarios often require customers to trigger pipelines based on events happening in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account. Data Factory and Synapse pipelines natively integrate with Azure Event Grid, which lets you trigger pipelines on such events.

This blog demonstrates how to use an ADF Storage Event Trigger to run an ADF pipeline in response to Azure Storage events.

Prerequisites:

- An ADLS Gen2 storage account or a GPv2 Blob Storage account (see Create a storage account - Azure Storage | Microsoft Docs).

- The integration described in this article depends on Azure Event Grid. Make sure that your subscription is registered with the Event Grid resource provider (for more info, see Resource providers and types). You must also be able to perform the Microsoft.EventGrid/eventSubscriptions/* action, which is part of the EventGrid EventSubscription Contributor built-in role.

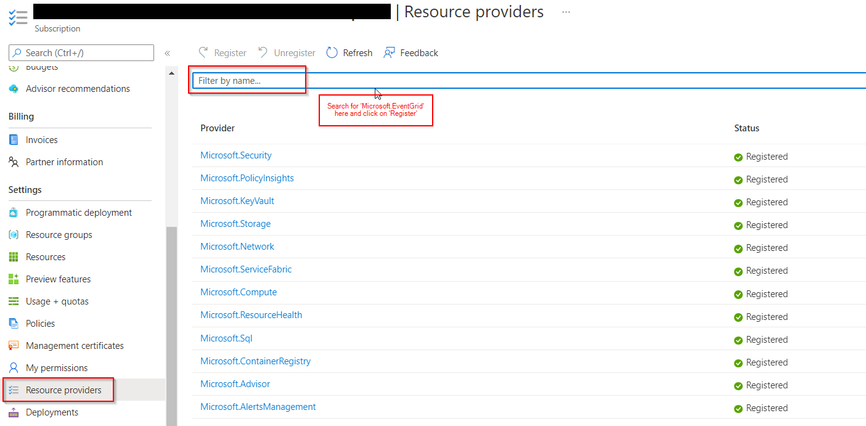

The resource provider 'Microsoft.EventGrid' must be registered in the subscription, as shown in the screenshot:
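If you prefer scripting to the portal, the registration can also be checked and performed with the Azure CLI commands `az provider show` and `az provider register`. The Python sketch below only wraps those two commands; it assumes the Azure CLI is installed and already signed in (`az login`) to the target subscription, and the helper names are hypothetical.

```python
from __future__ import annotations

import shutil
import subprocess

EVENT_GRID_NAMESPACE = "Microsoft.EventGrid"


def show_state_command(namespace: str = EVENT_GRID_NAMESPACE) -> list[str]:
    # Prints only the registrationState value, e.g. "Registered" or "NotRegistered".
    return ["az", "provider", "show", "--namespace", namespace,
            "--query", "registrationState", "--output", "tsv"]


def register_command(namespace: str = EVENT_GRID_NAMESPACE) -> list[str]:
    # Requests registration; Azure completes it asynchronously.
    return ["az", "provider", "register", "--namespace", namespace]


def _run_az(command: list[str]) -> str:
    # Resolve the real executable (az or az.cmd on Windows) from PATH.
    az = shutil.which("az")
    if az is None:
        raise RuntimeError("Azure CLI ('az') was not found on PATH")
    result = subprocess.run([az, *command[1:]], capture_output=True,
                            text=True, check=True)
    return result.stdout.strip()


def ensure_registered(namespace: str = EVENT_GRID_NAMESPACE) -> str:
    """Return the provider state, requesting registration first if needed."""
    state = _run_az(show_state_command(namespace))
    if state != "Registered":
        _run_az(register_command(namespace))
        # Registration is asynchronous, so re-query the current state.
        state = _run_az(show_state_command(namespace))
    return state
```

Calling `ensure_registered()` returns `Registered` once the provider is ready; right after a registration request it may still report an intermediate state, so re-run it after a short wait.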

- If the blob storage account resides behind a private endpoint and blocks public network access, you need to configure network rules that allow communication from blob storage to Azure Event Grid. You can either grant storage access to trusted Azure services, such as Event Grid (see the Storage documentation), or configure private endpoints for Event Grid that map to your VNet address space (see the Event Grid documentation).

- The Storage Event Trigger currently supports only Azure Data Lake Storage Gen2 and General-purpose version 2 storage accounts.

- To create a new or modify an existing Storage Event Trigger, the Azure account used to log into the service and publish the storage event trigger must have appropriate role-based access control (Azure RBAC) permissions on the storage account.

- The service principal for the Azure Data Factory does not need special permissions on either the storage account or Event Grid.

Demo:

Step 1:

Create an ADF resource in the Azure portal. If you are new to ADF, refer to this article on how to create one:

Create an Azure data factory using the Azure Data Factory UI - Azure Data Factory | Microsoft Docs

Step 2:

Once the data factory is created, open Azure Data Factory Studio from the Overview section:

Step 3:

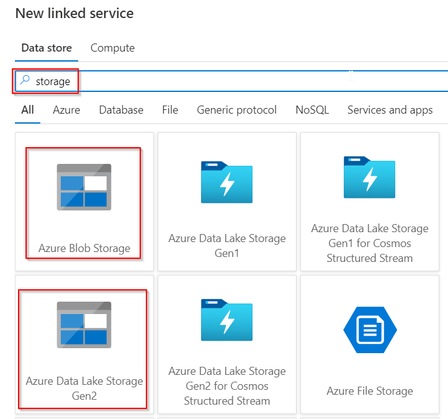

Once ADF Studio opens, create a linked service for the storage account, as shown in the screenshots:

After you click ‘+New’, first select the data store. If you are using a GPv2 Blob Storage account, use ‘Azure Blob Storage’; if you are working with an ADLS Gen2 account, use ‘Azure Data Lake Storage Gen2’. I’ve used ADLS Gen2 in this demo.

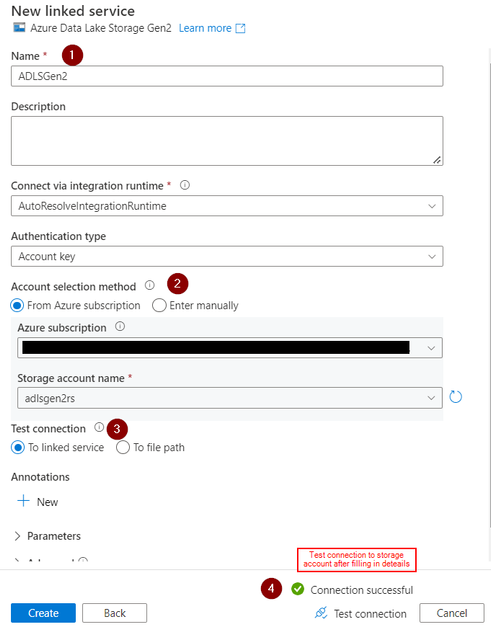

After selecting the data store, fill in the required details:

Once ‘Test Connection’ succeeds, click ‘Create’. This creates the storage account linked service.
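ADF keeps each linked service as a JSON definition. As a rough illustration of what the UI produces, the Python sketch below builds a minimal ADLS Gen2 linked service definition (type `AzureBlobFS`, pointing at the account's `dfs` endpoint). The linked service and account names are hypothetical, and authentication properties (for example an account key) are deliberately left out of this sketch.

```python
import json


def adls_gen2_linked_service(name: str, account_name: str) -> dict:
    """Minimal ADLS Gen2 linked service definition (auth properties omitted)."""
    return {
        "name": name,
        "properties": {
            # ADLS Gen2 linked services use the AzureBlobFS type.
            "type": "AzureBlobFS",
            "typeProperties": {
                # ADLS Gen2 is addressed through the account's dfs endpoint.
                "url": f"https://{account_name}.dfs.core.windows.net",
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    definition = adls_gen2_linked_service("AzureDataLakeStorage1", "mydemostorage")
    print(json.dumps(definition, indent=2))
```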

Step 4:

Creating Input and Output Datasets

In this demo, we will create a simple ADF pipeline that copies the file ‘emp.txt’ from the folder ‘input’ to the folder ‘output’ within the same container. Hence, we need input and output datasets in ADF that map to the blobs in the input and output folders. Let’s create InputDataset and OutputDataset:

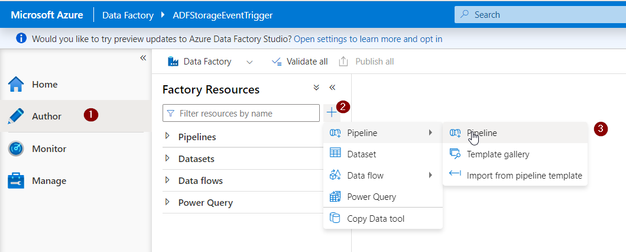

Go to ‘Author’ in ADF Studio and click ‘New Dataset’, as shown in the screenshot:

Then click ‘OK’.

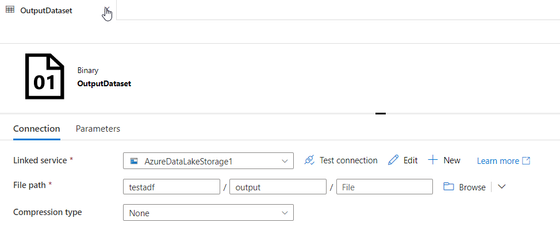

Similarly, create OutputDataset, pointing it to the ‘output’ folder.
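The two datasets differ only in name and location. The post does not say which file format was chosen, so the Python sketch below assumes the Binary format (a byte-for-byte copy; DelimitedText would also work for emp.txt) and an ADLS Gen2 location. The linked service name and the container name `democontainer` are hypothetical.

```python
from __future__ import annotations

import json
from typing import Optional


def binary_dataset(name: str, linked_service: str, file_system: str,
                   folder_path: str, file_name: Optional[str] = None) -> dict:
    """Binary dataset on ADLS Gen2; omit file_name to point at a whole folder."""
    location = {
        "type": "AzureBlobFSLocation",  # ADLS Gen2 location type
        "fileSystem": file_system,      # the container
        "folderPath": folder_path,
    }
    if file_name is not None:
        location["fileName"] = file_name
    return {
        "name": name,
        "properties": {
            "linkedServiceName": {"referenceName": linked_service,
                                  "type": "LinkedServiceReference"},
            "type": "Binary",
            "typeProperties": {"location": location},
        },
    }


LINKED_SERVICE = "AzureDataLakeStorage1"  # hypothetical
CONTAINER = "democontainer"               # hypothetical

input_dataset = binary_dataset("InputDataset", LINKED_SERVICE, CONTAINER, "input", "emp.txt")
output_dataset = binary_dataset("OutputDataset", LINKED_SERVICE, CONTAINER, "output")

if __name__ == "__main__":
    print(json.dumps(input_dataset, indent=2))
```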

Step 5:

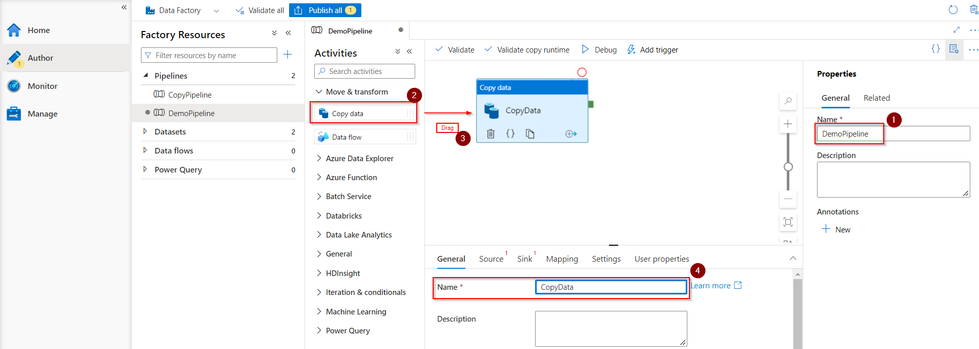

Create the ADF pipeline that copies data from the ‘input’ folder to the ‘output’ folder, as shown in the screenshots:

Give the pipeline a name, drag the ‘Copy Data’ activity onto the designer surface, and name the activity:

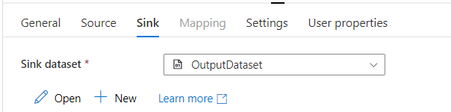

Select InputDataset as the source and OutputDataset as the sink:

Now click ‘Validate’ to check the pipeline, then ‘Debug’ to confirm that it works as expected.
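For reference, the pipeline built in this step boils down to a single Copy activity that reads from one dataset and writes to the other. The Python sketch below assembles such a definition, assuming Binary datasets on ADLS Gen2 (hence `BinarySource`/`BinarySink` with the `AzureBlobFS` store settings); the pipeline and activity names are hypothetical.

```python
import json


def copy_pipeline(name: str, activity_name: str,
                  source_dataset: str, sink_dataset: str) -> dict:
    """Pipeline with one Copy activity between two Binary datasets."""
    copy_activity = {
        "name": activity_name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Binary source and sink copy the file as-is between ADLS Gen2 folders.
            "source": {"type": "BinarySource",
                       "storeSettings": {"type": "AzureBlobFSReadSettings"}},
            "sink": {"type": "BinarySink",
                     "storeSettings": {"type": "AzureBlobFSWriteSettings"}},
        },
    }
    return {"name": name, "properties": {"activities": [copy_activity]}}


# Hypothetical names, for illustration only.
pipeline = copy_pipeline("CopyInputToOutput", "CopyEmpFile", "InputDataset", "OutputDataset")

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
```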

Step 6:

Once the pipeline is validated, let’s create a BlobCreated event trigger, as shown in the screenshot:

Choose Trigger → New:

After you click ‘Continue’, the ‘Data Preview’ page shows the blobs that match the event trigger filters, so you can verify that the filter is correct. Click ‘Continue’ again to reach the ‘Parameters’ section, which is useful when you want to pass parameters to the pipeline. We are not using parameters in this demo, so skip this section and click ‘OK’.
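The trigger created through the wizard is stored as a `BlobEventsTrigger` definition. The Python sketch below builds one that fires on BlobCreated events under the `input` folder for `.txt` files and starts the copy pipeline. The trigger, pipeline, container and account names are hypothetical, and the subscription ID and resource group are left as placeholders.

```python
import json


def storage_scope(subscription_id: str, resource_group: str, account_name: str) -> str:
    # ARM resource ID of the storage account whose events the trigger listens to.
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Storage/storageAccounts/{account_name}")


def blob_events_trigger(name, scope, container, folder, pipeline_name,
                        events=("Microsoft.Storage.BlobCreated",),
                        ends_with=None, ignore_empty_blobs=True) -> dict:
    """Storage event trigger definition that starts a single pipeline."""
    type_properties = {
        "scope": scope,
        "events": list(events),
        # Container and folder are separated by a literal "/blobs/" segment.
        "blobPathBeginsWith": f"/{container}/blobs/{folder}/",
        "ignoreEmptyBlobs": ignore_empty_blobs,
    }
    if ends_with is not None:
        type_properties["blobPathEndsWith"] = ends_with
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": type_properties,
            "pipelines": [{"pipelineReference": {"referenceName": pipeline_name,
                                                 "type": "PipelineReference"}}],
        },
    }


trigger = blob_events_trigger(
    "EmpFileCreatedTrigger",
    storage_scope("<subscription-id>", "<resource-group>", "mydemostorage"),
    container="democontainer", folder="input",
    pipeline_name="CopyInputToOutput", ends_with=".txt",
)

if __name__ == "__main__":
    print(json.dumps(trigger, indent=2))
```

A BlobDeleted trigger differs only in its event list, e.g. `events=("Microsoft.Storage.BlobDeleted",)`.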

Now that all the components are in place, the next step is to ‘Publish’ all the changes. The trigger is not active until the changes are published.

Once publishing completes, let’s test the trigger.

Upload the file ‘emp.txt’ to the input folder. This raises a BlobCreated event, which fires the ADF trigger.

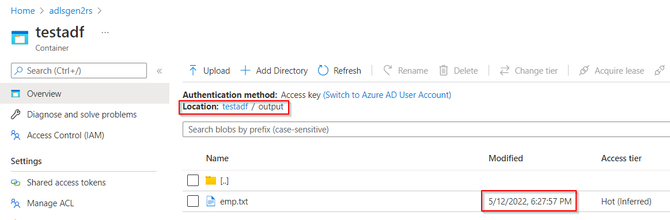

File copied to output folder:

ADF Trigger run:

Pipeline run:

As the screenshots above show, the BlobCreated trigger works as expected and runs the attached ADF pipeline.

A trigger for the BlobDeleted event can be created in the same way.
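To make the filter behaviour concrete, the Python sketch below models how a trigger like the one in this demo decides whether an Event Grid blob event should start the pipeline. It is a simplified local model, not ADF's own matching code: it assumes the Event Grid subject format `/blobServices/default/containers/<container>/blobs/<path>`, treats the begins-with and ends-with filters as plain prefix and suffix checks on the blob path inside the container, and approximates `ignoreEmptyBlobs` using the event's `contentLength`. The container name `democontainer` is hypothetical.

```python
from __future__ import annotations

BLOB_CREATED = "Microsoft.Storage.BlobCreated"
BLOB_DELETED = "Microsoft.Storage.BlobDeleted"
SUBJECT_PREFIX = "/blobServices/default/containers/"


def split_subject(subject: str) -> tuple[str, str]:
    """Split an Event Grid blob subject into (container, blob path)."""
    if not subject.startswith(SUBJECT_PREFIX):
        raise ValueError(f"not a blob event subject: {subject!r}")
    container, sep, blob_path = subject[len(SUBJECT_PREFIX):].partition("/blobs/")
    if not sep:
        raise ValueError(f"missing '/blobs/' segment: {subject!r}")
    return container, blob_path


def would_fire(event: dict, container: str, begins_with: str, ends_with: str = "",
               events: tuple = (BLOB_CREATED,), ignore_empty_blobs: bool = True) -> bool:
    """Simplified model of a storage event trigger's filters."""
    if event["eventType"] not in events:
        return False
    # Approximation of ignoreEmptyBlobs: skip zero-length created blobs.
    if (ignore_empty_blobs and event["eventType"] == BLOB_CREATED
            and event.get("data", {}).get("contentLength") == 0):
        return False
    event_container, blob_path = split_subject(event["subject"])
    return (event_container == container
            and blob_path.startswith(begins_with)
            and blob_path.endswith(ends_with))


if __name__ == "__main__":
    base = SUBJECT_PREFIX + "democontainer/blobs/"
    created = {"eventType": BLOB_CREATED, "subject": base + "input/emp.txt",
               "data": {"contentLength": 512}}
    copied = {"eventType": BLOB_CREATED, "subject": base + "output/emp.txt",
              "data": {"contentLength": 512}}
    deleted = {"eventType": BLOB_DELETED, "subject": base + "input/emp.txt"}
    print(would_fire(created, "democontainer", "input/", ".txt"))  # True
    print(would_fire(copied, "democontainer", "input/", ".txt"))   # False: wrong folder
    print(would_fire(deleted, "democontainer", "input/", ".txt"))  # False: event not subscribed
    print(would_fire(deleted, "democontainer", "input/", ".txt",
                     events=(BLOB_DELETED,)))                      # True
```

The second case shows why scoping the filter to the input folder matters: the copy itself writes `output/emp.txt` and raises another BlobCreated event, but that event does not match the `input/` prefix, so the pipeline does not retrigger itself.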

Reference link:

Create event-based triggers - Azure Data Factory & Azure Synapse | Microsoft Docs

Hope this helps!
