Power BI Reports for Azure Lab Services - Part 2: Visualizing Lab Information

In the last blog post, we used Power BI to visualize data from Cost Management for your labs. This blog post will focus on how to gather information about your labs and visualize that data. We’ll create a sample report that answers the questions “What is the total time a student has used a vm for a lab?” and “How many students have claimed a vm for a lab?”

To create this report, here are the

- Gather the data. We need to call to Azure and get information about our lab related resources and store in format usable by Power BI.

- Get the data. We need to import data into Power BI.

- Transform the data. We will be gathering data from a few types of Lab Services resources and need to update the data schema to show how each piece is related.

- Create the data visualization.

- Publish the report for others to see.

Gather the data

Most of the information we will need can be gathered from the Az.LabServices PowerShell module (preview). If you’ve never used the Az.LabServices, see the LabServices PowerShell Module blog post.

To gather the information, we’ll need to run a PowerShell script routinely to gather all the information. There are few options to choose from. You can create an Azure PowerShell function that has a timer trigger. Alternately, you can create a PowerShell runbook in Azure Automation . In this blog post, I’ll go over running a script in an Azure PowerShell function.

The Azure Function will navigate through the subscription and find

- all labs in the subscription

- all lab virtual machines in a lab

- note any lab virtual machines assigned to/claimed by a student in a lab

- all users for each lab.

Information will be saved in CSV files in separate blob containers in Azure Storage. One blob container for lab related information, one for lab virtual machine related information, and one for lab user information.

- Create an Azure Function App.

- For Runtime Stack, select PowerShell Core.

- For Publish setting, select Code.

- By default, Azure Function Apps use the Consumption (Serverless) plan. The consumption plan has a maximum timeout of 10 minutes. If you have many labs, consider using premium or dedicated plans, which have longer timeouts.

- Create a Timer triggered function.

- When creating the function, you’ll be prompted to enter a CRON expression. Enter 0 0 5 * * * to have the function Default time zone is UTC. To change the time zone, see NCRONTAB time zones.

- Copy code from Publish-LabServicesData.ps1 into the run.ps1 file for the newly created trigger function. Don’t forget to change the parameter signature to match the expected inputs for the function. In our example, param block should be param($Timer).

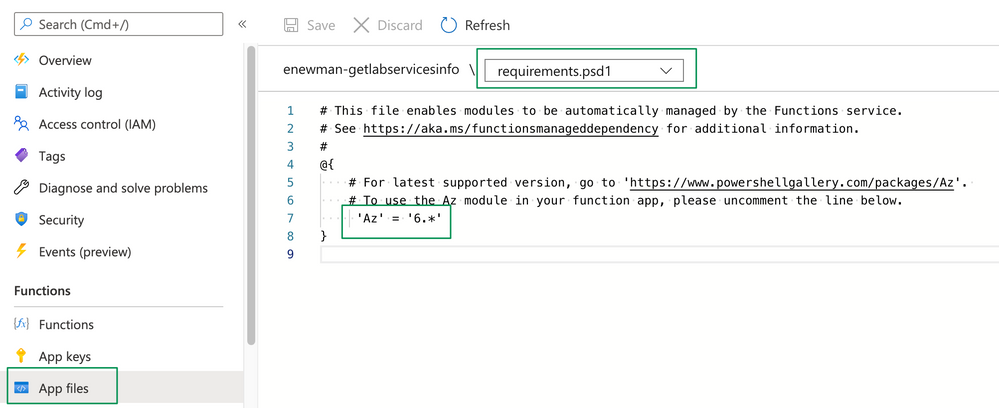

- The code for run.ps1 requires the Az module. Azure PowerShell functions manage their dependencies in the requirements.psd1 file. Uncomment the line to use the Az module.

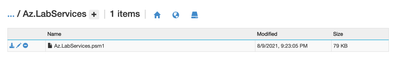

- The code for run.ps1 uses the Az.LabServices.psm1 module. We need to add the psm1 and psmd1 files to the Function App to use the Az.LabServices module. Custom modules need to be placed in a specific directory pattern. The final pattern for the files will be

- Open the Kudu console for the Function App.

- Create a Modules folder under the wwwroot folder, if it doesn’t exist already.

- Create an Az.LabServices folder under the Modules folder. It should be on the same level as the folder for the functions.

- Upload the Az.LabServices.psm1 to the Az.LabServices folder. You can drag the file from your file explorer to the directory structure in Kudo.

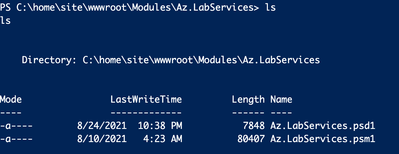

- The Az.LabServices module will need a module manifest to be recognized by the FunctionApp, so let’s create that now. Run the command from the site/wwwroot/Modules/Az.LabServices folder:

New-ModuleManifest -Path Az.LabServices.psd1 -ModuleVersion "1.0" -Author "Microsoft Corporation" -RootModule 'Az.LabServices.psm1' -CmdletsToExport "*"

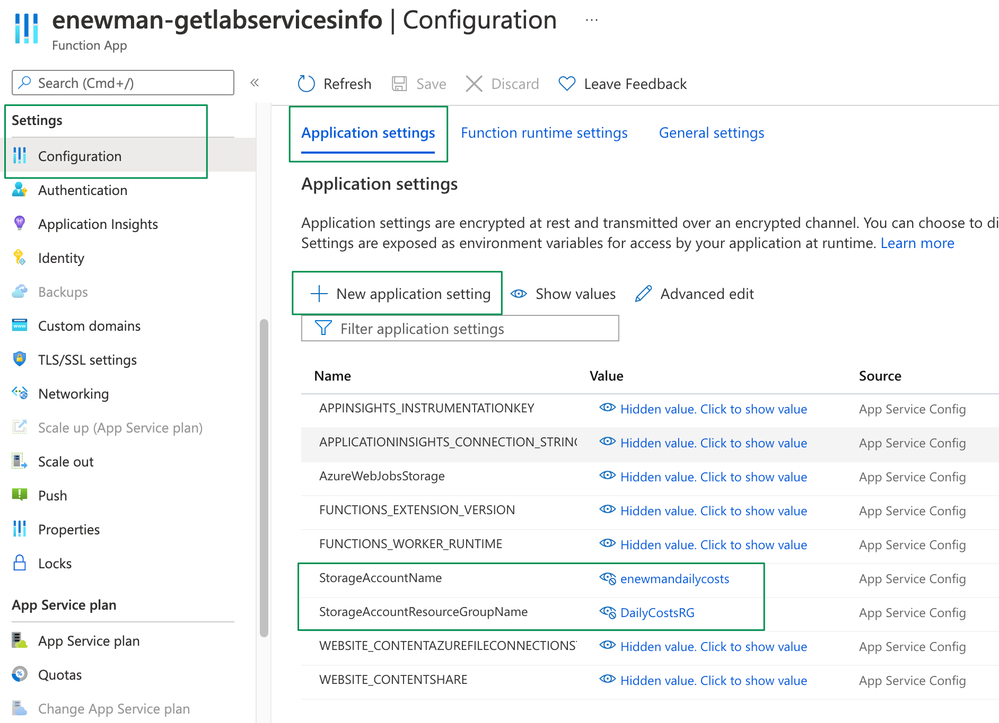

- The function gathers all the information about your labs and saves all the information in blob storage of a Storage Account. Before we run the function, we need to give it the values for our and its resource group. We’ll do that by setting the values in the application settings for the Function App. Add StorageAccountName and StorageAccountResourceGroupName application settings to describe

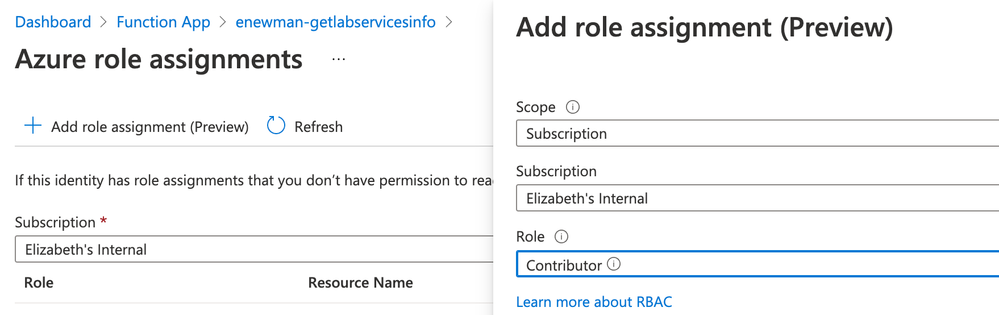

- Lastly, we need to enable a Managed Identity for the Azure Function App. This will allow the app the permissions needed to execute the Az.LabServices and Az.Storage cmdlets used in the run.ps1. Create a system-assigned identity for the Function App or add an user-assigned identity to the Function App.

Important! Don’t forget to create a role assignment for the managed identity. I allowed contributor access to the subscription, but you can create more restrictive assignments.

To create a role assignment, click the Azure role assignments button, then choose Add Role Assignment (preview). One the flyout menu, set the Scope to Subscription, Subscription to your subscription and the Role to Contributor. Click Save. Note, it will take a few minutes for the permission to propagate.

The function is ready to go! Run the function to publish our lab data so we can start creating our Power BI report.

Note: There is some personally identifiable information being recorded, including user email and name. Information will be gathered from resources you specify and saved in storage accounts you specify. You have control over where data is stored, how it used, and when it is purged.

Consume the data

In Power BI Desktop we will use the Azure Table Storage connector to connect to the data. This can be done in the same report used in last week’s blog post or an entirely new report.

- name of the storage account specified in previous step. Select Next.

- Enter the storage account key for the storage account.

- Select the containers r. The default name for the containers (as specified in the run.ps1) are labinfo-dailyexports, vminfo-dailyexports, and userinfo-dailyexports.

- Select Transform Data. This will open the Power Query editor

Transform the data

We now have data for labs, virtual machines and users. Let’s cleanup the data so we can create our reports.

The data from the blobs contains the historical and latest data for labs in the subscription. First thing we need to do is prune the data because the blob storage has both the latest and historical data. Optionally, you can create duplicates of all the tables and use one for historical data and one for the latest data.

For each table (labinfo-dailyexports, vminfo-dailyexports, and userinfo-dailyexports), transform the data as follows.

- If you want to work the latest data, Filter the Name column to keep rows that equal the name of the file that contains the latest file. In our example, that would be labinfo-latest.csv, vminfo-latest.csv, or userinfo-latest.csv, depending on which table you are in.

If you want to work with the historical data, keep only the files that do not equal the latest file.

- Select combine files. PowerBI will automatically change data types for the date and duration columns.

- Save transformations.

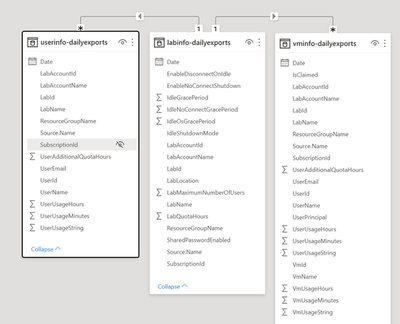

Before creating any visualizations, let’s make sure Power BI has correctly detected how the lab, virtual machine and user tables are related. To do this, open the model view in PowerBI desktop. If working with historical data, all relationships will be many to many. If working with the latest data, relationships should be:

|

Source |

Relationship |

Destination |

|

labinfo-dailyexports[LabId] |

1 to many |

userinfo-dailyexports[LabId] |

|

labinfo-dailyexports[LabId] |

1 to many |

vminfo-dailyexports[LabId] |

The detected link only uses the LabIds of each table. Consider modifying some relationships manually to include all columns that match up. Depending on what type of reports are created, the minimum relationship description may not be enough. Model should look like the following.

Create the data visualization

Now, that we have all the relationships set, let’s cover just a couple of the questions we can answer with this information.

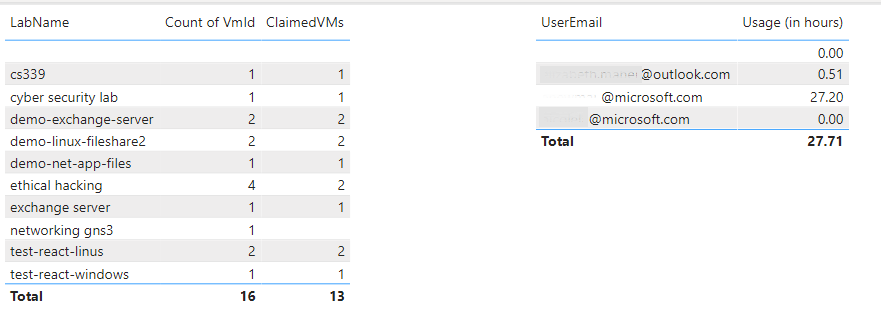

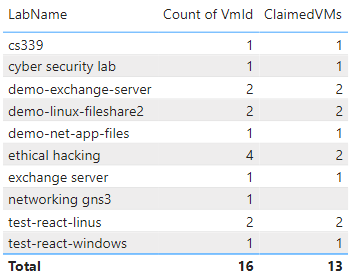

How many students have claimed a virtual machine for a lab?

We’ll create a table that shows the lab name, max number of users the lab can hold (lab capacity), number of users added to the lab, and number of users that have registered for the lab (count of claimed virtual machines). Note, in our data model, when a student is assigned a virtual machine by registering for a course, the virtual machine is considered claimed. Therefore, counting the IsClaimed property on a virtual machine will tell use how many students have a lab virtual machine for their exclusive use for a lab.

We need to create a measure on the table for count of the claimed virtual machines.

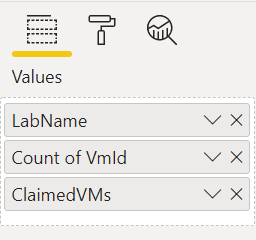

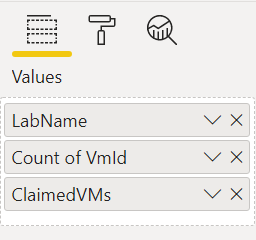

For the table, set values to

- 'vminfo-dailyexports’[LabName]

- count of ‘vminfo-dailyexplorts’[VmId]

- ‘labinfo-dailyexports’[ClaimedVMs]

What is the total time a student has used a vm for a lab?

To see the total usage for a student over all their labs, we’ll use the virtual machine usage information. The total usage is stored as a duration type in the VmUsageString column. It’s important to note, that durations are visualized as the total number of days in decimal form. For our visualization, let’s display the usage in hours. We’ll need to create a new column in the vminfo-dailyexports table that converts the values from days to hours. Don’t forget to set the datatype to decimal before saving the column formula.

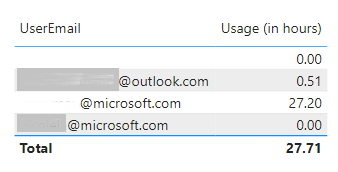

For the table, set the values to

- 'vminfo-dailyexports'[UserEmail]

- sum of 'vminfo-dailyexports'[VMUsageInHours]

Rename the VMUsageInHours column to Usage (in hours) for this visual.

Publish the data

Last step is to publish the report! See Publish datasets and reports from Power BI Desktop for

Happing Reporting!

The Lab Services Team

Published on:

Learn moreRelated posts

Microsoft Azure Maia 200: Scott Guthrie EVP

Modern Visual Tooltips in Power BI (Generally Available)

Power BI’s latest update introduces an enhancement to how users interact with reports with the general availability of modern visual tooltips....

Calculate(), DAX Fusion And Filters On 0 In Power BI

Here’s a fun DAX performance tip that I found this week. Do you have measures that use Calculate() with a filter on a numeric column? Is...

Azure Cosmos DB TV Recap: Supercharging AI Agents with the Azure Cosmos DB MCP Toolkit (Ep. 110)

In Episode 110 of Azure Cosmos DB TV, host Mark Brown is joined by Sajeetharan Sinnathurai to explore how the Azure Cosmos DB MCP Toolkit is c...

Introducing the Azure Cosmos DB Agent Kit: Your AI Pair Programmer Just Got Smarter

The Azure Cosmos DB Agent Kit is an open-source collection of skills that teaches your AI coding assistant (GitHub Copilot, Claude Code, Gemin...