Migration of containerized services from GKE to AKS

Overview

Organizations today have the flexibility to move from one cloud vendor to another for many reasons, and one of the primary enablers is microservices architecture. An application can be designed as a collection of services that interact with each other through interfaces or APIs. Kubernetes is an open-source platform for managing containerized workloads; it handles automatic failover and scaling and keeps services available through features such as self-healing. AKS and GKE are managed (PaaS) Kubernetes services: the vendor takes care of the control plane, and workloads are deployed on the worker nodes.

There is a growing need for vendor-agnostic flexibility when moving between environments, whether from on-premises to public cloud or from one public cloud to another. GKE to AKS is one such requirement, where containers running on GKE are migrated to AKS. This document details the deciding factors, approach, prerequisites, migration planning, and steps required.

Deciding factors for migration

- Networking plays an important role in the migration. Two networking models are available (Azure CNI and Kubenet), so we need to decide which one to use. If we go with CNI, we must size the subnet correctly using the formula:

(number of nodes + 1) + ((number of nodes + 1) * maximum pods per node that you configure)

- Kubenet uses an internal IP range for pod communication, so pods do not consume VNet addresses and the problem of IP address exhaustion does not arise.

- Name resolution is important, since the application might need to resolve names hosted on-premises or with a different cloud vendor. Hybrid name resolution might need to be set up in the cloud (DNS servers in the cloud, or a DNS resolver as a PaaS service).

- Cluster type (public or private), which determines how the API server is accessed. For a public cluster, we need to ensure that NSGs, authorized IP ranges, Azure Firewall, or other security controls are in place. Since this is a migration, the cluster type will usually match the source.

- If traffic is routed through Azure Firewall or any other firewall, we need to ensure the required URLs are whitelisted, taking reference from the following Microsoft article:

https://learn.microsoft.com/en-us/azure/aks/limit-egress-traffic

- Additional URLs might need to be whitelisted if NGINX ingress controllers or Helm charts are used.

- Integration with other services, such as SQL databases, needs planning and downtime. If possible, set up replication between the source and target databases used by GKE and AKS; if that doesn't work, use the import/export option, which needs downtime that depends on the size of the database.

- Point the CI/CD pipelines used for GKE at the AKS cluster to deploy the resources for a like-for-like migration.

- Persistent volumes can be migrated using a backup-and-restore approach, as described in the following article:

https://velotio.medium.com/kubernetes-migration-how-to-move-data-freely-across-clusters-d391dd7c2e0d

- Alternatively, follow the approach described in this Microsoft article:

https://learn.microsoft.com/en-us/azure/aks/aks-migration

- Reroute traffic from GKE to AKS gradually: GKE remains the primary endpoint while the load is progressively shifted to AKS. Once traffic is reaching the application in AKS, reduce the pod count in GKE to 0.

- If Application Gateway is used as the frontend, the proper certificates must be installed on it for SSL termination.

- Set up monitoring using either Azure Monitor Agent (AMA) or Prometheus, as required.
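The CNI subnet-sizing formula from the networking factor above is easy to get wrong by hand, so a small calculator can help. This is a minimal sketch, assuming a single node pool, traditional Azure CNI (every pod takes an IP from the node subnet) and Azure's five reserved addresses per subnet. The +1 allows for the extra node added during an upgrade. The function names and the 50-node / 30-pods example values are illustrative, not taken from the migration.

```python
AZURE_RESERVED_IPS_PER_SUBNET = 5  # Azure reserves 5 addresses in every subnet


def cni_required_ips(node_count: int, max_pods_per_node: int) -> int:
    """(nodes + 1) + ((nodes + 1) * max pods per node), per the CNI formula."""
    if node_count < 1 or max_pods_per_node < 1:
        raise ValueError("node_count and max_pods_per_node must be positive")
    nodes = node_count + 1  # one extra node for upgrades
    return nodes + nodes * max_pods_per_node


def smallest_subnet_prefix(required_ips: int) -> int:
    """Longest prefix (smallest subnet) whose usable addresses cover the need."""
    for prefix in range(29, 0, -1):  # /29 is the smallest subnet Azure allows
        usable = 2 ** (32 - prefix) - AZURE_RESERVED_IPS_PER_SUBNET
        if usable >= required_ips:
            return prefix
    raise ValueError("requirement exceeds the IPv4 address space")


# Hypothetical example: 50 nodes, 30 pods per node.
needed = cni_required_ips(node_count=50, max_pods_per_node=30)
print(needed, f"/{smallest_subnet_prefix(needed)}")  # prints: 1581 /21
```

If the node pools are expected to scale out later, size for the maximum node count rather than the initial one, since the subnet cannot easily be resized once pods are using it.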

Process Flow

The flow below depicts the thought process to achieve the migration of the containerized workload. The process involves 5 stages

- Precheck Validation Ensure all pre-requisites required for the migration are in place. List with the details is shared later in the document.

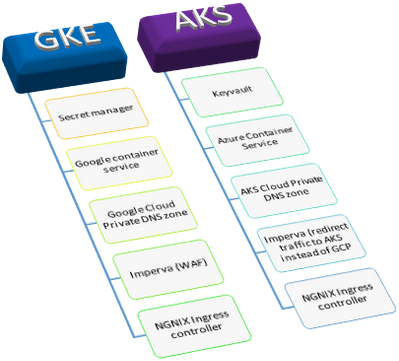

- GKE to AKS service mapping: The workloads were migrated as-is, so it was important to map the services in GKE to their AKS equivalents to ensure the workloads could run in the AKS cluster.

- AKS managed services build: Deploy an AKS cluster with the configuration the workloads need to run. Links for creating the cluster (private/public) are shared later in the document.

- Container migration: Once the AKS cluster is built, migrate the workloads by executing the pipelines (this can be whichever CI/CD tool was used to deploy the GKE containers).

- Post-migration validation: Monitor the workloads to ensure all applications run without issues under various load conditions.

Mapping of services from GKE to AKS

To achieve an as-is migration, it is important to map the services provided by GKE to those provided by AKS, so that service configuration can be planned and deployed in a way that allows workloads to run without issues. The diagram below shows the service mapping for the services used in this migration.

Pre-requisites

- An Azure subscription should be in place before the migration activity starts.

- The landing zone and the network should be set up for connectivity to on-premises and GCP.

- The required URLs must be whitelisted on the firewall for AKS and the applications to function smoothly:

https://learn.microsoft.com/en-us/azure/aks/limit-egress-traffic

Additional URLs will be needed if an ingress controller or certificate manager is used.

- Name resolution should be in place for hybrid names and, for a private AKS cluster, for the cluster's private DNS name.

Options include DNS servers in Azure (such as Active Directory DNS servers) with conditional forwarding, or Azure DNS Private Resolver.

- Review the traffic flows in GCP and ensure the required network connectivity is in place and the ports are open on the Azure side for the applications to function.

- Applications might need to communicate with an Exchange server to send notifications, so the integration with Exchange and any other add-ons must be configured for the applications to work properly.
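Before building the cluster, it can be useful to confirm that the hybrid and private DNS names actually resolve from inside the Azure network. The sketch below is one hedged way to do that from a VM in the AKS VNet (or later from a pod), using only the operating system's resolver through Python's standard `socket` module. The helper names `resolve_names` and `unresolved` are hypothetical; supply your own on-premises, GCP, and private cluster FQDNs.

```python
import socket
from typing import Dict, Iterable, List


def resolve_names(hostnames: Iterable[str]) -> Dict[str, List[str]]:
    """Resolve each name with the OS resolver and return its unique IPs.

    An empty list means the name did not resolve, which may point to a
    missing conditional forwarder, resolver rule, or private DNS zone link.
    """
    results: Dict[str, List[str]] = {}
    for name in hostnames:
        try:
            infos = socket.getaddrinfo(name, None)
        except (socket.gaierror, UnicodeError):
            results[name] = []
            continue
        # info[4] is the sockaddr tuple; its first element is the IP address.
        results[name] = sorted({info[4][0] for info in infos})
    return results


def unresolved(results: Dict[str, List[str]]) -> List[str]:
    """Names that failed to resolve, for a quick pass/fail summary."""
    return [name for name, ips in results.items() if not ips]
```

For example, `unresolved(resolve_names(["app.onprem.example.internal", "api.gcp.example.internal"]))` (hypothetical names) returns the names that still need DNS work. Run from a pod, the same check typically exercises the cluster DNS path, which forwards to the VNet's DNS settings.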

Pre-check and validations

- Traffic to the Ubuntu repository was blocked, which resulted in nodes being recreated and the cluster failing to start. After the URLs were whitelisted on Azure Firewall, the cluster started fine.

- Name resolution was not working for the private DNS name of the cluster, so the cluster did not start. The VNet where the AKS cluster was built pointed to on-premises DNS servers, and conditional forwarding was not set up because there were no DNS servers in Azure. As we could not set up DNS servers in Azure, we deployed Azure DNS Private Resolver with outbound endpoints to make name resolution work.

- After migrating the namespace, we were unable to browse the site; it returned a "Page not found" error because the health probe was not set correctly on the Application Gateway. After we set up the probe on /health for the ingress controller, we were able to browse the site.

- Certain modules were not working because they were being blocked by the WAF. After talking to the security team, we worked with Microsoft to create WAF exclusions, after which the modules started working.

- Certain functions, such as uploading, were not working because notifications were failing owing to a communication failure with the SMTP relay. Ensure that the pods can resolve the SMTP relay and that telnet to the SMTP relay agent works.
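Two of the issues above (the /health probe and the SMTP relay) come down to simple reachability checks that can be scripted from a pod or a VM in the AKS VNet. The following is a minimal sketch using only the Python standard library. `http_probe_ok` and `tcp_port_open` are hypothetical helper names; the 200-399 healthy range reflects Application Gateway's default probe match, and the relay host name and port in the usage note are assumptions.

```python
import socket
import urllib.error
import urllib.request


def http_probe_ok(url: str, timeout: float = 5.0) -> bool:
    """Mimic a basic health probe: healthy if the final status is 200-399.

    200-399 is Application Gateway's default healthy range; adjust it if a
    custom probe match condition is configured.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except urllib.error.HTTPError as err:  # raised for 4xx/5xx responses
        return 200 <= err.code < 400
    except OSError:  # URLError, connection refused, timeout, DNS failure
        return False


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Telnet-style reachability check: can a TCP connection be opened?

    This proves name resolution and the network path/firewall rules only; it
    does not speak SMTP or authenticate against the relay.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `http_probe_ok("http://<ingress-ip>/health")` approximates what the gateway probe sees, and `tcp_port_open("smtp-relay.example.internal", 25)` (hypothetical host) replaces the manual telnet test.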

Step by Step Process for Migration

- Create the AKS cluster based on the requirements defined in the previous sections, covering:

- Private or public cluster

- AKS authentication and authorization method

- Node size

- Kubenet/CNI

- Pods per node

- CIDR ranges for nodes, services, and the Docker bridge

- AKS cluster version

- Cluster name based on the organization's naming convention

- Availability zones

Microsoft articles that can be referred to for the step-by-step process:

Private cluster creation

https://learn.microsoft.com/en-us/azure/aks/private-clusters#create-a-private-aks-cluster

Public cluster creation

https://learn.microsoft.com/en-us/azure/aks/learn/quick-kubernetes-deploy-portal?tabs=azure-cli
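The cluster decisions listed above map fairly directly onto `az aks create` parameters, so recording them once and generating the command keeps them consistent across environments. This is a minimal sketch under stated assumptions: `AksClusterPlan` and `build_az_aks_create` are hypothetical names, all values are illustrative, and the command is only printed, not executed. The Docker bridge CIDR is left out because recent AKS versions no longer rely on Docker; check the current `az aks create` reference before adding it. Pick a Kubernetes version that `az aks get-versions --location <region>` lists.

```python
import ipaddress
import shlex
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AksClusterPlan:
    """Hypothetical record of the cluster decisions; field names are illustrative."""
    resource_group: str
    name: str  # follow the organization's naming convention
    kubernetes_version: str
    node_count: int
    node_vm_size: str
    network_plugin: str  # "azure" (Azure CNI) or "kubenet"
    max_pods: int
    service_cidr: str
    dns_service_ip: str
    pod_cidr: Optional[str] = None  # only meaningful with kubenet
    vnet_subnet_id: Optional[str] = None  # existing landing-zone subnet
    zones: List[str] = field(default_factory=list)
    private_cluster: bool = False
    entra_integration: bool = True  # Entra ID (Azure AD) auth plus Azure RBAC


def build_az_aks_create(plan: AksClusterPlan) -> List[str]:
    """Return the argument list for `az aks create`; nothing is executed here."""
    if plan.network_plugin not in ("azure", "kubenet"):
        raise ValueError("network_plugin must be 'azure' or 'kubenet'")
    # The cluster DNS service IP has to fall inside the service CIDR.
    if ipaddress.ip_address(plan.dns_service_ip) not in ipaddress.ip_network(plan.service_cidr):
        raise ValueError("dns_service_ip must be inside service_cidr")

    args = [
        "az", "aks", "create",
        "--resource-group", plan.resource_group,
        "--name", plan.name,
        "--kubernetes-version", plan.kubernetes_version,
        "--node-count", str(plan.node_count),
        "--node-vm-size", plan.node_vm_size,
        "--network-plugin", plan.network_plugin,
        "--max-pods", str(plan.max_pods),
        "--service-cidr", plan.service_cidr,
        "--dns-service-ip", plan.dns_service_ip,
    ]
    if plan.network_plugin == "kubenet" and plan.pod_cidr:
        args += ["--pod-cidr", plan.pod_cidr]
    if plan.vnet_subnet_id:
        args += ["--vnet-subnet-id", plan.vnet_subnet_id]
    if plan.zones:
        args += ["--zones", *plan.zones]
    if plan.private_cluster:
        args.append("--enable-private-cluster")
    if plan.entra_integration:
        args += ["--enable-aad", "--enable-azure-rbac"]
    return args


plan = AksClusterPlan(
    resource_group="rg-aks-migration",  # hypothetical names and ranges
    name="aks-app-prod-01",
    kubernetes_version="1.29",
    node_count=3,
    node_vm_size="Standard_D4s_v5",
    network_plugin="kubenet",
    max_pods=110,
    service_cidr="10.0.0.0/16",
    dns_service_ip="10.0.0.10",
    pod_cidr="10.244.0.0/16",
    zones=["1", "2", "3"],
    private_cluster=True,
)
print(shlex.join(build_az_aks_create(plan)))
```

Generating the command from one record also makes it easy to review the private/public, networking, and authentication choices with the security and network teams before anything is deployed.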

- Steps to migrate namespaces and applications using a pipeline:

- Set up a GitHub runner (self-hosted agent) inside the Azure network where the AKS cluster resides. The steps for setting up the VM, including the PAT and the commands the VM needs to communicate with the GitHub repository, are provided on the GitHub page.

- The link below explains the process of setting up the self-hosted agent:

https://docs.github.com/en/actions/using-github-hosted-runners/about-github-hosted-runners

- GitHub Actions is used in conjunction with the GitHub runner to deploy the containers/workloads to the AKS cluster.

- Install the certificate for the domain, or a wildcard certificate, on the Application Gateway for SSL termination.

- Update the connection strings to point to the databases in Azure.

- Migrate the traffic by gradually switching it over from GKE to AKS (DNS aliases can be created for the Azure endpoints). The number of pods in GKE can then be scaled to zero so that the traffic is routed to the AKS cluster.

- Browse the various applications hosted in Azure and ensure that the web pages are displayed correctly.
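The gradual switchover described in the steps above can be expressed as a staged schedule with a health gate between stages. This is a minimal sketch, not the procedure used in this migration: `apply_weights` and `is_healthy` are hypothetical hooks (for example, updating weighted DNS records and checking error rates or the /health probe), and the stage percentages are illustrative. With DNS-based switching, allow at least the record TTL between stages so clients actually pick up the new split.

```python
from typing import Callable, List, Tuple

Split = Tuple[int, int]  # (percent of traffic to GKE, percent to AKS)


def cutover_schedule(aks_steps: List[int]) -> List[Split]:
    """Turn increasing AKS percentages into (GKE, AKS) traffic splits."""
    if not aks_steps or aks_steps[-1] != 100:
        raise ValueError("the last stage must send 100% of traffic to AKS")
    if aks_steps[0] <= 0 or any(b <= a for a, b in zip(aks_steps, aks_steps[1:])):
        raise ValueError("stages must be positive and strictly increasing")
    return [(100 - p, p) for p in aks_steps]


def run_cutover(
    schedule: List[Split],
    apply_weights: Callable[[int, int], None],
    is_healthy: Callable[[], bool],
) -> int:
    """Apply each split in order; on a failed health check, restore the last
    good split and stop. Returns the AKS percentage left in place."""
    last_good: Split = (100, 0)  # GKE is still the primary endpoint
    for gke, aks in schedule:
        apply_weights(gke, aks)
        if not is_healthy():
            apply_weights(*last_good)  # roll back to the last known-good split
            return last_good[1]
        last_good = (gke, aks)
    return last_good[1]


if __name__ == "__main__":
    stages = cutover_schedule([10, 25, 50, 100])
    final = run_cutover(stages, lambda g, a: print(f"GKE {g}% / AKS {a}%"), lambda: True)
    print("AKS share:", final)
```

Once the AKS share stays at 100%, the GKE deployments can be scaled down with `kubectl scale deployment <name> --replicas=0` against the GKE context, which keeps a quick rollback path until GKE is decommissioned.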

Post Migration Validation

- Monitor the workloads to ensure all applications run without issues under various load conditions.

Author of this article: Aneeta Vithalkar, Architect, DXC Technology India
