NLP Inferencing on Confidential Azure Container Instance

Thanks to advances in natural language processing (NLP) using machine learning techniques, and to highly successful new-age LLMs from OpenAI, Google and Microsoft, NLP-based modeling and inferencing has become one of the top areas of customer interest. These techniques are widely used in almost all Microsoft products, and there is also huge demand from our customers to use them in their business apps. Along with that comes demand for privacy-preserving infrastructure to run such apps.

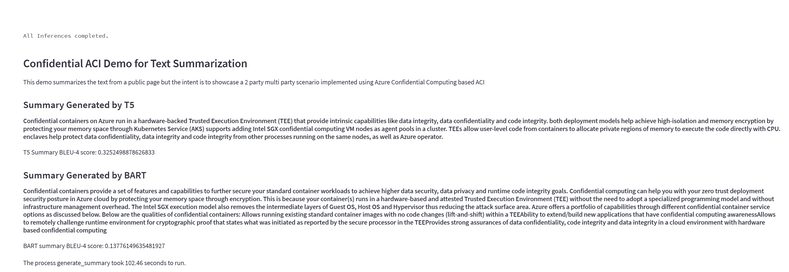

In this blog I am going to show how to run basic NLP text summarization with T5 and BART in a Python app built with Streamlit (streamlit.io), and how to run that app as a container in a Confidential ACI container group. Before we go there, here are some details about Azure Confidential Computing for the uninitiated. Azure already encrypts data at rest and in transit, and confidential computing helps protect data in use, including the cryptographic keys. Azure confidential computing helps customers prevent unauthorized access to data in use, including from the cloud operator, by processing data in a hardware-based and attested Trusted Execution Environment (TEE).

In this article we will create a Python Streamlit app and deploy it to Confidential ACI. The use case is loosely based on a two-party secure multi-party computation (MPC) scenario: party I provides text data in encrypted form because it cannot allow party II to see the text. However, its contract with party II allows party II to summarize the text and consume the summary. The entire process runs in the Azure tenant that belongs to party II, but thanks to Confidential ACI, party II is at no point able to see the original text data from party I.

To start with, we need to create and deploy this app on ACI. Please follow the instructions given below.

Pre-requisites

Before starting, please create the following pre-requisites in the Azure portal or with the Azure CLI:

a) Create your app and push it to Azure Container Registry (see the appendix for how I created my app and its ACR entry)

b) Create a Managed HSM instance (see "Quickstart - Provision and activate an Azure Managed HSM" on Microsoft Learn)

c) Create an Azure Storage account and a container inside it (see "Create a storage account - Azure Storage" on Microsoft Learn)

d) Create a SAS token for the container you created in Step c. https://learn.microsoft.com/en-us/azure/cognitive-services/translator/document-translation/how-to-guides/create-sas-tokens

e) Create a user-assigned managed identity (see "Manage user-assigned managed identities - Microsoft Entra" on Microsoft Learn)

f) Give your AAD user and the newly created user-assigned managed identity the following roles on the MHSM:

- Managed HSM Crypto User

- Managed HSM Crypto Officer
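Before starting the demo, it can be worth sanity-checking the SAS token from step d, since an expired token or a missing permission only shows up later as a failed upload. Below is a minimal sketch, assuming the token is the query-string form (`sp=...&se=...&sig=...`) that the portal or CLI generates. It only reads the standard `sp` (permissions) and `se` (expiry) fields and cannot verify the signature. The default required permissions `rcw` (read, create, write) are my assumption for uploading and later reading the image; adjust them to your setup.

```python
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs


def check_sas_token(sas_token: str, required_perms: str = "rcw",
                    now: Optional[datetime] = None) -> list[str]:
    """Return problems found in a SAS query string; an empty list means it looks usable.

    Only the 'sp' (signed permissions) and 'se' (signed expiry) fields are inspected;
    the signature itself cannot be checked offline.
    """
    params = parse_qs(sas_token.lstrip("?"))
    problems = []

    granted = params.get("sp", [""])[0]
    missing = "".join(p for p in required_perms if p not in granted)
    if missing:
        problems.append(f"missing permissions: {missing}")

    expiry_raw = params.get("se", [None])[0]
    if expiry_raw is None:
        problems.append("no expiry ('se') field")
        return problems

    # 'se' is an ISO 8601 UTC time such as 2030-01-31T12:00:00Z (or just a date)
    expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
    if expiry.tzinfo is None:
        expiry = expiry.replace(tzinfo=timezone.utc)
    if expiry <= (now or datetime.now(timezone.utc)):
        problems.append(f"token expired at {expiry_raw}")
    return problems


# Example:
# print(check_sas_token("<your SAS token>"))
```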

Demo Steps (a Windows or Linux environment will work; I am using Windows 11 here with WSL2 for an Ubuntu shell):

a) Open WSL2 on Windows and run the following installs:

Now, switch to /mnt

b) Clone our main GitHub repo as follows (you may need to install git first if it is not installed):

c) Switch to the folder containing the next shell script as follows:

d) Install Python to run this script, if it is not already installed

e) Run the shell script ./generatefs.sh

This should generate the encrypted file system image (.img file) and the key file (.bin file). We will use both in later steps.

This step creates an encrypted file system that will be uploaded in the next step to the Azure Storage account as a block blob.

f) Now install the Azure CLI if it is not already installed.

Upload encfs.img to blob storage. (Before this step, make sure you have created an Azure Storage account, a container inside it and a SAS token, as described in the pre-requisites.)
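If you would rather not install the CLI, the upload boils down to a single Put Blob call against the Blob Storage REST API, authorized by the SAS token. A minimal standard-library sketch: it builds the request with the SAS token appended to the blob URL and the `x-ms-blob-type: BlockBlob` header that Put Blob requires. The account, container and token values are placeholders. Put Blob has a per-request size limit, so for very large images the CLI, which uploads large files in blocks, remains the simpler choice.

```python
import urllib.request
from urllib.parse import quote


def build_put_blob_request(container_url: str, blob_name: str, sas_token: str,
                           data: bytes) -> urllib.request.Request:
    """Build (but do not send) a Put Blob request that stores `data` as a block blob."""
    url = f"{container_url.rstrip('/')}/{quote(blob_name)}?{sas_token.lstrip('?')}"
    headers = {
        "x-ms-blob-type": "BlockBlob",  # required by Put Blob
        "Content-Type": "application/octet-stream",
    }
    return urllib.request.Request(url, data=data, headers=headers, method="PUT")


# Example (placeholders, not real values); this reads the whole image into memory:
# with open("encfs.img", "rb") as f:
#     req = build_put_blob_request("https://<account>.blob.core.windows.net/<container>",
#                                  "encfs.img", "<sas-token>", f.read())
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)  # Put Blob answers 201 Created on success
```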

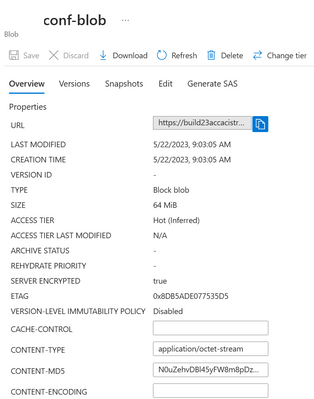

g) After the step above, encfs.img should show up in your storage container in the portal.

h) Now run the following to figure out the key that was used to encrypt the file system:
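As a stand-alone alternative for inspecting the key, the Python sketch below prints a binary key file as hex, similar to what a hex-dump tool shows. The file name `keyfile.bin` is an assumption; use the .bin file that generatefs.sh produced for you. Treat the output as a secret: anyone holding this key can decrypt the file system image.

```python
from pathlib import Path


def key_file_to_hex(path: str) -> str:
    """Read a raw binary key file and return its bytes as a lowercase hex string."""
    key = Path(path).read_bytes()
    if not key:
        raise ValueError(f"{path} is empty")
    return key.hex()


# Example (hypothetical file name):
# key_hex = key_file_to_hex("keyfile.bin")
# print(f"{len(key_hex) // 2}-byte key: {key_hex}")
```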

The following is a sample template of the file called encfs-sidecar-args.json which is located in the encfs folder (/confidential-sidecar-containers/examples/encfs/)

Please fill in the rest of the details.

i) Install base64 if not already installed:

Use the following to convert the above JSON file to base64 (since it is going to be passed as an argument inside another JSON template):
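The same conversion can be done in Python, which also catches malformed JSON before it gets buried inside the ARM template. A minimal sketch: it parses the file, re-serializes it compactly and base64-encodes it as a single line. A single line matters because GNU `base64` wraps its output at 76 characters by default (`base64 -w 0` turns that off), and a wrapped value is awkward to paste into a JSON string. It assumes the sidecar only needs the decoded text to be valid JSON, so dropping the original whitespace should not matter.

```python
import base64
import json
from pathlib import Path


def json_file_to_base64(path: str) -> str:
    """Validate a JSON file and return it as one unwrapped base64 line."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))  # raises on malformed JSON
    compact = json.dumps(config, separators=(",", ":"))
    return base64.b64encode(compact.encode("utf-8")).decode("ascii")


def base64_to_json(value: str) -> dict:
    """Inverse helper: decode a base64 value back into JSON to double-check it."""
    return json.loads(base64.b64decode(value))


# Example:
# print(json_file_to_base64("encfs-sidecar-args.json"))
```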

Then take the base64 value and update the following section (the value field) in aci-arm-template.json (in /confidential-sidecar-containers/examples/encfs):
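Editing the value by hand is fine; for repeat runs, a small script helps. The sketch below walks the standard ACI ARM template layout (`resources`, then `properties.containers`, then `properties.environmentVariables`) and sets the value of a named environment variable. The variable name `EncfsSideCarArgs` used in the example is an assumption based on the encfs sample; check the name in your copy of the template.

```python
import json
from pathlib import Path

ACI_TYPE = "microsoft.containerinstance/containergroups"


def set_env_var(template: dict, var_name: str, value: str) -> int:
    """Set `var_name` to `value` wherever a container group container declares it.

    Returns how many environment variables were updated.
    """
    updated = 0
    for resource in template.get("resources", []):
        if resource.get("type", "").lower() != ACI_TYPE:
            continue
        for container in resource.get("properties", {}).get("containers", []):
            for env in container.get("properties", {}).get("environmentVariables", []):
                if env.get("name") == var_name:
                    env["value"] = value
                    updated += 1
    return updated


def update_template_file(path: str, var_name: str, value: str) -> None:
    """Load the template, update the variable and write it back (fails if not found)."""
    template = json.loads(Path(path).read_text(encoding="utf-8"))
    if set_env_var(template, var_name, value) == 0:
        raise KeyError(f"no environment variable named {var_name!r} in {path}")
    Path(path).write_text(json.dumps(template, indent=2), encoding="utf-8")


# Example (variable name assumed from the encfs sample):
# update_template_file("aci-arm-template.json", "EncfsSideCarArgs", "<base64 value>")
```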

Then follow the instructions in azure-cli-extensions/src/confcom/azext_confcom (in the Azure/azure-cli-extensions repo on GitHub) to install the confcom extension.

j) Next let's modify the aci-arm-template.json file.

At this point we need to generate the confidential computing enforcement (CCE) policy with the confcom extension installed in the previous step. Before that, however, we must replace the container information already provided in aci-arm-template.json with the details of our own container.

I would also add the following value but it is optional.

Please make sure that the CCE policy value (the ccePolicy field) is an empty string for now; it will be generated when we run the next command.
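Both edits in this step can also be scripted. A minimal sketch, assuming the same ACI ARM layout: it points a named container at your ACR image, exposes port 8501 (Streamlit's default port) on that container and sets `properties.confidentialComputeProperties.ccePolicy` to an empty string. The container name and image in the example are hypothetical placeholders. The group-level public ports (`properties.ipAddress.ports`) must also list the app port for it to be reachable; this sketch leaves that part to you.

```python
ACI_TYPE = "microsoft.containerinstance/containergroups"


def configure_app_container(template: dict, container_name: str, image: str,
                            port: int = 8501) -> bool:
    """Point `container_name` at `image`, expose `port`, and blank out the CCE policy.

    Returns True if the named container was found.
    """
    found = False
    for resource in template.get("resources", []):
        if resource.get("type", "").lower() != ACI_TYPE:
            continue
        props = resource.setdefault("properties", {})
        for container in props.get("containers", []):
            if container.get("name") == container_name:
                cprops = container.setdefault("properties", {})
                cprops["image"] = image
                cprops["ports"] = [{"port": port, "protocol": "TCP"}]
                found = True
        # Left empty on purpose: the policy generator fills it in during the next step
        props.setdefault("confidentialComputeProperties", {})["ccePolicy"] = ""
    return found


# Example (hypothetical names):
# configure_app_container(template, "summarizer-app", "<registry>.azurecr.io/<image>:<tag>")
```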

k) Now let's run the confcom policy generator (az confcom acipolicygen) to fill in the CCE policy.

Note: Ensuring that debug mode is off, and auditing the infrastructure code, currently have to be done by the parties together to make sure nothing in it violates confidentiality.

After this runs, the ccePolicy field in the same template file, aci-arm-template.json, contains a long base64-encoded string: the generated policy.
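Before deploying, a quick check can confirm that the generator did its job. The sketch below inspects the template only: it verifies that the container group's `sku` is `Confidential` and that `ccePolicy` is non-empty and decodes from base64 to text. It does not validate the policy contents, and if your template passes the policy in through an ARM parameter, the check would need to look at that parameter instead.

```python
import base64
import binascii

ACI_TYPE = "microsoft.containerinstance/containergroups"


def check_confidential_template(template: dict) -> list[str]:
    """Return a list of problems; an empty list means the template looks ready to deploy."""
    groups = [r for r in template.get("resources", [])
              if r.get("type", "").lower() == ACI_TYPE]
    if not groups:
        return ["no container group resource found"]

    problems = []
    for group in groups:
        props = group.get("properties", {})
        if props.get("sku") != "Confidential":
            problems.append(f"sku is {props.get('sku')!r}, expected 'Confidential'")
        policy = props.get("confidentialComputeProperties", {}).get("ccePolicy", "")
        if not policy:
            problems.append("ccePolicy is empty; run the policy generator first")
            continue
        try:
            # The generated policy is text, so it should decode cleanly
            base64.b64decode(policy, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            problems.append("ccePolicy is not base64-encoded UTF-8 text")
    return problems
```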

The command output also contains a value that we need in the next step.

This needs to be filled into the file called importkeyconfig.json at the same location (/confidential-sidecar-containers/examples/encfs)

l) Next, we upload to MHSM the key that will be used to decrypt the file system. This key will later be released to the sidecar container through the Secure Key Release (SKR) process.

To upload the key, we need to fill in another JSON template, importkeyconfig.json, located in (/confidential-sidecar-containers/examples/encfs). Here is a sample.
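What ends up attached to the key in MHSM is a Secure Key Release policy. The sketch below only builds the general shape of such a policy, following the published release-policy grammar (`version`, `anyOf`, `authority`, `allOf`, `claim`, `equals`); it is not the importkeyconfig.json format itself. The attestation endpoint and the claim names (`x-ms-attestation-type`, `x-ms-sevsnpvm-is-debuggable`, `x-ms-sevsnpvm-hostdata`) are assumptions for an SEV-SNP confidential ACI deployment, and the host data value is presumably the value printed by the policy generator in step k; compare all of this with the sample file in the repo. The intent of a policy like this is that only a non-debuggable TEE started with the matching CCE policy can obtain the key, which complements the debug-mode note in step k.

```python
def build_release_policy(authority: str, host_data: str) -> dict:
    """Return an SKR release policy shape for an SEV-SNP, non-debuggable TEE.

    The claim names are assumptions for confidential ACI; compare them with the
    sample importkeyconfig.json before relying on them.
    """
    return {
        "version": "1.0.0",
        "anyOf": [  # any one of these authorities may vouch for the TEE...
            {
                "authority": authority,
                "allOf": [  # ...provided every one of these claims matches
                    {"claim": "x-ms-attestation-type", "equals": "sevsnpvm"},
                    {"claim": "x-ms-sevsnpvm-is-debuggable", "equals": False},
                    {"claim": "x-ms-sevsnpvm-hostdata", "equals": host_data},
                ],
            }
        ],
    }


# Example (placeholder values):
# build_release_policy("https://<attestation-endpoint>", "<value printed by the policy generator>")
```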

Once the values are filled in, we can upload the key to MHSM.

Before running the actual upload, we need to generate a short-lived bearer token and put it into the template above, as shown.

To generate the bearer token, we can use the following:
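Because the token is short-lived, a stale token is an easy way to end up with an authorization failure. One way to obtain a token for Managed HSM is `az account get-access-token --resource https://managedhsm.azure.net`, whose JSON output carries the token in its `accessToken` field. The sketch below decodes the payload of such a JWT without verifying its signature and reports how many seconds remain before the standard `exp` claim is reached; it is only a convenience check.

```python
import base64
import json
import time
from typing import Optional


def seconds_until_expiry(token: str, now: Optional[float] = None) -> float:
    """Return seconds until the JWT's 'exp' claim (negative if already expired).

    The signature is NOT verified; this only reads the payload.
    """
    try:
        payload_b64 = token.split(".")[1]
    except IndexError:
        raise ValueError("not a JWT (expected header.payload.signature)") from None
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # JWTs use unpadded base64url
    payload = json.loads(base64.urlsafe_b64decode(padded))
    now = time.time() if now is None else now
    return payload["exp"] - now


# Example:
# print(seconds_until_expiry("<access token>"))
```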

The template should now have all placeholders filled in with real values. Let's run it as follows:
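Since a single leftover placeholder is enough to make the key upload or the deployment fail, a small check over the JSON files can help. The sketch below walks any JSON document and lists the paths of string values that are still empty or still look like a `<placeholder>`. The `<...>` convention is an assumption; adapt the pattern to however your copy of the templates marks values to fill in. Some fields may be intentionally empty, so treat the output as a checklist rather than a list of errors.

```python
import json
import re
from pathlib import Path
from typing import Any, Iterator

PLACEHOLDER = re.compile(r"<[^<>]+>")  # assumed placeholder style, e.g. "<mhsm-name>"


def find_unfilled(node: Any, path: str = "$") -> Iterator[str]:
    """Yield JSON paths whose string values are empty or contain a <placeholder>."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_unfilled(value, f"{path}.{key}")
    elif isinstance(node, list):
        for i, value in enumerate(node):
            yield from find_unfilled(value, f"{path}[{i}]")
    elif isinstance(node, str) and (not node.strip() or PLACEHOLDER.search(node)):
        yield path


def report_unfilled(file_path: str) -> list[str]:
    """Load a JSON file and return the paths that still need a value."""
    return list(find_unfilled(json.loads(Path(file_path).read_text(encoding="utf-8"))))


# Example:
# for p in report_unfilled("importkeyconfig.json"):
#     print("still to fill:", p)
```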

Check and validate that the key has been uploaded to MHSM (for example in the portal, or with az keyvault key show --hsm-name <mhsm-name> --name <key-name>).

m) The time has finally come to create the container group from the template aci-arm-template.json. This can be done from the CLI or the portal; I am going to use a template deployment from the portal.

Step 1: Copy the contents of the template from VS Code or whichever editor you have used so far.

Step 2: Go to the Azure portal and search for "Deploy a custom template"

Step 3: Click on Build Your Own Template

Step 4: Replace the contents of the editor with the contents of your aci-arm-template.json and click Save

Step 5: It will show a page like this

Step 5a: Deploy by clicking Review + create!

Once the deployment is done, you should be able to reach your app from the portal.

APPENDIX

Here is how to create and deploy the app; the files can also be found on our official GitHub page.
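For orientation, here is a minimal sketch of the summarization core such an app could use. It relies on the Hugging Face `transformers` `pipeline("summarization", ...)` API; the checkpoints `t5-small` and `facebook/bart-large-cnn` are my assumptions, since the exact models behind the app are not stated here. The heavy import happens lazily and each pipeline is cached, so a model is loaded only once per process.

```python
from functools import lru_cache

# Hypothetical choice of public checkpoints for the two model families
MODELS = {"T5": "t5-small", "BART": "facebook/bart-large-cnn"}


@lru_cache(maxsize=None)
def get_summarizer(checkpoint: str):
    """Load (once) and return a transformers summarization pipeline."""
    from transformers import pipeline  # heavy dependency, imported only when needed
    return pipeline("summarization", model=checkpoint)


def summarize(text: str, family: str = "BART", max_length: int = 130,
              min_length: int = 30) -> str:
    """Summarize `text` with the model family chosen in the UI ("T5" or "BART")."""
    if not text.strip():
        raise ValueError("nothing to summarize")
    if family not in MODELS:
        raise ValueError(f"unknown model family {family!r}; choose one of {sorted(MODELS)}")
    summarizer = get_summarizer(MODELS[family])
    result = summarizer(text, max_length=max_length, min_length=min_length, do_sample=False)
    return result[0]["summary_text"]
```

In the Streamlit script, this could be wired to widgets such as `st.selectbox` (model family), `st.text_area` (input text) and `st.button`, with the summary shown via `st.write`; Streamlit's own `st.cache_resource` is an alternative to `lru_cache` for caching the pipeline.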
