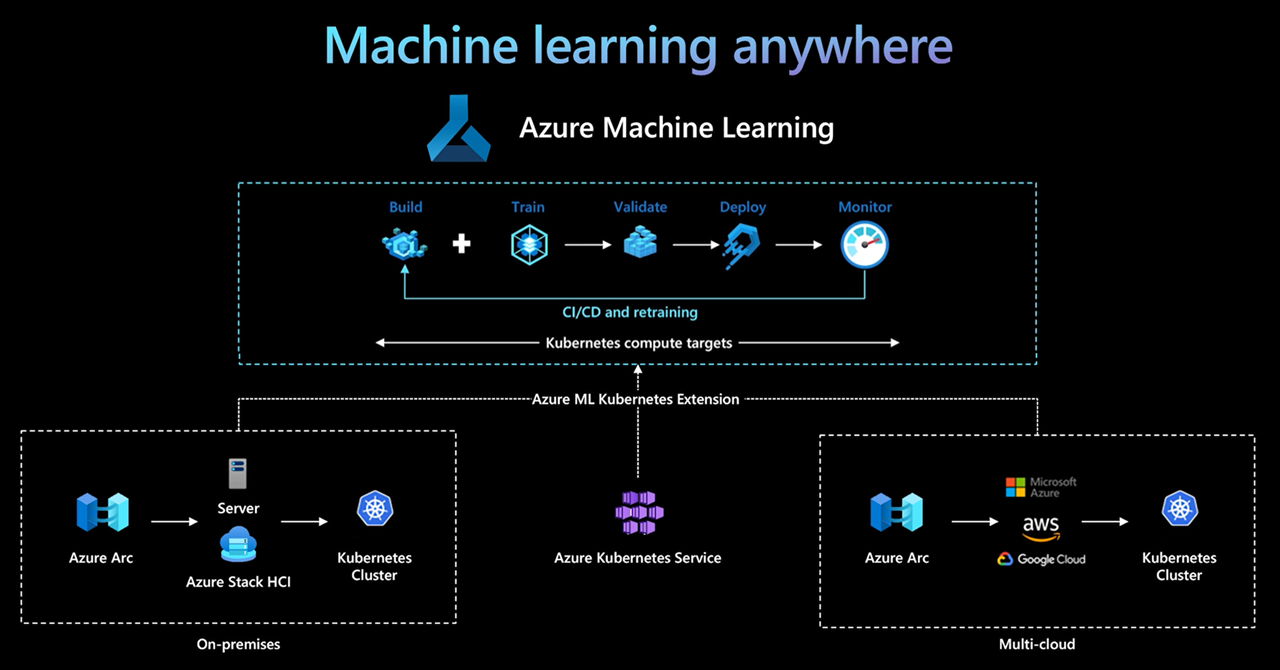

Realizing Machine Learning anywhere with Azure Kubernetes Service and Arc-enabled Machine Learning

We are thrilled to announce the general availability of Azure Machine Learning (Azure ML) Kubernetes compute, including support for seamless Azure Kubernetes Service (AKS) integration and Azure Arc-enabled Machine Learning.

With a simple cluster extension deployment on an AKS or Azure Arc-enabled Kubernetes (Arc Kubernetes) cluster, the Kubernetes cluster is seamlessly supported in Azure ML for running training or inference workloads. In addition, Azure ML service capabilities for streamlining the full ML lifecycle and automating it with MLOps become instantly available to enterprise teams of professionals. Azure ML Kubernetes compute empowers enterprises to operationalize ML at scale across different infrastructures, and it addresses different needs with the seamless experience of Azure ML CLI v2, Python SDK v2 (preview), and Studio UI. Here are some of the capabilities customers can benefit from:

- Deploy ML workloads on a customer-managed AKS cluster and gain more security and control to meet compliance requirements.

- Run Azure ML workloads on an Arc Kubernetes cluster right where the data lives, to meet data residency, security, and privacy requirements or to harness existing IT investments.

- Use Arc Kubernetes clusters to deploy ML workloads, or parts of the ML lifecycle, across multiple public clouds.

- Fully automate hybrid workloads across cloud and on-premises environments to leverage the advantages of different infrastructures and IT investments.

How it works

The IT-operations team and data-science team are both integral parts of the broader ML team. By letting the IT-operations team manage Kubernetes compute setup, Azure ML creates a seamless compute experience for the data-science team, which does not need to learn or use Kubernetes directly. The design of Azure ML Kubernetes compute also helps the IT-operations team leverage native Kubernetes concepts such as namespaces, node selectors, and resource requests/limits to optimize ML compute utilization. The data-science team can now focus on models and work with productivity tools such as Azure ML CLI v2, Python SDK v2, Studio UI, and Jupyter notebooks.

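It is easy to enable and use an existing Kubernetes cluster for Azure ML workloads with the following simple steps:

1. Prepare an AKS or Arc Kubernetes cluster.

2. Deploy the Azure ML cluster extension.

3. Attach the Kubernetes cluster to the Azure ML workspace.

4. Use the Kubernetes compute target from Azure ML CLI v2, Python SDK v2, or Studio UI to run training or inference workloads.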

IT-operations team. The IT-operations team is responsible for the first three steps above: preparing an AKS or Arc Kubernetes cluster, deploying the Azure ML cluster extension, and attaching the Kubernetes cluster to the Azure ML workspace. In addition to these essential compute setup steps, the IT-operations team also uses familiar tools such as Azure CLI or kubectl to take care of the following tasks for the data-science team:

- Network and security configuration, such as outbound proxy server connections or Azure Firewall configuration, Azure ML inference router (azureml-fe) setup, SSL/TLS termination, and no public IP with a VNet.

- Create and manage instance types for different ML workload scenarios to use compute resources efficiently.

- Troubleshoot workload issues related to the Kubernetes cluster.
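The extension-deployment step is typically a single Azure CLI call. The following is a minimal Python sketch, not an official tool: the hypothetical helper `extension_create_cmd` only assembles the `az k8s-extension create` arguments for an AKS (`managedClusters`) or Arc Kubernetes (`connectedClusters`) cluster. The extension type and config names (`Microsoft.AzureML.Kubernetes`, `enableTraining`, `enableInference`, `inferenceRouterServiceType`) are used as we understand them from the Azure ML documentation; treat them, the default extension name, and the omission of TLS settings as assumptions to check against the current docs.

```python
import shlex
from typing import List

# Azure CLI --cluster-type values for the two cluster kinds discussed in this post.
CLUSTER_TYPES = {
    "aks": "managedClusters",    # Azure Kubernetes Service
    "arc": "connectedClusters",  # Azure Arc-enabled Kubernetes
}


def extension_create_cmd(cluster_kind: str, cluster_name: str, resource_group: str,
                         enable_training: bool = True, enable_inference: bool = True,
                         extension_name: str = "azureml-extension") -> List[str]:
    """Build (but do not run) the `az k8s-extension create` call for the Azure ML extension.

    Hypothetical helper: flag and config names follow the Azure ML docs as we
    understand them and should be checked against your installed Azure CLI.
    """
    if cluster_kind not in CLUSTER_TYPES:
        raise ValueError(f"cluster_kind must be one of {sorted(CLUSTER_TYPES)}")
    config = [f"enableTraining={enable_training}", f"enableInference={enable_inference}"]
    if enable_inference:
        # How the azureml-fe inference router is exposed; LoadBalancer is one option.
        # TLS settings for inference are deliberately left out of this sketch.
        config.append("inferenceRouterServiceType=LoadBalancer")
    return [
        "az", "k8s-extension", "create",
        "--name", extension_name,
        "--extension-type", "Microsoft.AzureML.Kubernetes",
        "--config", *config,
        "--cluster-type", CLUSTER_TYPES[cluster_kind],
        "--cluster-name", cluster_name,
        "--resource-group", resource_group,
        "--scope", "cluster",
    ]


# Example: an Arc-enabled cluster that runs training jobs only (names are placeholders).
print(shlex.join(extension_create_cmd("arc", "my-arc-cluster", "my-rg", enable_inference=False)))
```

Returning the argument list instead of calling `subprocess` keeps the sketch side-effect free; an IT-operations script could pass the reviewed list to `subprocess.run`.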

Data-science team. Once the IT-operations team finishes compute setup and compute target(s) creation, the data-science team can discover the list of available compute targets and instance types in the Azure ML workspace to use for training or inference workloads. Data scientists specify the compute target name and instance type name using their preferred tools or APIs, such as Azure ML CLI v2, Python SDK v2, or Studio UI.
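On the data-science side, choosing Kubernetes compute comes down to two fields in a job definition. The sketch below assumes the Azure ML CLI v2 command-job schema, where `compute` takes an `azureml:<compute-target-name>` reference and `resources.instance_type` names an instance type. The hypothetical `command_job_spec` function builds that structure as a Python dict; the compute target, instance type, environment, and script names are placeholders.

```python
import json
from typing import Optional


def command_job_spec(command: str, code_dir: str, environment: str,
                     compute_target: str, instance_type: Optional[str] = None) -> dict:
    """Build a CLI v2 command-job definition that targets a Kubernetes compute target.

    Hypothetical helper; field names follow the command-job YAML schema as we understand it.
    """
    spec = {
        "$schema": "https://azuremlschemas.azureedge.net/latest/commandJob.schema.json",
        "command": command,
        "code": code_dir,
        "environment": environment,
        # A Kubernetes compute target is referenced like any other Azure ML compute.
        "compute": f"azureml:{compute_target}",
    }
    if instance_type:
        # Selects one of the instance types created by the IT-operations team.
        spec["resources"] = {"instance_type": instance_type}
    return spec


job = command_job_spec(
    command="python train.py",
    code_dir="./src",
    environment="azureml:my-training-env@latest",  # hypothetical environment
    compute_target="k8s-compute",                  # hypothetical compute target
    instance_type="gpu-small",                     # hypothetical instance type
)
print(json.dumps(job, indent=2))
```

The same fields would go into the YAML file passed to `az ml job create --file`, so the data scientist never has to touch `kubectl` or a Kubernetes manifest.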

Recommended best practices

Separation of responsibilities between the IT-operations team and data-science team. As we mentioned above, managing your own compute and infrastructure for ML workloads is a complicated task, and it is best done by the IT-operations team so that the data-science team can focus on ML models, which improves organizational efficiency.

Create and manage instance types for different ML workload scenarios. Each ML workload uses different amounts of compute resources such as CPU/GPU and memory. Azure ML implements an instance type as a Kubernetes custom resource definition (CRD) with nodeSelector and resource request/limit properties. With a carefully curated list of instance types, the IT-operations team can target ML workloads to specific node(s) and manage compute resource utilization efficiently.
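To make the instance-type idea concrete, the sketch below builds an `InstanceType` custom resource with a `nodeSelector` plus resource requests and limits, then applies a deliberately simplified version of the Kubernetes scheduling filter: a node qualifies only if its labels match the `nodeSelector` and its allocatable capacity covers the effective requests. The `apiVersion` (`amlarc.azureml.com/v1alpha1`) reflects the Azure ML docs as we understand them, the instance type name and node label are hypothetical, and `fits_node` ignores taints, affinity, and pods already on the node, so it illustrates the concept rather than modeling the real scheduler.

```python
import json
from typing import Dict


def parse_quantity(q: str) -> float:
    """Parse a Kubernetes quantity; this sketch handles only m, Ki, Mi, Gi and plain numbers."""
    if q.endswith("m"):
        return float(q[:-1]) / 1000  # millicores, e.g. "700m" -> 0.7
    for suffix, factor in (("Ki", 2**10), ("Mi", 2**20), ("Gi", 2**30)):
        if q.endswith(suffix):
            return float(q[: -len(suffix)]) * factor
    return float(q)


def instance_type_manifest(name: str, node_selector: Dict[str, str],
                           requests: Dict[str, str], limits: Dict[str, str]) -> dict:
    """InstanceType custom resource; verify the apiVersion against the current Azure ML docs."""
    return {
        "apiVersion": "amlarc.azureml.com/v1alpha1",
        "kind": "InstanceType",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": node_selector,
            "resources": {"requests": requests, "limits": limits},
        },
    }


def fits_node(manifest: dict, node_labels: Dict[str, str], allocatable: Dict[str, str]) -> bool:
    """Simplified filter: ignores taints, affinity and pods already running on the node."""
    spec = manifest["spec"]
    # nodeSelector: every key/value pair must be present in the node's labels.
    if any(node_labels.get(k) != v for k, v in spec["nodeSelector"].items()):
        return False
    # A limit without a matching request acts as the request (Kubernetes default
    # when no LimitRange applies), so merge limits first and let requests override.
    effective = {**spec["resources"]["limits"], **spec["resources"]["requests"]}
    return all(parse_quantity(amount) <= parse_quantity(allocatable.get(res, "0"))
               for res, amount in effective.items())


gpu_small = instance_type_manifest(
    "gpu-small",                                 # hypothetical instance type name
    node_selector={"accelerator": "nvidia-t4"},  # hypothetical node label
    requests={"cpu": "700m", "memory": "1500Mi"},
    limits={"cpu": "1", "memory": "2Gi", "nvidia.com/gpu": "1"},
)
print(json.dumps(gpu_small, indent=2))  # kubectl apply -f also accepts JSON manifests
```

In this sketch, a node labeled `accelerator=nvidia-t4` with at least 0.7 CPU, 1500 MiB of memory, and one GPU allocatable passes the filter; a node without the label or without a GPU does not.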

Multiple Azure ML workspaces share the same Kubernetes cluster. You can attach a Kubernetes cluster multiple times to the same Azure ML workspace or to different Azure ML workspaces, creating multiple compute targets in one workspace or across multiple workspaces. Since many customers organize data science projects around Azure ML workspaces, multiple data science projects can now share the same Kubernetes cluster. This significantly reduces ML infrastructure management overhead and IT costs.

Team/project workload isolation using Kubernetes namespaces. When you attach a Kubernetes cluster to an Azure ML workspace, you can specify a Kubernetes namespace for the compute target, and all workloads run by that compute target will be placed in the specified namespace.
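The two practices above combine naturally: one cluster attached several times, with each compute target pinned to its own namespace. The sketch below assembles one `az ml compute attach` call per attachment, using the `--type Kubernetes`, `--resource-id`, `--identity-type`, and `--namespace` options as we understand them from the Azure ML CLI v2 documentation. The `Attachment` dataclass, both helper functions, the assumption that all workspaces share one resource group, and the unique-namespace check are choices of this sketch, and it does not create the namespaces on the cluster.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Attachment:
    workspace: str     # Azure ML workspace that receives the compute target
    compute_name: str  # compute target name data scientists will reference
    namespace: str     # Kubernetes namespace for this target's workloads


def cluster_resource_id(subscription: str, resource_group: str, cluster: str, arc: bool) -> str:
    """ARM resource ID of an Arc-enabled (connectedClusters) or AKS (managedClusters) cluster."""
    provider = ("Microsoft.Kubernetes/connectedClusters" if arc
                else "Microsoft.ContainerService/managedClusters")
    return (f"/subscriptions/{subscription}/resourceGroups/{resource_group}"
            f"/providers/{provider}/{cluster}")


def attach_cmds(ml_resource_group: str, cluster_id: str,
                attachments: List[Attachment]) -> List[List[str]]:
    """Build one `az ml compute attach` call per (workspace, compute target, namespace).

    Assumes every workspace lives in `ml_resource_group`. Requiring distinct
    namespaces is a policy choice of this sketch, not an Azure ML rule.
    """
    namespaces = [a.namespace for a in attachments]
    if len(set(namespaces)) != len(namespaces):
        raise ValueError("give each compute target its own namespace")
    return [
        ["az", "ml", "compute", "attach",
         "--resource-group", ml_resource_group,
         "--workspace-name", a.workspace,
         "--type", "Kubernetes",
         "--name", a.compute_name,
         "--resource-id", cluster_id,
         "--identity-type", "SystemAssigned",
         "--namespace", a.namespace]
        for a in attachments
    ]


# One shared AKS cluster serving two project workspaces (all names hypothetical).
shared = cluster_resource_id("<subscription-id>", "infra-rg", "shared-aks", arc=False)
for cmd in attach_cmds("ml-rg", shared, [
    Attachment("ws-fraud", "k8s-fraud", "team-fraud"),
    Attachment("ws-vision", "k8s-vision", "team-vision"),
]):
    print(shlex.join(cmd))
```

Because every attachment points at the same `--resource-id`, the cluster is operated once while each project's workloads land in their own namespace, where the IT-operations team could additionally apply per-namespace Kubernetes quotas or RBAC.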

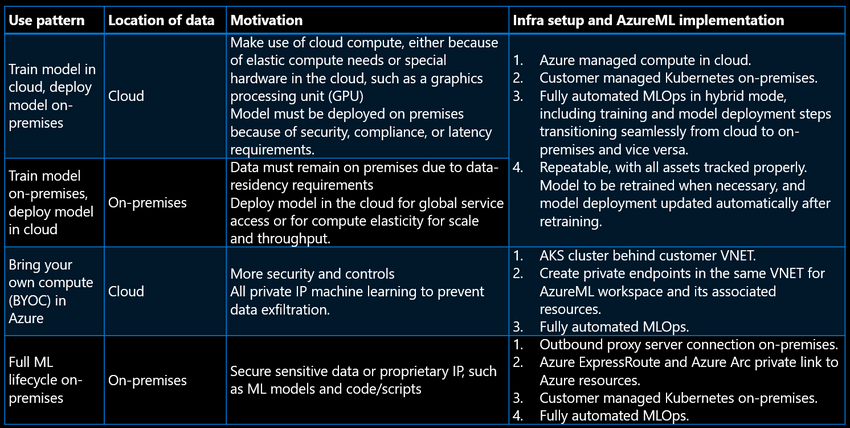

New Azure ML use patterns enabled

Azure Arc-enabled ML enables teams of ML professionals to build, train, and deploy models on any infrastructure, on-premises and across multiple clouds, using Kubernetes. This opens up a variety of new use patterns that were previously impractical in a cloud-only environment. The table below summarizes the new use patterns enabled by Azure ML Kubernetes compute, including where the training data resides in each pattern, the motivation driving it, and how it is realized using Azure ML and the infrastructure setup.

Get started today

To get started with Azure Machine Learning Kubernetes compute, please visit the Azure ML documentation and GitHub repo, where you can find detailed instructions for setting up a Kubernetes cluster for Azure Machine Learning and for training or deploying models with a variety of Azure ML examples. Lastly, visit Azure Hybrid, Multicloud, and Edge Day and watch “Real time insights from edge to cloud”, where we announced the GA.
