Infra in Azure for Developers - The How (Part 2)

We're still working our way through infrastructure for developers. I've covered the why and the what, as well as part one of the how, and now we're back with part two of creating stuff. You should work your way through part one first to get the necessary context for this part.

When we left off last time we had a network and a private container app environment on top. That could have been done by a non-programmer, but in this part your development experience might come in handy. And that is part of my motivation here - at some point in the deployment cycle developer eyes are needed to cross the finish line.

We were implementing our infra as logical layers, and that's where we will continue.

All the code can be found here: https://github.com/ahelland/infra-for-devs

Level 4

Actual application code - yay! I know, it was a lot of work to get here. There are a number of different ways to deploy code to Azure depending on which service you use. If you use Azure Static Web Apps you just point to a git repo, and once you check in your code it's all taken care of for you. If you're using Kubernetes it might involve a YAML file with the necessary specs. Old-school App Service could even work with FTP. In our case we can just continue down the Bicep path we are already on.

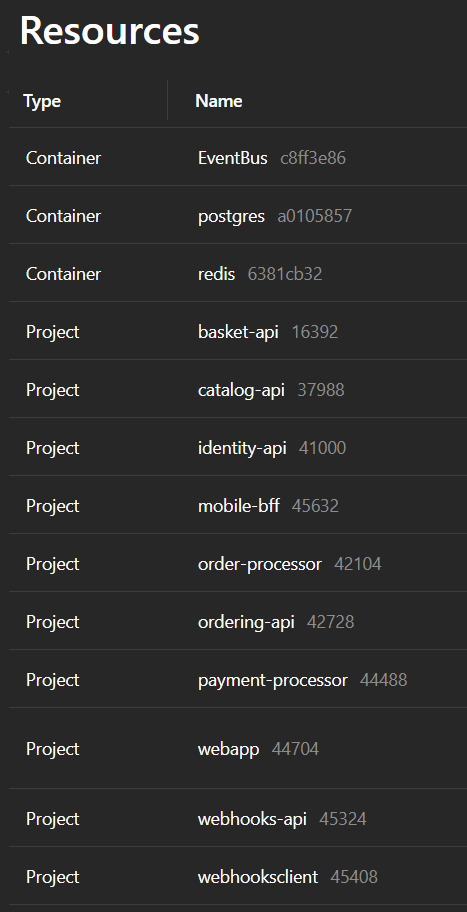

Let's remind ourselves of the components listed on our Aspire dashboard:

Three of our services are container images pulled from Docker Hub, whereas the rest will be hosted in the container registry we created. For simplicity we will create separate Bicep modules for the two. You could create one module for both, but then you would need some extra conditionals inside to handle the fact that Docker Hub allows anonymous access and our ACR does not, so for learning purposes we're keeping them separate.

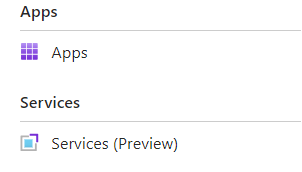

Let's start with the Docker Hub containers. Container Apps doesn't care where the images come from; as long as the binaries are packaged in the right format, it treats third-party code the same as your first-party code. There is, however, a relatively new feature that makes some common third-party products easier to use: "Services" (below the regular "Apps"):

There's a list to choose from here. I must admit I'm not familiar with all of them, but both Redis and Postgres are on the list. We can find these in Program.cs in the Aspire code as well:

This annotation means that both Redis and RabbitMQ use the latest official images. Postgres, however, uses a separate image that embeds vector search capabilities. The Service feature in Container Apps doesn't let you specify the image, which is part of what makes Services so easy to use, but unfortunately that rules out the PostgreSQL service for us. (Yes, I tried, and without the vector support you get errors at runtime.) RabbitMQ is absent from the list, so Redis is the only one we can deploy this way. I wrapped the actual implementation in a Bicep module, but basically this is all the code required to deploy Redis this way:
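For reference, here is a minimal sketch of what a Redis Service definition can look like. It assumes the preview API version 2023-04-01-preview (Services were in preview when I worked on this). It also assumes an existing Container Apps environment passed in by resource ID. The parameter and output names are mine, not necessarily the ones used in the repo.

```bicep
// Sketch: Redis as a Container Apps "Service" (a preview feature at the time).
// Assumes an existing Container Apps environment; parameter names are illustrative.
param location string = resourceGroup().location
param environmentId string
param redisName string = 'redis'

resource redis 'Microsoft.App/containerApps@2023-04-01-preview' = {
  name: redisName
  location: location
  properties: {
    environmentId: environmentId
    configuration: {
      // No image to choose here: the service type decides what runs.
      service: {
        type: 'redis'
      }
    }
  }
}

// Apps consume the service through a service bind referencing this ID.
output redisServiceId string = redis.id
```

An app that wants to use it adds an entry with this ID under template.serviceBinds.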

The RabbitMQ and PostgreSQL images are similar in that both are pulled from Docker Hub, so the core of a module for them looks like this:

And as an example instantiating RabbitMQ looks like this:
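To illustrate, here is a hedged sketch of a RabbitMQ app pulled straight from Docker Hub. It rests on a few assumptions:

- the official rabbitmq:3-management image;
- API version 2023-05-01;
- a VNet-integrated environment, which ours is (internal TCP ingress requires one);
- a user name I made up.

The official image only lets the default guest user connect from localhost. The sketch therefore sets RABBITMQ_DEFAULT_USER and RABBITMQ_DEFAULT_PASS so the other apps can authenticate.

```bicep
// Sketch: RabbitMQ pulled anonymously from Docker Hub into Container Apps.
// No registries/identity block is needed since Docker Hub allows anonymous pulls.
param location string = resourceGroup().location
param environmentId string
param appName string = 'eventbus'
param image string = 'docker.io/library/rabbitmq:3-management'
@secure()
param rabbitPassword string

resource rabbitmq 'Microsoft.App/containerApps@2023-05-01' = {
  name: appName
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      secrets: [
        {
          name: 'rabbitmq-password'
          value: rabbitPassword
        }
      ]
      // AMQP is not HTTP, so expose it as internal TCP ingress on the standard port.
      ingress: {
        external: false
        transport: 'tcp'
        targetPort: 5672
        exposedPort: 5672
      }
    }
    template: {
      containers: [
        {
          name: appName
          image: image
          env: [
            {
              name: 'RABBITMQ_DEFAULT_USER'
              value: 'eshop' // hypothetical user name
            }
            {
              name: 'RABBITMQ_DEFAULT_PASS'
              secretRef: 'rabbitmq-password'
            }
          ]
          resources: {
            cpu: json('0.5')
            memory: '1Gi'
          }
        }
      ]
      // A single replica keeps a dev broker simple.
      scale: {
        minReplicas: 1
        maxReplicas: 1
      }
    }
  }
}
```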

Hold on a moment. There might not be a native RabbitMQ offering in Azure, but surely both Redis and PostgreSQL are available - why would I use these instead? The reason is simplicity during the development phase. When you fire up your code locally to test and debug, you don't care about high availability for the database, and you don't care if the cache can't handle millions of items. There isn't an easy way to do geo-replication of the Postgres container, and I'm pretty sure there will be hiccups if you hammer the Redis cache with enough traffic as well. These are great for development purposes within the Container Environment, but if you want to move to production you should look into the full offerings and configure them to your liking.

I should also note that while I had no issues creating a Redis service, for some reason the Basket API had issues connecting to it. (It threw an unhelpful stack trace.) Deploying Redis as a "regular" container app seemed to fix this. This means that while a module for services is included, we don't actually use it. (This is not a deal-breaker for our scenario, but as a side note, the Azure Developer CLI attempts to use Services when deploying Aspire-based solutions.)

There are some low-level details to sort out before the actual deployment, so hold off on pressing the roll-out button. We will get back to that after briefly looking at the actual eShop microservices.

Packaging microservices

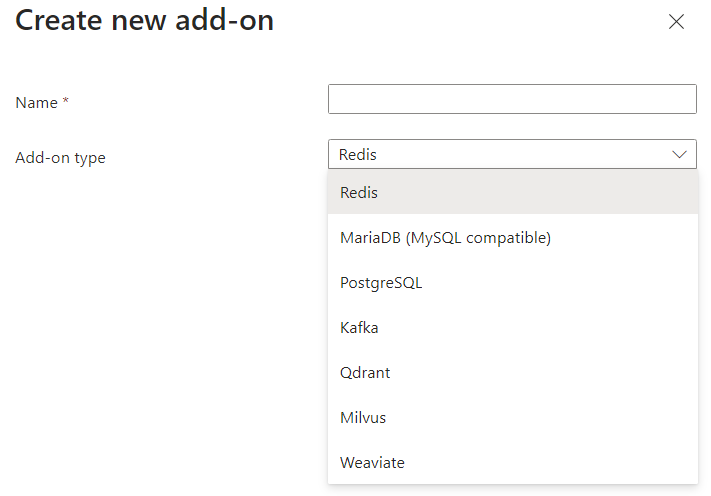

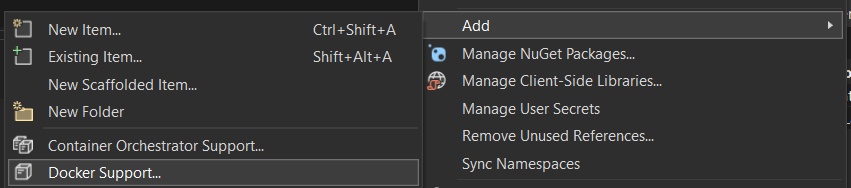

We can't avoid containerizing our microservices since we use Azure Container Apps. There's nothing preventing us from pushing the images to Docker Hub as well and consuming them from there. (Since eShop is open source there's nothing to hide.) Let's pretend it's our company's internal app and that it would be better served by the Azure Container Registry we created in the previous part. How do we get the images into the registry, you ask? Ah, minor details. The quickest thing to do is to fire up Visual Studio and do the process from there. First add a Dockerfile:

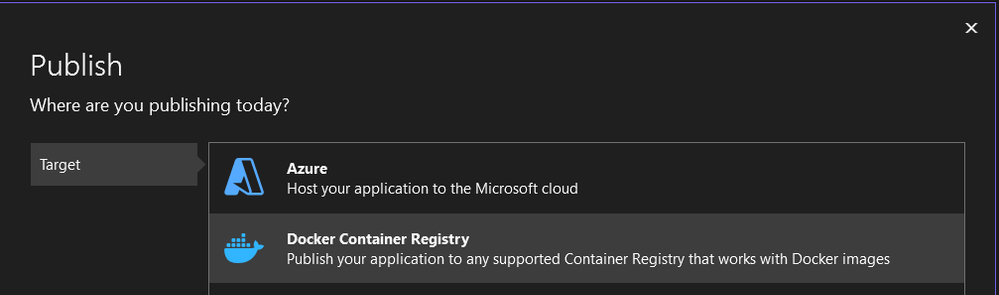

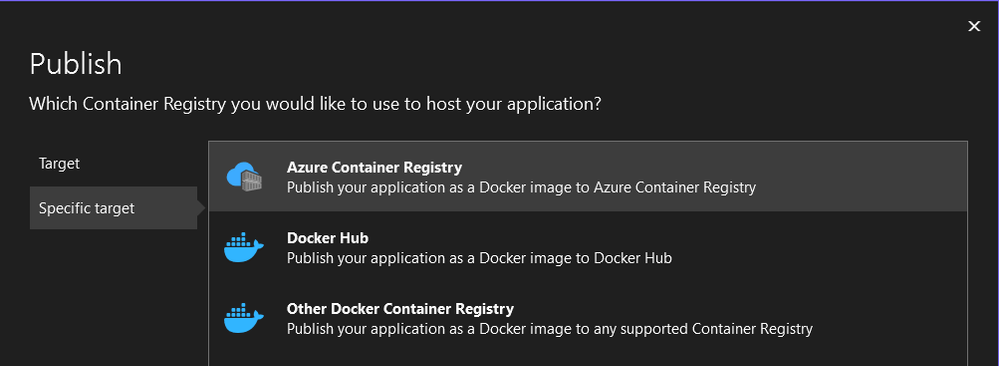

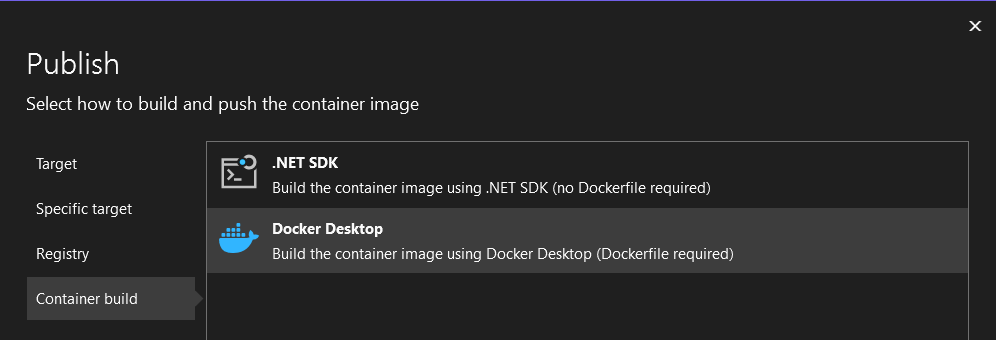

Then go through the process of publishing to ACR:

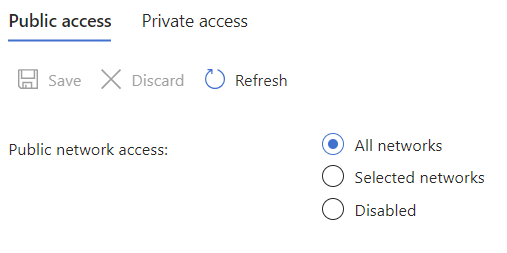

You might hit a snag here: we locked down our ACR so it's only accessible from our private vnet, and unless you happen to be on the same network you will not be able to upload these images. This is the control plane vs data plane split hitting us. We are able to deploy our resources without being on the same network because those requests go through the ARM API, which by nature is public but governed by control plane RBAC. (No permissions on a subscription equals no creation of resources.) When we push images into the registry, though, the traffic goes to the registry's own endpoints, which we have said should be private. Private resources also require data plane permissions, but the network restriction kicks in first. The solution here is to either log in to the Dev Box if you created one, or as a quick fix do some click-ops and enable public access. (Which you can disable after uploading the images.)
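If you'd rather do the temporary opening in Bicep than through click-ops, a sketch could look like the one below. It assumes a Premium registry (private endpoints require that SKU) and API version 2023-07-01. Properties left out of a redeployment can end up reset to their defaults, so in practice this definition should mirror whatever your registry module already sets.

```bicep
// Sketch: toggle public network access on the registry while pushing images.
// Deploy once with 'Enabled', push the images, then redeploy with 'Disabled'.
param location string = resourceGroup().location
param acrName string

@allowed([
  'Enabled'
  'Disabled'
])
param publicNetworkAccess string = 'Disabled'

resource acr 'Microsoft.ContainerRegistry/registries@2023-07-01' = {
  name: acrName
  location: location
  sku: {
    name: 'Premium'
  }
  properties: {
    adminUserEnabled: false
    // This only opens the network path; pushing still requires
    // a data plane role such as AcrPush on the registry.
    publicNetworkAccess: publicNetworkAccess
  }
}

output loginServer string = acr.properties.loginServer
```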

This is a blocker if you want to do the push outside of Visual Studio too. There is the az acr build command, but behind the scenes it uploads the files to a build container that is not connected to the vnet (regardless of which network your computer is on). Working around that is slightly more complicated, so I'm skipping the details of this one.

Environment variables

This was one of the trickier things to figure out - most code needs some environment variables to get going and the eShop services are no different. Part of the reason you want to use Aspire is that it takes care of this for you, but we are not using Aspire so we have to hack things manually. If you inspect Program.cs in the eShop.AppHost project you will find things like:

Both .WithEnvironment and .WithReference inject variables. To figure out what the values are, we can look up the Aspire manifest format here:

https://learn.microsoft.com/en-us/dotnet/aspire/deployment/manifest-format

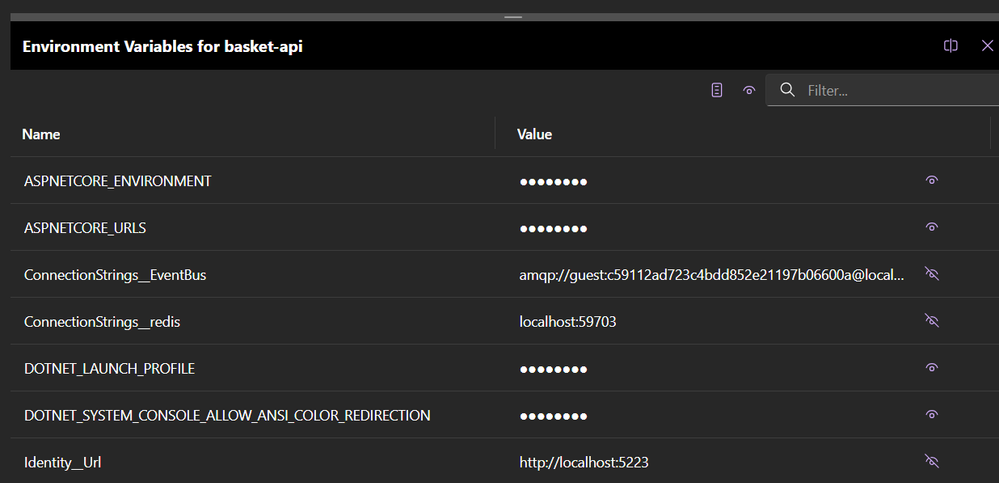

And we can inspect the values in the Aspire dashboard as well:

Now, normally appsettings.json would be a good place to check as well, but it looks like Aspire does some extra magic, so not all variables are in the file. (This is a totally valid pattern in .NET - sometimes you don't want to hardwire things and want them more dynamic.)

Coming in blind to the code base, it took a little detective work, looking in various places and digging into how Aspire works with dependency injection.
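Once you've found the values, one way to keep them manageable is to store them under the names Aspire uses. You can then convert them to the env array a container app expects. .NET configuration treats a double underscore in an environment variable name as the section separator, so ConnectionStrings__redis shows up in code as ConnectionStrings:redis. The keys and values below are illustrative placeholders, not verified eShop settings.

```bicep
// Sketch: turn an Aspire-style settings dictionary into a Container Apps env array.
// Keys use '__' because .NET configuration maps it to ':' (e.g. ConnectionStrings:redis).
// All values are placeholders for illustration.
param settings object = {
  ConnectionStrings__redis: 'redis:6379'
  ConnectionStrings__eventbus: 'amqp://user:password@eventbus:5672'
  Identity__Url: 'https://identity-api.example.internal'
}

// items() yields { key, value } pairs, which map directly onto { name, value }.
output containerEnv array = [for setting in items(settings): {
  name: setting.key
  value: setting.value
}]
```

Anything carrying a password belongs in a Container Apps secret referenced with secretRef rather than in a plain value like these.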

Container App Module

This brings us to building a module for container apps that pull their image from Azure Container Registry:

Note that we add an identity and specify that it is to be used with the ACR. We can also see a reference to a Redis service bind for the apps that need it; that is the Redis service we referred to a few paragraphs up. We only support one bind here because Redis was the only service we identified as a candidate; we could have reworked the Bicep to handle multiple bindings. The binding takes care of network plumbing and the like between apps and services.
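To make the identity part concrete, here is a sketch of a module along those lines. It rests on a few assumptions:

- a user-assigned identity per app;
- the built-in AcrPull role (ID 7f951dfd-4ed3-4e0d-aef8-7a4cec8b8e3e) assigned on the registry;
- the preview API version 2023-04-01-preview, since serviceBinds was a preview property.

The app explicitly depends on the role assignment so that it is at least created before the first pull. Propagation can still lag a little.

```bicep
// Sketch: a container app whose image lives in a private ACR, pulled with a
// user-assigned managed identity. Names and defaults are illustrative.
param location string = resourceGroup().location
param environmentId string
param appName string
param acrName string
@description('Repository and tag inside the registry, e.g. basket-api:latest (hypothetical).')
param imageName string
@description('Resource ID of a Redis service; leave empty for apps that do not need it.')
param redisServiceId string = ''

resource acr 'Microsoft.ContainerRegistry/registries@2023-07-01' existing = {
  name: acrName
}

resource pullIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2023-01-31' = {
  name: '${appName}-id'
  location: location
}

// Built-in AcrPull role: the identity can pull, but not push, images.
var acrPullRoleId = '7f951dfd-4ed3-4e0d-aef8-7a4cec8b8e3e'

resource acrPull 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
  name: guid(acr.id, pullIdentity.id, acrPullRoleId)
  scope: acr
  properties: {
    roleDefinitionId: subscriptionResourceId('Microsoft.Authorization/roleDefinitions', acrPullRoleId)
    principalId: pullIdentity.properties.principalId
    principalType: 'ServicePrincipal'
  }
}

resource app 'Microsoft.App/containerApps@2023-04-01-preview' = {
  name: appName
  location: location
  identity: {
    type: 'UserAssigned'
    userAssignedIdentities: {
      '${pullIdentity.id}': {}
    }
  }
  properties: {
    environmentId: environmentId
    configuration: {
      // Authenticate to the registry with the identity above instead of admin credentials.
      registries: [
        {
          server: acr.properties.loginServer
          identity: pullIdentity.id
        }
      ]
    }
    template: {
      containers: [
        {
          name: appName
          image: '${acr.properties.loginServer}/${imageName}'
        }
      ]
      // At most one bind, since Redis was the only candidate service.
      serviceBinds: empty(redisServiceId) ? null : [
        {
          serviceId: redisServiceId
          name: 'redis'
        }
      ]
    }
  }
  dependsOn: [
    acrPull
  ]
}
```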

Ingress

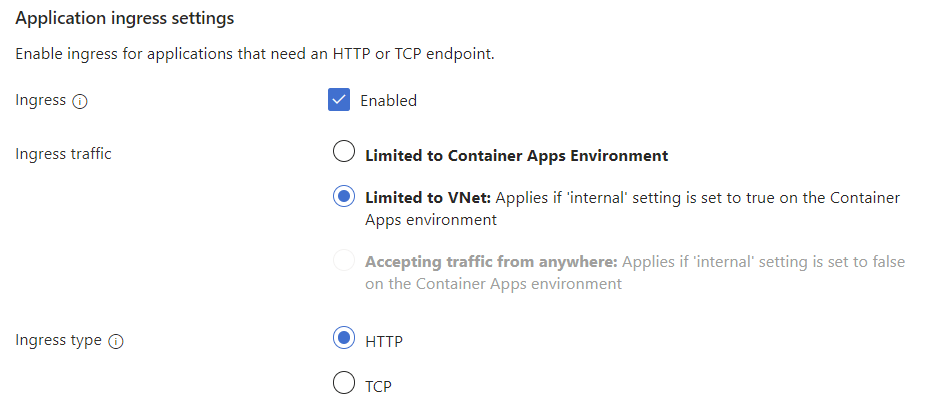

Networking is a good cue to talk about ingress. We have an element in the Bicep code called "ingress" with a few parameters attached to it. If you create a container app in the Azure Portal you will see a couple of options appear when you enable ingress:

If you have something like a cron job that wakes up every 24 hours and cleans up old database records, that would be an app that doesn't require ingress - it isn't supposed to react to incoming traffic.

A web app on the other hand would need an ingress because the whole purpose is that it will expose an interface for receiving inbound traffic.

You can, however, get more granular with ingress. The web app needs external ingress because it will receive traffic originating from outside the cluster (like my web browser), but other microservices may only need internal exposure. A classic problem with multiple microservices is how they should reach each other: by DNS name or by IP. IP addresses are fickle since they can easily change, and proper DNS registration often involves other systems on-premises. Both plain Kubernetes and Azure Container Apps make service discovery easy. This means that I can hit a frontend in my browser, and it makes calls to http://backend without worrying about the IP address.
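A small sketch of the two ingress flavours side by side. It assumes API version 2023-05-01, port 8080 (the default for .NET 8 container images) and the hypothetical app names backend and frontend. The frontend reaches the backend by app name, which is the built-in service discovery in Container Apps. BACKEND_URL is a made-up setting name.

```bicep
// Sketch: an internal backend and an external frontend in the same environment.
// App names, images and port 8080 are assumptions.
param location string = resourceGroup().location
param environmentId string
param backendImage string
param frontendImage string

resource backend 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'backend'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      // Internal ingress: reachable only from apps inside the environment.
      ingress: {
        external: false
        targetPort: 8080
      }
    }
    template: {
      containers: [
        {
          name: 'backend'
          image: backendImage
        }
      ]
    }
  }
}

resource frontend 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'frontend'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      // External ingress: reachable from outside the environment
      // (for an internal, vnet-only environment that still means from within the vnet).
      ingress: {
        external: true
        targetPort: 8080
      }
    }
    template: {
      containers: [
        {
          name: 'frontend'
          image: frontendImage
          env: [
            {
              // Service discovery: the app name doubles as the host name.
              name: 'BACKEND_URL' // hypothetical setting name
              value: 'http://${backend.name}'
            }
          ]
        }
      ]
    }
  }
}

output frontendFqdn string = frontend.properties.configuration.ingress.fqdn
```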

Unfortunately, it is mostly a guessing game for us how eShop works in this regard. The architecture diagram does show the logical flows, but not the network requirements.

Note that if we want external ingress (like accessing from the Dev Box) we need to add a DNS record for the Container App. This is not because the app doesn't like IP addresses, but because there is a load balancer in front exposing a shared IP for all container apps, and it uses the host name header to direct calls to the right app on the other side. (You can use mechanisms like editing the hosts file on your computer, but if you're using Dev Box you already have the DNS resolver in place, so you don't need that extra step.)
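A sketch of such a record. It assumes a private DNS zone named after the environment's default domain already exists, is linked to the vnet and is passed in by name. Every app record points at the environment's static IP, and the load balancer uses the host name to pick the app.

```bicep
// Sketch: A record so clients in the vnet can resolve a container app's FQDN.
// Assumes the private DNS zone (named after the environment's default domain) exists.
param environmentName string
param privateDnsZoneName string
param appName string
@description('Internal-ingress apps get ".internal" after the app name in their FQDN.')
param isInternal bool = false

resource env 'Microsoft.App/managedEnvironments@2023-05-01' existing = {
  name: environmentName
}

resource zone 'Microsoft.Network/privateDnsZones@2020-06-01' existing = {
  name: privateDnsZoneName
}

resource record 'Microsoft.Network/privateDnsZones/A@2020-06-01' = {
  parent: zone
  name: isInternal ? '${appName}.internal' : appName
  properties: {
    ttl: 3600
    aRecords: [
      {
        // All apps share the environment's load balancer IP; the Host header selects the app.
        ipv4Address: env.properties.staticIp
      }
    ]
  }
}
```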

Database initialization

We are almost ready for deployment, but since we covered environment variables let's revisit Postgres. If you use the "Service" version it comes with a few defaults. We didn't use that, so we will add a few variables: basically a not-too-secure password and the settings needed to bootstrap the instance.

Aspire did a few extra things for us though:

It added databases. The default Docker image isn't clever enough to pre-provision these for us. There are different ways to approach this. We could create a SQL file embedded through a Dockerfile that takes care of this, but we are not using Dockerfiles for the third-party containers. (And we don't use Dockerfiles during the deployment phase anyway.) We could create a script that is passed as a command line argument by Bicep during creation, in which case we need somewhere to put that script.
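Here is a sketch of the command line option, with the script passed inline so there's no separate place to store it. It relies on behaviour of the official postgres image, which the pgvector images build on: its entrypoint, docker-entrypoint.sh, runs any .sql file in /docker-entrypoint-initdb.d when it initializes an empty data directory. The image tag and user name are assumptions. So are the database names, which are the ones I believe eShop's AppHost registers; check them against your copy.

```bicep
// Sketch: PostgreSQL (pgvector build) from Docker Hub that creates the databases on first start.
param location string = resourceGroup().location
param environmentId string
param image string = 'docker.io/pgvector/pgvector:pg16' // assumed pgvector-enabled tag
@secure()
param postgresPassword string
param databases array = [
  'catalogdb'
  'identitydb'
  'orderingdb'
  'webhooksdb'
]

// Produces "CREATE DATABASE catalogdb; CREATE DATABASE identitydb; ..."
var createDatabasesSql = join(map(databases, db => 'CREATE DATABASE ${db};'), ' ')

// Write the init script, then hand over to the image's normal entrypoint.
var startup = 'echo "${createDatabasesSql}" > /docker-entrypoint-initdb.d/create-databases.sql && exec docker-entrypoint.sh postgres'

resource postgres 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'postgres'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      secrets: [
        {
          name: 'postgres-password'
          value: postgresPassword
        }
      ]
      ingress: {
        external: false
        transport: 'tcp'
        targetPort: 5432
        exposedPort: 5432
      }
    }
    template: {
      containers: [
        {
          name: 'postgres'
          image: image
          // command replaces the image ENTRYPOINT, args replaces CMD.
          command: [
            '/bin/sh'
            '-c'
          ]
          args: [
            startup
          ]
          env: [
            {
              name: 'POSTGRES_USER'
              value: 'postgres'
            }
            {
              name: 'POSTGRES_PASSWORD'
              secretRef: 'postgres-password'
            }
          ]
          resources: {
            cpu: json('1.0')
            memory: '2Gi'
          }
        }
      ]
      scale: {
        minReplicas: 1
        maxReplicas: 1
      }
    }
  }
}
```

Without a mounted volume the data directory is ephemeral, so the script runs again whenever a replica starts from an empty data directory. That's acceptable for a dev setup, and EF still handles migrations and seeding inside the databases.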

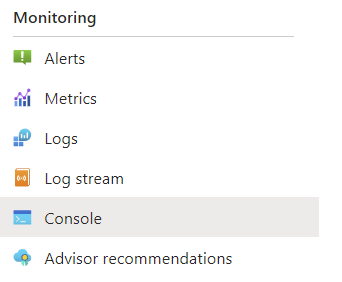

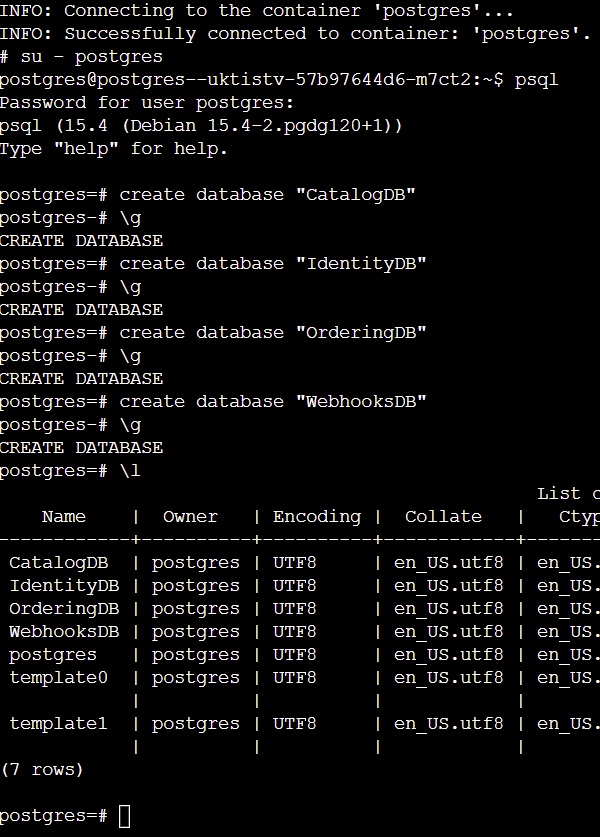

The microservices use Entity Framework, so they should take care of the actual seeding of the databases. EF will create the databases too if the timing is right. By that I mean that if the PostgreSQL container gets created first, and the containers "owning" the databases come in second before you start querying, it should work. I saw mixed results though, so in case you need to create the databases manually you can use a little backdoor into the container through the console in the Azure Portal. (Seeding still needs to be done by EF unless you create scripts for that yourself.)

The commands used:

I know - we could line them all up and execute them at the last line, but I went with \g to execute the statement for each database. The password you get prompted for is the one you set as an environment variable. I realize this is a bit of a preemptive strike as we haven't deployed Postgres yet, but I'm setting expectations for what you may need to do after running the deployment.

Assembling our main.bicep

Our Level 4 is easy in the sense that it's the same resource type repeated a number of times. If it were just a matter of pushing the images to the container registry and duplicating a few lines of Bicep - what bliss that would be. A spoiler: there was more to it, and I was not able to fix everything. We will still go through it all.

We already covered PostgreSQL, RabbitMQ and Redis so we'll skip those. If you refer to the complete file you will also notice DNS records being created, but they aren't interesting to explain here.

Basket API:

We've wired things up with references to the Identity API, Redis and RabbitMQ (called eventBus) through environment variables. The transport is set to http2 because the API uses gRPC.
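A sketch of how the Basket API wiring could look. It assumes the app name basket-api, API version 2023-05-01 and port 8080 (the default for .NET 8 container images). It also assumes the Aspire-style variable names ConnectionStrings__redis, ConnectionStrings__eventbus and Identity__Url. The exact names eShop expects live in its AppHost and appsettings, so verify them. Registry and identity settings are left out to keep the focus on ingress and environment.

```bicep
// Sketch: Basket API ingress and environment. Registry/identity wiring omitted for brevity.
// Variable names are assumed Aspire-style names; verify against eShop.
param location string = resourceGroup().location
param environmentId string
param image string
param identityUrl string
@secure()
param eventBusConnectionString string

resource basketApi 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'basket-api'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      secrets: [
        {
          name: 'eventbus-connection'
          value: eventBusConnectionString
        }
      ]
      ingress: {
        external: false
        targetPort: 8080
        // gRPC runs over HTTP/2, so the ingress must speak it to the container.
        transport: 'http2'
      }
    }
    template: {
      containers: [
        {
          name: 'basket-api'
          image: image
          env: [
            {
              name: 'ConnectionStrings__redis'
              value: 'redis:6379'
            }
            {
              name: 'ConnectionStrings__eventbus'
              secretRef: 'eventbus-connection'
            }
            {
              name: 'Identity__Url'
              value: identityUrl
            }
          ]
        }
      ]
    }
  }
}
```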

Catalog API:

RabbitMQ here as well, and we connect to the PostgreSQL database simply by using the short name "postgres" for name resolution. For demonstration purposes I injected the connection string in a different way, as explained here:
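I won't guess which variant the repo uses. One way to keep the password out of a plain environment variable is to put the whole Npgsql-style connection string in a Container Apps secret and reference it with secretRef. Host=postgres relies on the same short-name resolution. The database name catalogdb, the user postgres, the app name and API version 2023-05-01 are assumptions.

```bicep
// Sketch: Catalog API with its database connection string stored as a secret.
param location string = resourceGroup().location
param environmentId string
param image string
@secure()
param postgresPassword string

resource catalogApi 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'catalog-api'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      secrets: [
        {
          name: 'catalogdb-connection'
          // "postgres" resolves to the PostgreSQL container app inside the environment.
          value: 'Host=postgres;Port=5432;Database=catalogdb;Username=postgres;Password=${postgresPassword}'
        }
      ]
      ingress: {
        external: false
        targetPort: 8080
      }
    }
    template: {
      containers: [
        {
          name: 'catalog-api'
          image: image
          env: [
            {
              // Surfaces in .NET configuration as ConnectionStrings:catalogdb.
              name: 'ConnectionStrings__catalogdb'
              secretRef: 'catalogdb-connection'
            }
          ]
        }
      ]
    }
  }
}
```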

Identity API:

The Identity API is something of a hub since other APIs depend on it. The Identity API must have external ingress enabled for you to be able to log in - your browser needs a line of sight to the Identity Provider (Duende IdentityServer here, but that applies to all IdPs). We're referencing the full DNS names of the other APIs here, implying they also have external ingress enabled. The exception is the Basket API, which is internal, but that just means adding .internal after the app name in the FQDN. Having the other APIs external is more a matter of convenience, since it allows us to hit the Swagger endpoints if we want to use the APIs without the web app.
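A sketch of the FQDN wiring, assuming API version 2023-05-01 and hypothetical app names. The variable names BasketApiClient, OrderingApiClient and WebAppClient are how I understand eShop's AppHost names these settings. Treat them as assumptions and compare with your copy. The environment's defaultDomain property supplies the shared part of every FQDN.

```bicep
// Sketch: Identity API with external ingress, pointing at other apps by FQDN.
// App names and variable names are assumptions; compare with eShop's AppHost.
param location string = resourceGroup().location
param environmentName string
param image string

resource env 'Microsoft.App/managedEnvironments@2023-05-01' existing = {
  name: environmentName
}

// Shared domain suffix for all apps in the environment.
var domain = env.properties.defaultDomain

resource identityApi 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'identity-api'
  location: location
  properties: {
    managedEnvironmentId: env.id
    configuration: {
      // The browser must reach the IdP directly, so ingress is external.
      ingress: {
        external: true
        targetPort: 8080
      }
    }
    template: {
      containers: [
        {
          name: 'identity-api'
          image: image
          env: [
            {
              // Internal ingress: ".internal" goes right after the app name.
              name: 'BasketApiClient'
              value: 'https://basket-api.internal.${domain}'
            }
            {
              name: 'OrderingApiClient'
              value: 'https://ordering-api.${domain}'
            }
            {
              name: 'WebAppClient'
              value: 'https://webapp.${domain}'
            }
          ]
        }
      ]
    }
  }
}
```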

Order API:

References to the Identity API, RabbitMQ and PostgreSQL.

Order Processor:

Supporting service for ordering.

Payment Processor:

To process payments when you check out your order.

Web App:

The Web App needs to use various supporting APIs and has references to these. I added ASPNETCORE_ENVIRONMENT for debugging purposes. ASPNETCORE_FORWARDEDHEADERS_ENABLED was needed for requests to make it through the load balancer correctly.
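A sketch of the web app's settings, assuming API version 2023-05-01, a hypothetical app name and port 8080. ASPNETCORE_FORWARDEDHEADERS_ENABLED is a standard ASP.NET Core switch that turns on the forwarded headers middleware. The app then reads the original scheme and client address from X-Forwarded-Proto and X-Forwarded-For instead of seeing those of the proxy in front of it. IdentityUrl is an assumed variable name.

```bicep
// Sketch: web app with the two ASP.NET Core settings described in the text.
// App name, port and the IdentityUrl variable name are assumptions.
param location string = resourceGroup().location
param environmentId string
param image string
param identityUrl string

resource webApp 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'webapp'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      ingress: {
        external: true
        targetPort: 8080
      }
    }
    template: {
      containers: [
        {
          name: 'webapp'
          image: image
          env: [
            {
              // More verbose errors while debugging; remove for anything shared.
              name: 'ASPNETCORE_ENVIRONMENT'
              value: 'Development'
            }
            {
              // Honour X-Forwarded-For/X-Forwarded-Proto set by the ingress proxy.
              name: 'ASPNETCORE_FORWARDEDHEADERS_ENABLED'
              value: 'true'
            }
            {
              name: 'IdentityUrl'
              value: identityUrl
            }
          ]
        }
      ]
    }
  }
}
```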

The web app caused me the most grief. It has dependencies on everything else, and it is of course the UI of eShop, so incorrect settings elsewhere surface here. I wouldn't go so far as to say monoliths are easier, but they have fewer cross-cutting concerns, so this is something to be aware of when dealing with a container environment full of worker services fronted by a pretty interface.

Webhooks API:

The Webhooks API is for subscribing to webhooks.

Webhook client:

Supporting client for webhooks.

Mobile BFF:

For connecting from mobile apps. Since we deployed on a private virtual network I didn't test any of this; it's included more for completeness' sake.

All in all it doesn't look too bad. But I did say I didn't get it working like this. Don't mistake me for one who doesn't try :)

Debugging and code level changes

During initial testing, go into the Azure Portal, open "Log Stream" for each individual app, and watch the output for errors.

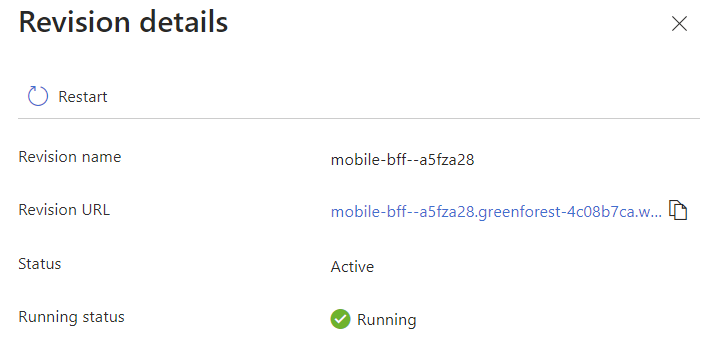

Some are easy to fix. For instance, if one of the apps relying on RabbitMQ starts before the eventBus app is ready, it will not be able to connect. It should sort itself out given some time, but if not you can jumpstart things by restarting the revision:

Other errors…

The Identity API has an issue with encryption keys as well as serving up plain http in the wrong places.

- The encryption key issue is fixed by deleting the /keys subfolder before building the container image. (Republish to ACR if you already pushed it.) You should be able to exclude it with a .dockerignore entry as well, but for unknown reasons this didn't work for me.

- Forcing https can be done by adding a few lines to Program.cs:

These fall more into the workaround category than the proper-fix category, but that's what we'll use here.

For some reason, Visual Studio generated the OrderProcessor Dockerfile with an incorrect base image. Change the Dockerfile to look like the others:

Web App needs the same https fix as the Identity API in Program.cs.

The Catalog API exposes an API for getting all items:

This throws HTTP 500 (tested via Swagger to rule out the Web App), but returns working JSON after changing it to the following:

There are a number of API variants exposed, but I can't shake the feeling I'm not fixing the root cause, so it's something to be aware of.

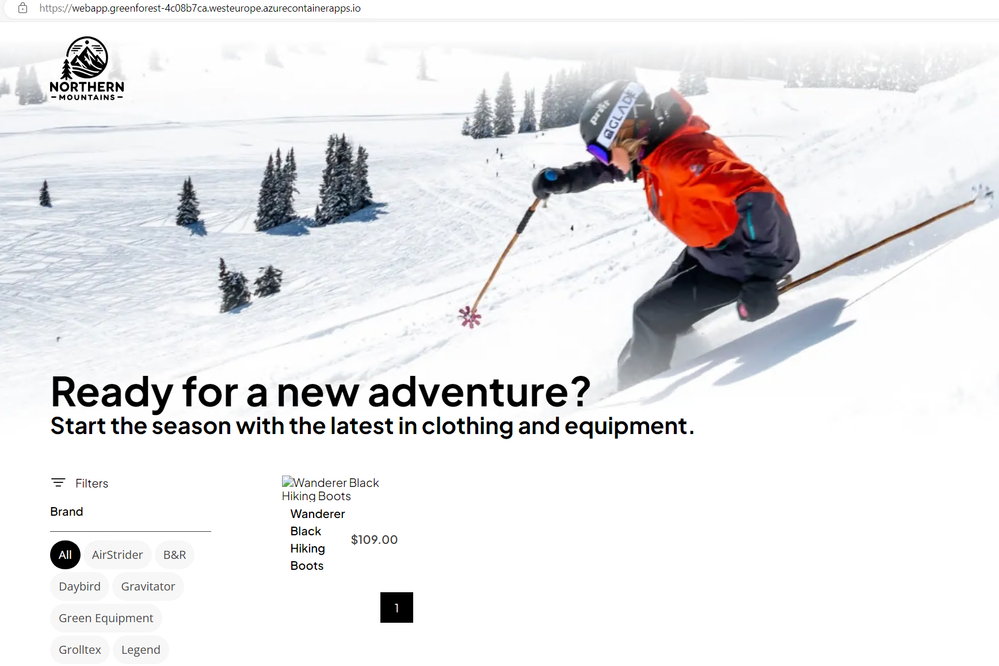

Related to the Catalog, the web app misbehaves and throws an error saying it's not able to parse the JSON, so I implemented the bluntest workaround I could find and hardwired a single item, skipping the API call:

You should be able to login, you should be able to check your orders and cart (both empty) and you should be able to see the main page:

The database story felt a little suboptimal as well. Sure, Entity Framework would mostly figure out the migration status and seeding, but it doesn't seem to be a sure thing. Whether those are technically bugs, or whether it would have worked better using PostgreSQL as a service (either the built-in offering in Container Apps or the proper Azure service), I do not know. For a stable environment I would probably look into a better way of doing this, possibly splitting the deployment in two, where PostgreSQL, RabbitMQ and Redis are spun up first along with a SQL script before the microservices are deployed. (Then again - would I use PostgreSQL and RabbitMQ, or Cosmos/SQL and Service Bus?)
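Short of splitting into two deployments, a smaller step in that direction is making the ordering explicit in main.bicep. Here is a sketch with hypothetical names and placeholder images. With dependsOn, ARM creates the PostgreSQL app before the API. However, ARM only waits for provisioning to finish, not for the database inside the container to accept connections, so this narrows the timing window rather than closing it.

```bicep
// Sketch: create the database app before an API that needs it.
// Names and images are placeholders; ingress on the API is omitted for brevity.
param location string = resourceGroup().location
param environmentId string
param postgresImage string
param orderingImage string
@secure()
param postgresPassword string

resource postgres 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'postgres'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    configuration: {
      secrets: [
        {
          name: 'postgres-password'
          value: postgresPassword
        }
      ]
      ingress: {
        external: false
        transport: 'tcp'
        targetPort: 5432
        exposedPort: 5432
      }
    }
    template: {
      containers: [
        {
          name: 'postgres'
          image: postgresImage
          env: [
            {
              name: 'POSTGRES_PASSWORD'
              secretRef: 'postgres-password'
            }
          ]
        }
      ]
    }
  }
}

resource orderingApi 'Microsoft.App/containerApps@2023-05-01' = {
  name: 'ordering-api'
  location: location
  properties: {
    managedEnvironmentId: environmentId
    template: {
      containers: [
        {
          name: 'ordering-api'
          image: orderingImage
        }
      ]
    }
  }
  // ARM waits for the postgres app to be provisioned, not for the database to accept connections.
  dependsOn: [
    postgres
  ]
}
```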

Why not just fix the remaining bugs? (There could be more than the ones listed.) Ideally I would. I skipped this for a couple of reasons:

- I did not write this code so while I can browse through it and do regular debugging it's still me looking in from the outside. By making "errors go away" I could even be introducing new bugs.

- The intent of this series is to get developers going with the infra part of deployment. You are probably better than me at both the code you write and some of the details in eShop.

- Even if the remaining issues are caused by an incorrect environment variable being injected or something similar, the solution still deploys and is able to demo the basic concepts.

If one has to rewrite substantial parts for the code to work in the cloud, there's something else amiss here too. But of course - if an eagle-eyed reader spots something I didn't, I'll be happy to correct things in the repo. It could be a "silly me" issue.

The rest of both the infra and app code can be found here: https://github.com/ahelland/infra-for-devs

Concluding remarks

This brings us to the conclusion of our little experiment. It feels a little anticlimactic that we didn't get everything to work, but there are still plenty of learnings here.

The process is the same if you have a solution you want to deploy to Azure Container Apps. You can get the Container Environment going the same way, and you can deploy the apps and services the same way. I could have happy-pathed things and deployed a simple Hello World style backend + frontend app - that would have worked without problems. But then you would have run into the problems later, when trying to scale up to more code. Better to demonstrate that there are things you need to be aware of.

Does it give me more opinions on Aspire and the Azure Developer CLI? Aspire is still a bit rough around the edges, and that's probably why it's in preview. To be fair, Aspire has not advertised itself as a tool for deploying to the cloud; its purpose is to bring a better inner loop for the developer locally, which I believe it does. The secret sauce that makes you wonder what is happening behind the scenes is a bit more annoying.

The Developer CLI is more mature, but it's challenging when you have something that doesn't fit the templates that come out of the box. If you put it to work on something that will produce deployable Bicep, you will notice that it generates code that is structured differently from my samples. I don't present my code as the one correct solution so I'm ok with that, but I've tried to present a setup that is less tightly coupled and abstracts the different resources as layers/levels, so that's just the way things are.

I hope you enjoyed this mini-series on infra for devs and that you agree there is no lack of code in this brave new world :)
