Accessing the EESSI Common Stack of Scientific Software using Azure HPC-on-demand

Microsoft has been working with the EESSI consortium for the past two years and has provided Azure credits to support the hosting of EESSI’s geographically distributed CernVM-FS servers on Azure, and the building, testing and benchmarking of the EESSI software layer across different CPU generations. As the year draws to a close, this article provides a brief summary of progress to date, specifically in relation to accessing EESSI using Azure HPC OnDemand, and looks at what’s planned for 2023.

What is EESSI?

The European Environment for Scientific Software Installations (EESSI) is a collaboration between European partners in the HPC community. Its goal is to build a common stack of scientific software installations that provides a uniform experience for users whilst minimising duplicate work for HPC system administrators across HPC sites.

Designed to work on laptops, personal workstations, HPC clusters and in the cloud, the EESSI software stack was inspired by the Compute Canada software stack (now coordinated by Digital Research Alliance of Canada), a unified software environment for Canada’s national advanced computing centres serving the needs of over 10,000 researchers across the country and providing a shared stack of scientific software applications in over 4,000 different combinations.[1]

What is Azure HPC OnDemand?

The Azure HPC OnDemand Platform (azhop) delivers an end-to-end deployment mechanism for a complete HPC cluster solution in Azure, using industry-standard tools to provision and configure the environment (see the Azure HPC OnDemand Platform homepage).

How does EESSI Work?

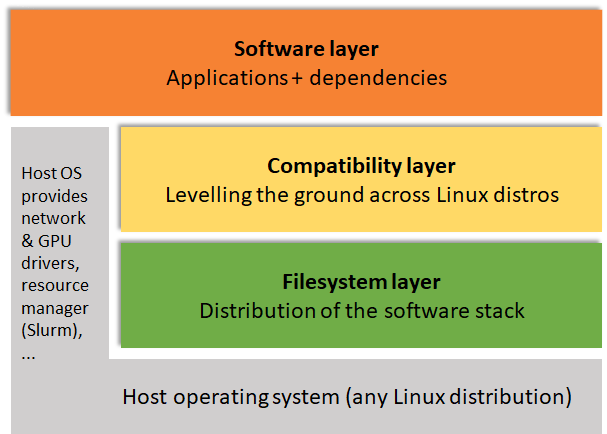

EESSI comprises a number of layers, as shown in the high-level architecture overview (Figure 1): a filesystem layer based on CernVM-FS, which provides a scalable, reliable and low-maintenance software distribution service across clients; a compatibility layer, which ensures that the software stack is compatible with the many different client operating systems; and the software layer, installed using EasyBuild, which provides the scientific software installations and their dependencies[2].

Figure 1: EESSI High-level architecture overview
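To make the layering more concrete, here is a minimal sketch of how the three layers might map onto paths under a CernVM-FS mount. The repository name `pilot.eessi-hpc.org`, the `versions/<version>/compat` and `versions/<version>/software` directory layout, and the version string `2021.12` are assumptions modelled on the EESSI pilot repository, not details given in this article; `eessi_layer_paths` is a hypothetical helper written for illustration only.

```python
from pathlib import PurePosixPath


def eessi_layer_paths(version, cpu_family, cpu_target,
                      repo="pilot.eessi-hpc.org", os_type="linux"):
    """Return the assumed location of each EESSI layer as a path.

    The layout is an assumption based on the EESSI pilot repository;
    check the EESSI documentation for the layout of the version you use.
    """
    # Filesystem layer: the CernVM-FS repository mounted under /cvmfs.
    mount = PurePosixPath("/cvmfs") / repo
    prefix = mount / "versions" / version
    return {
        "filesystem": mount,
        # Compatibility layer: one tree per OS type and CPU family.
        "compatibility": prefix / "compat" / os_type / cpu_family,
        # Software layer: one tree per CPU microarchitecture target.
        "software": prefix / "software" / os_type / cpu_family / cpu_target,
    }


if __name__ == "__main__":
    # Example values only; "amd/zen3" stands in for a detected CPU target.
    for layer, path in eessi_layer_paths("2021.12", "x86_64", "amd/zen3").items():
        print(f"{layer:>13}: {path}")
```

The point of the sketch is that a client only needs the CernVM-FS mount plus a CPU target to locate an optimised software tree; everything else is served on demand by the filesystem layer.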

While EESSI is still in its pilot phase, the focus is very much on automation, procedures, testing and collaboration. Making EESSI production ready is a definite goal of the collaboration: building on recent developments and the consortium partners’ continued efforts to secure a dedicated workforce, this work will start as soon as 2023.

Why Azure?

Azure is currently the only public cloud provider that offers a fleet of HPC Virtual Machines with InfiniBand RDMA, so ensuring EESSI runs well on these VMs is essential.

The partnership is collaborative and mutually-beneficial: EESSI can help Microsoft to bridge the gap between on-premise and cloud by helping to make optimised bursting in the cloud possible using the same software stack.

The addition of applications that are useful to Azure customers will also help EESSI in the development/provision of a stable, optimised stack of scientific software, also helping to verify regression of the Azure HPC fleet and serving as a development layer upon which to build proprietary applications.

Verifying EESSI on Azure with RDMA

Ensuring that EESSI is freely available through open projects like Azure HPC OnDemand (azhop) has been a key focus over the past few months. As part of this, WRF3 was selected as an important application to verify EESSI on Azure with RDMA. A successful evaluation was recently conducted which used EESSI for WRF simulations at scale on Azure HPC, both to determine whether EESSI could help to lower the adoption curve for customers running HPC on Azure and to learn how Azure could be improved for HPC end users.

Key differences between Azure and an on-premise HPC Cluster

The total Microsoft Azure estate comprises more than 60 geographically distributed datacentres: of those datacentres, the larger ‘Hero’ datacentres contain a considerable fleet of HPC and InfiniBand-enabled nodes.

Azure is currently the only public cloud provider to offer an InfiniBand network but, unlike in an on-premise HPC cluster, this InfiniBand network is dedicated to compute, not storage. Furthermore, Azure InfiniBand connectivity is not heterogeneous and is limited to single stamps, meaning that if multiple stamps are needed, some additional steps are required to ensure IB connectivity:

- Either by making sure only a single zone is used, forcing the VMs to land on the same physical cluster, which allows IB connectivity

- Or by using VM Scale Sets (VMSS), which provide IB connectivity by default[3].

Running WRF3 to verify EESSI on Azure with RDMA

The aim of the exercise was to make sure that EESSI is fully compatible with the Azure HPC infrastructure and that IB works with no (or at least minimal) additional input required from the end user.

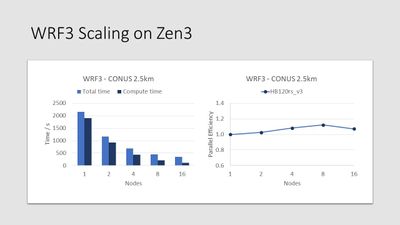

For the exercise, the WRF CONUS 2.5km benchmark was chosen to ensure scaling over many nodes (see Figure 2). The total time and the compute time were measured separately to check that RDMA and scaling were working correctly, and the parallel efficiency and scalability were then calculated using only the compute time (which is directly related to RDMA).

Figure 2: WRF3 Scaling on Zen3

Using a simple setup with no OpenMP or hybrid parallelism, run in the most vanilla way possible, WRF demonstrated linear scaling up to 16 nodes. There is certainly room for optimization, but importantly this exercise demonstrated that EESSI performs well on Azure out of the box, with no specific changes or modifications.
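The speedup and parallel efficiency referred to above can be derived from per-run compute times along the following lines. This is a minimal sketch using the standard definitions (speedup relative to the smallest run, efficiency as speedup divided by the ideal speedup); the function name `scaling_metrics` is hypothetical, and the timings in the usage example are placeholders chosen to show perfectly linear scaling, not the measured WRF results.

```python
def scaling_metrics(compute_seconds):
    """Compute speedup and parallel efficiency per node count.

    compute_seconds maps node count -> compute time in seconds
    (compute time only, excluding I/O and initialisation).
    Returns a dict mapping node count -> (speedup, efficiency),
    both relative to the run with the fewest nodes.
    """
    if not compute_seconds:
        raise ValueError("at least one measurement is required")
    base_nodes = min(compute_seconds)
    base_time = compute_seconds[base_nodes]
    metrics = {}
    for nodes in sorted(compute_seconds):
        speedup = base_time / compute_seconds[nodes]
        # Ideal speedup is nodes / base_nodes; efficiency is the ratio.
        efficiency = speedup * base_nodes / nodes
        metrics[nodes] = (speedup, efficiency)
    return metrics


if __name__ == "__main__":
    # Placeholder timings (NOT measured data): ideal linear scaling.
    placeholder = {1: 1600.0, 2: 800.0, 4: 400.0, 8: 200.0, 16: 100.0}
    for nodes, (speedup, eff) in scaling_metrics(placeholder).items():
        print(f"{nodes:>3} nodes  speedup {speedup:6.2f}  efficiency {eff:6.1%}")
```

An efficiency that stays close to 100% as nodes are added is what "linear behaviour" means here; a drop would point at communication overhead, which is why only the compute time is used.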

So what’s next?

Several next steps are planned for the evaluation from the Microsoft side: simplifying CPU detection, to allow more consistent determination of, for example, Zen3 (and the upcoming Zen4), ARM64 and other CPU architectures; extending the software suite with (benchmark) datasets and example submit scripts, to allow consistent regression testing and easy onboarding for new users; and adding new software such as WRF4, to investigate the potential for further scaling beyond 16 nodes.
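As an illustration of what CPU detection involves, the sketch below classifies a Linux host from its machine type and the feature flags in `/proc/cpuinfo`. It is not how EESSI itself detects CPUs. The rules are deliberately simplified assumptions: AVX-512 support on an AMD CPU is taken to mean Zen4 (or newer), and VAES support without AVX-512 is taken to mean Zen3. The function names and the returned labels are hypothetical.

```python
import platform


def parse_cpuinfo(text):
    """Return (vendor_id, set of feature flags) from /proc/cpuinfo-style text."""
    vendor, flags = "", set()
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key = key.strip()
        if key == "vendor_id" and not vendor:
            vendor = value.strip()
        elif key in ("flags", "Features") and not flags:
            flags = set(value.split())
    return vendor, flags


def classify_cpu(machine, cpuinfo_text):
    """Map a host to an illustrative CPU target label (simplified rules)."""
    if machine in ("aarch64", "arm64"):
        return "aarch64/generic"
    if machine != "x86_64":
        return f"{machine}/generic"
    vendor, flags = parse_cpuinfo(cpuinfo_text)
    if vendor == "AuthenticAMD":
        # Assumption: AVX-512 on AMD implies Zen4 or a newer generation.
        if "avx512f" in flags:
            return "x86_64/amd/zen4"
        # Assumption: VAES without AVX-512 implies Zen3.
        if "vaes" in flags:
            return "x86_64/amd/zen3"
    return "x86_64/generic"


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            info = handle.read()
    except OSError:
        info = ""  # non-Linux host: fall back to a generic label
    print(classify_cpu(platform.machine(), info))
```

Falling back to a generic label when no rule matches keeps the detection safe on unknown hardware, at the cost of not using the most optimised software tree.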

Strategies for better optimizing EESSI, and the use of ReFrame for automated regression testing, are also areas that require further investigation.

With funding secured via the MultiXscale EuroHPC JU Centre of Excellence, which will start in 2023, the EESSI Consortium is expected to secure the dedicated staff needed to make EESSI production ready soon. EESSI can then continue to help advance the industry and support the design, delivery and deployment of new installation technologies, ultimately increasing the impact of end users and helping to further scientific outcomes.

[1] Providing a Unified Software Environment for Canada’s National Advanced Computing Centres (linklings.net)

[2] EESSI Architecture – EESSI (eessi-hpc.org)

[3] Azure CycleCloud can also be used for orchestration to combine multiple VMSS in a single running HPC cluster, allowing the total number of VMs to be scaled beyond the number that fits in a single stamp.
