Automate BeeOND Filesystem on Azure CycleCloud Slurm Cluster

OVERVIEW

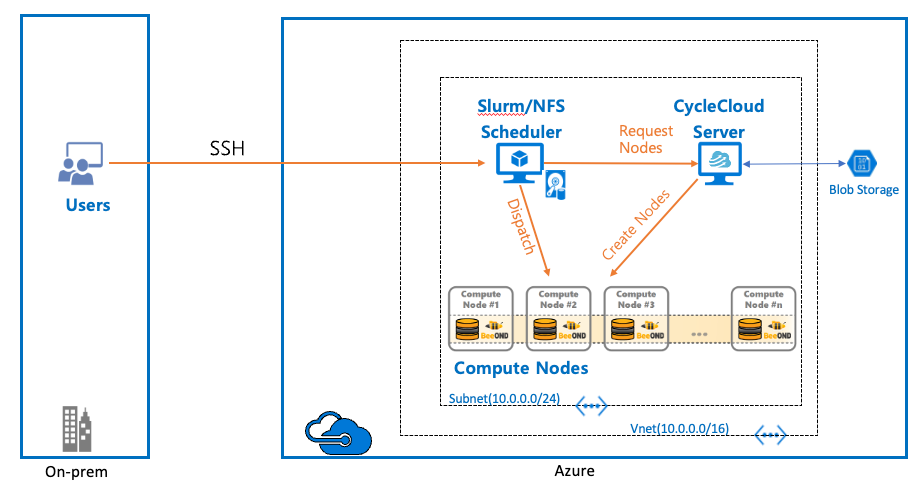

Azure CycleCloud (CC) is an enterprise-friendly tool for orchestrating and managing High-Performance Computing (HPC) environments on Azure. With CycleCloud, users can provision infrastructure for HPC systems, deploy familiar HPC schedulers, deploy/mount filesystems and automatically scale the infrastructure to run jobs efficiently at any scale.

BeeOND ("BeeGFS On Demand", pronounced like the word "beyond") is a per-job BeeGFS parallel filesystem that aggregates the local NVMe/SSD drives of Azure VMs into a single filesystem for the duration of the job (NOTE: this is not persistent storage and exists only while the job is running, so data must be staged into and out of BeeOND). This provides the performance benefit of a fast, shared job scratch space at no additional cost, because the local NVMe/SSD is included in the price of the VM. The BeeOND filesystem uses the InfiniBand fabric of our H-series VMs (up to 200 Gb/s HDR) to provide the highest bandwidth and lowest latency of the available storage options.

This blog describes how to automate a BeeOND filesystem on a Slurm cluster orchestrated by Azure CycleCloud. It demonstrates how to install and configure BeeOND on the compute nodes using a Cloud-Init script via CycleCloud. Starting and stopping the BeeOND filesystem for each job is handled by the provided Slurm Prolog and Epilog scripts. By the end you will be able to add a BeeOND filesystem to your Slurm cluster (NOTE: creating the Slurm cluster itself is out of scope here) with minimal interaction required from the users running jobs.
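As a rough illustration of what the Prolog and Epilog do for each job, the sketch below wraps the `beeond start` and `beeond stop` invocations that appear (commented out) in the test job later in this post. The function names, the `DRY_RUN` switch and the example nodefile path are assumptions made for illustration only; the actual scripts in the repo may differ.

```shell
#!/bin/bash
# Hypothetical sketch of per-job BeeOND start/stop logic; not the author's actual scripts.
# The beeond flags are copied from the commented-out lines in the test job in this post.

NVME_DIR="/mnt/nvme"       # local NVMe mount used as BeeOND storage (from the post)
BEEOND_MNT="/mnt/beeond"   # BeeOND client mount point (from the post)
DRY_RUN="${DRY_RUN:-1}"    # assumption: 1 = only print commands, 0 = execute them

run() {
    # Print the command in dry-run mode, otherwise execute it.
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

beeond_prolog() {
    # $1 = nodefile with one hostname per line for this job
    run beeond start -n "$1" -d "$NVME_DIR" -c "$BEEOND_MNT" -P
}

beeond_epilog() {
    # $1 = the same nodefile that was used to start BeeOND
    run beeond stop -n "$1" -L -d -P -c
}

# Example (dry run): show what would be executed for a job with ID 2.
beeond_prolog "/shared/home/someuser/nodefile-2"
beeond_epilog "/shared/home/someuser/nodefile-2"
```

Keeping the default at dry run makes it easy to check the generated commands before letting Slurm execute them on real nodes.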

REQUIREMENTS/VERSIONS:

- CycleCloud server (My CC version is 8.2.2-1902)

- Slurm cluster (My Slurm version is 20.11.7-1 and my CC Slurm release version is 2.6.2)

- Azure H-series Compute VMs (My Compute VMs are HB120rs_v3, each with 2x 900GiB ephemeral NVMe drives)

- My Compute OS is OpenLogic:CentOS-HPC:7_9-gen2:7.9.2022040101

TL;DR:

- Use this Cloud-Init script in CC to install/configure the compute partition for BeeOND (NOTE: script is tailored to HBv3 with 2 NVMe drives)

- Provision (i.e. start) the BeeOND filesystem using this Slurm Prolog script

- De-provision (i.e. stop) the BeeOND filesystem using this Slurm Epilog script
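To give a feel for the NVMe part of the Cloud-Init step, here is a minimal sketch that only prints the commands one might use to stripe the two local NVMe drives into a single device and mount it. The `/dev/md10` device and `/mnt/nvme` mount point match the `df -h` output shown later in this post; the NVMe device names, the choice of XFS and the `plan_nvme_raid` helper are assumptions, and the real Cloud-Init script may do this differently.

```shell
#!/bin/bash
# Hypothetical sketch: print the commands that would stripe local NVMe drives into one
# RAID0 device and mount it. Device names and the XFS choice are assumptions.

plan_nvme_raid() {
    # $1 = md device, $2 = mount point, remaining args = NVMe block devices
    local md_dev="$1" mount_dir="$2"
    shift 2
    echo "mdadm --create $md_dev --level 0 --raid-devices $# $*"
    echo "mkfs.xfs $md_dev"
    echo "mkdir -p $mount_dir"
    echo "mount $md_dev $mount_dir"
}

# /dev/md10 and /mnt/nvme match the df output in this post; the nvme names are assumed.
plan_nvme_raid /dev/md10 /mnt/nvme /dev/nvme0n1 /dev/nvme1n1
```

Printing the plan rather than executing it keeps the sketch safe to run anywhere; on a VM with a different number of drives, only the device list passed to the function would change.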

SOLUTION:

- Ensure you have a working CC environment and Slurm cluster

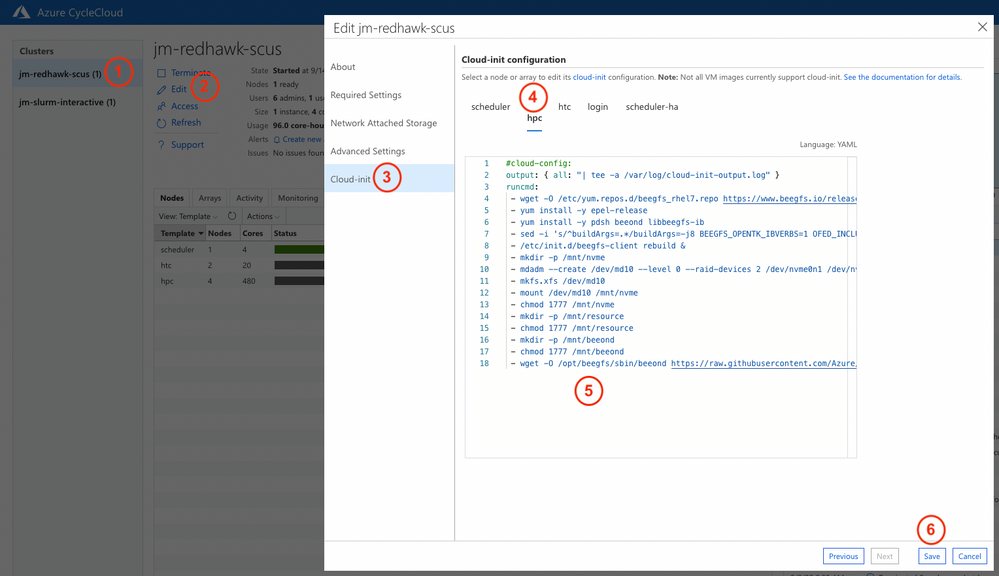

- Copy the cloud-init script from my Git repo and add to the CC Slurm cluster:

2.1: Select the appropriate cluster from your CC cluster list

2.2: Click "Edit" to update the cluster settings

2.3: In the popup window click "Cloud-init" from the menu

2.4: Select your partition to add the BeeOND filesystem (NOTE: the default partition name is hpc but may differ in your cluster)

2.5: Copy the Cloud-init script from git and paste here

2.6: Save the settings

3. SSH to your Slurm scheduler node and re-scale the compute nodes to update the settings in Slurm

4. Add the Prolog and Epilog configs to slurm.conf:
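The exact entries are not reproduced here, so the following is only a sketch of how the two `slurm.conf` settings could be added without duplicating them. `Prolog` and `Epilog` are standard `slurm.conf` parameters; the `add_slurm_hook` helper and the script paths are placeholders chosen for illustration, so substitute the locations used in your cluster.

```shell
#!/bin/bash
# Hypothetical sketch: add Prolog/Epilog entries to a slurm.conf file if they are missing.
# All paths below are placeholders; substitute the locations used in your cluster.

add_slurm_hook() {
    # $1 = slurm.conf path, $2 = key (Prolog or Epilog), $3 = script path
    local conf="$1" key="$2" script="$3"
    if grep -q "^${key}=" "$conf"; then
        echo "${key} already set in ${conf}, leaving it unchanged"
    else
        echo "${key}=${script}" >> "$conf"
    fi
}

# Demo on a scratch file so nothing real is modified.
demo_conf="$(mktemp)"
add_slurm_hook "$demo_conf" Prolog /sched/scripts/prolog.sh
add_slurm_hook "$demo_conf" Epilog /sched/scripts/epilog.sh
cat "$demo_conf"
```

After editing the real `slurm.conf`, the daemons still need to pick up the change, for example with `scontrol reconfigure`.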

VALIDATE SETUP:

1. Download the test job from GitHub:

```bash
#!/bin/bash
#SBATCH --job-name=beeond
#SBATCH -N 2
#SBATCH -n 100
#SBATCH -p hpc

logdir="/sched/log"
logfile=$logdir/slurm_beeond.log

#echo "$DATE creating Slurm Job $SLURM_JOB_ID nodefile and starting Beeond" >> $logfile 2>&1
#scontrol show hostnames $SLURM_JOB_NODELIST > nodefile-$SLURM_JOB_ID
#beeond start -n /shared/home/$SLURM_JOB_USER/nodefile-$SLURM_JOB_ID -d /mnt/nvme -c /mnt/beeond -P >> $logfile 2>&1

echo "#####################################################################################"
echo "df -h: "
df -h
echo "#####################################################################################"
echo "#####################################################################################"
echo ""
echo "beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=storage: "
beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=storage
echo "#####################################################################################"
echo "#####################################################################################"
echo ""
echo "beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=metadata: "
beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=metadata
echo "#####################################################################################"
echo "#####################################################################################"
echo ""
echo "beegfs-ctl --mount=/mnt/beeond --getentryinfo: "
beegfs-ctl --mount=/mnt/beeond --getentryinfo /mnt/beeond
echo "#####################################################################################"
echo "#####################################################################################"
echo ""
echo "beegfs-net: "
beegfs-net

#beeond stop -n /shared/home/$SLURM_JOB_USER/nodefile-$SLURM_JOB_ID -L -d -P -c >> $logfile 2>&1
```

2. Submit the job: `sbatch beeond-test.sbatch`

3. When the job completes you will have an output file named slurm-2.out in the directory you submitted the job from (your home directory in this example), assuming the job ID is 2; otherwise substitute your job ID in the filename. A sample job output will look like this:

```
#####################################################################################
df -h:
Filesystem         Size  Used Avail Use% Mounted on
devtmpfs           221G     0  221G   0% /dev
tmpfs              221G     0  221G   0% /dev/shm
tmpfs              221G   18M  221G   1% /run
tmpfs              221G     0  221G   0% /sys/fs/cgroup
/dev/sda2           30G   20G  9.6G  67% /
/dev/sda1          494M  119M  375M  25% /boot
/dev/sda15         495M   12M  484M   3% /boot/efi
/dev/sdb1          473G   73M  449G   1% /mnt/resource
/dev/md10          1.8T   69M  1.8T   1% /mnt/nvme
10.40.0.5:/sched    30G   33M   30G   1% /sched
10.40.0.5:/shared  100G   34M  100G   1% /shared
tmpfs               45G     0   45G   0% /run/user/20002
beegfs_ondemand    3.5T  103M  3.5T   1% /mnt/beeond
#####################################################################################
#####################################################################################

beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=storage:
jm-hpc-pg0-1 [ID: 1]
jm-hpc-pg0-3 [ID: 2]
#####################################################################################
#####################################################################################

beegfs-ctl --mount=/mnt/beeond --listnodes --nodetype=metadata:
jm-hpc-pg0-1 [ID: 1]
#####################################################################################
#####################################################################################

beegfs-ctl --mount=/mnt/beeond --getentryinfo:
Entry type: directory
EntryID: root
Metadata node: jm-hpc-pg0-1 [ID: 1]
Stripe pattern details:
+ Type: RAID0
+ Chunksize: 512K
+ Number of storage targets: desired: 4
+ Storage Pool: 1 (Default)
#####################################################################################
#####################################################################################

beegfs-net:

mgmt_nodes
=============
jm-hpc-pg0-1 [ID: 1]
   Connections: TCP: 1 (172.17.0.1:9008);

meta_nodes
=============
jm-hpc-pg0-1 [ID: 1]
   Connections: RDMA: 1 (172.16.1.66:9005);

storage_nodes
=============
jm-hpc-pg0-1 [ID: 1]
   Connections: RDMA: 1 (172.16.1.66:9003);
jm-hpc-pg0-3 [ID: 2]
   Connections: RDMA: 1 (172.16.1.76:9003);
```

CONCLUSION

Creating a fast parallel filesystem on Azure does not have to be difficult or expensive. This blog has shown how the installation and configuration of a BeeOND filesystem can be automated for a Slurm cluster (the approach will also work with other cluster types if the prolog/epilog configs are adapted). Because this is non-persistent shared job scratch, the data should reside on persistent storage (e.g. NFS, Blob) and be staged to and from the BeeOND mount (i.e. /mnt/beeond per these setup scripts) as part of the job script.
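The staging step mentioned above could be sketched along these lines. The `stage_and_run` helper, the directory layout and the `./my_solver` command in the usage comment are hypothetical; the sketch simply copies inputs to a scratch directory, runs a command there, and copies everything back before the job (and with it the BeeOND filesystem) goes away.

```shell
#!/bin/bash
# Hypothetical sketch of staging data around a job step. Directory names are placeholders.

stage_and_run() {
    # $1 = persistent input dir, $2 = scratch dir (e.g. under /mnt/beeond),
    # $3 = persistent output dir, remaining args = command to run inside the scratch dir
    local src="$1" scratch="$2" dest="$3"
    shift 3
    mkdir -p "$scratch" "$dest" || return 1
    cp -a "$src/." "$scratch/" || return 1   # stage in
    ( cd "$scratch" && "$@" ) || return 1    # run the workload on the scratch copy
    cp -a "$scratch/." "$dest/"              # stage out before the job ends
}

# Example use inside a job script (paths and solver name are assumptions):
# stage_and_run "/shared/home/$USER/input" "/mnt/beeond/$SLURM_JOB_ID" \
#               "/shared/home/$USER/output" ./my_solver
```

Running the workload in a subshell keeps the `cd` from leaking into the rest of the job script, and returning early on failure avoids copying back a half-written scratch directory.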

LEARN MORE

Learn more about Azure CycleCloud

Read more about Azure HPC + AI

Take the Azure HPC learning path

