How to enable the IPv4+IPv6 dual-stack feature on a Service Fabric cluster

As the pool of available IPv4 addresses is exhausted, more and more service providers and website hosts are starting to use IPv6 addresses on their servers. Although IPv6 is the successor to IPv4, their packet headers and address formats are completely different. For this reason, IPv6 can be treated as a different protocol from IPv4.

To communicate with a server that only supports IPv6, we need to enable IPv6 on the Service Fabric cluster and its related resources. This blog explains how to enable this feature on a Service Fabric cluster by using an ARM template.

Prerequisite:

We should be familiar with how to deploy a Service Fabric cluster and its related resources by ARM template. This includes not only downloading the ARM template from the official document, but also preparing a certificate, creating a Key Vault resource and uploading the certificate into the Key Vault. For detailed instructions, please check this official document.

Abbreviation:

| Abbreviation | Full name |
| --- | --- |
| VMSS | Virtual Machine Scale Set |
| SF | Service Fabric |
| NIC | Network Interface Configuration |
| OS | Operating System |
| VNet | Virtual Network |

Limitation:

Currently, none of the Windows OS images with container support (those whose name ends with -Containers, such as WindowsServer 2016-Datacenter-with-Containers) support the IPv4+IPv6 dual-stack feature.
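The limitation above can be checked before a deployment starts. The following is a minimal sketch, assuming that a container-enabled image can be recognised purely by the "-Containers" suffix of its SKU name as described above. The function name `supports_dual_stack` is hypothetical and is not part of any Azure SDK.

```python
def supports_dual_stack(image_sku: str) -> bool:
    """Return False for image SKUs that end with "-Containers".

    Assumption: the suffix is the only signal used, as stated in the
    limitation above. The comparison ignores case and outer whitespace.
    """
    return not image_sku.strip().lower().endswith("-containers")


if __name__ == "__main__":
    for sku in ("2016-Datacenter-with-Containers", "2019-Datacenter"):
        verdict = "ok" if supports_dual_stack(sku) else "not supported"
        print(f"{sku}: {verdict}")
```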

The design changes before and after enabling this feature:

Before looking at the ARM template changes, it helps to have a full picture of what changes on each resource type.

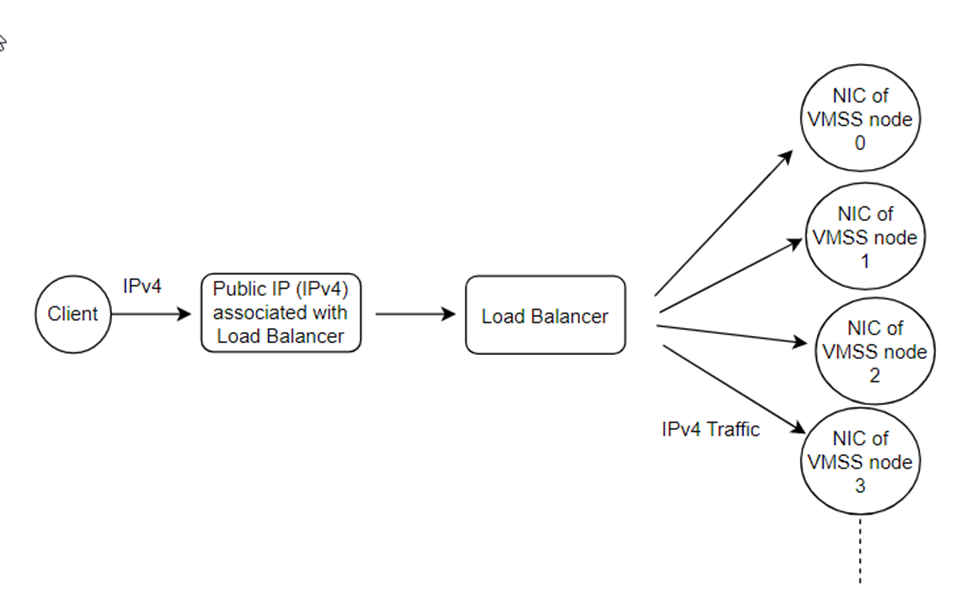

Before enabling the IPv4+IPv6 dual-stack feature, the traffic flow of the Service Fabric cluster is as follows:

- Client sends a request to the public IP address, which is an IPv4 address. The protocol used is IPv4.

- Load Balancer listens on its frontend public IP address and decides which VMSS instance to route the traffic to according to the 5-tuple rule and the load balancing rules.

- Load Balancer forwards the request to the NIC of the VMSS.

- NIC of the VMSS forwards the request to the specific VMSS node over IPv4. The internal IP addresses of the VMSS nodes are also IPv4 addresses.

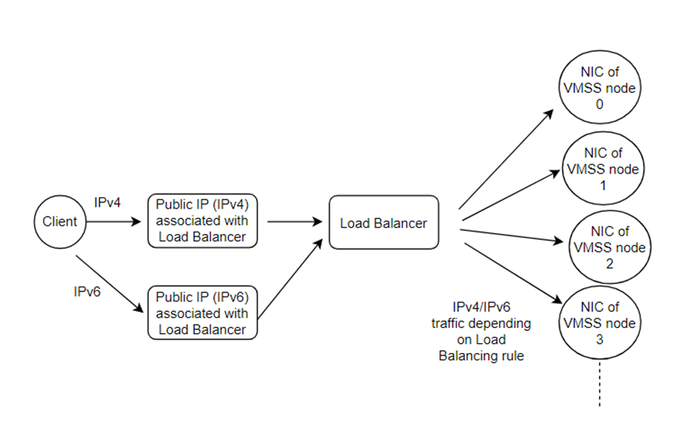

After enabling the IPv4+IPv6 dual-stack feature, the traffic flow of the Service Fabric cluster is as follows (the parts that differ are in bold):

- Client sends a request to **one of the public IP addresses associated with the Load Balancer. One of them is an IPv4 address and the other is an IPv6 address.** The protocol used is **IPv4 or IPv6, depending on which public IP address the client sends the request to**.

- Load Balancer listens on its frontend public IP **addresses** and decides which VMSS instance to route the traffic to according to the 5-tuple rule. **Then, according to the load balancing rules and the protocol of the incoming request, Load Balancer decides which protocol to use to forward the request.**

- Load Balancer forwards the request to the NIC of the VMSS.

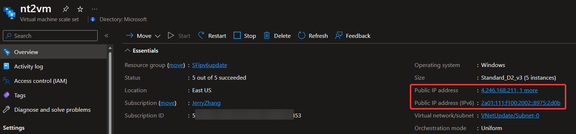

- NIC of the VMSS forwards the request to the specific VMSS node. **The protocol is the one chosen by Load Balancer in the second step. The VMSS nodes have both IPv4 and IPv6 internal IP addresses.**
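To make the "5-tuple rule" in the steps above more concrete, here is an illustrative sketch of hash-based distribution. It is not the algorithm Azure Load Balancer actually uses. SHA-256 is only a stand-in, and the names `FiveTuple` and `pick_backend` are hypothetical. The point it shows is that all packets of one flow (same source IP, source port, destination IP, destination port and protocol) land on the same backend instance, for IPv4 and IPv6 flows alike.

```python
import hashlib
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # for example "TCP" or "UDP"


def pick_backend(flow: FiveTuple, backend_count: int) -> int:
    """Map a flow to a backend index in the range [0, backend_count)."""
    if backend_count <= 0:
        raise ValueError("backend_count must be positive")
    # Join the five fields into one key and hash it (stand-in hash).
    key = "|".join(str(field) for field in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % backend_count


if __name__ == "__main__":
    # Documentation-only example addresses.
    ipv4_flow = FiveTuple("203.0.113.7", 50123, "198.51.100.10", 19080, "TCP")
    ipv6_flow = FiveTuple("2001:db8::7", 50123, "2001:db8::10", 19080, "TCP")
    for flow in (ipv4_flow, ipv6_flow):
        print(flow.src_ip, "-> backend", pick_backend(flow, backend_count=5))
```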

Comparing the traffic flow before and after enabling the dual-stack feature shows where the two designs differ. These differences are also the configuration that needs to be changed:

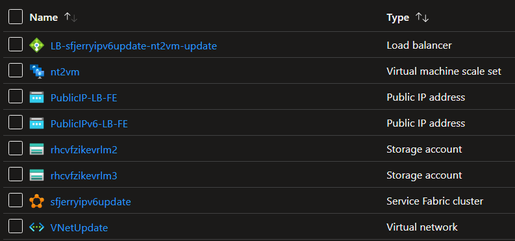

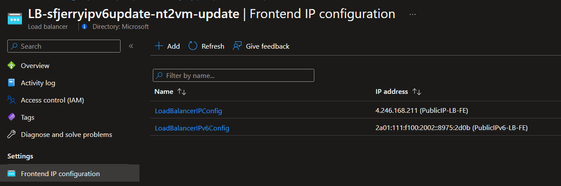

- Users need to create a second public IP address with an IPv6 address and set the address version of the first public IP address to IPv4.

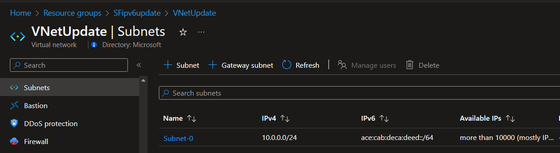

- Users need to add the IPv6 address range to the VNet and the subnet used by the VMSS.

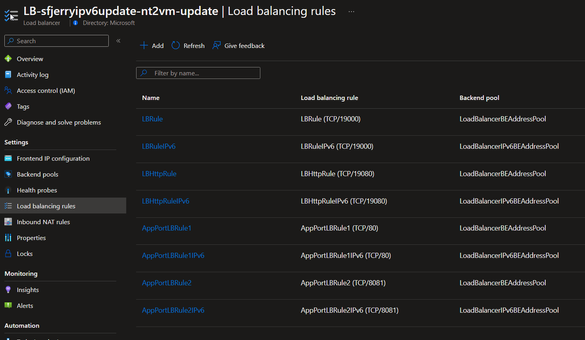

- Users need to add additional load balancing rules to the Load Balancer for IPv6 traffic.

- Users need to modify the NIC of the VMSS to accept both the IPv4 and IPv6 protocols.

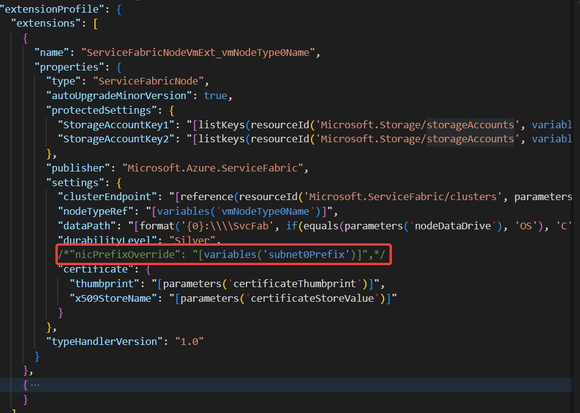

In addition to the four points above, users also need to remove the nicPrefixOverride setting from the VMSS SF node extension, because this override setting does not support IPv4+IPv6 dual stack yet. Removing it carries no risk: the setting only takes effect when the SF cluster works with containers, which cannot be the case in this scenario because of the OS image limitation.

Changes to the ARM template:

With the design changes understood, the next part covers the corresponding changes in the ARM template.

If users are going to deploy a new SF cluster with this feature, here are the ARM template and parameter files. After downloading these files:

- Modify the parameter values in the parameter file.

- Decide whether to change the values of some variables, for example the IP ranges of the VNet and subnet, the DNS name, and the names of the Load Balancer resource and load balancing rules. These values can be customized according to users’ own requirements. If the deployment is for test purposes only, this step can be skipped.

- Deploy the template in the preferred way, such as Azure Portal, Azure PowerShell or Azure CLI.

If users are going to upgrade an existing SF cluster and its resources to enable this feature, here are the points that need to be modified in their own ARM template. The template in this blog is based on the official example ARM template.

To make the changes easier to follow, the templates before and after the change are both provided here. Please follow the explanation below and compare the two templates to see which changes are needed.

- In the variables section, several variables should be added. These variables are used in the following steps.

Tips: As documented here, the IPv6 address range of the subnet (subnet0PrefixIPv6) must end with “/64”.

| Variable name | Explanation |
| --- | --- |
| dnsIPv6Name | DNS name to be used on the public IP address resource with IPv6 address |
| addressPrefixIPv6 | IPv6 address range of the VNet |
| lbIPv6Name | IPv6 public IP address resource name |
| subnet0PrefixIPv6 | IPv6 address range of the subnet |
| lbIPv6IPConfig0 | IPv6 IP config name in load balancer |
| lbIPv6PoolID0 | IPv6 backend pool name in load balancer |
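The "/64" requirement for subnet0PrefixIPv6 is easy to get wrong, so it can be worth validating the two IPv6 ranges before deploying. The sketch below uses Python's standard `ipaddress` module. The function name `check_ipv6_ranges` and the example ranges are assumptions made for illustration, not values from the template.

```python
import ipaddress
from typing import List


def check_ipv6_ranges(vnet_prefix: str, subnet_prefix: str) -> List[str]:
    """Return a list of problems found; an empty list means both ranges look fine."""
    try:
        vnet = ipaddress.ip_network(vnet_prefix)
        subnet = ipaddress.ip_network(subnet_prefix)
    except ValueError as error:
        return [f"invalid network: {error}"]

    if vnet.version != 6 or subnet.version != 6:
        return ["both ranges must be IPv6 networks"]

    problems = []
    if subnet.prefixlen != 64:
        problems.append("subnet0PrefixIPv6 must end with /64")
    if not subnet.subnet_of(vnet):
        problems.append("subnet0PrefixIPv6 must be inside addressPrefixIPv6")
    return problems


if __name__ == "__main__":
    # Hypothetical example values for addressPrefixIPv6 and subnet0PrefixIPv6.
    print(check_ipv6_ranges("fd00:db8:deca::/48", "fd00:db8:deca:1::/64"))
    print(check_ipv6_ranges("fd00:db8:deca::/48", "fd00:db8:deca:100::/56"))
```

The first call reports no problems. The second one reports the wrong prefix length, because the subnet range ends with /56 instead of /64.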

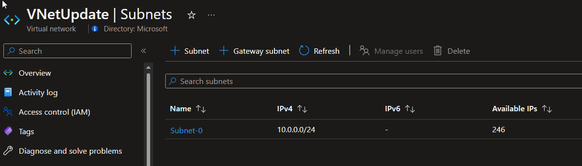

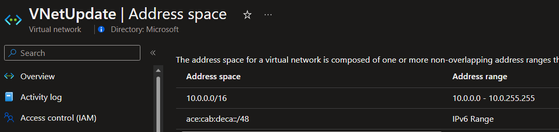

- For VNet: Add the IPv6 address range to both the VNet address range and the subnet address range.
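As a rough illustration of the VNet step, the sketch below builds the `properties` object of a dual-stack virtual network and prints it as JSON. The plural `addressPrefixes` property on the subnet is what allows it to hold an IPv4 and an IPv6 range together. The variable names `addressPrefix`, `subnet0Name` and `subnet0Prefix` are assumptions about the existing template, and the helper `dual_stack_vnet_properties` is hypothetical. Only `addressPrefixIPv6` and `subnet0PrefixIPv6` come from the table above.

```python
import json


def dual_stack_vnet_properties(vnet_ipv4, vnet_ipv6, subnet_name, subnet_ipv4, subnet_ipv6):
    """Build the properties object of a dual-stack virtual network resource."""
    return {
        "addressSpace": {"addressPrefixes": [vnet_ipv4, vnet_ipv6]},
        "subnets": [
            {
                "name": subnet_name,
                # The plural property lets the subnet carry both ranges.
                "properties": {"addressPrefixes": [subnet_ipv4, subnet_ipv6]},
            }
        ],
    }


vnet_properties = dual_stack_vnet_properties(
    "[variables('addressPrefix')]",      # assumed name of the existing IPv4 variable
    "[variables('addressPrefixIPv6')]",
    "[variables('subnet0Name')]",        # assumed name
    "[variables('subnet0Prefix')]",      # assumed name
    "[variables('subnet0PrefixIPv6')]",
)

if __name__ == "__main__":
    print(json.dumps(vnet_properties, indent=2))
```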

- For public IP address:

  - Set the publicIPAddressVersion property of the existing public IP address to IPv4

  - Create a new public IP address resource with an IPv6 address

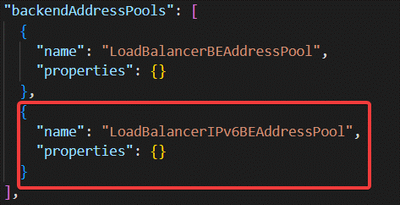

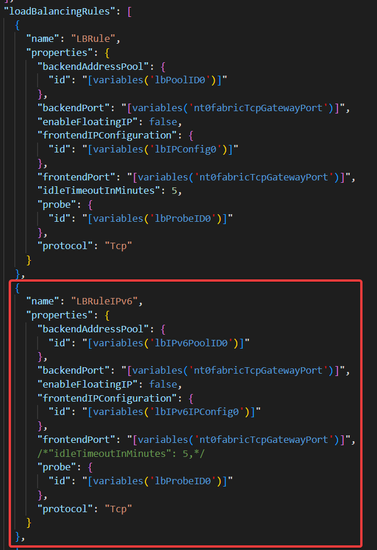

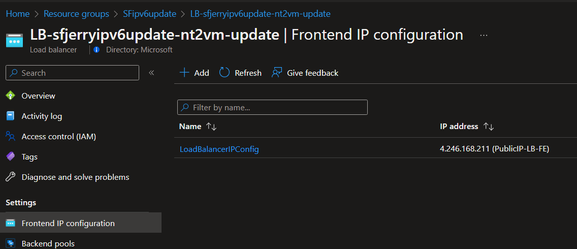
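The two public IP address resources differ mainly in publicIPAddressVersion, name and DNS label. The following sketch generates both from one hypothetical helper, `public_ip_resource`. It assumes Dynamic allocation, and it leaves out apiVersion, location and tags, which should be copied from the existing public IP resource in your own template. The names `lbIPName` and `dnsName` are assumed to be the existing IPv4 variables.

```python
import json


def public_ip_resource(name, dns_label, ip_version):
    """Build a trimmed public IP address resource for the given IP version."""
    if ip_version not in ("IPv4", "IPv6"):
        raise ValueError("ip_version must be 'IPv4' or 'IPv6'")
    return {
        "type": "Microsoft.Network/publicIPAddresses",
        "name": name,
        "properties": {
            "publicIPAddressVersion": ip_version,
            "publicIPAllocationMethod": "Dynamic",  # assumption, adjust to your SKU
            "dnsSettings": {"domainNameLabel": dns_label},
        },
    }


resources = [
    # Existing resource: the only change is the explicit IPv4 version.
    public_ip_resource("[variables('lbIPName')]", "[variables('dnsName')]", "IPv4"),
    # New resource for the IPv6 frontend.
    public_ip_resource("[variables('lbIPv6Name')]", "[variables('dnsIPv6Name')]", "IPv6"),
]

if __name__ == "__main__":
    print(json.dumps(resources, indent=2))
```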

- For Load Balancer: (Referenced document)

  a. Add an IPv6 frontend IP configuration

  b. Add an IPv6 backend IP address pool

  c. Duplicate every existing load balancing rule into an IPv6 version (only one is shown as an example here)

  Note: As documented here, modifying the idle timeout setting of an IPv6 load balancing rule is not supported yet. The default timeout is 4 minutes.

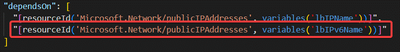

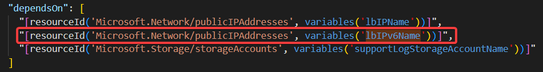

  d. Modify the depending resources
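Duplicating every load balancing rule by hand is repetitive, so step c can be scripted. The sketch below copies an IPv4 rule, points it at the IPv6 frontend and backend pool, and drops idleTimeoutInMinutes so that the 4-minute default applies. It assumes that lbIPv6IPConfig0 and lbIPv6PoolID0 resolve to the resource IDs of the IPv6 frontend configuration and backend pool. The sample rule, its variable names and the helper `to_ipv6_rule` are hypothetical.

```python
import copy
import json


def to_ipv6_rule(ipv4_rule, ipv6_frontend_id, ipv6_pool_id, suffix="IPv6"):
    """Return an IPv6 copy of a load balancing rule; the input is not modified."""
    rule = copy.deepcopy(ipv4_rule)
    rule["name"] = ipv4_rule["name"] + suffix
    props = rule["properties"]
    props["frontendIPConfiguration"] = {"id": ipv6_frontend_id}
    props["backendAddressPool"] = {"id": ipv6_pool_id}
    # The idle timeout of IPv6 rules cannot be changed, so keep the default.
    props.pop("idleTimeoutInMinutes", None)
    return rule


# Hypothetical IPv4 rule, loosely modelled on a Service Fabric HTTP gateway rule.
ipv4_rule = {
    "name": "LBHttpRule",
    "properties": {
        "frontendIPConfiguration": {"id": "[variables('lbIPConfig0')]"},
        "backendAddressPool": {"id": "[variables('lbPoolID0')]"},
        "probe": {"id": "[variables('lbHttpProbeID0')]"},
        "protocol": "tcp",
        "frontendPort": 19080,
        "backendPort": 19080,
        "idleTimeoutInMinutes": 5,
    },
}

ipv6_rule = to_ipv6_rule(
    ipv4_rule, "[variables('lbIPv6IPConfig0')]", "[variables('lbIPv6PoolID0')]"
)

if __name__ == "__main__":
    print(json.dumps(ipv6_rule, indent=2))
```

The probe reference is kept as it is, so in this sketch the IPv4 and IPv6 rules share the same health probe.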

- For VMSS: (Referenced document)

  a. Remove the nicPrefixOverride setting from the SF node extension

  b. Set the primary and privateIPAddressVersion properties of the existing IP configuration and add the IPv6 configuration to the NIC section.
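Both VMSS changes can be pictured as small transformations of the existing template objects. This is a sketch under assumptions: the sample IP configuration and extension are simplified, hypothetical stand-ins for the real template content, the helper names are invented, and inbound NAT pools are deliberately left on the IPv4 configuration only.

```python
import copy


def to_dual_stack_ip_configs(ipv4_config, ipv6_pool_id):
    """Return [IPv4 config, IPv6 config] for the NIC of the VMSS."""
    v4 = copy.deepcopy(ipv4_config)
    v4["properties"]["primary"] = True
    v4["properties"]["privateIPAddressVersion"] = "IPv4"
    v6 = {
        "name": ipv4_config["name"] + "-IPv6",
        "properties": {
            "privateIPAddressVersion": "IPv6",
            # Same subnet: it now carries both an IPv4 and an IPv6 range.
            "subnet": copy.deepcopy(ipv4_config["properties"]["subnet"]),
            "loadBalancerBackendAddressPools": [{"id": ipv6_pool_id}],
        },
    }
    return [v4, v6]


def without_nic_prefix_override(extension):
    """Return a copy of the SF node extension without nicPrefixOverride."""
    result = copy.deepcopy(extension)
    result["properties"]["settings"].pop("nicPrefixOverride", None)
    return result


# Hypothetical, trimmed inputs.
ipv4_config = {
    "name": "NIC-0",
    "properties": {
        "subnet": {"id": "[variables('subnet0Ref')]"},
        "loadBalancerBackendAddressPools": [{"id": "[variables('lbPoolID0')]"}],
    },
}
extension = {
    "name": "ServiceFabricNode",
    "properties": {
        "settings": {
            "nodeTypeRef": "[variables('vmNodeType0Name')]",
            "nicPrefixOverride": "[variables('subnet0Prefix')]",
        }
    },
}

ip_configs = to_dual_stack_ip_configs(ipv4_config, "[variables('lbIPv6PoolID0')]")
clean_extension = without_nic_prefix_override(extension)
```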

- For SF: Modify the depending resources

Tips: This upgrade operation can sometimes take a long time. In my test, it took about 3 hours for the upgrade to finish. Traffic to the IPv4 endpoint is not blocked during the upgrade.

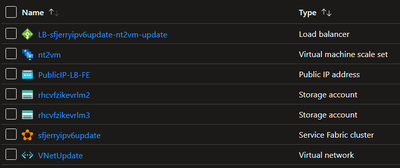

The result of the upgrade:

Before:

After:

The way to test IPv6 communication to the SF cluster:

To verify that communication to the SF cluster over IPv6 is enabled, we can do the following:

For Windows VMSS:

Simply open the Service Fabric Explorer website with your IPv6 address or domain name.

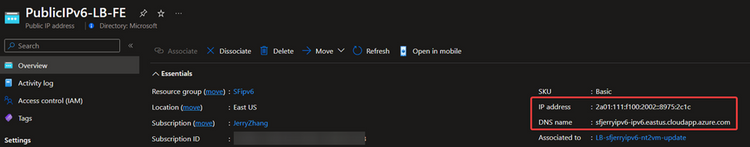

The domain names of the IPv4 and IPv6 public IP addresses can be found on the overview page of each public IP address (the IPv6 public IP address is shown as an example here):

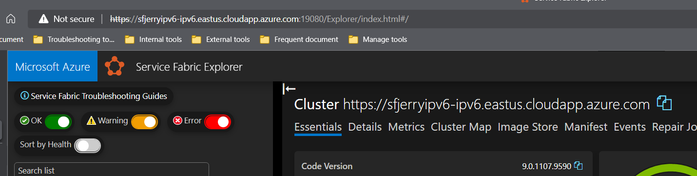

The URL with the IPv4 domain name: https://sfjerryipv6.eastus.cloudapp.azure.com:19080/Explorer

For IPv6, we only need to replace the part between https:// and :19080/Explorer with the domain name shown on your IPv6 public IP address page, such as: https://sfjerryipv6-ipv6.eastus.cloudapp.azure.com:19080/Explorer

If both URLs return the SF Explorer page correctly, the IPv4+IPv6 dual-stack feature of the SF cluster is verified as working.
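When the Explorer is opened with a raw IPv6 address instead of a domain name, the address has to be wrapped in square brackets inside the URL, otherwise the colons in the address clash with the port separator. A small sketch of building the URL for both cases follows. The helper name `explorer_url` is hypothetical, and 2001:db8::1 is a documentation-only example address.

```python
import ipaddress


def explorer_url(host: str, port: int = 19080) -> str:
    """Build the Service Fabric Explorer URL for a DNS name or an IP address."""
    try:
        is_ipv6_literal = ipaddress.ip_address(host).version == 6
    except ValueError:
        is_ipv6_literal = False  # not an IP address, so treat it as a DNS name
    authority = f"[{host}]" if is_ipv6_literal else host
    return f"https://{authority}:{port}/Explorer"


if __name__ == "__main__":
    print(explorer_url("sfjerryipv6-ipv6.eastus.cloudapp.azure.com"))
    print(explorer_url("2001:db8::1"))
```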

For Linux VMSS:

By design, SF Explorer currently doesn’t work over IPv6 for a Linux SF cluster. To verify IPv6 traffic to a Linux SF cluster, please deploy a web application that listens on port 80 and use the IPv6 domain name with port 80 to visit the website. If everything works well, it returns the same page as visiting the IPv4 domain name with port 80.

(Optional) The way to test IPv6 communication from the SF cluster backend VMs:

As explained in the first part, communication from the SF cluster to remote servers over IPv6 will also be an increasingly common requirement. After enabling the IPv4+IPv6 dual-stack feature, not only can users reach the SF cluster over IPv6, but communication from the SF cluster to other servers over IPv6 is also enabled. To verify this point, we can do the following:

For Windows VMSS:

RDP into any node, install and open the Edge browser, and visit https://ipv6.google.com

If it returns the normal Google homepage, then it’s working well.

For Linux VMSS:

SSH into any node and run the command:

If the result starts with <!doctype html> and you can find data like <meta content="Search the world's information, …>, then it’s working well.
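As an additional, independent check (not the command referred to above), outbound IPv6 connectivity can also be probed with a few lines of Python on a node. This is a minimal sketch that only tests whether a TCP connection over IPv6 can be opened. The function names are hypothetical, and the host is the same ipv6.google.com used in the Windows test.

```python
import socket
from typing import List


def ipv6_addresses(host: str, port: int = 443) -> List[str]:
    """Resolve a host name (or IPv6 literal) to its IPv6 addresses only."""
    infos = socket.getaddrinfo(
        host, port, family=socket.AF_INET6, type=socket.SOCK_STREAM
    )
    return sorted({info[4][0] for info in infos})


def can_connect_over_ipv6(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the host succeeds over IPv6."""
    try:
        addresses = ipv6_addresses(host, port)
    except socket.gaierror:
        return False  # no IPv6 address could be resolved for this host
    for address in addresses:
        try:
            with socket.socket(socket.AF_INET6, socket.SOCK_STREAM) as sock:
                sock.settimeout(timeout)
                sock.connect((address, port))
                return True
        except OSError:
            continue  # try the next resolved address
    return False


if __name__ == "__main__":
    print("IPv6 reachable:", can_connect_over_ipv6("ipv6.google.com"))
```

A result of True only shows that the TCP handshake over IPv6 succeeded. It does not validate the returned page content the way the check above does.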

Summary

By following this step-by-step guide, enabling the IPv4+IPv6 dual-stack feature should no longer be an obstacle to using an SF cluster. Because the SF cluster itself has a complicated design and this process changes multiple resources, please do not hesitate to contact Azure customer support for help if you run into any difficulty.
