Mount ADLS Gen2 or Blob Storage in Azure Databricks

Scenario:

Azure Databricks provides the core features of the Databricks platform, such as a web-based workspace for managing Spark clusters, notebooks, and data pipelines, along with Spark-based analytics and machine learning tools. It is fully integrated with Azure cloud services, providing native access to Azure Blob Storage, Azure Data Lake Storage, Azure SQL Database, and other Azure services. This blog shows an example of mounting Azure Blob Storage or Azure Data Lake Storage in the Databricks File System (DBFS) using two authentication methods: Access Key and SAS token.

Objective:

To become familiar with mounting storage in Databricks using the ABFS/WASB drivers and different authentication methods.

Pre-requisites:

For this example, you need:

- An Azure Databricks service (workspace).

- A Databricks cluster (compute).

- A Databricks notebook.

- An Azure Data Lake Storage Gen2 or Blob Storage account.

Steps to mount a storage container on the Databricks File System (DBFS):

- Create storage container and blobs.

- Mount with dbutils.fs.mount().

- Verify mount point with dbutils.fs.mounts().

- List the contents with dbutils.fs.ls().

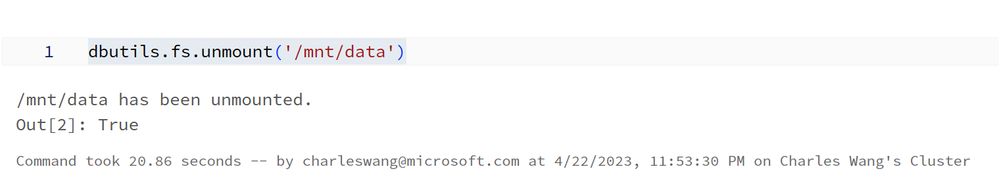

- Unmount with dbutils.fs.unmount().

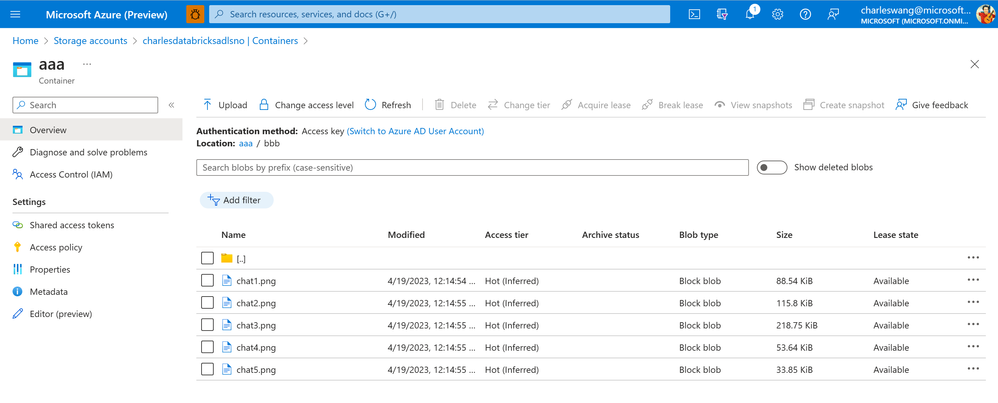

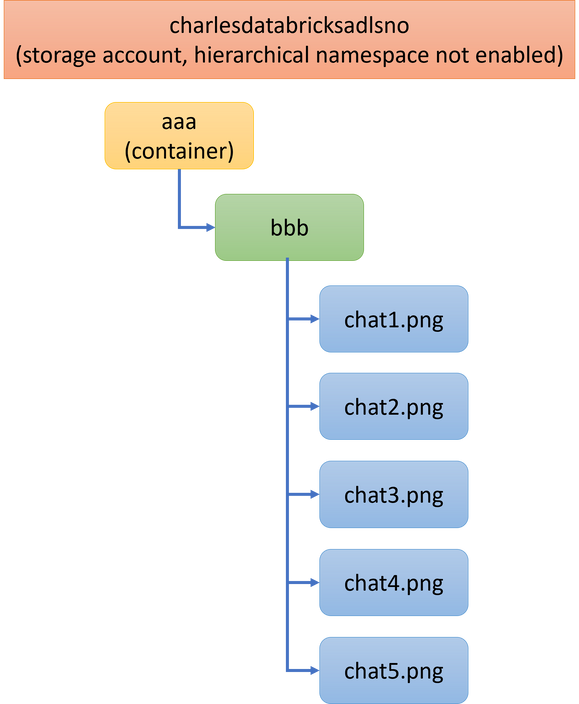

[STEP 1]: Create storage container and blobs

Below is the storage structure used in this example. I created a container “aaa” containing a virtual folder “bbb”, which holds 5 PNG files. The storage account “charlesdatabricksadlsno” is a Blob Storage account without a hierarchical namespace.

[STEP 2]: Mount with dbutils.fs.mount()

We can use the code snippet below to mount container “aaa” on Azure Databricks.

Some key points to note:

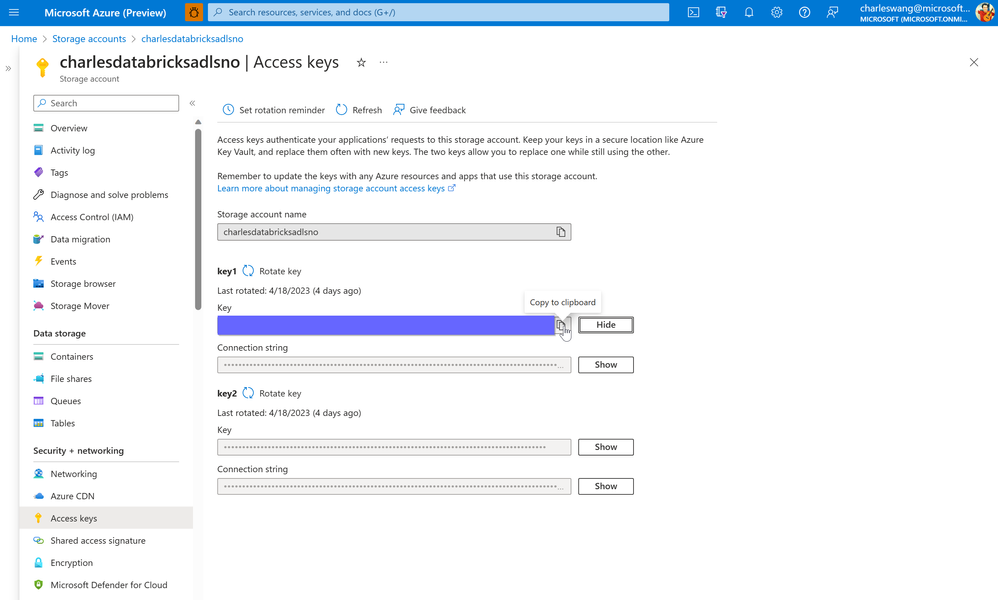

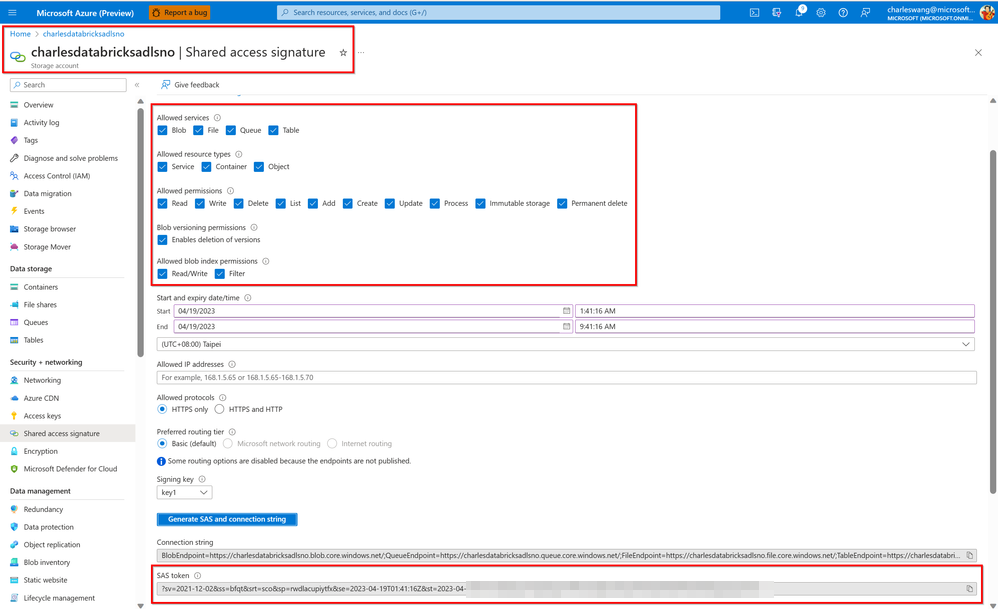

- I provide two authentication methods for the mount: Access Key and SAS token. You can use either one by choosing the first or the second line that starts with "extra_configs". Instructions for getting the Access Key and SAS token are given below.

- This example does not re-mount an existing mount point. To re-mount, first unmount it (see Step 5), then mount it again.
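A minimal sketch of such a mount cell, assuming the account “charlesdatabricksadlsno”, container “aaa”, and the /mnt/data mount point used in this example. It builds the WASBS source and one `extra_configs` dictionary per authentication method, then mounts only if the mount point does not exist yet. The config key patterns follow the Databricks documentation for Blob Storage mounts; the helper names (`wasbs_source`, `access_key_config`, `sas_config`, `mount_if_absent`) are hypothetical, and `dbutils` is passed in as a parameter only so the logic can be exercised outside a notebook.

```python
# Sketch only: helper names are hypothetical. In a Databricks notebook, pass the
# built-in `dbutils` object as the first argument of mount_if_absent().

STORAGE_ACCOUNT = "charlesdatabricksadlsno"
CONTAINER = "aaa"
MOUNT_POINT = "/mnt/data"


def wasbs_source(container: str, account: str) -> str:
    """Build the WASBS mount source for the Blob endpoint."""
    return f"wasbs://{container}@{account}.blob.core.windows.net/"


def access_key_config(account: str, access_key: str) -> dict:
    """Option 1: authenticate with a storage account Access Key (key1 or key2)."""
    return {f"fs.azure.account.key.{account}.blob.core.windows.net": access_key}


def sas_config(container: str, account: str, sas_token: str) -> dict:
    """Option 2: authenticate with a SAS token that covers the container."""
    return {f"fs.azure.sas.{container}.{account}.blob.core.windows.net": sas_token}


def mount_if_absent(dbutils, source: str, mount_point: str, extra_configs: dict) -> bool:
    """Mount `source` at `mount_point` unless that mount point already exists.

    Returns True if a new mount was created. An existing mount point is left
    untouched (no re-mount), matching the behavior described above.
    """
    existing = {m.mountPoint for m in dbutils.fs.mounts()}
    if mount_point in existing:
        return False
    dbutils.fs.mount(source=source, mount_point=mount_point, extra_configs=extra_configs)
    return True
```

In a notebook, a call might look like `mount_if_absent(dbutils, wasbs_source(CONTAINER, STORAGE_ACCOUNT), MOUNT_POINT, sas_config(CONTAINER, STORAGE_ACCOUNT, "<sas-token>"))`, swapping in `access_key_config(STORAGE_ACCOUNT, "<access-key>")` for the Access Key option.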

To get the Access Key, open the storage account in the Azure portal, go to Access keys, and copy either key1 or key2.

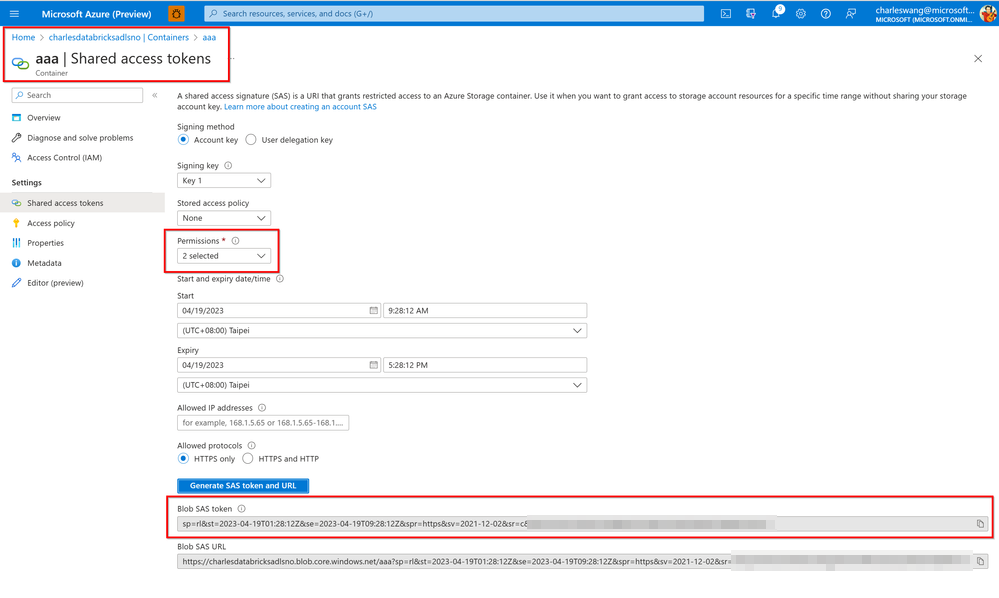

You can generate a SAS token in two ways:

- Generate an account-level SAS with all Allowed resource types (Service, Container, and Object) enabled.

- Generate a container-level SAS with Read and List permissions. For this example, I generated a SAS for container “aaa”, which I later mount on the Databricks cluster.

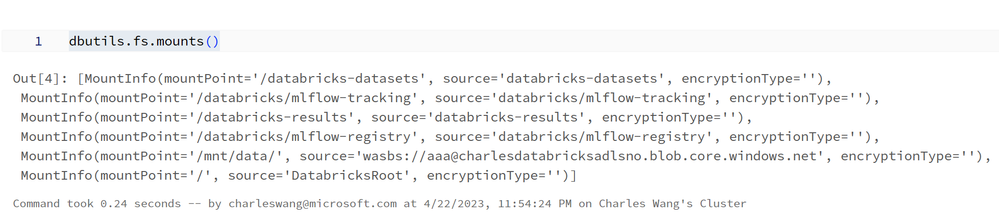
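Before mounting with a SAS token, it can help to confirm that the token grants what this example needs. The hypothetical helper below (not part of any Azure SDK) parses the token's query parameters and checks the standard SAS fields: `sp` for permissions (read `r` and list `l`), `srt` for an account SAS's allowed resource types, `sr` for a container-scoped service SAS, and `se` for the expiry time. It only inspects the token text; it cannot tell whether the signature is valid.

```python
from datetime import datetime, timezone
from typing import List, Optional
from urllib.parse import parse_qs


def check_sas_token(sas_token: str, now: Optional[datetime] = None) -> List[str]:
    """Return problems that would block a read/list mount; an empty list means none found.

    `now` should be timezone-aware; it defaults to the current UTC time.
    """
    # A SAS token is a URL query string, sometimes displayed with a leading "?".
    params = {key: values[0] for key, values in parse_qs(sas_token.lstrip("?")).items()}
    problems = []

    # sp = signed permissions; this example needs read (r) and list (l).
    perms = params.get("sp", "")
    for letter, label in (("r", "read"), ("l", "list")):
        if letter not in perms:
            problems.append(f"missing {label} permission (sp={perms!r})")

    # Account SAS: srt = allowed resource types (s=service, c=container, o=object).
    if "srt" in params and not {"s", "c", "o"} <= set(params["srt"]):
        problems.append(f"account SAS lacks some resource types (srt={params['srt']!r})")

    # Service SAS: sr = signed resource; "c" means the token is scoped to a container.
    if "sr" in params and params["sr"] != "c":
        problems.append(f"service SAS is not container-scoped (sr={params['sr']!r})")

    # se = expiry time in UTC, e.g. 2030-01-01T00:00:00Z.
    expiry = params.get("se")
    if expiry:
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at <= (now or datetime.now(timezone.utc)):
            problems.append(f"token expired at {expiry}")
    return problems
```

An empty result only means the token's fields look right for a read/list mount of container “aaa”; the mount itself is still the real test.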

[STEP 3]: Verify mount point (/mnt/data) with dbutils.fs.mounts()
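One way to check the mount programmatically is to scan `dbutils.fs.mounts()`, whose entries expose `mountPoint` and `source`. A small sketch, with `find_mount` as a hypothetical helper name and `dbutils` passed in explicitly:

```python
from typing import Optional, Tuple


def find_mount(dbutils, mount_point: str = "/mnt/data") -> Optional[Tuple[str, str]]:
    """Return (mountPoint, source) for `mount_point`, or None if nothing is mounted there."""
    wanted = mount_point.rstrip("/")  # tolerate "/mnt/data/" as input
    for info in dbutils.fs.mounts():  # each entry has mountPoint and source
        if info.mountPoint.rstrip("/") == wanted:
            return info.mountPoint, info.source
    return None
```

In a notebook, `find_mount(dbutils)` should return the /mnt/data mount point together with the wasbs:// source used in Step 2.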

[STEP 4]: List the contents with dbutils.fs.ls()
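Once /mnt/data is mounted, the virtual folder “bbb” can be listed like a regular directory. The sketch below keeps only the PNG files returned by `dbutils.fs.ls()`, whose entries carry `path`, `name`, and `size` fields; the helper name and the /mnt/data/bbb default are assumptions based on the layout in Step 1.

```python
from typing import List


def list_png_files(dbutils, folder: str = "/mnt/data/bbb") -> List[str]:
    """Return the sorted names of .png files directly under a mounted folder."""
    entries = dbutils.fs.ls(folder)  # one entry per file or subfolder
    return sorted(e.name for e in entries if e.name.lower().endswith(".png"))
```

With the storage layout above, `list_png_files(dbutils)` should return the 5 PNG file names, and `display(dbutils.fs.ls("/mnt/data/bbb"))` shows the full listing as a table.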

[STEP 5]: Unmount with dbutils.fs.unmount()
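Calling `dbutils.fs.unmount()` on a path that is not mounted may fail, so a guarded version is convenient when the notebook is re-run. A sketch, with `unmount_if_present` as a hypothetical helper:

```python
def unmount_if_present(dbutils, mount_point: str = "/mnt/data") -> bool:
    """Unmount `mount_point` if it is currently mounted; return whether it was."""
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        dbutils.fs.unmount(mount_point)
        return True
    return False
```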

Others:

- To use an ADLS Gen2 account as the mount source, replace the storage account name and the Access Key or SAS token in the mount step. You can keep using the Blob endpoint (blob.core.windows.net).

- If you want to take advantage of the hierarchical namespace features of ADLS Gen2, such as ACLs on files and folders, switch from WASBS (Windows Azure Storage Blob) with the Blob endpoint (blob.core.windows.net) to ABFS (Azure Blob File System) with the DFS endpoint (dfs.core.windows.net). The mount source changes from:

wasbs://<container-name>@<storage-account-name>.blob.core.windows.net/

to:

abfss://<container-name>@<storage-account-name>.dfs.core.windows.net/

- To avoid authentication errors on an existing mount point after the Access Key or SAS token is rotated, you can change the mount condition so that an existing mount point is unmounted first and then mounted again with the new credential.
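The rotation-safe mount condition and the WASBS/ABFS source switch described above can be sketched as follows. `mount_source` picks the WASBS/Blob or ABFSS/DFS form of the source URI, and `remount` unmounts an existing mount point before mounting again with the current credential. Both names are hypothetical; the `extra_configs` you pass depend on the authentication method and, for an abfss source, are not covered by this example.

```python
_ENDPOINTS = {"wasbs": "blob", "abfss": "dfs"}


def mount_source(container: str, account: str, scheme: str = "wasbs") -> str:
    """Build the mount source URI: wasbs uses the Blob endpoint, abfss the DFS endpoint."""
    if scheme not in _ENDPOINTS:
        raise ValueError(f"unsupported scheme {scheme!r}; expected 'wasbs' or 'abfss'")
    return f"{scheme}://{container}@{account}.{_ENDPOINTS[scheme]}.core.windows.net/"


def remount(dbutils, source: str, mount_point: str, extra_configs: dict) -> None:
    """Unmount `mount_point` if it exists, then mount `source` with the current credentials."""
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        dbutils.fs.unmount(mount_point)  # drop the mount that holds the old key or token
    dbutils.fs.mount(source=source, mount_point=mount_point, extra_configs=extra_configs)
```

Unlike the mount-only-if-absent approach in Step 2, this always re-creates the mount, so a rotated key or token takes effect the next time the cell runs.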

