Azure Storage | How to migrate Azure Queue Data from One Account to Another

Storage queues are similar to Service Bus queues. Their messages aren't designed for permanent storage the way blobs and files are, and they're deleted once the receiver processes them. Because queues follow a producer/consumer model and the data is transient, it may be easiest to recreate the queues in the target account and copy their messages across. Follow these steps to migrate Azure Queue data from one storage account to another:

- List all queues from the source storage account.

- Create queues with the same names in the target storage account.

- Read every message from the source and write them to the destination.
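The three steps above can be sketched as a small driver loop. This is a minimal Python sketch under stated assumptions, not a definitive implementation:

- `QueueAccount` is a hypothetical interface. Its method names are illustrative and not part of any Azure SDK; you would back it with REST calls or a client library.
- `InMemoryAccount` is a test double that stands in for a real account.
- Unlike the steps above, the sketch also deletes each source message right after its copy is written. This prevents the same message from being copied twice once its visibility timeout expires.
- It assumes nothing else reads the source queues during the migration, so an empty batch means a queue has been drained.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol, Tuple

# (message_id, pop_receipt, message_text), mirroring fields returned by Get Messages.
Message = Tuple[str, str, str]


class QueueAccount(Protocol):
    """Hypothetical minimal view of one storage account's Queue service."""

    def list_queues(self) -> List[str]: ...
    def create_queue(self, name: str) -> None: ...
    def get_messages(self, name: str, count: int) -> List[Message]: ...
    def put_message(self, name: str, text: str) -> None: ...
    def delete_message(self, name: str, message_id: str, pop_receipt: str) -> None: ...
    def delete_queue(self, name: str) -> None: ...


def migrate_all(source: QueueAccount, target: QueueAccount, batch_size: int = 32) -> Dict[str, int]:
    """Copy every queue and its messages from source to target; return copies per queue.

    Assumes no other consumer reads the source queues meanwhile, so an empty
    batch means the queue has been drained.
    """
    copied: Dict[str, int] = {}
    for name in source.list_queues():          # 1. list the source queues
        target.create_queue(name)              # 2. same name in the target account
        copied[name] = 0
        while batch := source.get_messages(name, batch_size):  # 3. read a batch
            for message_id, pop_receipt, text in batch:
                target.put_message(name, text)                        # write the copy first,
                source.delete_message(name, message_id, pop_receipt)  # then remove the original
                copied[name] += 1
        source.delete_queue(name)              # drop the drained source queue
    return copied


@dataclass
class InMemoryAccount:
    """Test double: each queue is a list of (message_id, text); no visibility timeouts."""

    queues: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)
    next_id: int = 0

    def list_queues(self) -> List[str]:
        return sorted(self.queues)

    def create_queue(self, name: str) -> None:
        self.queues.setdefault(name, [])

    def get_messages(self, name: str, count: int) -> List[Message]:
        return [(mid, "pop-receipt", text) for mid, text in self.queues[name][:count]]

    def put_message(self, name: str, text: str) -> None:
        self.next_id += 1
        self.queues[name].append((str(self.next_id), text))

    def delete_message(self, name: str, message_id: str, pop_receipt: str) -> None:
        self.queues[name] = [m for m in self.queues[name] if m[0] != message_id]

    def delete_queue(self, name: str) -> None:
        del self.queues[name]


if __name__ == "__main__":
    source = InMemoryAccount()
    source.create_queue("orders")
    for text in ["order-1", "order-2", "order-3"]:
        source.put_message("orders", text)
    target = InMemoryAccount()
    print(migrate_all(source, target, batch_size=2))  # {'orders': 3}
    print(source.queues)                              # {}
```

Copying before deleting means a crash between the two calls can leave a duplicate in the target but never loses a message. That is usually the safer trade-off for a one-off migration.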

Guidelines:

- Your client must have network access to both the source and destination storage accounts. To learn how to configure the network settings for each storage account, see Configure Azure Storage firewalls and virtual networks on Microsoft Learn.

- Append a SAS token to each REST API request URL as needed.

- The process described in this blog assumes that you develop an application of your choice using the Azure Storage client libraries, as described in Technical documentation on Microsoft Learn.

- Ensure that your application can access both the source and target storage accounts and is configured with the appropriate roles.

- The examples in this article assume that you authorize REST API calls to each storage account with a shared access signature (SAS) token.

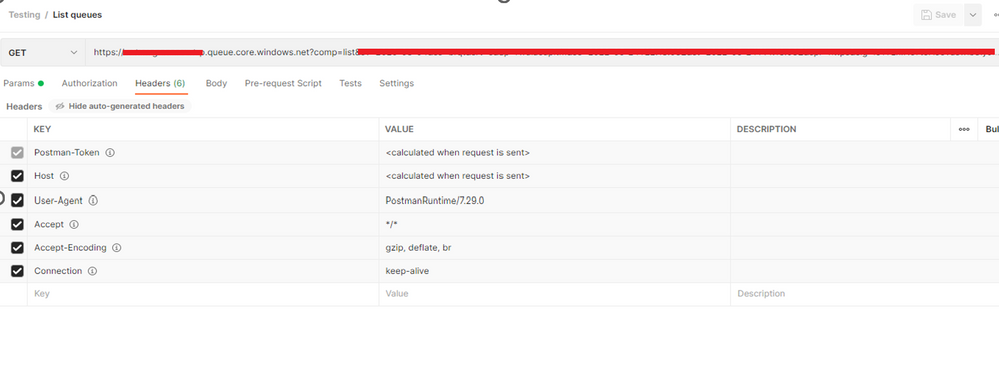

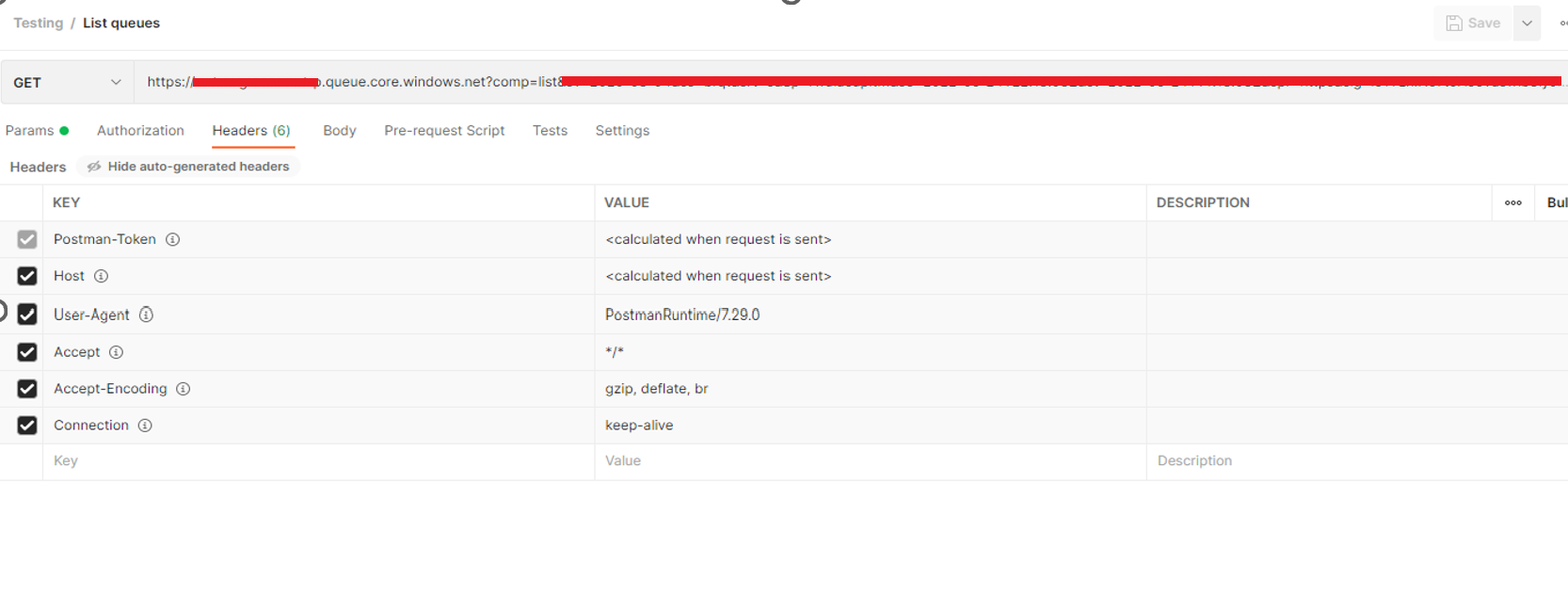
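Every request in this article is authorized with a SAS token. A small helper that appends the token to a request URL therefore keeps the later calls uniform. This is a minimal Python sketch using only the standard library. `with_sas` is a hypothetical helper name, and the token values in the example are placeholders, not a real SAS.

```python
from urllib.parse import urlsplit, urlunsplit


def with_sas(url: str, sas_token: str) -> str:
    """Append a SAS token (with or without its leading '?') to a request URL.

    Existing operation parameters such as comp=list stay ahead of the SAS parameters.
    """
    token = sas_token.lstrip("?")
    scheme, netloc, path, query, fragment = urlsplit(url)
    if token:
        query = f"{query}&{token}" if query else token
    return urlunsplit((scheme, netloc, path, query, fragment))


print(with_sas("https://myaccount.queue.core.windows.net/?comp=list", "?sv=...&sig=..."))
# https://myaccount.queue.core.windows.net/?comp=list&sv=...&sig=...
```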

- List all queues from the source storage account.

Request:

The List Queues operation lists all of the queues in a storage account. The request can be constructed as follows. HTTPS is recommended. Replace myaccount with the name of your source storage account, and append your SAS token:

`GET https://myaccount.queue.core.windows.net/?comp=list`

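Response:

A successful operation returns status code 200 (OK). The response body is an XML document that lists the queues in the account.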

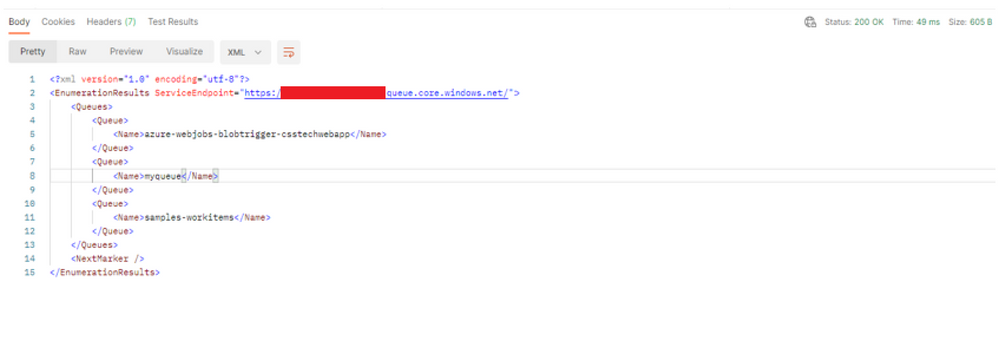
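A source account can hold more queues than a single List Queues response returns. In that case the response carries a `NextMarker` value, which you pass back as the `marker` parameter. The Python sketch below uses only the standard library. It builds the request URL, parses the XML response and follows the markers until the last page. The function names are hypothetical, and `sas_token` is assumed to be a SAS for the source account that allows listing queues.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple
from urllib.parse import urlencode


def list_queues_url(account: str, sas_token: str, marker: Optional[str] = None) -> str:
    """Build a List Queues URL (GET ...?comp=list), optionally continuing from a marker."""
    params = {"comp": "list"}
    if marker:
        params["marker"] = marker
    return f"https://{account}.queue.core.windows.net/?{urlencode(params)}&{sas_token.lstrip('?')}"


def parse_list_queues(xml_body: bytes) -> Tuple[List[str], Optional[str]]:
    """Return the queue names on this page and the NextMarker (None on the last page)."""
    root = ET.fromstring(xml_body)
    names = [queue.findtext("Name", "") for queue in root.iter("Queue")]
    return names, root.findtext("NextMarker") or None


def list_all_queues(account: str, sas_token: str) -> List[str]:
    """Call List Queues repeatedly until no NextMarker is returned."""
    names: List[str] = []
    marker: Optional[str] = None
    while True:
        with urllib.request.urlopen(list_queues_url(account, sas_token, marker)) as resp:
            page, marker = parse_list_queues(resp.read())
        names.extend(page)
        if marker is None:
            return names
```

A call such as `list_all_queues("mysourceaccount", source_sas)` returns the names to recreate in the target account. Both arguments are placeholders.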

- Create queues with the same names in the target storage account.

The Create Queue operation creates a queue in a storage account.

Request:

The Create Queue request can be constructed as follows. HTTPS is recommended. Replace myaccount with the name of your target storage account, and myqueue with the name of the queue:

`PUT https://myaccount.queue.core.windows.net/myqueue`

Response:

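The response includes an HTTP status code and a set of response headers. A successful operation returns status code 201 (Created). If a queue with the same name and the same metadata already exists, the operation returns 204 (No Content) instead. If the existing queue has different metadata, it returns 409 (Conflict).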

For details about the response headers, see the Create Queue REST API reference on Microsoft Learn.
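Creating each queue in the target account is a `PUT` request with no body. The following is a minimal Python sketch; the function names are hypothetical, and `sas_token` is assumed to be a SAS for the target account that permits creating queues. The sketch accepts both 201 and 204 (the queue already exists with the same metadata), so rerunning the migration does no harm. `urlopen` raises `HTTPError` for error responses such as 409.

```python
import urllib.request


def queue_url(account: str, queue: str, sas_token: str) -> str:
    """URL of a single queue with the SAS token appended."""
    return f"https://{account}.queue.core.windows.net/{queue}?{sas_token.lstrip('?')}"


def create_queue(account: str, queue: str, sas_token: str) -> int:
    """Create the queue; return 201 (created) or 204 (already existed, same metadata)."""
    request = urllib.request.Request(queue_url(account, queue, sas_token), method="PUT")
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses such as 409 Conflict.
    with urllib.request.urlopen(request) as resp:
        if resp.status not in (201, 204):
            raise RuntimeError(f"unexpected status {resp.status} creating queue {queue!r}")
        return resp.status
```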

- Read every message from the source and write it to the destination.

- Read messages from the source storage account.

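The Get Messages operation retrieves one or more messages from the front of the queue. It doesn't remove them. Each retrieved message becomes invisible to other clients for the visibility timeout (30 seconds by default). It then reappears in the queue unless it is deleted with the pop receipt returned in the response. A single request returns at most 32 messages.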

Request:

The Get Messages request can be constructed as follows. HTTPS is recommended. Replace myaccount with the name of your source storage account, and myqueue with the name of your queue:

`GET https://myaccount.queue.core.windows.net/myqueue/messages`

Response:

The response includes an HTTP status code and a set of response headers. A successful operation returns status code 200 (OK).
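The sketch below, in Python with only the standard library, does three things:

- builds a Get Messages URL;
- parses the `QueueMessagesList` response;
- deletes a copied message by its ID and pop receipt, using the Delete Message operation, which this article does not otherwise cover.

The function names, the 300-second visibility timeout and the batch size of 32 (the maximum per request) are illustrative choices. Pick a timeout long enough to copy a whole batch before its messages become visible again.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import List, NamedTuple
from urllib.parse import quote, urlencode


class QueueMessage(NamedTuple):
    message_id: str
    pop_receipt: str
    text: str
    dequeue_count: int


def get_messages_url(account: str, queue: str, sas_token: str,
                     count: int = 32, visibility_timeout: int = 300) -> str:
    """GET .../myqueue/messages, retrieving up to `count` (1-32) messages."""
    if not 1 <= count <= 32:
        raise ValueError("numofmessages must be between 1 and 32")
    params = urlencode({"numofmessages": count, "visibilitytimeout": visibility_timeout})
    return f"https://{account}.queue.core.windows.net/{queue}/messages?{params}&{sas_token.lstrip('?')}"


def parse_messages(xml_body: bytes) -> List[QueueMessage]:
    """Parse a QueueMessagesList body; ElementTree un-escapes the XML in MessageText."""
    root = ET.fromstring(xml_body)
    return [
        QueueMessage(
            message_id=m.findtext("MessageId", ""),
            pop_receipt=m.findtext("PopReceipt", ""),
            text=m.findtext("MessageText", ""),
            dequeue_count=int(m.findtext("DequeueCount", "0")),
        )
        for m in root.iter("QueueMessage")
    ]


def get_messages(account: str, queue: str, sas_token: str, count: int = 32) -> List[QueueMessage]:
    with urllib.request.urlopen(get_messages_url(account, queue, sas_token, count)) as resp:
        return parse_messages(resp.read())


def delete_message_url(account: str, queue: str, sas_token: str, message: QueueMessage) -> str:
    """DELETE .../myqueue/messages/{messageid}?popreceipt=... for one retrieved message."""
    query = urlencode({"popreceipt": message.pop_receipt})  # pop receipts may contain + / =
    return (f"https://{account}.queue.core.windows.net/{queue}/messages/"
            f"{quote(message.message_id)}?{query}&{sas_token.lstrip('?')}")


def delete_message(account: str, queue: str, sas_token: str, message: QueueMessage) -> None:
    request = urllib.request.Request(delete_message_url(account, queue, sas_token, message), method="DELETE")
    with urllib.request.urlopen(request) as resp:
        if resp.status != 204:
            raise RuntimeError(f"unexpected status {resp.status} deleting a message")
```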

- Write messages to the target storage account.

The Put Message operation adds a new message to the back of the message queue. A visibility timeout can also be specified to make the message invisible until the visibility timeout expires. A message must be in a format that can be included in an XML request with UTF-8 encoding. The encoded message can be up to 64 KiB in size for versions 2011-08-18 and newer, or 8 KiB in size for previous versions.

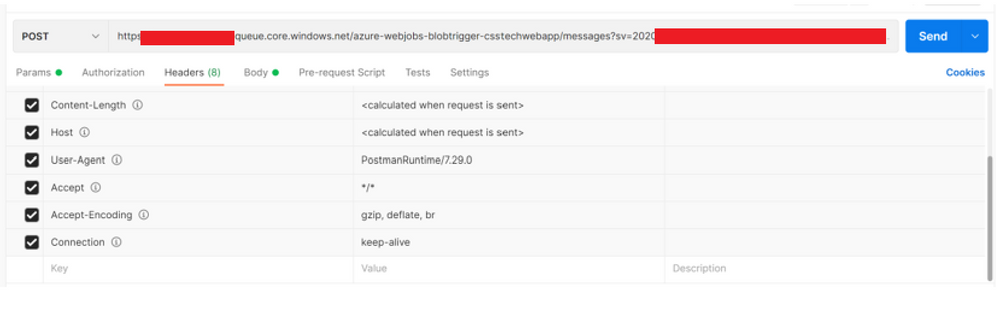

Request:

The Put Message request can be constructed as follows. HTTPS is recommended. Replace myaccount with the name of your target storage account, and myqueue with the name of your queue:

`POST https://myaccount.queue.core.windows.net/myqueue/messages`

Request Body:

The body of the request contains the message data in the following XML format. Note that the message content must be in a format that may be encoded with UTF-8.

`<QueueMessage><MessageText>message-content</MessageText></QueueMessage>`

Response:

The response includes an HTTP status code and a set of response headers. A successful operation returns status code 201 (Created).

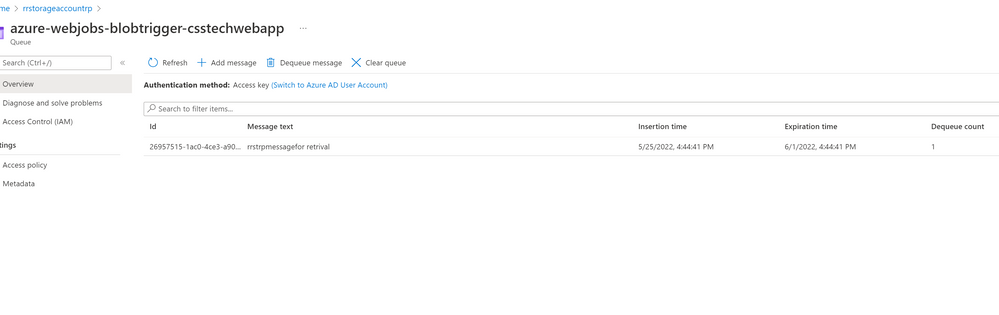

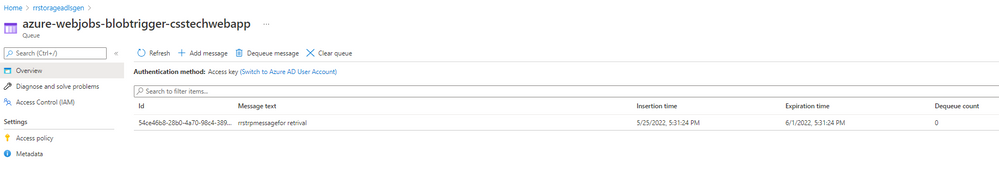
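Writing a copy is a `POST` whose body is the `QueueMessage` XML described above. This Python sketch uses only the standard library; the function names are hypothetical. A few notes on its behavior:

- Building the body with ElementTree escapes characters such as `<` and `&`. Text parsed from a Get Messages response can therefore be written back unchanged.
- Any encoding the original producer applied (for example Base64) is preserved, because the text is copied verbatim.
- The size check is only a rough client-side pre-check on the UTF-8 length of the text, assuming service version 2011-08-18 or newer. The service remains the authority.
- The copy receives a new message ID and insertion time. Unless you pass `messagettl`, the service applies its default time-to-live of 7 days.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Optional

MAX_MESSAGE_BYTES = 64 * 1024  # 64 KiB for service versions 2011-08-18 and newer


def put_message_body(text: str) -> bytes:
    """Build <QueueMessage><MessageText>...</MessageText></QueueMessage> with XML escaping."""
    if len(text.encode("utf-8")) > MAX_MESSAGE_BYTES:
        raise ValueError("message text exceeds 64 KiB")
    root = ET.Element("QueueMessage")
    ET.SubElement(root, "MessageText").text = text
    return ET.tostring(root, encoding="utf-8")  # no XML declaration is emitted for utf-8


def put_message_url(account: str, queue: str, sas_token: str,
                    ttl_seconds: Optional[int] = None) -> str:
    """POST .../myqueue/messages, optionally with an explicit messagettl in seconds."""
    ttl = f"messagettl={ttl_seconds}&" if ttl_seconds is not None else ""
    return f"https://{account}.queue.core.windows.net/{queue}/messages?{ttl}{sas_token.lstrip('?')}"


def put_message(account: str, queue: str, sas_token: str, text: str,
                ttl_seconds: Optional[int] = None) -> None:
    request = urllib.request.Request(
        put_message_url(account, queue, sas_token, ttl_seconds),
        data=put_message_body(text),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        if resp.status != 201:
            raise RuntimeError(f"unexpected status {resp.status} writing to queue {queue!r}")
```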

Once the POST operations succeed, you can verify that the queues and messages were copied correctly by checking both the source and target storage accounts.

Source Storage account:

Target Storage account:
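You can also check the copy programmatically instead of visiting each storage account. One option is the Get Queue Metadata operation (`GET .../myqueue?comp=metadata`), whose `x-ms-approximate-messages-count` response header reports an approximate message count. The following Python sketch wraps that header in hypothetical helper functions. The count is approximate, so treat a match between source and target as a sanity check rather than proof that every message was copied.

```python
import urllib.request
from typing import Mapping


def queue_metadata_url(account: str, queue: str, sas_token: str) -> str:
    """GET .../myqueue?comp=metadata with the SAS token appended."""
    return f"https://{account}.queue.core.windows.net/{queue}?comp=metadata&{sas_token.lstrip('?')}"


def count_from_headers(headers: Mapping[str, str]) -> int:
    """Read the approximate message count from the response headers."""
    return int(headers["x-ms-approximate-messages-count"])


def approximate_message_count(account: str, queue: str, sas_token: str) -> int:
    with urllib.request.urlopen(queue_metadata_url(account, queue, sas_token)) as resp:
        return count_from_headers(resp.headers)  # HTTP header lookup is case-insensitive
```

For example, you could record the source count before copying and compare it with the target count afterwards.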

- After every message has been written to the destination queue (each Put Message POST request returned 201 Created), delete the queue from the source storage account.

The Delete Queue operation permanently deletes the specified queue.

Request:

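You can construct the Delete Queue request as follows. HTTPS is recommended. Replace myaccount with the name of your source storage account, and myqueue with the name of the queue:

`DELETE https://myaccount.queue.core.windows.net/myqueue`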

Response:

A successful operation returns status code 204 (No Content). For information about status codes, see Status and error codes.
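Delete Queue permanently removes the queue together with any messages still in it. A cautious script therefore issues it only after the copy has been checked. This Python sketch uses only the standard library, and its function names are hypothetical. It sends the `DELETE` request and treats anything other than 204 (No Content) as a failure. The `confirmed` flag is an illustrative safeguard, not part of the REST API.

```python
import urllib.request


def delete_queue_url(account: str, queue: str, sas_token: str) -> str:
    """DELETE .../myqueue with the SAS token appended."""
    return f"https://{account}.queue.core.windows.net/{queue}?{sas_token.lstrip('?')}"


def delete_queue(account: str, queue: str, sas_token: str, confirmed: bool = False) -> None:
    """Permanently delete a source queue; refuses to run unless confirmed=True."""
    if not confirmed:
        raise RuntimeError(f"refusing to delete queue {queue!r} without confirmed=True")
    request = urllib.request.Request(delete_queue_url(account, queue, sas_token), method="DELETE")
    with urllib.request.urlopen(request) as resp:
        if resp.status != 204:
            raise RuntimeError(f"unexpected status {resp.status} deleting queue {queue!r}")
```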
