Azure DNS Private Resolver topologies

Introduction

Before diving into Azure DNS Private Resolver (ADPR), let's go back in time a little to understand its value. Before ADPR, companies that had to deal with hybrid DNS resolution had to host custom DNS servers in Azure. Most of them hosted at least a pair of DNS servers, or extended their Infoblox (or similar technology) into the cloud. This was necessary because DNS zones in Azure could not be used as forwarders (which is still the case today), and Azure DNS itself is only reachable from within an Azure virtual network.

In mid-2023, most production setups still rely on custom DNS servers in Azure. There is nothing wrong with that, but it incurs more operational work, and you must also pay particular attention to security, since DNS is a common attack vector. Very recently, Microsoft launched ADPR, a fully managed service that lets you avoid self-hosting your own DNS servers.

The official documentation https://learn.microsoft.com/en-us/azure/architecture/example-scenario/networking/azure-dns-private-resolver walks you through some basic scenarios, while this other article https://learn.microsoft.com/en-us/azure/dns/private-resolver-architecture is a little more focused on architecture styles. As is often the case, however, real-world situations can be more complex: they may require a hard split between production and non-production at all levels, including DNS, and be based on multiple hubs instead of a single hub. That's what I will tackle in this article.

First things first, back to basics

Let's first have a quick look at Azure DNS itself, focusing only on private DNS resolution. The container of any private service or machine is a virtual network (VNET). Whenever you create a VNET, you have two options, DNS-wise:

- Use Azure DNS (168.63.129.16)

- Use custom DNS, which allows you to cross the VNET boundaries

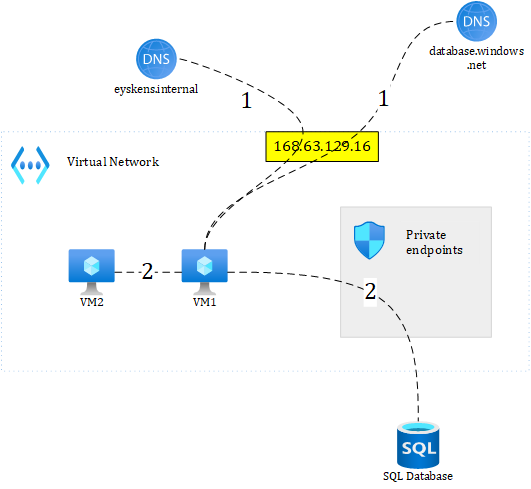

When you use Azure DNS, it is expected that any DNS query can be resolved locally in the VNET, because DNS traffic is sent to 168.63.129.16, which isn't visible outside of the VNET. So, for example, the following setup would work just fine:

Figure 1: basic DNS example

Here, VM1 connects directly to Azure DNS to resolve both a custom domain and a private link enabled service. Both domain names are managed with private DNS zones that are attached to the VNET. The first step is the DNS resolution itself, and the second is the connection to the target, which in this example is either a virtual machine or a database. This basic example shows how DNS resolution works with Azure DNS. Of course, reality often proves to be more complex than that, but the fundamentals are there.

Basic Hub & Spoke setup

In a basic and typical hub & spoke setup, consisting of a single hub with no split between production and non-production, things can already be more complicated but still rather easy to understand. The previous example could also work in a Hub & Spoke setup:

Figure 2: unlikely Hub & Spoke DNS setup

Private DNS zones can be distributed and attached to all your spokes as well as to your hub. You may even decide to let spokes work with dedicated zones. In such a distributed setup, it can become hard to manage DNS at scale, since you might lose track of all the domains in use within your company. Moreover, with Private DNS zones alone, whether distributed or not, you wouldn't be able to resolve on-premises domains from Azure, or Azure domains from your on-premises environment, since your on-premises DNS servers cannot reach 168.63.129.16.

So, prior to ADPR, most companies would end up with something like this, in order to deal with hybrid DNS resolution:

Figure 3: common DNS setup

This drawing shows a centralized approach with self-hosted DNS servers in the Hub. Every spoke's DNS traffic is sent to the Hub. The Hub's DNS servers forward on-premises domains to the on-premises DNS servers and vice versa. This is probably still one of the most common topologies in use today. The orange flow in this diagram shows how to resolve an on-premises domain from Azure. The black flow shows how to resolve a private link enabled database from within Azure. Finally, the red flow shows how to resolve an Azure domain from on-premises. In this setup, even though we're using custom DNS, Azure's default DNS is still in use, because our custom DNS servers forward Azure-specific DNS queries to 168.63.129.16.
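
To make the forwarding decision in Figure 3 a bit more concrete, here is a minimal Python sketch of what the Hub's DNS servers conceptually do: queries for on-premises domains go to the on-premises DNS servers, and everything else goes to Azure's default DNS. The domain corp.contoso.com, the 10.10.0.x addresses and the function name are hypothetical placeholders of mine. A real DNS server does this through its conditional forwarder configuration, not through code like this.

```python
AZURE_DNS = "168.63.129.16"  # Azure's default DNS, as used throughout this article

# Hypothetical conditional forwarders: domain suffix -> on-premises DNS servers.
CONDITIONAL_FORWARDERS = {
    "corp.contoso.com": ["10.10.0.4", "10.10.0.5"],
}


def pick_forwarders(query_name, forwarders=CONDITIONAL_FORWARDERS):
    """Return the servers a hub DNS server would forward this query to.

    The longest matching domain suffix wins; anything that matches no
    conditional forwarder is sent to Azure's default DNS.
    """
    name = query_name.rstrip(".").lower()
    best = None
    for suffix in forwarders:
        # Match on whole DNS labels only, so "notcorp.contoso.com"
        # does not match the "corp.contoso.com" suffix.
        if name == suffix or name.endswith("." + suffix):
            if best is None or len(suffix) > len(best):
                best = suffix
    return forwarders[best] if best is not None else [AZURE_DNS]


if __name__ == "__main__":
    for fqdn in ("app1.corp.contoso.com", "db.privatelink.database.windows.net"):
        print(fqdn, "->", pick_forwarders(fqdn))
```

The first name is handed to the on-premises servers (the orange flow), while the private link name falls through to 168.63.129.16 (the black flow).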

The only downside of this approach is that you have to manage the self-hosted servers (upgrades, scaling, security, etc.). Let us now see how we can introduce ADPR.

ADPR to the rescue

The value proposition of ADPR is to let Microsoft manage the underlying DNS infrastructure. ADPR is very easy to work with, and can be summarized in a few lines:

- ADPR's inbound endpoints are used to forward traffic from your on-premises environment (or other clouds) to Azure. They can also be used for Azure-to-Azure traffic, as we'll see later.

- ADPR's outbound endpoints are used to resolve non-Azure domains.

- DNS forwarding rulesets let you define explicit DNS rules to deal with both Azure and non-Azure domains.

- ADPR automatically falls back to Azure DNS.

ADPR and its associated components allow you to work with either a distributed or a centralized approach. I will not elaborate on this, since the Microsoft online docs already explain it, so I'll just keep using a centralized approach.

The below diagram does exactly the same thing as the previous one (Figure 3), using ADPR:

Figure 4: simple centralized setup with ADPR instead of VMs

Flows are illustrated using the same colors as before. This is very similar to the self-hosted approach. The only difference is that you use DNS forwarding rulesets to let ADPR know where to send non-Azure related domains. This is all great and easy but as I said before, more and more companies consider security in the Cloud as a very important topic, and want to have a hard split between production and non-production. This is what we will look at next!

Hard split between production and non-production and the Private Link challenge

Before talking about how to use ADPR in a highly segregated environment, it is important to highlight Private Link's biggest shortcoming in that respect. Some organizations also want to split their DNS infrastructure. However, while this might sound simple enough, a major downside of Azure Private Link is that it only has a single domain name per type of PaaS resource, no matter which environment (DEV, TEST, ACC, PROD) it is used for. Let's illustrate this with an example. Say you have two SQL databases, one for dev and one for prod (to make our life easy :)):

- dev-db.database.windows.net ==> CNAME ==> dev-db.privatelink.database.windows.net

- db.database.windows.net ==> CNAME ==> db.privatelink.database.windows.net

Microsoft adds the CNAME record whenever private link is enabled for a given resource. This is totally transparent for the Cloud consumer. But, as you can see, the domain is the same for both the non-prod and prod databases, making it impossible to distinguish the environment from the domain name itself.
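
The collision can be illustrated with a few lines of Python. This is only a sketch that mimics the naming pattern of the two example records above. The helper names are mine, and the string manipulation is an assumption made for illustration, not a description of how Azure generates these records.

```python
def private_link_cname(public_fqdn):
    """Mimic the CNAME target from the examples above:
    dev-db.database.windows.net -> dev-db.privatelink.database.windows.net
    """
    host, _, domain = public_fqdn.partition(".")
    return f"{host}.privatelink.{domain}"


def private_link_zone(public_fqdn):
    """Return the DNS zone that would hold the private link record."""
    _, _, domain = public_fqdn.partition(".")
    return f"privatelink.{domain}"


if __name__ == "__main__":
    for fqdn in ("dev-db.database.windows.net", "db.database.windows.net"):
        print(fqdn, "->", private_link_cname(fqdn), "in zone", private_link_zone(fqdn))
```

Both databases end up in the same privatelink.database.windows.net zone, so a forwarder that matches on the domain name alone cannot tell dev from prod.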

The recent release of ADPR does not provide any magic to work around this issue. This constrains the way we can design our DNS infrastructure, for both our Cloud and our on-premises environments.

In a nutshell, here are the only three possibilities I see (feel free to add more in the comments):

1. You have an end-to-end hard split between production and non-production, including your on-premises DNS environment. This is the easiest setup, since your on-premises non-production DNS servers can forward to the inbound endpoint of Azure's non-prod resolver, and your on-premises production DNS servers can forward to the inbound endpoint of Azure's production resolver.

2. You only have the hard split in Azure but not on-premises. This use case leads to extra complexity since your single on-premises environment's DNS forwarders can only forward to one Azure destination, because of the single domain name per private link enabled PaaS resource. So, if an on-premises user wants to resolve abc.database.windows.net, your single DNS environment will only be able to forward to a single destination in Azure...

3. You have a smart DNS technology on-premises that is able to conditionally forward traffic by matching the source IP of the client with the expected destination (prod or non-prod in Azure). This is out of scope of this post.

Let us examine a possible design for the first two options, starting with option 1, an end-to-end hard split including your on-premises environment.

Here is how you could deal with option 1 (flows removed for clarity):

Figure 5: hard split between production and non-production

For the sake of simplicity, I have grouped all the non-production environments into a single "non-production" one. You might want to go even further and follow the traditional DTAP approach for your DNS, but I think this is a bit overkill. You simply replicate each environment, with its own dedicated components, including the Private DNS zones intended for private link. In this case, there would be no collision between production and non-production, since your on-premises non-prod and prod DNS servers can simply forward to their corresponding inbound endpoint in Azure. This also lets you split the RBAC of both the private DNS zones and the ADPRs. So, both connectivity and RBAC are highly segregated.

As I said, option 1 is rather easy. Let us now explore option 2, where you only have a hard split between prod and non-prod in Azure but not on-premises.

Here is a diagram showing how to achieve this:

We try to maximize segregation in Azure while having a single environment on-premises. So, we end up with:

- Each environment in Azure has its own hub to ensure a hard segregation between production and non-production. In reality, you'd have an NVA or an Azure Firewall in each hub (same for all other diagrams of course).

- Each environment in Azure has its own dedicated ADPR as well as private DNS zones, but only for non-private-link domains.

- Because your single on-premises DNS infrastructure can only send everything destined to abc.database.windows.net to a single destination, you need to provide that single destination, which is neither production nor non-production. To accommodate this, I have created a Common Services VNET that is peered with both hubs. Notice that this is just a VNET without an NVA/Azure Firewall inside. The only things you share are the ADPR that handles private-link-only traffic and the private DNS zones that hold the private link records. To make this VNET accessible from your on-premises environment, you will need a VPN gateway and/or ExpressRoute connection. The alternative is to route traffic through either the prod or the non-prod hub. I have colored private link related DNS resolution, from all sources, in red. Both the prod and non-prod hubs, as well as the on-premises environment, forward private link traffic to the inbound endpoint of the Common Services ADPR.
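
The forwarding logic of this design can be summarized in a small Python model. It is a sketch under stated assumptions, not an ADPR configuration: the inbound endpoint IP 10.100.0.4, the source names, the example domain prod.contoso.internal and the string returned for on-premises defaults are all hypothetical, and I only model the database.windows.net domain from the example above.

```python
AZURE_DNS = "168.63.129.16"
COMMON_SERVICES_INBOUND = "10.100.0.4"  # hypothetical inbound endpoint IP

# Per-source forwarding rules: domain suffix -> next DNS hop.
# All three sources send private link enabled domains to the same place.
FORWARDING = {
    "on-premises": {"database.windows.net": COMMON_SERVICES_INBOUND},
    "prod-hub": {"database.windows.net": COMMON_SERVICES_INBOUND},
    "non-prod-hub": {"database.windows.net": COMMON_SERVICES_INBOUND},
}

# Where a query goes when no rule matches (assumption for illustration).
DEFAULTS = {
    "on-premises": "on-premises DNS",
    "prod-hub": AZURE_DNS,
    "non-prod-hub": AZURE_DNS,
}


def next_hop(source, query_name):
    """Return where `source` sends a query for `query_name`."""
    name = query_name.rstrip(".").lower()
    rules = FORWARDING[source]
    matches = [s for s in rules if name == s or name.endswith("." + s)]
    if matches:
        return rules[max(matches, key=len)]  # most specific suffix wins
    return DEFAULTS[source]


if __name__ == "__main__":
    for source in FORWARDING:
        print(source, "->", next_hop(source, "abc.database.windows.net"))
```

Whatever the source, abc.database.windows.net converges on the Common Services inbound endpoint, while other names in each hub are still resolved by that environment's own DNS components.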

This scenario is still rather simple because we only have a single hub per environment. Beware that you might be confronted with situations where more than one hub per environment is used, which makes things even harder than the use case I showed here. I hope this helps you tackle scenarios that are a little more advanced than the ones highlighted in the official docs.
