Securing Network Egress in Azure Container Apps

Introduction

Since its inception nearly two years ago, Azure Container Apps (ACA) has added significant features that make it a relevant container hosting platform. Built atop Kubernetes, Azure Container Apps is a fully managed Platform-as-a-Service that lets teams focus on the business value of their container workloads instead of being mired in infrastructure management. Many of my colleagues and I believe that Azure Container Apps has grown into a viable alternative to other, more specialized hosting platforms in Azure for many container workloads. Customers no longer must learn the ins and outs of Kubernetes, cluster management, Kubernetes versions, and so on. The gap to Azure Kubernetes Service (AKS) has narrowed significantly, and while the two products are not intended to compete, comparisons between them are commonplace.

A barrier to entry, however, has been the inability of Azure Container Apps to restrict outbound traffic from containers, even when deployed into a virtual network (VNet). Without control over whether a container can connect to the internet, customers understandably passed on adoption.

This limitation was removed in August 2023, when we announced the general availability of user defined routes (UDR). This impactful feature addition motivated the following article.

Acknowledgement

This article would not have been possible without the diligent research and proof of concept by Steve Griffith. In addition to this article, I encourage you to explore his aca-egress-lockdown repository on GitHub.

Pieces of the Puzzle

In addition to an Azure Container App Environment with a container app, we need a virtual network, a route table for our user defined routes, an Azure Firewall instance, and a Log Analytics Workspace. We will set up everything sequentially using the Azure CLI. I encourage you to use Azure Cloud Shell for maximum compatibility, since it comes with an up-to-date Azure CLI.
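The order of the steps that follow matters because several resources depend on values produced by earlier ones. The sketch below models those dependencies with Python's standard `graphlib` module and derives one valid creation order from them. The node labels are hypothetical and are not Azure resource names. The edges are our own reading of this tutorial, not metadata from Azure.

```python
from graphlib import TopologicalSorter

# Hypothetical labels for this tutorial's steps; each maps to the steps it
# depends on. The edges are our reading of the article, not Azure metadata.
DEPENDENCIES: dict[str, set[str]] = {
    "resource-group": set(),
    "vnet-and-subnets": {"resource-group"},
    "firewall-public-ips": {"resource-group"},
    "firewall": {"vnet-and-subnets", "firewall-public-ips"},
    "firewall-rules": {"firewall"},
    "route-table": {"firewall"},  # next hop is the firewall's private IP
    "route-table-association": {"route-table", "vnet-and-subnets"},
    "log-analytics-workspace": {"resource-group"},
    "firewall-diagnostics": {"firewall", "log-analytics-workspace"},
    "containerapp-environment": {"route-table-association"},
    "workload-profile": {"containerapp-environment"},
    # Image pulls traverse the firewall, so the allow rules must exist first.
    "container-app": {"workload-profile", "firewall-rules"},
}


def creation_order(deps: dict[str, set[str]]) -> list[str]:
    """Return one order in which every step comes after all of its dependencies."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for number, step in enumerate(creation_order(DEPENDENCIES), start=1):
        print(f"{number:2}. {step}")
```

Every order that `static_order()` returns respects all edges. For example, the route table can only be created once the firewall, and therefore its private IP address, exists.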

Laying the Groundwork

Since this GA release is very new (August 30, 2023), you may need to upgrade the Azure CLI, for example with `az upgrade`.

We start by setting our variables, followed by creating the resource group. Resource names generally follow the Cloud Adoption Framework (CAF) naming conventions.
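To illustrate the convention, here is a small sketch that builds names from the CAF resource-type abbreviations for the resources used in this tutorial. The `<abbreviation>-<workload>-<environment>-<region>` pattern and the sample values (`egress`, `dev`, `eastus`) are assumptions for illustration. Adapt them to your own naming standard.

```python
# CAF resource-type abbreviations for the resources in this tutorial.
CAF_ABBREVIATIONS = {
    "resource_group": "rg",
    "virtual_network": "vnet",
    "subnet": "snet",
    "firewall": "afw",
    "public_ip": "pip",
    "route_table": "rt",
    "log_analytics_workspace": "log",
    "container_apps_environment": "cae",
    "container_app": "ca",
}


def caf_name(resource_type: str, workload: str, env: str, region: str) -> str:
    """Join abbreviation, workload, environment and region, e.g. 'rg-egress-dev-eastus'.

    The component order is an assumed pattern, not a CAF requirement.
    """
    prefix = CAF_ABBREVIATIONS[resource_type]
    return "-".join([prefix, workload, env, region]).lower()


if __name__ == "__main__":
    # Hypothetical workload, environment and region values.
    for resource_type in CAF_ABBREVIATIONS:
        print(caf_name(resource_type, "egress", "dev", "eastus"))
```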

Next, the VNet gets created with two subnets for the Azure Firewall and one subnet for the Azure Container Apps Environment. The dedicated Azure Container Apps tier can use subnets as small as a /27 CIDR.
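The sketch below shows how the three subnets can be carved out of the VNet without overlap, using Python's `ipaddress` module. It assumes a 10.0.0.0/16 address space. `AzureFirewallSubnet` and `AzureFirewallManagementSubnet` are the fixed names Azure Firewall expects, each at least a /26; the management subnet is needed for the Basic tier. `snet-aca` is a hypothetical name for the Container Apps subnet. The sketch also accounts for the five addresses Azure reserves in every subnet.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves five addresses in every subnet


def plan_subnets(vnet_cidr: str, prefixes: dict[str, int]) -> dict[str, ipaddress.IPv4Network]:
    """Allocate IPv4 subnets in order, each aligned to its own size, without overlap."""
    vnet = ipaddress.IPv4Network(vnet_cidr)
    plan: dict[str, ipaddress.IPv4Network] = {}
    next_free = int(vnet.network_address)
    for name, prefix in prefixes.items():
        size = 2 ** (32 - prefix)
        start = (next_free + size - 1) // size * size  # round up to a subnet boundary
        subnet = ipaddress.IPv4Network((start, prefix))
        if not subnet.subnet_of(vnet):
            raise ValueError(f"{name} (/{prefix}) does not fit in {vnet}")
        plan[name] = subnet
        next_free = start + size
    return plan


def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for workloads after Azure's reserved ones."""
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET


if __name__ == "__main__":
    plan = plan_subnets("10.0.0.0/16", {
        "AzureFirewallSubnet": 26,            # name required by Azure Firewall
        "AzureFirewallManagementSubnet": 26,  # required for the Basic tier
        "snet-aca": 27,                       # hypothetical Container Apps subnet name
    })
    for name, subnet in plan.items():
        print(f"{name:30} {subnet}  usable={usable_addresses(subnet)}")
```

With these inputs, the firewall subnets land on 10.0.0.0/26 and 10.0.0.64/26. The /27 Container Apps subnet lands on 10.0.0.128/27 and has 27 usable addresses.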

The Azure Container App subnet must be delegated to `Microsoft.App/environments` so that the Azure Container Apps service can manage it.

We need to retrieve the Azure Container App subnet resource ID for later use.
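The value we retrieve is a standard Azure resource ID. This sketch builds such an ID for a subnet and splits it back into its type/value segments, which can help when debugging the environment creation step. The subscription GUID and all resource names are hypothetical placeholders.

```python
def subnet_resource_id(subscription_id: str, resource_group: str, vnet: str, subnet: str) -> str:
    """Build the ID of a subnet in the standard Azure resource ID layout."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet}/subnets/{subnet}"
    )


def parse_resource_id(resource_id: str) -> dict[str, str]:
    """Map each segment type (e.g. 'resourceGroups') to the value that follows it.

    Suited to single-provider IDs like a subnet ID; a repeated segment type
    would overwrite the earlier value.
    """
    parts = resource_id.strip("/").split("/")
    if len(parts) % 2 != 0:
        raise ValueError(f"unexpected resource ID: {resource_id!r}")
    # Resource IDs alternate between a segment type and its value.
    return dict(zip(parts[0::2], parts[1::2]))


if __name__ == "__main__":
    sid = subnet_resource_id(
        "00000000-0000-0000-0000-000000000000",  # placeholder subscription
        "rg-egress", "vnet-egress", "snet-aca",   # hypothetical names
    )
    print(sid)
    print(parse_resource_id(sid))
```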

Creating the Azure Firewall

Note that even in the Basic tier, Azure Firewall consumes considerable resources and incurs considerable cost, so be sure to clean up your resources at the end. Setting up the Standard tier is simpler but also considerably more costly.

The Basic Azure Firewall tier needs two public IP addresses: one for the firewall's data traffic and one for its management traffic.

Create the Azure Firewall.

The public IP addresses need to be configured for the Azure Firewall.

We need to retrieve the Azure Firewall ID for later use. Since our resource group contains only one Azure Firewall, we simply take the first entry in the list of firewalls to get its ID.

Azure Firewall blocks all traffic by default. In order for our container images to be pulled and our egress to be tested later, we must add appropriate application rules. Here, we are grouping Microsoft service fully qualified domain names (FQDNs) for container registries into a Microsoft-specific allowed collection.

To demonstrate reaching an obviously external URL, we also add an allowed-external collection.

Note that these are the minimum targets that must be reachable. If you expand on this tutorial and include other services, you will likely need to allow more.
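To make the rule semantics concrete, here is a deliberately simplified model of how application rule collections decide a request. It is not Azure Firewall's actual implementation. It assumes three things:

- collections are evaluated in ascending priority order;
- the first matching FQDN decides;
- unmatched traffic falls through to the default deny.

`mcr.microsoft.com` and `*.data.mcr.microsoft.com` are the MCR endpoints commonly listed for image pulls. The collection names (other than `allowed-external`), the priorities, and `myip.example.com` are hypothetical. The model ignores source addresses and protocols, which real rules also match on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleCollection:
    name: str
    priority: int                  # lower numbers are evaluated first
    action: str                    # "Allow" or "Deny"
    target_fqdns: tuple[str, ...]


def fqdn_matches(fqdn: str, pattern: str) -> bool:
    """Exact match, or '*.domain' matching any subdomain of domain (simplified)."""
    fqdn, pattern = fqdn.lower().rstrip("."), pattern.lower()
    if pattern.startswith("*."):
        return fqdn.endswith(pattern[1:])  # pattern[1:] keeps the leading dot
    return fqdn == pattern


def evaluate(fqdn: str, collections: list[RuleCollection]) -> tuple[str, str]:
    """Return (action, reason) for a request to fqdn."""
    for collection in sorted(collections, key=lambda c: c.priority):
        if any(fqdn_matches(fqdn, p) for p in collection.target_fqdns):
            return collection.action, f"matched {collection.name}"
    return "Deny", "No rule matched"  # the firewall's default action


# Hypothetical names and priorities mirroring the article's Microsoft-specific
# and allowed-external collections.
RULES = [
    RuleCollection("allowed-microsoft", 100, "Allow",
                   ("mcr.microsoft.com", "*.data.mcr.microsoft.com")),
    RuleCollection("allowed-external", 200, "Allow", ("myip.example.com",)),
]

if __name__ == "__main__":
    for host in ["mcr.microsoft.com", "myip.example.com", "learn.microsoft.com"]:
        print(host, evaluate(host, RULES))
```

Under this model, any Microsoft domain outside the allowed list, such as `learn.microsoft.com`, still hits the default deny.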

Next, we need to get the public and private Azure Firewall IP addresses for the route table.

Creating the Routes

To force all outbound traffic from our Azure Container Apps through the Azure Firewall, we now create the route table with the default routes.
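Azure picks the route for outbound traffic by longest prefix match. A 0.0.0.0/0 user defined route therefore catches every destination that no more specific route claims. The sketch below models that selection under these stated assumptions:

- The firewall addresses are hypothetical: 10.0.0.4 is the private IP, and 203.0.113.10 is a public IP from a documentation range.
- The system routes are reduced to a single VNet-local route.
- The /32 route that sends the firewall's own public IP to the Internet is our assumption of what the second route looks like, based on a common egress lockdown pattern.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

FIREWALL_PRIVATE_IP = "10.0.0.4"     # hypothetical firewall private IP
FIREWALL_PUBLIC_IP = "203.0.113.10"  # hypothetical public IP (documentation range)


@dataclass(frozen=True)
class Route:
    name: str
    prefix: ipaddress.IPv4Network
    next_hop_type: str                 # "VnetLocal", "VirtualAppliance" or "Internet"
    next_hop_ip: Optional[str] = None  # only used for VirtualAppliance


ROUTES = [
    # Simplified stand-in for the system route covering the VNet itself.
    Route("vnet-local", ipaddress.IPv4Network("10.0.0.0/16"), "VnetLocal"),
    # User defined default route: everything else goes to the firewall.
    Route("default-to-firewall", ipaddress.IPv4Network("0.0.0.0/0"),
          "VirtualAppliance", FIREWALL_PRIVATE_IP),
    # Assumed second route: the firewall's own public IP goes straight out.
    Route("firewall-public-ip", ipaddress.IPv4Network(f"{FIREWALL_PUBLIC_IP}/32"), "Internet"),
]


def select_route(destination: str, routes: list[Route]) -> Route:
    """Return the matching route with the longest prefix (most specific)."""
    address = ipaddress.IPv4Address(destination)
    matches = [route for route in routes if address in route.prefix]
    if not matches:
        raise LookupError(f"no route to {destination}")
    return max(matches, key=lambda route: route.prefix.prefixlen)


if __name__ == "__main__":
    for dest in ["10.0.0.130", "198.51.100.7", FIREWALL_PUBLIC_IP]:
        route = select_route(dest, ROUTES)
        print(f"{dest:15} -> {route.name} ({route.next_hop_type} {route.next_hop_ip or ''})")
```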

Lastly, we must associate the route table with the Azure Container App subnet.

Creating the Log Analytics Workspace

While not necessary for the success of this tutorial, a Log Analytics Workspace lets us see which requests the Azure Firewall allowed and which it denied. I encourage you to always consider logging and telemetry in your architectures.

Retrieve the Workspace ID.

We want to send the Azure Firewall logs to the Log Analytics Workspace.

Creating the Azure Container App Environment and Container App

We begin by creating the Azure Container App Environment, which will be VNet-injected into the previously created Azure Container App subnet. The environment will be configured as internal-only to prevent external access. While we could also send the environment's logs to the Log Analytics Workspace, this is not needed for this tutorial.

Azure Container Apps supports Consumption and Dedicated plans. To use user defined routes, we need to configure our container app for the Dedicated plan. This means creating a workload profile that dedicates a specific vCPU and memory size to our Azure Container App Environment.
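Choosing a workload profile comes down to matching per-node vCPU and memory to your workload. This sketch picks the smallest Dedicated profile that covers a requested size. The profile table lists the D-series (general purpose) and E-series (memory optimized) sizes as we understand them from the Azure Container Apps documentation at the time of writing; verify them against the current docs. The selection rule (fewest vCPUs, then least memory, ignoring node overhead) is our own simplification.

```python
from typing import NamedTuple, Optional


class Profile(NamedTuple):
    name: str
    vcpu: int
    memory_gib: int


# D-series (general purpose) and E-series (memory optimized) Dedicated
# profiles as documented at the time of writing; verify against current docs.
DEDICATED_PROFILES = [
    Profile("D4", 4, 16), Profile("D8", 8, 32), Profile("D16", 16, 64), Profile("D32", 32, 128),
    Profile("E4", 4, 32), Profile("E8", 8, 64), Profile("E16", 16, 128), Profile("E32", 32, 256),
]


def smallest_fit(vcpu: float, memory_gib: float) -> Optional[Profile]:
    """Return the profile with the fewest vCPUs, then least memory, that covers
    the requested per-node resources, or None if nothing fits. Node-level
    system overhead is ignored in this sketch."""
    fits = [p for p in DEDICATED_PROFILES if p.vcpu >= vcpu and p.memory_gib >= memory_gib]
    return min(fits, key=lambda p: (p.vcpu, p.memory_gib), default=None)


if __name__ == "__main__":
    print(smallest_fit(0.5, 1.0))  # a small test app such as nginx
    print(smallest_fit(4, 24))     # memory-heavy: an E-series profile fits first
```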

Lastly, we create the Azure Container App we will use for testing egress, based on an nginx image pulled from the Microsoft Container Registry (MCR).

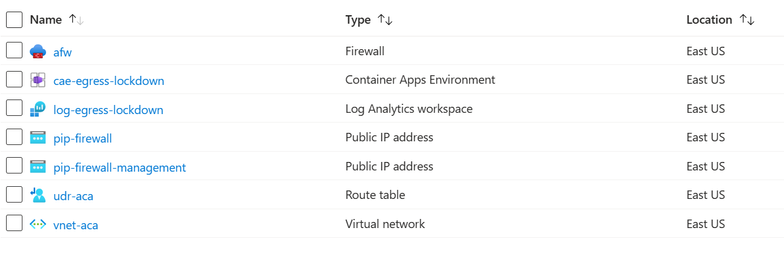

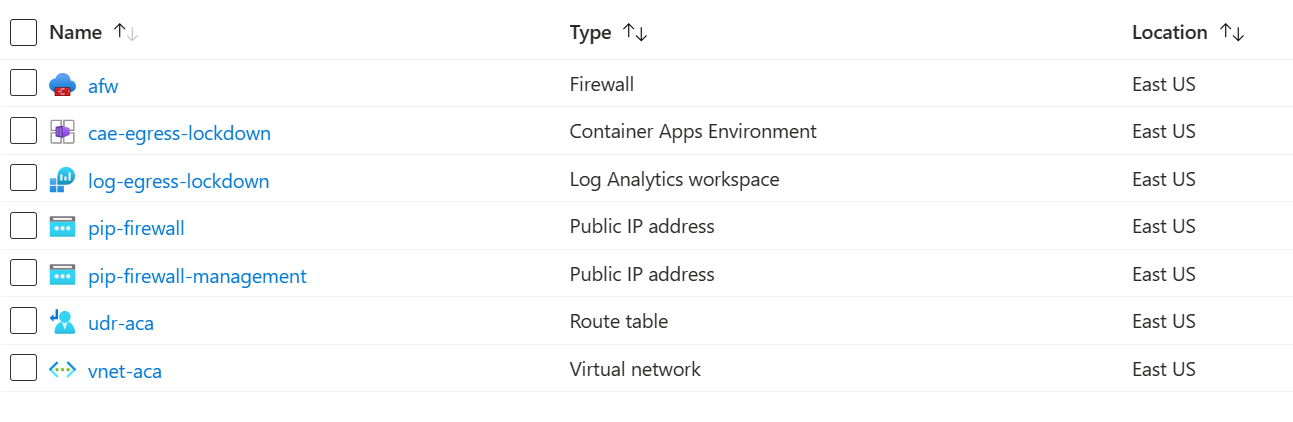

Everything is set up now. Your list of resources should look similar to this:

Validation

Now that we have a test container app inside a VNet that uses a user defined route to the Azure Firewall, we can validate that requests are blocked when they should be and succeed when they are allowed.

We now use Azure Cloud Shell to connect to the container app and launch a bash shell.

Next, issue curl commands against an allowed target as well as targets that we did not allow. Only the first target is allowed by our application rules. Even though some of the targets are Microsoft URLs, we did not explicitly allow them.

The first request returns an IP address, as expected. The second and fourth requests are similar in nature and are blocked with a default message from the Azure Firewall. The third request is blocked in the same manner. However, `curl` reports an SSL error instead of the firewall's message. This is expected: for an HTTPS request, the firewall cannot return its deny message inside a TLS session it does not terminate, so the connection simply fails.
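The same three outcomes can be told apart programmatically: a normal response, a firewall deny message, or a connection/TLS failure. The sketch below uses only the Python standard library. It assumes that a denied plain-HTTP request can be recognized by the text `Action: Deny` in the response body. It treats any connection or TLS error as blocked, although DNS failures and timeouts would be classified the same way. The nginx image may not include Python, so treat this as a model of the curl checks, or run it from a host whose outbound traffic is routed through the firewall.

```python
import sys
import urllib.error
import urllib.request
from typing import Optional


def probe(url: str, timeout: float = 10.0) -> tuple[Optional[str], Optional[BaseException]]:
    """Fetch url; return (body, None) on any HTTP response, or (None, error)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", "replace"), None
    except urllib.error.HTTPError as err:
        # Non-2xx responses still carry a body, e.g. a firewall deny message.
        return err.read().decode("utf-8", "replace"), None
    except OSError as err:
        # URLError, ssl.SSLError, resets and timeouts all derive from OSError.
        return None, err


def classify(body: Optional[str], error: Optional[BaseException]) -> str:
    """Map a probe result to allowed / blocked (assumed deny-text heuristic)."""
    if error is not None:
        return "blocked (connection or TLS error)"
    if body is not None and "Action: Deny" in body:
        return "blocked (firewall deny message)"
    return "allowed"


if __name__ == "__main__":
    # Pass the URLs to test on the command line, e.g. the ones used with curl.
    for url in sys.argv[1:]:
        print(f"{url} -> {classify(*probe(url))}")
```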

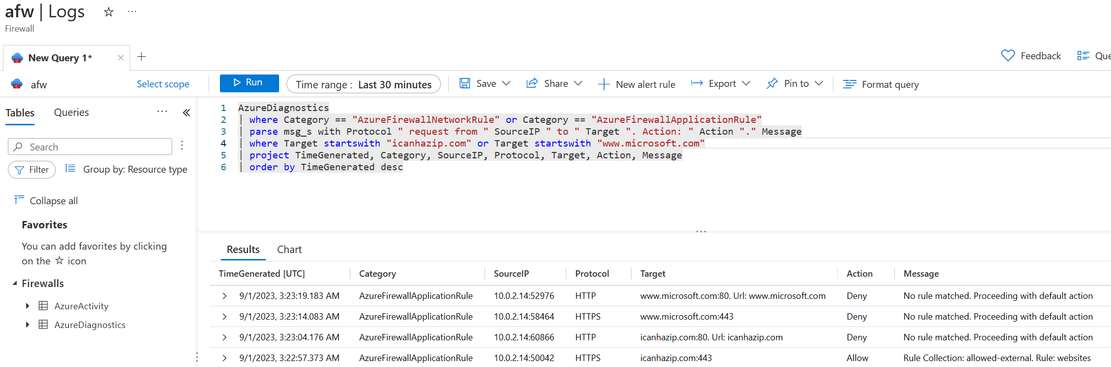

Furthermore, we can validate these results in our Azure Firewall logs that we sent to the Log Analytics Workspace. To do so, open the Azure Firewall instance in your resource group, navigate to the Logs blade, and execute the following query:

As with the curl output, you can see which requests matched no rule. Note that instead of the SSL error that curl showed us, the logs contain the true underlying message from the Azure Firewall.

Conclusion

Hopefully, this tutorial gave you insight into this exciting new Azure Container Apps feature to secure your container workloads' traffic!

Please follow or connect with me on LinkedIn where I frequently post about feature updates. Thank you!
