Consistent DNS resolution in a hybrid hub-spoke network topology

Introduction

DNS is one of the most essential networking services, next to IP routing. A modern hybrid cloud network may have various sources of DNS: Azure Private DNS Zones, public DNS, domain controllers, etc. Some organizations may also prefer to route their public Internet DNS queries through a specific DNS provider. Therefore, it is crucial to ensure consistent DNS resolution across the whole (hybrid) network.

This article describes how DNS Private Resolver can be leveraged to build such architecture.

This article assumes that the network topology was built using traditional networking. If you use Azure Virtual WAN, the solution requires a few adjustments, described in the Virtual WAN section.

Architecture

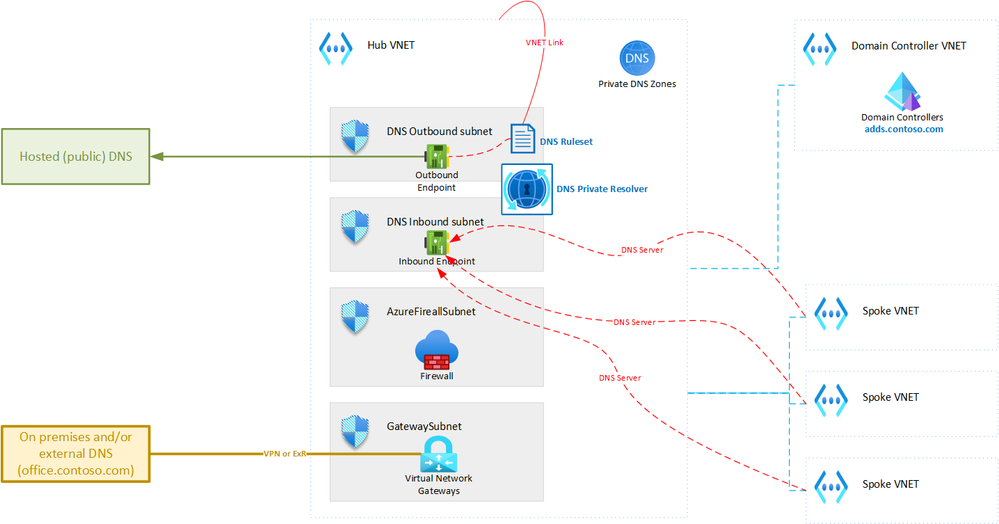

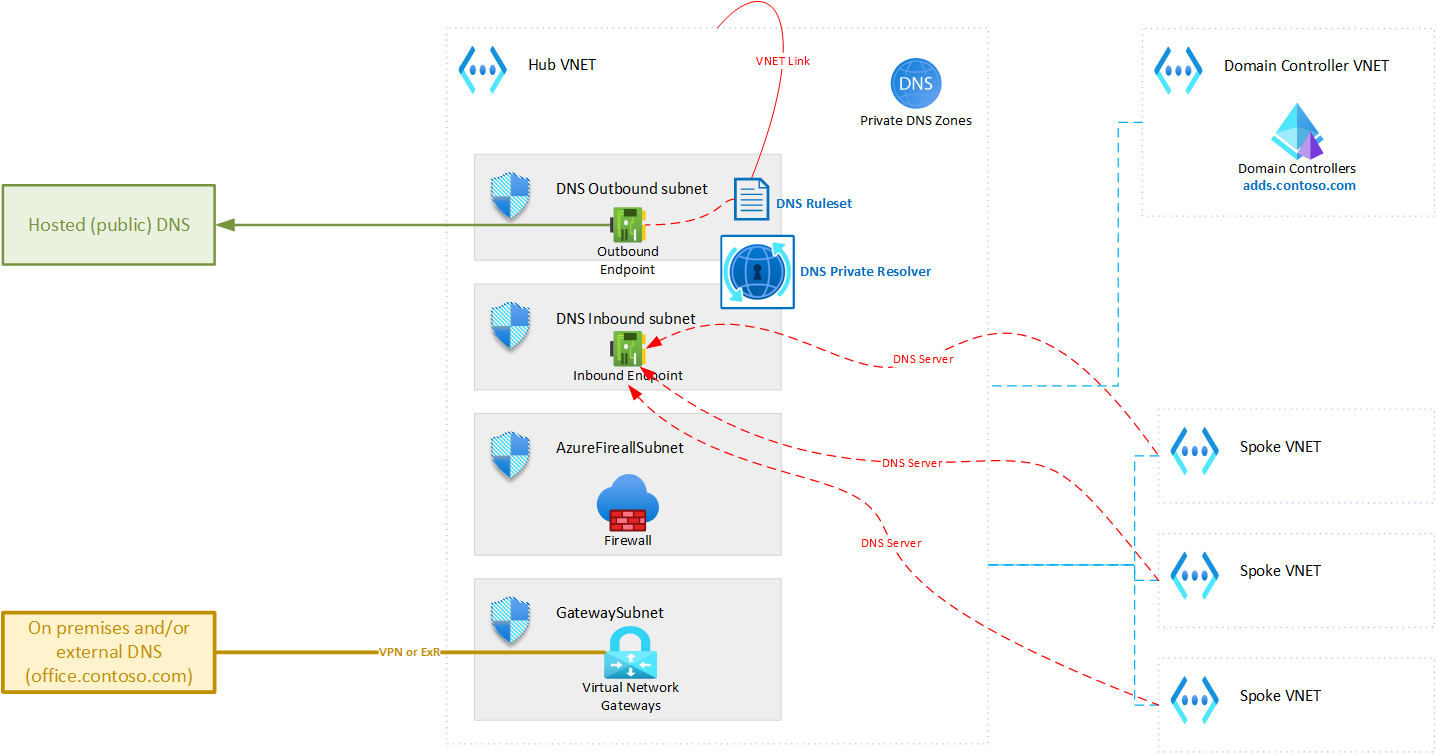

The diagram below shows the topology that we will be creating:

This document focuses on a single hub-spoke network topology. Often, the complete Azure network includes multiple hub-spoke topologies connected in a mesh. The solution described here can easily be extended to support such a meshed hub-spoke topology.

The solution described in this article is based on Azure DNS Private Resolver deployed in the hub Virtual Network. This Azure service allows combining multiple DNS sources seamlessly with Azure DNS. However, in a hybrid hub-spoke topology there are a couple of requirements and limitations that need to be considered. These are outlined further in this article.

Deploying the architecture

Step 1: Networking for DNS Private Resolver deployment

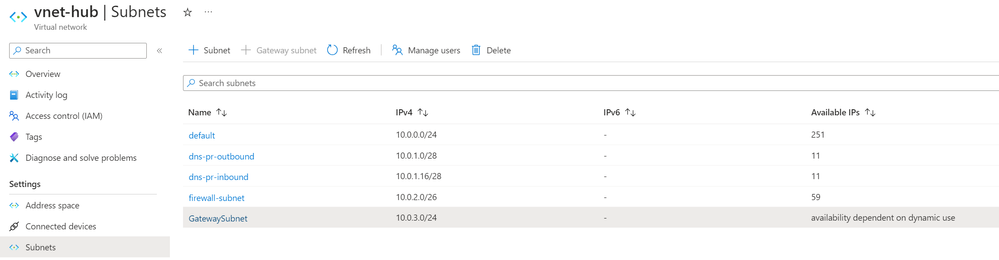

To deploy DNS Private Resolver in a Virtual Network, you need to dedicate two subnets to it: one for inbound endpoints and one for outbound endpoints. Both subnets should have a minimum size of /28. In a hub-spoke topology, the DNS Private Resolver must reside in the hub Virtual Network.

Therefore, you need to create two /28 subnets in the hub, as shown in the screenshot below (the subnets are named "pr-dns-inbound" and "pr-dns-outbound").
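The subnet planning can be sanity-checked before anything is created. The sketch below uses only Python's standard `ipaddress` module. The hub address space `10.0.0.0/21` and the helper name `carve_resolver_subnets` are assumptions made for illustration, and the choice to take the last two /28 blocks of the hub range is arbitrary. It does not check for overlap with subnets that already exist in your hub.

```python
import ipaddress

def carve_resolver_subnets(hub_cidr, prefix=28):
    """Return the last two /prefix blocks of the hub range for the resolver endpoints."""
    hub = ipaddress.ip_network(hub_cidr)
    blocks = list(hub.subnets(new_prefix=prefix))
    if len(blocks) < 2:
        raise ValueError("hub range is too small for two resolver subnets")
    # Subnet names follow the ones used in this article.
    return {"pr-dns-inbound": blocks[-2], "pr-dns-outbound": blocks[-1]}

subnets = carve_resolver_subnets("10.0.0.0/21")  # assumed hub address space
for name, network in subnets.items():
    print(f"{name}: {network} ({network.num_addresses} addresses)")
```

With the assumed /21 hub range, this yields 10.0.7.224/28 for the inbound subnet and 10.0.7.240/28 for the outbound subnet.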

Step 2: Deploying DNS Private Resolver

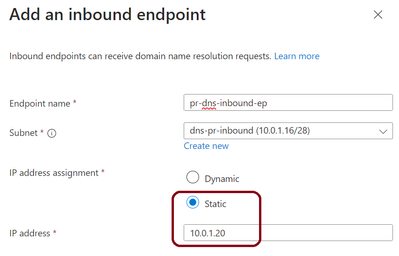

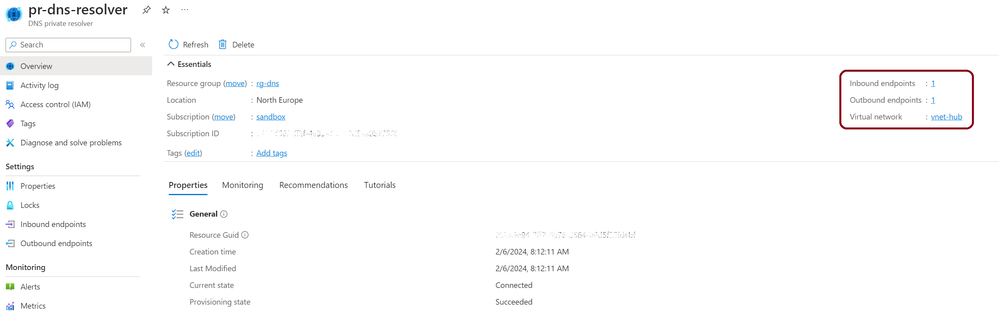

The DNS Private Resolver must be deployed in the same subscription as the hub VNET, ideally in its own resource group. You also need to create both an inbound and an outbound endpoint, either during or after the deployment.

The inbound endpoint should be in the subnet you created earlier and have a static IP address. You can choose any IP address from the available ones in the subnet, but it is advisable to use the first one for optimal IP address utilization. The outbound endpoint only requires a name and association to the outbound subnet.
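Note that Azure reserves the first four addresses and the last address of every subnet, so the "first" available address in a dedicated subnet is the fifth address of the range. A minimal sketch of that arithmetic, assuming a hypothetical inbound subnet of `10.0.7.224/28` (the function name is also made up for this example):

```python
import ipaddress

# Azure keeps the network address, the default gateway and two addresses
# for Azure DNS mapping at the start of each subnet.
AZURE_RESERVED_AT_START = 4

def first_assignable_ip(subnet_cidr):
    """Return the first address Azure can hand out in an otherwise empty subnet."""
    subnet = ipaddress.ip_network(subnet_cidr)
    if subnet.num_addresses < 8:
        raise ValueError("Azure subnets must be /29 or larger")
    return str(subnet.network_address + AZURE_RESERVED_AT_START)

inbound_ip = first_assignable_ip("10.0.7.224/28")  # assumed inbound subnet
print(inbound_ip)
```

For the assumed subnet this gives 10.0.7.228, which is the address you would enter as the static IP of the inbound endpoint.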

In practice: first, create a new resource group in the subscription that contains the hub VNET. Then, create a DNS Private Resolver in that resource group and add one inbound endpoint (with a static IP address) and one outbound endpoint. For each endpoint, make sure to select the matching subnet created in the previous step.

Step 3: External DNS Systems

We will be integrating several DNS sources into the DNS Private Resolver. Hence, we need to ensure connectivity. The outbound interface of the DNS Private Resolver needs access to all "external" sources that need to be integrated.

Domain Controllers (AD DS or Entra ID DS)

You need to have IP connectivity from the DNS Private Resolver outbound subnet to your domain controller (deployed as IaaS or Entra ID DS) in Azure. This involves both IP routing and NSG/firewalling. It is preferred to peer the Virtual Network that hosts your domain controller to the hub, if you haven't done so already. Adhering to good security practices, the subnet containing the domain controllers should have a Network Security Group assigned. This NSG requires a rule that allows inbound traffic on port 53 (TCP and UDP), with the entire DNS Private Resolver outbound endpoint subnet as source and the IP addresses of your domain controllers as destination.
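To make the shape of that Network Security Group rule concrete, the sketch below models it as a small Python data class and evaluates a few sample flows against it. This is not an Azure API: the class name, the field names and all IP addresses are assumptions chosen for illustration.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class InboundDnsRule:
    """Hypothetical model of the inbound allow rule on the domain controller subnet."""
    source_cidr: str                 # the whole outbound endpoint subnet
    destination_ips: tuple           # the domain controller addresses
    port: int = 53
    protocols: tuple = ("Tcp", "Udp")

    def allows(self, src, dst, port, protocol):
        return (
            ipaddress.ip_address(src) in ipaddress.ip_network(self.source_cidr)
            and dst in self.destination_ips
            and port == self.port
            and protocol in self.protocols
        )

# Assumed addresses: outbound subnet 10.0.7.240/28, domain controllers 10.1.0.4 and 10.1.0.5.
rule = InboundDnsRule("10.0.7.240/28", ("10.1.0.4", "10.1.0.5"))

from_resolver = rule.allows("10.0.7.244", "10.1.0.4", 53, "Udp")   # inside the outbound subnet
from_elsewhere = rule.allows("10.0.5.10", "10.1.0.4", 53, "Udp")   # some other hub address
print(from_resolver, from_elsewhere)
```

The source is the subnet range rather than a single address because the outbound endpoint does not have a fixed IP address that you select.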

On Premises DNS Servers - Outbound

To resolve the names hosted in the on-premises DNS servers, these need to be integrated. The first step is to ensure IP connectivity. Usually, the servers are connected through a hybrid connection like VPN or ExpressRoute, which terminates at a gateway (Virtual Network Gateway or NVA) in the hub. Depending on your security requirements, the DNS traffic to and from the on-premises DNS servers could either go through a firewall in the hub or bypass it. This architecture assumes a fully functional hybrid hub-spoke, so this connectivity should already be enabled. You might need to add a firewall rule and/or a Network Security Group rule though.

Note that DNS servers hosted in other external systems (e.g. another cloud connected over a VPN or ExpressRoute) follow the same model.

On Premises DNS Servers - Inbound

For consistent DNS resolution both on-premises and in Azure, the on-premises DNS servers must be able to reach the DNS Private Resolver Inbound Endpoint. As mentioned earlier, this traffic can either go through the firewall or bypass it, depending on your needs. To enable this IP connectivity, the inbound endpoint's IP address (or subnet CIDR range) must be announced over the hybrid connection to the on-premises network.

The on-premises DNS servers need some configuration as well before they can resolve names via the inbound endpoint: conditional forwarders must be configured for all domains integrated via the DNS Private Resolver, using the inbound endpoint IP address as target.

Note that DNS servers hosted in other external systems (e.g. another cloud connected over a VPN or ExpressRoute) follow the same model.

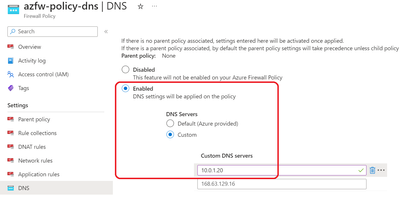

Custom public DNS servers

Azure provides a public DNS infrastructure to resolve public DNS entries by default. However, some scenarios may require a dedicated custom DNS infrastructure that performs additional validation on DNS requests. These servers are usually hosted on the public Internet. If you have such a requirement, the DNS Private Resolver outbound endpoint needs access to the public Internet.

At the time of writing this article, the default outbound Virtual Network access is still available. However, this will be discontinued and is not a secure solution. Therefore, we recommend routing this traffic through the firewall in the hub. This requires a custom route in the outbound subnet route table that directs all traffic (0.0.0.0/0) to the firewall's internal IP address. The following screenshot shows an example of such a route table (with the firewall IP address being 10.0.4.4):

The route table needs to be linked to the outbound subnet used for the DNS Private Resolver.

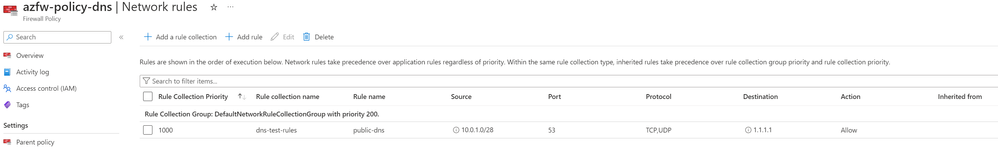
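The effect of the 0.0.0.0/0 route can be illustrated with a longest-prefix-match lookup, which is how a route is selected for a destination. The sketch below is a simplified model, not Azure's implementation: the hub range `10.0.0.0/21` and the `VnetLocal` label are assumptions, and only the firewall address 10.0.4.4 and the external resolver 1.1.1.1 come from the examples in this article.

```python
import ipaddress

def next_hop(routes, destination):
    """Return the next hop of the longest matching prefix, or None if nothing matches."""
    dest = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes.items()
        if dest in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        return None
    network, hop = max(candidates, key=lambda item: item[0].prefixlen)
    return hop

routes = {
    "10.0.0.0/21": "VnetLocal",  # assumed hub address space, stays inside the VNET
    "0.0.0.0/0": "10.0.4.4",     # custom route: everything else goes to the firewall
}

to_external_dns = next_hop(routes, "1.1.1.1")
to_hub_resource = next_hop(routes, "10.0.1.10")
print(to_external_dns, to_hub_resource)
```

Traffic to the external DNS service matches only the default route and is sent to the firewall, while traffic to addresses inside the hub range still follows the more specific route.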

A firewall rule is also necessary along with the route table. This rule should permit traffic from the outbound subnet CIDR range over UDP and TCP port 53 to the external DNS source. The following screenshot illustrates an example for an Azure Firewall Policy (assuming the external system is hosted at 1.1.1.1):

All DNS requests to the external DNS system will have the public IP address assigned to the firewall as source IP address (this holds for Azure Firewall; it could be different for third-party firewalls). Typically, this IP address needs to be whitelisted in the external DNS system and serves as identification of the DNS request source.

Step 4: Private DNS Zones

All Private DNS Zones used in Azure must be linked to the hub Virtual Network only. They should not be linked to any other Virtual Network.

Step 5: DNS Forwarding Ruleset

A DNS Forwarding Ruleset is a group of DNS forwarding rules (up to 1000) that can be applied to one or more outbound endpoints or linked to one or more virtual networks. For this setup, we need one ruleset, which we will associate with the outbound endpoint of the Azure DNS Private Resolver and link to the hub Virtual Network.

When you link a ruleset to a Virtual Network, you add the rules to the logic of the Azure DNS endpoints for name resolution. With a linked ruleset, the DNS endpoint in the Virtual Network resolves names as follows:

- If the name is defined in a Private DNS Zone, use that zone to resolve it.

- If not, use the ruleset.

- If the ruleset has no matching rule, use public DNS.

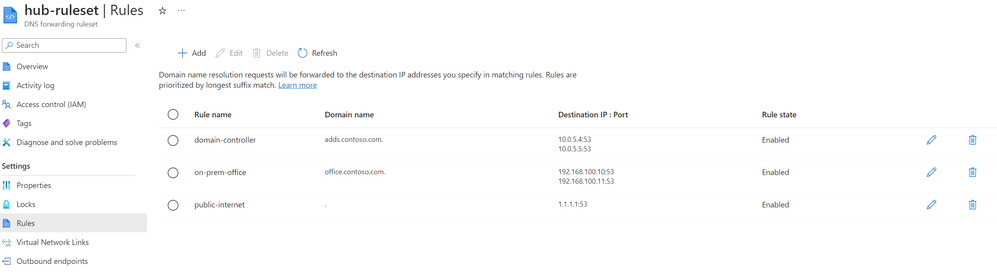
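The three-step resolution order can be expressed as a small decision function. The Python sketch below is a simplified model under stated assumptions: the function names, the zone and the domain names are made up for illustration, and it ignores the reserved zones that Azure excludes from wildcard rules.

```python
def matches(name, domain):
    """True if `name` equals `domain` or is a subdomain of it; '.' matches everything."""
    name = name.rstrip(".").lower()
    domain = domain.rstrip(".").lower()
    return domain == "" or name == domain or name.endswith("." + domain)

def resolution_source(name, private_zones, ruleset_domains):
    """Return which source answers a query, following the order described above."""
    if any(matches(name, zone) for zone in private_zones):
        return "private-dns-zone"
    if any(matches(name, domain) for domain in ruleset_domains):
        return "forwarding-ruleset"
    return "public-dns"

# Assumed example data.
zones = ["privatelink.blob.core.windows.net"]
rules = ["corp.contoso.com.", "onprem.contoso.com."]

for query in ("mystorage.privatelink.blob.core.windows.net",
              "dc01.corp.contoso.com",
              "www.example.org"):
    print(query, "->", resolution_source(query, zones, rules))
```

Once a wildcard rule (".") is part of the ruleset, the last branch is no longer reached for ordinary names, because every name that is not in a Private DNS Zone matches the wildcard.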

To set up this architecture, we need a DNS Forwarding Ruleset with these rules (the order does not matter, because the most specific matching domain name is used):

- The domain(s) hosted on the domain controllers must have a rule linked to all IP addresses used by the corresponding domain controllers (e.g. 2 instances when using Entra ID DS).

- The domain names hosted on the on-prem or external DNS servers must be defined with the IP address(es) of the DNS servers.

- When using a dedicated service to resolve public DNS names, a so-called wildcard rule (dot or '.' domain name) must be present, linking to the public IP addresses of this hosted service.
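The rules listed above can be pictured as a mapping from domain name to target DNS servers, where the most specific (longest) matching suffix wins. The following Python sketch models that selection. The domain names and the private IP addresses are assumptions, 1.1.1.1 is the example external service used earlier in this article, and `select_rule` is a hypothetical helper rather than part of any Azure SDK.

```python
def select_rule(name, ruleset):
    """Return (domain, targets) of the most specific matching rule, or None."""
    query = name.rstrip(".").lower()
    best = None
    best_len = -1
    for domain, targets in ruleset.items():
        suffix = domain.rstrip(".").lower()
        is_match = suffix == "" or query == suffix or query.endswith("." + suffix)
        # All matching suffixes belong to the same name, so longer means more specific.
        if is_match and len(suffix) > best_len:
            best, best_len = (domain, targets), len(suffix)
    return best

ruleset = {
    ".": ["1.1.1.1:53"],                                   # wildcard rule to the external service
    "corp.contoso.com.": ["10.1.0.4:53", "10.1.0.5:53"],   # domain controllers (assumed)
    "onprem.contoso.com.": ["192.168.10.10:53"],           # on-premises DNS server (assumed)
}

print(select_rule("dc01.corp.contoso.com", ruleset))
print(select_rule("www.example.org", ruleset))
```

Even though the wildcard rule is listed first, a query for a name under corp.contoso.com still selects the domain controller rule, which is why the order of the rules does not matter.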

The screenshot below shows an example of such a ruleset:

This ruleset must now be linked to the hub Virtual Network, as shown in the screenshot below:

Step 6: Spoke DNS Configuration

The infrastructure for consistent DNS resolution is now set up. The last step is to assign the inbound endpoint IP address as DNS server to all spoke Virtual Networks (for use in the DHCP offer). To do so, set the DNS Servers setting of every spoke Virtual Network to "Custom" and enter the inbound endpoint IP address.

Once this is completed, every resource (network interface) in the spoke Virtual Network needs to renew its DHCP lease to get the DNS server assigned.

Note: Several DNS zones (e.g. for Private Link) are excluded from wildcard rules. Therefore, it is impossible to resolve Private Endpoints from a DNS Forwarding Ruleset in a spoke. Hence, the DNS configuration for the spoke VNETs needs to use the inbound endpoint IP address as explained earlier.

Step 7: Testing

Now that everything is set up, the only thing left to do is testing.

Testing requires the following elements:

- a virtual machine in a spoke Virtual Network

- a virtual machine on-premises (or in each external network segment connected over VPN or ExpressRoute)

- a private endpoint integrated in the correct Private DNS Zone linked to the hub

From the virtual machine in the spoke:

- Validate that the DNS server assigned via DHCP is the DNS Private Resolver Inbound Endpoint

- Perform an NSLOOKUP for:

  - The DNS name of the PaaS service that the private endpoint is linked to. This should resolve to the private IP address of the private endpoint.

  - A DNS name hosted in each of the Domain Controllers. This should resolve to the IP addresses defined in the AD DS DNS configuration.

  - A DNS name hosted in each of the external (on-premises, other cloud, ...) DNS servers. This should resolve to the correct IP address defined in that DNS zone.

From the virtual machine located on premises or in the other cloud, execute the same NSLOOKUP commands. The IP addresses returned must be identical.
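If you prefer a repeatable check over manual lookups, the comparison can be scripted. The sketch below is one possible approach in Python, using the operating system's resolver through the standard `socket` module. All host names and expected addresses are assumptions, and the demonstration at the end uses a stand-in resolver so that it runs without network access.

```python
import socket

def system_resolve(name):
    """Resolve `name` to a sorted list of IPv4 addresses using the OS resolver."""
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})

def check_names(expected, resolve=system_resolve):
    """Return a dict of names whose resolved addresses differ from the expectation."""
    mismatches = {}
    for name, wanted in expected.items():
        try:
            got = resolve(name)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            got = [f"error: {exc}"]
        if sorted(wanted) != got:
            mismatches[name] = got
    return mismatches

# Assumed test data: one private endpoint name and one AD DS name.
expected = {
    "mystorage.blob.core.windows.net": ["10.2.0.5"],
    "dc01.corp.contoso.com": ["10.1.0.4"],
}

# Offline demonstration with made-up answers; on a real VM, call
# check_names(expected) to query the configured DNS server instead.
fake_answers = {
    "mystorage.blob.core.windows.net": ["10.2.0.5"],
    "dc01.corp.contoso.com": ["10.1.0.9"],
}
mismatches = check_names(expected, resolve=lambda name: fake_answers[name])
print(mismatches)
```

Running the same script with the same `expected` table on the spoke virtual machine and on the on-premises virtual machine should produce an empty result on both sides. Keep in mind that the OS resolver also consults local sources such as the hosts file, which NSLOOKUP does not.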

Virtual WAN

Azure Virtual WAN uses the concept of a managed Virtual Hub. Deploying a DNS Private Resolver in this Virtual Hub is not possible. However, this architecture can still be leveraged with minor modifications:

- The DNS Private Resolver should be deployed in a new spoke Virtual Network that gets connected to the Virtual Hub. All requirements for IP connectivity, firewalling or Network Security Groups remain identical.

- The firewall in the Virtual Hub must be configured to use the DNS Private Resolver Inbound Endpoint as DNS server. The screenshot below shows how to configure this for an Azure Firewall. For a third-party firewall, this will depend on the vendor and type.

- Each NVA deployed in the Virtual Hub needs to have its DNS server configured, similar to the firewall (point to the DNS Private Resolver Inbound Endpoint). How to do this depends on the NVA type.

Q&A

Can this be leveraged in a topology with multiple hub-spokes?

Yes. When multiple hub-spoke topologies are created (e.g. in different regions), this architecture must be deployed into each hub.

Does this architecture support resiliency?

Yes. By default, DNS Private Resolver leverages availability zones for resiliency (see Resiliency in Azure DNS Private Resolver). If you require region failover, you should deploy this architecture a second time, in a different region.
