Handling Server Errors / Internal Server Errors / HTTP 500 Errors for Service Bus and Event Hubs

Scenario: Calls to Service Bus and Event Hubs fail with Server Error / Internal Server Error / HTTP 500 errors.

Issue Description: SB/EH API calls made by the client application (usually a sender or consumer) fail with an Internal Server Error. The corresponding exception message is typically something like "The server was unable to process the request due to an internal error". The exception type and message may vary somewhat depending on the programming language of the SDK, the SDK version, wrappers, etc. There are many frequently asked questions about Server Errors, and I attempt to answer most of them in this article.

Sample Internal Server Error for a .NET client:

Microsoft.ServiceBus.Messaging.MessagingException: The remote server returned an error: (500) Internal Server Error. The service was unable to process the request; please retry the operation. For more information on exception types and proper exception handling, please refer to http://go.microsoft.com/fwlink/?LinkId=761101. TrackingId:xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx_G#_B#, SystemTracker:namespacename:Topic:topicname, Timestamp:mm/dd/yyyy hh:mm:ss XX

Exception Type: Microsoft.ServiceBus.Messaging.MessagingException

Exception Message: The remote server returned an error: (500) Internal Server Error. The...

TrackingId: xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx_G#_B# -- A GUID, sometimes suffixed with _G# or _G#_B#, that helps track operations performed by the SB/EH client application. If the request reaches the SB/EH front end (the Gateway), the tracking ID is suffixed with _G#. If the request reaches the SB/EH backend server, the tracking ID is suffixed with _G#_B#. A tracking ID without _G# or _G#_B# means that the request has not reached the SB/EH servers.

SystemTracker: namespacename:Topic:topicname -- Namespace and entity details.

Timestamp: mm/dd/yyyy hh:mm:ss XX -- Time of the operation.
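To make the TrackingId suffix rules concrete, here is a minimal Python sketch that classifies a TrackingId by its suffix. It assumes that the `#` placeholders are decimal digits and that the suffixes appear exactly as `_G<n>` and `_G<n>_B<n>`; the function name `classify_tracking_id` is hypothetical and not part of any SDK.

```python
import re

# Assumed shape: a 36-character GUID, optionally followed by _G<digits>
# and then _B<digits>. The digit format of the suffixes is an assumption.
_TRACKING_ID = re.compile(
    r"^(?P<guid>[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-"
    r"[0-9a-fA-F]{4}-[0-9a-fA-F]{12})"
    r"(?P<gateway>_G\d+)?(?P<backend>_B\d+)?$"
)


def classify_tracking_id(tracking_id: str) -> str:
    """Return how far the request got, based only on the TrackingId suffix."""
    match = _TRACKING_ID.match(tracking_id.strip())
    if match is None:
        raise ValueError(f"unrecognized TrackingId: {tracking_id!r}")
    if match.group("gateway") and match.group("backend"):
        return "backend"  # _G#_B#: reached the Gateway and the backend server
    if match.group("gateway"):
        return "gateway"  # _G#: reached the Gateway (front end) only
    if match.group("backend"):
        # _B# without _G# does not match any case described in this article.
        raise ValueError(f"unexpected suffix order: {tracking_id!r}")
    return "not_reached"  # bare GUID: the request did not reach the SB/EH servers
```

For example, `classify_tracking_id("0f8fad5b-d9cb-469f-a165-70867728950e_G12_B7")` returns `"backend"`, which tells you the request got as far as the backend before failing.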

‘Server Errors’, ‘Internal Server Errors’, and ‘HTTP 500 Errors’ are the same and are used interchangeably. A Server Error is a generic error returned by the server (SB/EH) when it encounters an unexpected condition or an exception that prevents it from fulfilling the request. It is the generic catch-all error response. Simply put, it means that the server was not able to process the request at that point in time.

RFC 7231: Hypertext Transfer Protocol (HTTP/1.1): Semantics and Content (rfc-editor.org)

There is a standard operating procedure (SOP) for dealing with Internal Server Errors/Server Errors, especially for SB and EH:

- Firstly, validate that the client exception is indeed a Server Error from the SB/EH namespace.

- Secondly, check whether the Server Error is impacting your application and, if so, what the impact is.

- Thirdly, as a best practice, client applications should have a retry mechanism in their code to reduce or eliminate the impact of Server Errors. Ensure that an appropriate retry mechanism is in place.

- Fourthly, if your application still faces an issue despite the retry mechanism, and the issue has been ongoing for a considerable amount of time or Server Errors impacted your application in the recent past, open a ticket with Microsoft with the relevant details (listed below).

1. Validate that the Server Error corresponds to the SB/EH namespace:

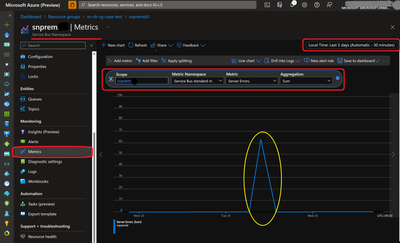

- Navigate to your SB/EH namespace in the Azure portal >> Metrics >> Add metric >> select Server Errors as the metric >> select the relevant time range.

- If the Server Errors in the metrics coincide with the time of the Server Errors/exceptions in the application logs, they are related.

- You can also create Server Error alerts for SB/EH by selecting Alerts from the Azure Monitor section on the home page of your namespace.

Alerting can be slightly tricky, because there is no set rule that says your application is impacted if you see 1, 10, or even a couple of hundred Server Errors over a period (1 minute, 1 hour, or 1 day). Furthermore, pointless alerts may cause unnecessary panic, fruitless investigations, and needless audits.

So, the goal should be accurate alerting, so that proper proactive measures can be taken to mitigate incidents. For a Standard (or even a Premium) SB/EH namespace, a couple of hundred Server Errors should not be a cause for concern and should not by themselves raise an alarm.

I would recommend alerting after 20 minutes of continuous Internal Server Errors combined with a 30% drop in incoming/outgoing messages. (The 20 minutes and 30% are illustrative figures and will depend on your application.)

See Create, view, and manage metric alerts using Azure Monitor for details on creating alerts.
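The suggested rule (alert only when Server Errors persist for about 20 minutes *and* message throughput drops by about 30%) can be expressed as a small function over per-minute metric samples. This is a minimal sketch: the input lists, the baseline definition (the average of the minutes before the error window), and the function name `should_alert` are assumptions for illustration. In practice you would express the rule as Azure Monitor alert conditions rather than in application code.

```python
from statistics import mean
from typing import Sequence


def should_alert(
    server_errors: Sequence[int],
    messages: Sequence[float],
    window_minutes: int = 20,
    drop_threshold: float = 0.30,
) -> bool:
    """Decide whether to alert from per-minute samples (oldest first).

    server_errors: Server Errors count per minute.
    messages: incoming (or outgoing) messages per minute, for the same minutes.
    """
    if len(server_errors) != len(messages):
        raise ValueError("both series must cover the same minutes")
    if len(server_errors) <= window_minutes:
        return False  # need a baseline period before the window

    # Condition 1: Server Errors in every minute of the window (continuous).
    continuous_errors = all(count > 0 for count in server_errors[-window_minutes:])

    # Condition 2: throughput in the window dropped by at least drop_threshold
    # compared with the average of the minutes before the window.
    baseline = mean(messages[:-window_minutes])
    recent = mean(messages[-window_minutes:])
    dropped = baseline > 0 and (baseline - recent) / baseline >= drop_threshold

    return continuous_errors and dropped
```

With 30 healthy minutes at 100 messages/minute followed by 20 minutes of errors at 60 messages/minute, the function returns `True`; if throughput only dips to 90 messages/minute, it stays quiet even though errors are present.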

2. Check whether the Server Errors are impacting your application and, if so, what the impact is.

Administrators may receive alerts or see Server Errors in the metrics chart. Users often open a support ticket with Azure Support, which is okay. But before opening a support ticket, I suggest you consider the following:

- Determine whether, and how, the Server Error is impacting your (sender/receiver) application. Is sending/receiving impaired? Is any functionality impaired? Is it causing application slowness?

- Describe, compare, and quantify the impact before the issue and during the issue.

- If the Server Error is ongoing, or occurred in the past, with considerable impact: open a support ticket with the Azure SB/EH team with the required information listed in step 4 below.

- If the Server Error is ongoing with minimal impact: it is not uncommon to see some Server Errors, especially on the Standard tier and in some cases on the Premium tier (the reason is explained in the FAQ below). Keep the solution under monitoring. If you have retries in place, these errors should not be a major concern.

- If the Server Error happened in the recent past and is now resolved: it has most likely self-healed, or there was manual intervention based on internal alerts. The most likely cause is one of those listed below, and you need not open a support ticket.

Note: For Server Errors more than a month old, we may not have all the data needed to provide a root cause analysis (RCA).

3. The SOP for dealing with Server Errors, especially for SB/EH, is to retry the operations with an exponential backoff retry pattern.

When any Azure (cloud) service, not just SB/EH, fails with a transient error such as a Server Error, one of the best practices adopted by cloud applications is the retry pattern. Refer to Azure service retry guidance - Best practices for cloud applications.

Retrying gives an operation a statistically better chance to succeed, which minimizes the impact of the failed attempt. Most Azure services and client SDKs include a retry mechanism, and each SDK's mechanism is tuned to its specific service. The retry mechanism may use fixed delays, exponential backoff, randomized delays, etc.
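The following is a minimal, SDK-independent Python sketch of the exponential backoff pattern. It retries only errors marked as transient, doubles the maximum wait after each failed attempt up to a cap, and waits a random time below that maximum ("full jitter") so that many clients do not retry in lockstep. `TransientError`, `retry_with_backoff`, and the default values are hypothetical. In real applications, prefer the retry options built into each Azure SDK (covered in the SDK sections that follow) rather than wrapping SDK calls in a second retry loop.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying, such as a Server Error (HTTP 500)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 0.8,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(); on TransientError, retry with capped exponential backoff.

    Non-transient exceptions are not caught and propagate immediately.
    """
    for attempt in range(max_retries + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_retries:
                raise  # retries exhausted: surface the error to the caller
            # Upper bound grows as base, 2*base, 4*base, ... and is capped at max_delay;
            # full jitter picks a random wait between 0 and that bound.
            bound = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, bound))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the function testable; passing `sleep=delays.append` records the waits instead of actually sleeping.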

Azure SDK for .NET

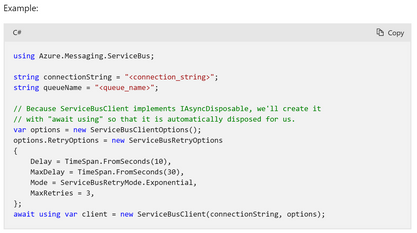

The Service Bus .NET SDK, Azure.Messaging.ServiceBus, exposes the ServiceBusRetryMode enum and the ServiceBusRetryOptions and ServiceBusRetryPolicy classes. Using these, you can configure and customize retries for .NET applications.

ServiceBusRetryMode determines the approach the application takes when calculating the delays between retry attempts. It can be Fixed or Exponential.

| Mode | Description |
|---|---|
| Exponential | Retry attempts will delay based on a backoff strategy, where each attempt will increase the duration that it waits before retrying. |
| Fixed | Retry attempts happen at fixed intervals; each delay is a consistent duration. |

ServiceBusRetryOptions determines how retry attempts are made and whether a failure is eligible to be retried.

| Property | Description |
|---|---|
| CustomRetryPolicy | A custom retry policy to be used in place of the individual option values. |
| Delay | The delay between retry attempts for a fixed approach, or the delay on which to base calculations for a backoff-based approach. |
| MaxDelay | The maximum permissible delay between retry attempts. |
| MaxRetries | The maximum number of retry attempts before considering the associated operation to have failed. |
| Mode | The approach to use for calculating retry delays. |
| TryTimeout | The maximum duration to wait for completion of a single attempt, whether the initial attempt or a retry. |

ServiceBusRetryPolicy is an abstract class that developers with special/advanced requirements can derive from to implement their own retry logic.
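Because every attempt can run up to TryTimeout and every retry is preceded by a delay, the ServiceBusRetryOptions properties Delay, MaxDelay, MaxRetries, and TryTimeout together bound how long a single call can take before it is finally reported as failed. The Python sketch below estimates that worst case. It assumes a simplified schedule of Delay × 2^n capped at MaxDelay (or a constant Delay in fixed mode) and ignores jitter; the .NET SDK's actual backoff calculation may differ, so treat the result as an approximation for sizing timeouts, not as the SDK's exact behavior. The function name `worst_case_duration` is hypothetical.

```python
from datetime import timedelta


def worst_case_duration(
    max_retries: int,
    delay: timedelta,
    max_delay: timedelta,
    try_timeout: timedelta,
    exponential: bool = True,
) -> timedelta:
    """Upper bound on time spent before an operation is reported as failed.

    Assumes every attempt (the initial one plus max_retries retries) runs until
    try_timeout, and a simplified backoff: delay * 2**n in exponential mode or a
    constant delay in fixed mode, always capped at max_delay. No jitter.
    """
    total = try_timeout * (max_retries + 1)  # every attempt times out
    for n in range(max_retries):  # one wait before each retry
        wait = delay * (2 ** n) if exponential else delay
        total += min(wait, max_delay)
    return total
```

For instance, MaxRetries = 3, Delay = 0.8 s, MaxDelay = 60 s, and TryTimeout = 60 s give 4 × 60 s + (0.8 + 1.6 + 3.2) s = 245.6 s under these assumptions, which is useful when deciding how long an upstream caller should wait.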

Azure SDK for Java:

The Service Bus Java SDK, com.azure.messaging.servicebus, uses the AmqpRetryMode enum and the AmqpRetryOptions and AmqpRetryPolicy classes from com.azure.core.amqp.

In com.azure.messaging.servicebus, ServiceBusClientBuilder is used to create all the Service Bus clients, namely:

- ServiceBusSenderClient: a synchronous sender responsible for sending ServiceBusMessage to a specific queue or topic on Azure Service Bus.

- ServiceBusSenderAsyncClient: an asynchronous sender responsible for sending ServiceBusMessage to a specific queue or topic on Azure Service Bus.

- ServiceBusReceiverClient: a synchronous receiver responsible for receiving ServiceBusReceivedMessage from a specific queue or topic subscription on Azure Service Bus.

- ServiceBusReceiverAsyncClient: an asynchronous receiver responsible for receiving ServiceBusReceivedMessage from a specific queue or topic subscription on Azure Service Bus.

Generally, AMQP is the protocol used for message exchange, and AMQP failures surface as AmqpException. AmqpException.isTransient() returns a boolean indicating whether the exception is a transient error. If a transient AMQP exception occurs, the client library retries the operation as many times as the AmqpRetryOptions allow.

AmqpRetryMode:

| Value | Description |
|---|---|
| EXPONENTIAL | Retry attempts will delay based on a backoff strategy, where each attempt will increase the duration that it waits before retrying. |
| FIXED | Retry attempts happen at fixed intervals; each delay is a consistent duration. |

AmqpRetryOptions:

| Method | Description |
|---|---|
| Duration getDelay() | Gets the delay between retry attempts for a fixed approach, or the delay on which to base calculations for a backoff approach. |
| Duration getMaxDelay() | Gets the maximum permissible delay between retry attempts. |
| int getMaxRetries() | Gets the maximum number of retry attempts before considering the associated operation to have failed. |
| AmqpRetryMode getMode() | Gets the approach to use for calculating retry delays. |
| Duration getTryTimeout() | Gets the maximum duration to wait for the completion of a single attempt, whether the initial attempt or a retry. |
| setDelay(Duration delay) | Sets the delay between retry attempts for a fixed approach, or the delay on which to base calculations for a backoff approach. |
| setMaxDelay(Duration maximumDelay) | Sets the maximum permissible delay between retry attempts. |
| setMaxRetries(int numberOfRetries) | Sets the maximum number of retry attempts before considering the associated operation to have failed. |
| setMode(AmqpRetryMode retryMode) | Sets the approach to use for calculating retry delays. |
| setTryTimeout(Duration tryTimeout) | Sets the maximum duration to wait for the completion of a single attempt, whether the initial attempt or a retry. |

AmqpRetryPolicy is an abstract class that developers with special/advanced requirements can extend to implement their own retry logic.

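```java
import com.azure.core.amqp.AmqpRetryMode;
import com.azure.core.amqp.AmqpRetryOptions;
import com.azure.messaging.servicebus.ServiceBusClientBuilder;
import com.azure.messaging.servicebus.ServiceBusSenderAsyncClient;
import java.time.Duration;

// Exponential backoff: 10 s base delay, at most 5 retries, delays capped at 500 s,
// and each individual attempt allowed up to 500 s before it times out.
AmqpRetryOptions amqpRetryOptions = new AmqpRetryOptions();
amqpRetryOptions.setDelay(Duration.ofSeconds(10));
amqpRetryOptions.setMaxRetries(5);
amqpRetryOptions.setMaxDelay(Duration.ofSeconds(500));
amqpRetryOptions.setMode(AmqpRetryMode.EXPONENTIAL);
amqpRetryOptions.setTryTimeout(Duration.ofSeconds(500));

// Instantiate a client that will be used to call the service.
// connectionString and topicName are assumed to be defined elsewhere.
ServiceBusSenderAsyncClient serviceBusSenderAsyncClient = new ServiceBusClientBuilder()
    .connectionString(connectionString)
    .retryOptions(amqpRetryOptions)
    .sender()
    .topicName(topicName)
    .buildAsyncClient();
```

Refer to the AmqpRetryOptions.java source code.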

Azure SDK for Python:

retry_total, retry_backoff_factor, retry_backoff_max, and retry_mode are defined at the ServiceBusClient level and inherited by the senders and receivers created from it. This lets you configure the retry policy used by the operations on the client.

- retry_total (int): the total number of attempts to redo a failed operation when an error occurs. Default value is 3.

- retry_backoff_factor (float): delta back-off interval, in seconds, between retries. Default value is 0.8.

- retry_backoff_max (float): maximum back-off interval, in seconds. Default value is 120.

- retry_mode (str): the delay behavior between retry attempts. Supported values are “fixed” and “exponential”; the default is “exponential”.

Refer: azure.servicebus package — Azure SDK for Python 2.0.0 documentation (windows.net)
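To see how these settings interact, the sketch below lists the wait before each retry. It assumes the delay is retry_backoff_factor × 2^n in exponential mode (n = 0 for the first retry) and retry_backoff_factor in fixed mode, always capped at retry_backoff_max. This formula is an assumption for illustration, so check the SDK source for your version before relying on exact values. `backoff_schedule` is a hypothetical helper, not an SDK function.

```python
from typing import List


def backoff_schedule(
    retry_total: int = 3,
    retry_backoff_factor: float = 0.8,
    retry_backoff_max: float = 120.0,
    retry_mode: str = "exponential",
) -> List[float]:
    """Seconds to wait before each retry, under the assumed formula.

    exponential: factor * 2**n (n = 0, 1, 2, ...), capped at retry_backoff_max
    fixed:       factor, capped at retry_backoff_max
    """
    if retry_mode not in ("exponential", "fixed"):
        raise ValueError("retry_mode must be 'exponential' or 'fixed'")
    delays = []
    for n in range(retry_total):
        raw = retry_backoff_factor * 2 ** n if retry_mode == "exponential" else retry_backoff_factor
        delays.append(min(retry_backoff_max, raw))
    return delays
```

Under this model, the settings used in the receiver example below (retry_total=2, retry_backoff_factor=10) give waits of 10 s and 20 s, and lowering retry_backoff_max to 60 with five retries flattens the tail to 10, 20, 40, 60, 60 seconds.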

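```python
import logging

from azure.servicebus import ServiceBusClient

logger = logging.getLogger(__name__)
CONNECTION_STRING = "<connection-string>"  # placeholder: your namespace connection string

# Retry settings are set on the client and inherited by the receiver created from it:
# at most 2 retries, with a 10-second backoff factor.
with ServiceBusClient.from_connection_string(
    CONNECTION_STRING, retry_total=2, retry_backoff_factor=10
) as client:
    with client.get_subscription_receiver(
        topic_name="test", subscription_name="subtest"
    ) as receiver:
        for message in receiver:
            logger.debug(
                f"message {message.sequence_number}, delivery count {message.delivery_count}"
            )
            # Abandon the message so it is returned to the subscription
            # and redelivered with an incremented delivery count.
            receiver.abandon_message(message)
```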

Azure SDK for JavaScript:

RetryMode: describes the retry mode type: Exponential = 0, Fixed = 1.

RetryOptions: retry policy options that determine the mode, number of retries, retry interval, etc.

| Property | Description |
|---|---|
| maxRetries | Number of times the operation needs to be retried in case of a retryable error. Default: 3. |
| maxRetryDelayInMs | Denotes the maximum delay between retries that the retry attempts will be capped at. Applicable only when performing exponential retry. |
| mode | Denotes which retry mode to apply. If undefined, defaults to Fixed. |
| retryDelayInMs | Amount of time to wait in milliseconds before making the next attempt. Default: 30000 milliseconds. When mode is set to Exponential, this is used to compute the exponentially increasing delays between retries. |
| timeoutInMs | Number of milliseconds to wait before declaring that the current attempt has timed out, which will trigger a retry. A minimum value of 60000 milliseconds will be used if a value not greater than this is provided. |

ServiceBusClientOptions describes the options that can be provided while creating the ServiceBusClient:

- webSocketOptions: options to configure the channelling of the AMQP connection over Web Sockets.
  - websocket: the WebSocket constructor used to create an AMQP connection if you choose to make the connection over a WebSocket.
  - webSocketConstructorOptions: options to pass to the WebSocket constructor when you choose to make the connection over a WebSocket.
- retryOptions: the retry options for all the operations on the client.
  - maxRetries: the number of times the operation can be retried in case of a retryable error.
  - maxRetryDelayInMs: the maximum delay between retries. Applicable only when performing exponential retries.
  - mode: which retry mode to apply, specified by the RetryMode enum. Options are Exponential and Fixed. Defaults to Fixed.
  - retryDelayInMs: amount of time to wait in milliseconds before making the next attempt. When mode is set to Exponential, this is used to compute the exponentially increasing delays between retries. Default: 30000 milliseconds.
  - timeoutInMs: amount of time in milliseconds to wait before the operation times out. This will trigger a retry if there are any retry attempts remaining. Minimum value: 60000 milliseconds.

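```javascript
import { ServiceBusClient } from "@azure/service-bus";
import { RetryMode } from "@azure/core-amqp";

// Exponential retries, up to 5 retries per operation.
// timeoutInMs values below 60000 ms are raised to the 60000 ms minimum (see above).
const client = new ServiceBusClient("<connection-string>", {
  retryOptions: {
    mode: RetryMode.Exponential,
    maxRetries: 5,
    timeoutInMs: 60000,
  },
});
```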

Azure SDK for Go:

ClientOptions (from the azservicebus package):

```go
type ClientOptions struct {
	// TLSConfig configures a client with a custom *tls.Config.
	TLSConfig *tls.Config

	// Application ID that will be passed to the namespace.
	ApplicationID string

	// NewWebSocketConn is a function that can create a net.Conn for use with websockets.
	// For an example, see ExampleNewClient_usingWebsockets() function in example_client_test.go.
	NewWebSocketConn func(ctx context.Context, args NewWebSocketConnArgs) (net.Conn, error)

	// RetryOptions controls how often operations are retried from this client and any
	// Receivers and Senders created from this client.
	RetryOptions RetryOptions
}
```

RetryOptions:

- MaxRetries specifies the maximum number of attempts a failed operation will be retried before producing an error. The default value is three. A value less than zero means one try and no retries.

- RetryDelay specifies the initial amount of delay to use before retrying an operation. The delay increases exponentially with each retry up to the maximum specified by MaxRetryDelay. The default value is four seconds. A value less than zero means no delay between retries.

- MaxRetryDelay specifies the maximum delay allowed before retrying an operation. Typically, the value is greater than or equal to the value specified in RetryDelay. The default value is 120 seconds. A value less than zero means there is no cap.

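```go
package main

import (
	"time"

	"github.com/Azure/azure-sdk-for-go/sdk/messaging/azservicebus"
)

func main() {
	connectionString := "<connection-string>" // placeholder
	client, err := azservicebus.NewClientFromConnectionString(connectionString, &azservicebus.ClientOptions{
		// https://pkg.go.dev/github.com/Azure/azure-sdk-for-go/sdk/messaging/azservicebus#RetryOptions
		RetryOptions: azservicebus.RetryOptions{
			MaxRetries:    3,
			RetryDelay:    4 * time.Second,
			MaxRetryDelay: 120 * time.Second,
		},
	})
	if err != nil {
		panic(err)
	}
	_ = client // use the client to create senders and receivers
}
```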

Refer: azservicebus package - github.com/Azure/azure-sdk-for-go/sdk/messaging/azservicebus - Go Packages

Rarely, despite the retries configured in the client application, your applications may still be impacted. In such a case, open a case with us (Microsoft Support); the steps for this are given below. However, some applications are critical and cannot tolerate even a few minutes of disruption. For such critical applications only, both Service Bus and Event Hubs offer a Geo-disaster recovery feature. If Geo-disaster recovery is configured, then during the impact you can fail over so that messages are sent to and received from the paired namespace in another region.

Below are the links for your reference:

Azure Service Bus Geo-disaster recovery - Azure Service Bus

Geo-disaster recovery - Azure Event Hubs - Azure Event Hubs

A few important things to note:

- You will configure two namespaces for every namespace you need (the second one is the Geo-DR namespace).

- To get the complete benefit, you may want to use it in active-active mode. This means the sender and receiver may have to do additional work, i.e., additional coding and testing.

- As you will be provisioning double the resources, the cost will also be proportionally higher.

- You decide when a situation warrants DR. You can initiate the DR process manually or automate it.

4. If the Server Error is impacting your application, open a support ticket with the Azure Support SB/EH team with the information listed below:

- (Required) Azure subscription ID:

- (Required) SB/EH namespace name:

- (Required) Exact time of the issue, with time zone information:

- (Required & important) How did the Server Error impact your application?:

- Is the issue consistent, ongoing, intermittent, or has it happened in the past?:

See How to create an Azure support request (also called a support ticket).

Frequently Asked Questions:

1. Are ‘Server Errors’, ‘Internal Server Errors’, and ‘HTTP 500 Errors’ the same? What do they mean in the context of Service Bus and Event Hubs?

Ans: Yes, ‘Server Errors’, ‘Internal Server Errors’, and ‘HTTP 500 Errors’ are the same. A Server Error is a generic error returned by the server (SB/EH) when it encounters an unexpected condition or an exception that prevents it from fulfilling the request. It is the generic catch-all error response. Simply put, it means that the server was not able to process the request at that point in time.

RFC 7231: Hypertext Transfer Protocol (HTTP/1.1): Semantics and Content (rfc-editor.org)

2. Why does the Server Error (exception or inner exception) not provide a detailed cause of the error?

Ans: As explained earlier, a Server Error is generic in nature and may represent any condition on the server. To prevent malicious actors from taking undue advantage of the information, the error details are masked (security by obscurity). This is in accordance with HTTP standards. As a matter of fact, the server will have logged all the relevant information about the Server Error; it is just not exposed to users. You will have to report the issue to the SB/EH support team, who will take the necessary corrective actions and provide an RCA.

Server Errors cannot be corrected without having access to the server and its resources.

3. What are the most common causes of Server Errors?

Ans: The following are the most common causes of Server Errors (in no particular order):

a. SB/EH server updates: Here is a link to the Service Bus architecture. You will see multiple distributed components, such as the scale unit, Gateway, Gateway Store, Broker, Messaging Stores, etc., interacting with each other. All these components regularly undergo various updates:

- Host OS Updates.

- Guest OS Updates.

- Security Updates.

- SB\EH Application Updates (Code, Configuration, Bug fix, Enhancements, etc)

- Updates for dependent components, etc.

b. Planned or Unplanned maintenance other than updates.

a-b:

Due to the upgrade/update, client applications connecting to Service Bus may experience timeouts, increased latency, internal server errors, or momentary disconnects. These errors may occur at different times between the start and end of the upgrade/update.

This upgrade/update is part of the standard, periodic procedure for rolling out upgrades/updates to Azure products and services. It is not indicative of any specific issue with your Event Hubs or Service Bus namespace. While the impact of these upgrades/updates is low to begin with, we further limit the perceived impact on client applications by allowing only a subset of the Service Bus components serving a namespace to be updated at a time.

c. Dependent Services like Storage, SQL, etc. failing, and not able to process the operation.

d. Gateway nodes, Broker nodes, or dependent services running short of capacity, e.g., CPU, memory, IOPS, etc.

e. Networking Issues – Network Congestion, connectivity, etc.

c-d-e:

SB/EH may respond to operations with Server Errors if its dependent components fail, if the nodes are overwhelmed by high CPU, memory, or IOPS usage, or if there are inter-network connectivity issues or network congestion.

For dependent service failure, we have appropriate exception handling, redundancy measures, and retries configured. We have Auto-Healing in place. We also have alerting in place when the metrics get skewed. SB\EH Engineering Team monitors these metrics round the clock and takes corrective action when necessary.

f. Other Transient Errors.

g. Hardware failures (very rare)

h. Bugs, Ad-hoc Issues & Other Issues.

f-g-h: For Transient, Ad-Hoc, Bugs, and other issues, we have an Engineering team monitoring the systems round the clock and taking any remedial steps as and when necessary.

4. I am on the Standard SB/EH tier, and I see a few Server Errors regularly. They do not impact my application in any way, but they cause alerts to trigger and lots of questions to be asked.

Ans: Standard SB/EH tiers are shared between tenants, so you may see a few Server Errors over time. If your applications are not impacted and you have retries in place, you need not be concerned. The cause would be one of those mentioned above.

Moving to a higher tier (such as Premium or Dedicated) may result in far fewer Server Errors, because the Broker component is not shared with other tenants, unlike in the Standard tier.

Note that in the Premium tier you will see far fewer Server Errors, but not zero. (This also answers the question: Will moving to the Premium tier get rid of Server Errors?)

5. During updates/upgrades of SB/EH, users encounter statistically more Server Errors. Why are SB/EH updates not announced in advance so that users are aware of the changes and can take proactive mitigation measures?

Ans: Read below:

- SB/EH is a Platform as a Service (PaaS) offering. This means the consumers of this offering should not have to worry about infrastructure and OS updates. Most of our users are not concerned about updates/upgrades. We assure 99.9% availability, a reasonable amount of redundancy and scalability, and constant monitoring. So, as long as the application has retry logic, you need not worry about SB/EH updates/upgrades. If for any reason your application is impacted (by updates or otherwise), immediately report the issue to Microsoft Support.

- Users should expect regular updates, as our product group improves the product continuously. There are different types of updates/upgrades (see above); some may be planned and others unplanned.

- Updates do not happen on all namespaces simultaneously. Updates, upgrades, bug fixes, enhancements, etc. are tested in a test environment, then rolled out to a few scale units, then to a few data centres and regions, and finally to all regions globally. This may span from a few days to weeks. Most updates/upgrades proceed automatically, based on automated feedback from the previous deployment stage. Publishing a maintenance window spanning days or weeks would serve no purpose and would create confusion, especially if there are overlapping updates.

- However, we have received feedback asking us to publish update times and details, and our product group is looking into these requests. I am hopeful that we will produce a feature that announces updates on the portal. (There is no ETA for such a feature.)

- If you want this feature, upvote it here: Send notifications / alerts when Service is receiving updates.

6. Why can't SB/EH updates/upgrades be scheduled during off-peak hours?

Ans: Read the answer to Q5. Furthermore, SB/EH peak hours are determined by client consumption. Each SB/EH namespace and entity has a different consumption pattern based on the customer's application, so we would have to depend on historic data from the past few months, which is neither very scientific nor accurate. As we have reasonable redundancy and follow the cloud pattern of update domains/fault domains, we should be able to perform upgrades with minimal impact on the application, staying within our SLA commitments.

7. Are Server Errors associated with SLA?

Ans: Yes. For details, see the reference links.

Refer:

SLA for Service Bus | Azure

SLA for Event Hubs | Azure

8. Where can I find more information about retries?

Ans: Please refer to the following articles:

- Transient fault handling from Retry general guidance - Best practices for cloud applications

- Azure service retry guidance - Best practices for cloud applications, especially Service Bus and Event Hub section.

- Note that all the SB SDKs we provide have a way to configure retries. Depending on the programming language, the retry patterns and implementation will differ slightly. This is more of a language/implementation quirk.

Additional Information:

Azure Service Bus premium and standard tiers - Azure Service Bus

Issues that may occur with service upgrades/restarts

Service Bus messaging exceptions

Azure service retry guidance - Best practices for cloud applications

Best practices for improving performance using Azure Service Bus - Azure Service Bus

Create partitioned Azure Service Bus queues and topics - Azure Service Bus

(What is Azure Service Health? - Azure Service Health | Microsoft Docs)

Tag: Service Bus, Event Hub, Server Error, Internal Server Error, HTTP Error 500, Update, Upgrade, Maintenance.
