Use the Python SDK to send and receive events with Schema Registry in Azure Event Hubs

This blog complements another blog about Azure Event Hubs Schema Registry. As we know, migrating a Confluent (Kafka) schema registry directly into Azure Schema Registry is not supported, so we need to create and manage the schema registry in Azure Event Hubs separately. The good news is that Azure Event Hubs provides multiple client SDKs for serializing and deserializing payloads that contain schema registry identifiers and Avro-encoded data. In this post, I'd like to share how to use the Python SDK to send and receive events with the schema registry in Azure Event Hubs.

Prerequisites:

1. Create a schema registry group (schema group) in your Event Hubs namespace in the Azure portal.

You can refer to this official guidance to create a schema group.

2. Install the required Python packages with pip:

a. pip install azure-schemaregistry-avroencoder

This is the main package used in the demo below.

b. pip install azure-identity

Authentication is required to access the schema group and register schemas, so we need an Azure Active Directory (AAD) credential that implements the TokenCredential protocol.

c. pip install aiohttp

An async transport such as aiohttp must be installed to use the async APIs.
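With aiohttp installed, the async clients can be used. The sketch below is a minimal illustration, assuming the async variants are imported from azure.identity.aio, azure.schemaregistry.aio and azure.schemaregistry.encoder.avroencoder.aio. The "User" schema, the placeholder namespace and group, and the helper encode_all are hypothetical; encode_all accepts any object with an awaitable encode method, so it can be tried without a live namespace.

```python
import asyncio
import json
from typing import Any, List, Mapping, Sequence

# Hypothetical sample schema (modeled on the SDK's "User" example); yours may differ.
SCHEMA = json.dumps({
    "namespace": "example.avro",
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "favorite_number", "type": ["int", "null"]},
        {"name": "favorite_color", "type": ["string", "null"]},
    ],
})


async def encode_all(encoder: Any, contents: Sequence[Mapping[str, Any]], schema: str) -> List[Any]:
    """Encode several payloads concurrently with any encoder exposing an async encode()."""
    tasks = [encoder.encode(content, schema=schema) for content in contents]
    return list(await asyncio.gather(*tasks))


async def main(fully_qualified_namespace: str, group_name: str) -> None:
    # Async variants of the clients; imported here so encode_all() works without the SDK.
    from azure.identity.aio import DefaultAzureCredential
    from azure.schemaregistry.aio import SchemaRegistryClient
    from azure.schemaregistry.encoder.avroencoder.aio import AvroEncoder

    credential = DefaultAzureCredential()
    client = SchemaRegistryClient(fully_qualified_namespace, credential)
    encoder = AvroEncoder(client=client, group_name=group_name, auto_register=True)
    async with credential, client, encoder:
        payloads = [{"name": "Ben", "favorite_number": 7, "favorite_color": "red"}]
        for message in await encode_all(encoder, payloads, SCHEMA):
            print(message)


if __name__ == "__main__":
    # Placeholders: substitute your namespace host name and schema group.
    asyncio.run(main("<namespace>.servicebus.windows.net", "<schema-group>"))
```

Gathering the encode calls lets several payloads be encoded while schema lookups are in flight; for a single payload a plain await is enough.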

3. For the TokenCredential authentication flow mentioned above, the following credential types, if enabled, are tried in order:

- EnvironmentCredential

- ManagedIdentityCredential

- SharedTokenCacheCredential

- VisualStudioCredential

- VisualStudioCodeCredential

- AzureCliCredential

- AzurePowerShellCredential

- InteractiveBrowserCredential

In the test demo below, EnvironmentCredential is used, so we need to register an AAD application to obtain its tenant ID, client ID, and client secret.
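EnvironmentCredential reads those three values from the AZURE_TENANT_ID, AZURE_CLIENT_ID and AZURE_CLIENT_SECRET environment variables. Below is a minimal sketch that checks the variables before building the credential. The helper names missing_credential_vars and make_credential are hypothetical.

```python
import os
from typing import List, Mapping

# Variables EnvironmentCredential reads for a service principal with a client secret.
REQUIRED_VARS = ("AZURE_TENANT_ID", "AZURE_CLIENT_ID", "AZURE_CLIENT_SECRET")


def missing_credential_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def make_credential():
    """Create an EnvironmentCredential after confirming its inputs are present."""
    missing = missing_credential_vars()
    if missing:
        raise RuntimeError(f"Set these environment variables first: {', '.join(missing)}")
    from azure.identity import EnvironmentCredential  # requires azure-identity

    return EnvironmentCredential()
```

Failing early with the list of missing names is usually easier to diagnose than the authentication error raised later on the first service call.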

4. To pass EventData as the message type when encoding, we also need azure-eventhub>=5.9.0 installed so that the azure.eventhub.EventData class is available.
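One way to confirm that requirement at runtime is a quick version check with the standard library. This is a simplified sketch: it compares only the numeric release segment and ignores pre-release tags. The helper names are hypothetical.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from typing import Tuple


def release_tuple(ver: str) -> Tuple[int, ...]:
    """Extract the numeric release segment, e.g. "5.11.0b1" -> (5, 11, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", ver)
    if not match:
        raise ValueError(f"Unrecognized version string: {ver!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, minimum: str = "5.9.0") -> bool:
    """Compare release segments only (pre-release tags are ignored: a simplification)."""
    return release_tuple(installed) >= release_tuple(minimum)


def check_azure_eventhub(minimum: str = "5.9.0") -> bool:
    """Return True if azure-eventhub is installed at or above `minimum`."""
    try:
        return meets_minimum(version("azure-eventhub"), minimum)
    except PackageNotFoundError:
        return False
```

If the packaging library is available, packaging.version.Version offers a complete PEP 440 comparison instead of this release-tuple shortcut.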

Test Demo:

1. Client initialization
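A minimal initialization sketch follows, assuming the settings come from environment variables. The variable names, the DemoSettings class and both helper functions are hypothetical. It creates a SchemaRegistryClient, an AvroEncoder with auto_register enabled, and an EventHubProducerClient from a connection string.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class DemoSettings:
    fully_qualified_namespace: str  # e.g. "<namespace>.servicebus.windows.net"
    group_name: str                 # schema group created in the portal
    eventhub_conn_str: str
    eventhub_name: str


# Hypothetical environment variable names used only by this sketch.
ENV_NAMES = {
    "fully_qualified_namespace": "SCHEMAREGISTRY_FULLY_QUALIFIED_NAMESPACE",
    "group_name": "SCHEMAREGISTRY_GROUP",
    "eventhub_conn_str": "EVENT_HUB_CONN_STR",
    "eventhub_name": "EVENT_HUB_NAME",
}


def load_settings(env: Mapping[str, str] = os.environ) -> DemoSettings:
    """Read all settings, reporting every missing variable at once."""
    missing = [var for var in ENV_NAMES.values() if not env.get(var)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return DemoSettings(**{field: env[var] for field, var in ENV_NAMES.items()})


def create_clients(settings: DemoSettings):
    """Build the Avro encoder and the Event Hubs producer."""
    from azure.eventhub import EventHubProducerClient
    from azure.identity import DefaultAzureCredential
    from azure.schemaregistry import SchemaRegistryClient
    from azure.schemaregistry.encoder.avroencoder import AvroEncoder

    credential = DefaultAzureCredential()
    registry = SchemaRegistryClient(settings.fully_qualified_namespace, credential)
    # auto_register=True registers schemas passed to encode() that the group lacks.
    encoder = AvroEncoder(client=registry, group_name=settings.group_name, auto_register=True)
    producer = EventHubProducerClient.from_connection_string(
        conn_str=settings.eventhub_conn_str, eventhub_name=settings.eventhub_name
    )
    return encoder, producer
```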

2. Send event data with encoded content

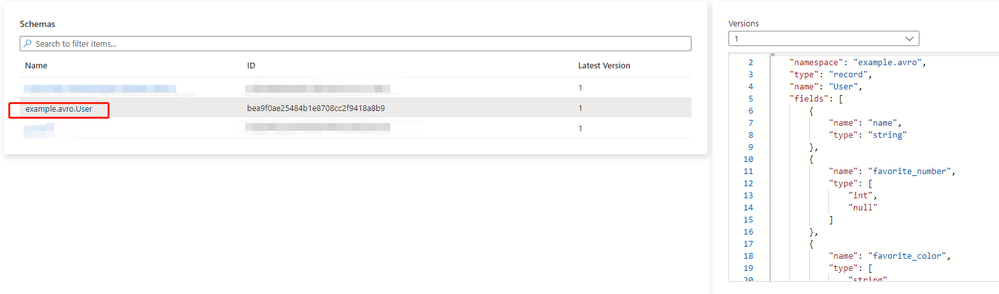

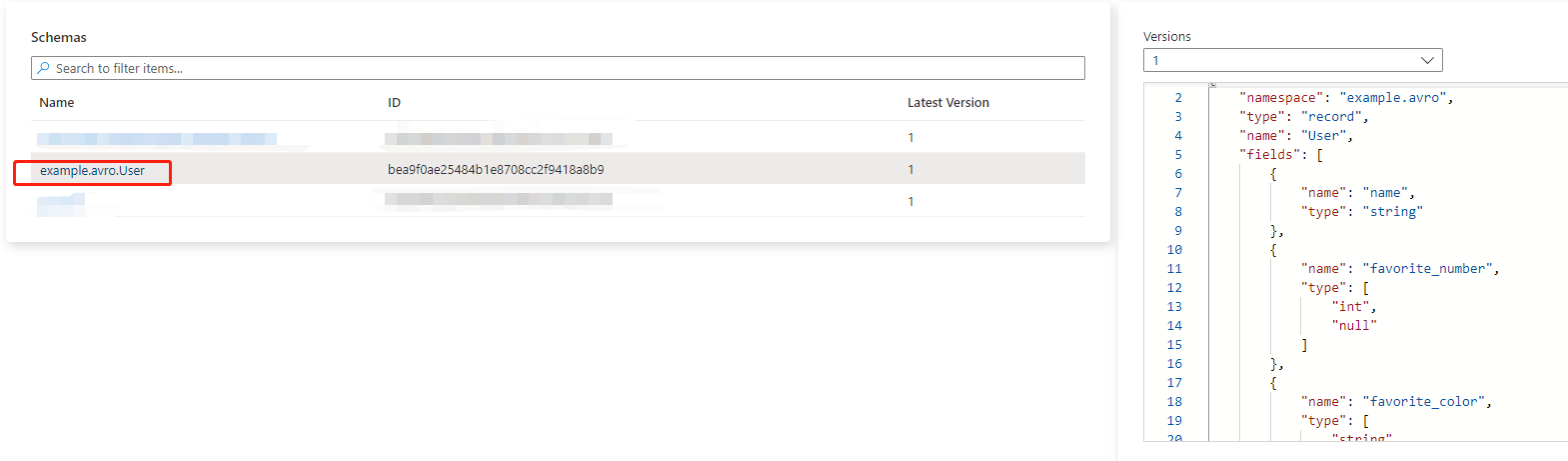
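The send step can be sketched roughly as follows, assuming a producer and encoder like those created during client initialization and a hypothetical "User" schema. send_encoded is a hypothetical helper. It calls encode with message_type so that each payload comes back as an EventData, then sends everything in one batch.

```python
import json
from typing import Any, Iterable, Mapping, Optional, Type

# Hypothetical schema modeled on the SDK's "User" sample.
SCHEMA_STRING = json.dumps({
    "namespace": "example.avro",
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "favorite_number", "type": ["int", "null"]},
        {"name": "favorite_color", "type": ["string", "null"]},
    ],
})


def send_encoded(producer: Any, encoder: Any, contents: Iterable[Mapping[str, Any]],
                 schema: str = SCHEMA_STRING, message_type: Optional[Type[Any]] = None) -> int:
    """Encode each payload and send them in a single batch; returns how many were sent."""
    if message_type is None:
        from azure.eventhub import EventData  # needs azure-eventhub>=5.9.0

        message_type = EventData
    batch = producer.create_batch()
    count = 0
    for content in contents:
        # encode() returns a message_type instance holding the Avro bytes and content type.
        # batch.add() raises ValueError if the batch size limit is exceeded.
        batch.add(encoder.encode(content, schema=schema, message_type=message_type))
        count += 1
    producer.send_batch(batch)
    return count
```

For example, inside a with producer: block, send_encoded(producer, encoder, [{"name": "Ben", "favorite_number": 7, "favorite_color": "red"}]) sends one encoded event.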

If we set the auto_register parameter to True, schemas passed to encode that are not yet registered are registered automatically. We can then check the registered schema in the Event Hubs portal; its name combines the values of the namespace and name properties in the schema definition we set.
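The combined name follows Avro's full-name rule: namespace, a dot, then name, unless the name already contains a dot. A small sketch of that rule, assuming the portal shows this full name as described above (avro_full_name is a hypothetical helper):

```python
import json
from typing import Any, Mapping, Union


def avro_full_name(schema: Union[str, Mapping[str, Any]]) -> str:
    """Return the Avro full name (namespace.name) of a named schema."""
    definition = json.loads(schema) if isinstance(schema, str) else schema
    name = definition["name"]
    namespace = definition.get("namespace")
    # Per the Avro spec, a name that contains a dot is already fully qualified.
    if "." in name or not namespace:
        return name
    return f"{namespace}.{name}"


if __name__ == "__main__":
    print(avro_full_name({"namespace": "example.avro", "type": "record", "name": "User", "fields": []}))
    # example.avro.User
```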

If we inspect the created event data, we see that the event body is encoded as binary rather than readable text. Under the hood, the avro_encoder.encode method uses an underlying BinaryEncoder to encode the message data, and decoding uses the corresponding binary decoder.

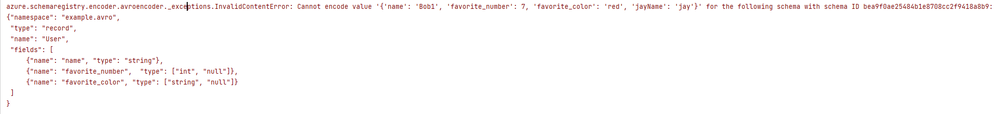

Once the schema is registered, we can’t add an extra property that is not defined in the schema.

For example, if we set the dictionary content as:

we will encounter the following exception:

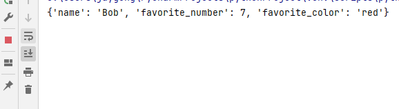
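To catch this before calling encode, a hypothetical pre-check can compare the payload's keys with the fields the record schema declares. This is a sketch, not part of the SDK, shown with a hypothetical "User" schema.

```python
from typing import Any, List, Mapping

# Hypothetical schema modeled on the SDK's "User" sample.
USER_SCHEMA = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "favorite_number", "type": ["int", "null"]},
        {"name": "favorite_color", "type": ["string", "null"]},
    ],
}


def unexpected_fields(content: Mapping[str, Any], schema: Mapping[str, Any]) -> List[str]:
    """Return keys in content that the record schema does not declare, sorted."""
    declared = {field["name"] for field in schema["fields"]}
    return sorted(key for key in content if key not in declared)


if __name__ == "__main__":
    print(unexpected_fields({"name": "Ben", "age": 30}, USER_SCHEMA))  # ['age']
```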

Likewise, if a required property is missing, encoding fails. For example, if we set the dictionary content as:

we will encounter the following exception:
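Both failures above surface only when encode is called. For the missing-property case, a hypothetical pre-check is sketched below. It assumes an absent key is written as None, so only fields whose type accepts null (for example a union containing "null") can be left out.

```python
from typing import Any, List, Mapping

# Hypothetical schema modeled on the SDK's "User" sample.
USER_SCHEMA = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "favorite_number", "type": ["int", "null"]},
        {"name": "favorite_color", "type": ["string", "null"]},
    ],
}


def accepts_null(avro_type: Any) -> bool:
    """True for "null" itself or a union that lists "null" as a branch."""
    return avro_type == "null" or (isinstance(avro_type, list) and "null" in avro_type)


def missing_required_fields(content: Mapping[str, Any], schema: Mapping[str, Any]) -> List[str]:
    """Return declared fields absent from content whose type cannot hold null."""
    return [
        field["name"]
        for field in schema["fields"]
        if field["name"] not in content and not accepts_null(field["type"])
    ]


if __name__ == "__main__":
    print(missing_required_fields({"favorite_number": 7}, USER_SCHEMA))  # ['name']
```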

3. Receive event data with decoded content
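A minimal receive sketch, assuming an encoder like the one created during client initialization and the "$Default" consumer group. The helper names are hypothetical. The callback passes each EventData to encoder.decode, which uses the schema identifier carried with the event to fetch the schema and return the decoded dictionary.

```python
from typing import Any, Callable, List


def make_on_event(encoder: Any, sink: List[Any]) -> Callable[[Any, Any], None]:
    """Build an on_event callback that decodes each event and appends the result to sink."""

    def on_event(partition_context: Any, event: Any) -> None:
        if event is None:  # on_event receives None only when max_wait_time is set
            return
        sink.append(encoder.decode(event))

    return on_event


def receive_decoded(conn_str: str, eventhub_name: str, encoder: Any) -> List[Any]:
    """Receive from all partitions until Ctrl+C, returning the decoded payloads."""
    from azure.eventhub import EventHubConsumerClient  # requires azure-eventhub

    decoded: List[Any] = []
    consumer = EventHubConsumerClient.from_connection_string(
        conn_str, consumer_group="$Default", eventhub_name=eventhub_name
    )
    try:
        with consumer:
            # "-1" starts from the beginning of each partition; receive() blocks.
            consumer.receive(on_event=make_on_event(encoder, decoded), starting_position="-1")
    except KeyboardInterrupt:
        pass
    return decoded
```

Keeping the callback separate from the client makes the decoding logic easy to exercise with a stand-in encoder.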

Both sync and async sample code snippets are also available at this link.
